ELE Times

NANO Nuclear Energy: Pioneering Portable Microreactors and Vertically Integrated Fuel Solutions for Sustainable Power

NANO Nuclear Energy Inc. is making significant strides in the nuclear energy sector as it works toward becoming a diversified and vertically integrated company. On July 18, the company closed an additional sale of 135,000 shares of common stock at $20.00 per share, a notable financial milestone. According to its website, NANO Nuclear is the first publicly listed portable nuclear microreactor company in the U.S. It is dedicated to advancing sustainable energy solutions through four main business areas: portable microreactor technology, nuclear fuel fabrication, nuclear fuel transportation, and consulting services within the nuclear industry.

NANO Nuclear is led by a team of world-class nuclear engineers who are developing products such as the ZEUS solid core battery reactor and the ODIN low-pressure coolant reactor. These nuclear microreactors are engineered to provide clean, portable, on-demand energy that can meet both present and future demand.

In a recent interview, NANO Nuclear Energy’s CEO, James Walker, outlined the company’s ambitious plans to establish a vertically integrated nuclear fuel business through its subsidiaries, Advanced Fuel Transportation Inc. (AFT) and HALEU Energy Fuel Inc. (HEF). The goal is to secure a reliable supply chain for high-assay, low-enriched uranium (HALEU) fuel, which is crucial for advanced nuclear reactors. HALEU, enriched to contain 5-19.9% of the fissile isotope U-235, enhances reactor performance, allowing for smaller designs with higher power density. Recognizing these advantages, HEF is planning to invest in fabrication facilities to meet the growing demand for advanced reactor fuel.
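To make the enrichment categories concrete, here is a minimal Python sketch that classifies a U-235 fraction using commonly cited thresholds: natural uranium at about 0.711%, HALEU above 5% and below 20%, and HEU at 20% or more. The function name and the exact handling of the boundaries are illustrative assumptions, not a regulatory definition.

```python
# Commonly cited enrichment thresholds, in percent U-235 by mass (assumed here
# for illustration): natural uranium is about 0.711%, HALEU lies above 5% and
# below 20%, and HEU starts at 20%.
NATURAL_U235 = 0.711
HALEU_MIN = 5.0
HEU_MIN = 20.0


def classify_enrichment(u235_percent: float) -> str:
    """Return a coarse category for a given U-235 fraction in percent."""
    if not 0.0 <= u235_percent <= 100.0:
        raise ValueError("enrichment must be between 0 and 100 percent")
    if u235_percent >= HEU_MIN:
        return "HEU"
    if u235_percent > HALEU_MIN:
        return "HALEU"
    if u235_percent > NATURAL_U235:
        return "LEU"  # e.g. conventional light-water reactor fuel
    return "natural or depleted"


for enrichment in (0.711, 4.5, 19.75, 93.0):
    print(f"{enrichment:>6}% U-235 -> {classify_enrichment(enrichment)}")
```

Under these thresholds, the 5–19.9% range quoted above falls entirely in the HALEU category.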

AFT, a key subsidiary of NANO Nuclear, is led by former executives from the world’s largest transportation companies. The subsidiary aims to establish a North American transportation network to supply commercial quantities of fuel to small modular reactors, microreactor companies, national laboratories, the military, and Department of Energy (DoE) programs. AFT’s position is strengthened by its exclusive license for a patented high-capacity HALEU fuel transportation basket, developed in collaboration with three prominent U.S. national nuclear laboratories and funded by the DoE. Concurrently, HEF is dedicated to establishing a domestic HALEU fuel fabrication pipeline to cater to the expanding advanced nuclear reactor market.

Walker acknowledged several challenges that the company faces and outlined strategies to overcome them. One of the main challenges lies in navigating the intricate regulatory landscape. Obtaining numerous permits and licenses from bodies like the Nuclear Regulatory Commission (NRC) and the DoE is essential for nuclear fuel operations. To address this, NANO Nuclear plans to invest in a dedicated regulatory affairs team to manage the licensing process and ensure ongoing compliance with stringent safety and environmental standards. Early and consistent engagement with regulators will also be crucial to align operations with regulatory expectations.

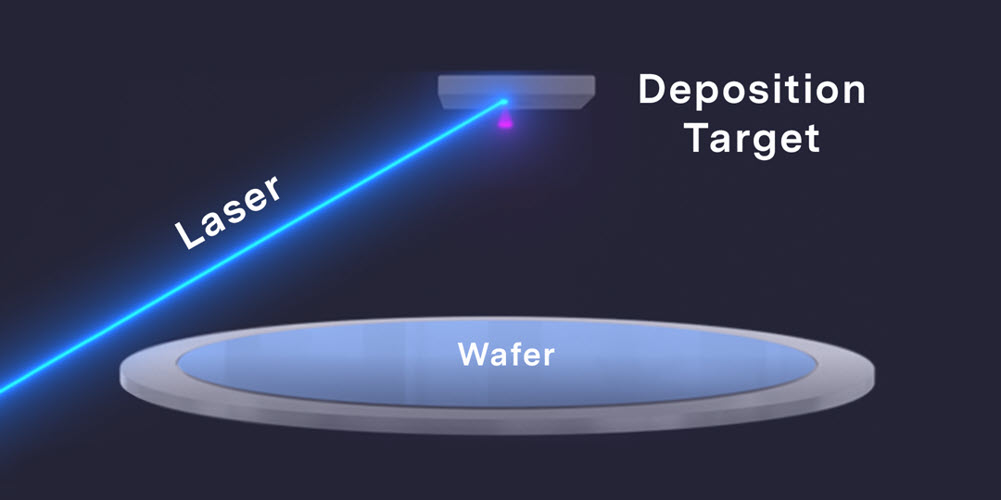

Technical and engineering challenges are also a significant focus for NANO Nuclear. Walker emphasized the importance of developing and optimizing the deconversion process to safely and efficiently handle enriched uranium hexafluoride (UF6) and convert it into other uranium fuel forms. HALEU fuel fabrication must also achieve the high precision and quality needed to meet reactor specifications. To overcome these challenges, NANO Nuclear intends to draw on experienced nuclear engineers and collaborate with research institutions on technology development. Rigorous quality control systems and continuous improvement practices will be key to addressing these technical hurdles.

Another set of challenges relates to supply chain and logistics. Given the stringent safety protocols required for handling radioactive materials, ensuring the secure and safe transport of HALEU fuel is of utmost importance. Walker noted the importance of synchronizing activities across multiple facilities to avoid bottlenecks and delays. To effectively manage the supply chain, NANO Nuclear intends to establish strong transportation and security protocols in collaboration with specialized logistics companies, along with implementing advanced tracking and coordination systems.

Economic and financial viability is another critical consideration. Building facilities for deconversion, fuel fabrication, and transportation demands significant capital investment, and managing operational costs is essential to keeping the integrated supply chain economically viable. Walker highlighted the need to secure a range of funding sources, such as government grants, private investments, and strategic partnerships. To support these efforts, NANO Nuclear will develop detailed financial models to forecast costs and revenues and will implement cost-control measures.

Market and demand uncertainties also pose challenges for the company. It is crucial to secure adequate demand for HALEU fuel, especially from microreactor manufacturers and other potential clients. To tackle this, NANO Nuclear intends to carry out market research to identify key customers and secure long-term contracts with them. By differentiating its offerings through quality, reliability, and integrated services, the company aims to compete effectively with existing fuel suppliers and new market entrants.

Addressing human resources and expertise is equally important for NANO Nuclear’s success. Recruiting and retaining highly skilled personnel with expertise in nuclear technology, engineering, and regulatory compliance is critical. To this end, Walker mentioned that the company will develop a comprehensive human resources strategy focusing on recruitment, training, and career development to ensure the necessary talent is in place.

The company’s advancements in microreactor technology are particularly noteworthy. The latest advanced microreactors, with a thermal output of 1 to 20 megawatts, offer a flexible and portable alternative to traditional nuclear reactors. Microreactors can generate clean, reliable electricity for commercial use while also supporting a range of non-electric applications, such as district heating, water desalination, and hydrogen fuel production.

NANO Nuclear is at the forefront of this technology with its ZEUS microreactor. ZEUS has a distinctive design with a fully sealed core and a highly conductive moderator matrix that dissipates fission energy effectively. The entire core and power conversion system are housed within a single shipping container, making the unit easy to transport to remote locations. Engineered to deliver continuous power for at least 10 years, ZEUS provides a dependable, clean energy solution for isolated areas, and it uses conventional materials to lower costs and shorten time to market.

The ZEUS microreactor’s completely sealed core design eliminates in-core fluids and their associated components, which improves overall system reliability and reduces maintenance requirements. By removing components prone to failure, such as pumps, valves, and piping, the design lowers the likelihood of mechanical failures and leaks. This inherently safer design also eliminates loss-of-coolant scenarios, which are among the most severe types of reactor incidents.

With fewer moving parts, ZEUS requires significantly less maintenance, and the intervals between maintenance activities can be longer. Components that are not exposed to corrosive and erosive fluids have a longer service life, so maintenance is needed less often and is less extensive. The absence of fluids also simplifies inspections and replacements, making routine maintenance easier and quicker and ultimately reducing reactor downtime and operational costs.

Using an open-air Brayton cycle for power conversion in the ZEUS microreactor presents both significant benefits and challenges. The cycle’s high thermodynamic efficiency and mechanical robustness make it suitable for remote locations. By using air as the working fluid, the need for water is eliminated, reducing corrosion risk and making the reactor ideal for arid regions. However, challenges include managing high temperatures and ensuring material durability. Efficient heat exchanger design and advanced control systems are crucial, along with robust filtration and adaptable systems to handle dust and temperature extremes in remote areas.
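For a sense of why the Brayton cycle is described as efficient, consider the textbook air-standard (ideal) Brayton cycle. Its efficiency depends only on the compressor pressure ratio r_p: η = 1 − r_p^(−(γ−1)/γ). The sketch below assumes air with a heat-capacity ratio γ of 1.4. The pressure ratios are arbitrary illustrations, not ZEUS design parameters, and real cycles with component losses fall below these ideal values.

```python
def ideal_brayton_efficiency(pressure_ratio: float, gamma: float = 1.4) -> float:
    """Thermal efficiency of an ideal air-standard Brayton cycle.

    eta = 1 - r_p ** (-(gamma - 1) / gamma), where r_p is the compressor
    pressure ratio and gamma the heat-capacity ratio of the working fluid
    (about 1.4 for air). Real cycles are less efficient due to losses.
    """
    if pressure_ratio <= 1.0:
        raise ValueError("pressure ratio must exceed 1")
    return 1.0 - pressure_ratio ** (-(gamma - 1.0) / gamma)


# Illustrative pressure ratios only; not taken from any reactor design.
for r_p in (4, 8, 12):
    print(f"r_p = {r_p:>2}: ideal efficiency = {ideal_brayton_efficiency(r_p):.1%}")
```

The ideal efficiency rises with pressure ratio. In practice, the choice is bounded by the material-temperature limits mentioned above.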

The highly conductive moderator matrix in the ZEUS microreactor significantly enhances safety and efficiency in dissipating fission energy compared to traditional reactor designs. This advanced matrix ensures superior thermal conductivity, allowing for rapid and efficient heat transfer away from the reactor core. The matrix’s thermal properties also support passive cooling mechanisms, such as natural convection, that operate without external power, adding a critical safety layer during emergencies.

NANO Nuclear is also developing the ODIN advanced nuclear reactor to diversify its technology portfolio. The ODIN design will use conventional fuel with up to 20% enrichment, minimizing development and testing costs. Its low-pressure coolant system improves structural reliability and extends service life, while high-temperature operation provides resilient performance and high power-conversion efficiency. Because ODIN relies on natural convection for heat transfer and decay heat removal, it offers robust safety features that align with the company’s commitment to advancing nuclear technology.

In summary, NANO Nuclear Energy Inc. is pioneering advancements in nuclear energy through its focus on portable microreactor technology and a vertically integrated supply chain. The company’s innovative ZEUS and ODIN reactors, along with its strategic approach to addressing regulatory, technical, and market challenges, position it as a key player in the future of sustainable energy solutions.

The post NANO Nuclear Energy: Pioneering Portable Microreactors and Vertically Integrated Fuel Solutions for Sustainable Power appeared first on ELE Times.

Budget 24-25 calls for India-first schemes & policies to boost industries and morale of the nation

With the idea of “Viksit Bharat” in the making, the Union Budget 24-25 has brought a sense of purpose and accomplishment to the social and economic fabric of the country. Its compelling vision for upskilling, research and development, employment, and women-centric opportunities seems to be a just and progressive way forward.

The government has also stepped up substantially to elevate the electronics and technology industry. The intention is crystal clear and the focus is sharp. The allocation of Rs 21,936 crore to the Ministry of Electronics and Information Technology (MeitY) marks a significant 52% increase over the revised estimates for FY24, which were Rs 14,421 crore. This boost supports various incentive schemes and programs under MeitY, including semiconductor manufacturing, electronics production, and the India AI Mission.

Among the ministry’s schemes, the modified scheme for establishing compound semiconductor, silicon photonics, sensor, and discrete semiconductor fabs, along with facilities for semiconductor assembly, testing, marking, and packaging (ATMP) and outsourced semiconductor assembly and testing (OSAT), received the highest allocation of Rs 4,203 crore, up from Rs 1,424 crore in FY24. In addition, the scheme for setting up semiconductor fabs in India has been allocated Rs 1,500 crore for FY25, a welcome commitment.

The outlay for the production-linked incentive (PLI) scheme for large-scale electronics manufacturing also rose, from Rs 4,489 crore in the revised estimates to Rs 6,125 crore for FY25. For the India AI Mission, the government has allocated Rs 551.75 crore for FY25.
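The percentage changes behind these allocations can be checked with a few lines of Python. This is a simple arithmetic sketch using the article’s rounded figures, in Rs crore.

```python
def pct_change(old: float, new: float) -> float:
    """Percentage change from old to new."""
    return (new - old) / old * 100.0


# Figures in Rs crore, as quoted in the article (old, new).
allocations = {
    "MeitY total (RE FY24 -> FY25)": (14_421, 21_936),
    "Compound semiconductor/ATMP/OSAT scheme": (1_424, 4_203),
    "PLI, large-scale electronics (RE -> FY25)": (4_489, 6_125),
}

for name, (old, new) in allocations.items():
    print(f"{name}: {pct_change(old, new):+.1f}%")
```

With these inputs, the MeitY line comes out at about +52.1%, consistent with the 52% increase cited. The other two lines show roughly +195% and +36%.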

Furthermore, the National Informatics Centre (NIC), which is responsible for e-governance and digital infrastructure, has received an increased outlay of Rs 1,748 crore, up from Rs 1,552 crore in the previous fiscal year’s revised estimates. The substantial rise in MeitY’s budget, to Rs 21,936.9 crore for 2024-25 from Rs 14,421.25 crore for 2023-24, is largely due to the capital allocation for the Modified Programme for Development of Semiconductors and Display Manufacturing Ecosystem in India, which saw a 355% increase to Rs 6,903 crore from Rs 1,503.36 crore in FY24.

Incentive schemes for semiconductors and large-scale electronics manufacturing, as well as IT hardware, are providing significant support to large companies like Micron and Tata Electronics to establish facilities in India. Additionally, Rs 551.75 crore has been allocated for the “India AI Mission” to enhance the country’s AI infrastructure. The previous NDA cabinet had approved over Rs 10,300 crore for the India AI Mission in March, aimed at catalyzing various components, including IndiaAI Compute Capacity, IndiaAI Innovation Centre, IndiaAI Datasets Platform, IndiaAI Application Development Initiative, IndiaAI FutureSkills, IndiaAI Startup Financing, and Safe & Trusted AI.

The other aspects of the budget that focus on the Prime Minister’s “Vocal for Local” vision, including the “PM Surya Ghar Muft Bijli Yojana”, are both timely and commendable. Overall, the budget is sure to empower the Indian spirit and open new growth avenues for indigenous players. I am excited to see how things will pan out for us as a nation in the next decade.

The post Budget 24-25 calls for India-first schemes & policies to boost industries and morale of the nation appeared first on ELE Times.

When did short range radio waves begin to shape our daily life?

Courtesy: u-blox

The roots of short-range wireless communication

You arrive at your smart home after a long day. Your phone automatically connects to the local network, and the temperature inside is perfect, neither too cold nor too hot. As you settle into your favourite couch and put on your wireless headphones, ready to enjoy a good song, a family member asks you to connect your devices to share some files. While you wait, an old radio that once belonged to your grandmother catches your eye. For a moment, everything vanishes, and you catch a glimpse of the past, imagining a distant decade when none of these short-range wireless technologies existed.

All of these activities rely on data carried by radio waves, which travel through the air at the speed of light. Although we cannot see them, radio waves carry information between transmitters and receivers at different frequencies and over different distances. As a fundamental and ubiquitous information carrier, short-range wireless technology is now part of our daily lives. For this to happen, many scientific and technological developments had to come first.

A peek into short-range prehistory

The electric telegraph was the first step, a revolutionary development that took shape in the first decades of the 19th century. Then, in the 1880s, Heinrich Hertz demonstrated the existence of electromagnetic waves (including radio waves), proving that they could be transmitted and received through the air. Building on Hertz’s work, Guglielmo Marconi succeeded in sending a wireless message in 1895.

At the turn of the century, using radio waves for communication was a significant innovation. Thanks to the invention of radio and the development of transmitters and receivers, by the 1920s it was possible to send messages, broadcast media, and listen to human voices and music remotely.

Radios penetrated millions of homes within just a few decades. While audio transmissions opened a new chapter in communications, visual broadcasting became the next challenge. Television quickly emerged as the next widely available communication technology.

The common denominator of these early communications and broadcasting tools was the use of high-power transmitters and radio frequency channels in the lower part of the spectrum. At the time, they were defined as long, medium, and short waves. But since the 1960s, using the specific frequency band or channel for each communication link has been more common than referring to the wavelength.
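The shift from wavelength names to frequency names is easier to see through the relation λ = c / f. The sketch below converts a frequency to a free-space wavelength and maps it to the ITU band designations. The mapping of LF, MF, and HF onto the old long-, medium-, and short-wave labels is only approximate, and the example frequencies are arbitrary.

```python
SPEED_OF_LIGHT = 299_792_458.0  # metres per second

# ITU band designations as (name, lower bound inclusive, upper bound exclusive) in Hz.
ITU_BANDS = [
    ("LF", 30e3, 300e3),   # roughly the old "long wave" broadcast range
    ("MF", 300e3, 3e6),    # roughly "medium wave" (AM broadcasting)
    ("HF", 3e6, 30e6),     # roughly "short wave"
    ("VHF", 30e6, 300e6),
    ("UHF", 300e6, 3e9),
    ("SHF", 3e9, 30e9),
]


def wavelength_m(freq_hz: float) -> float:
    """Free-space wavelength in metres: lambda = c / f."""
    return SPEED_OF_LIGHT / freq_hz


def itu_band(freq_hz: float) -> str:
    """Return the ITU band name covering freq_hz, or 'outside table'."""
    for name, low, high in ITU_BANDS:
        if low <= freq_hz < high:
            return name
    return "outside table"


for f in (200e3, 1e6, 10e6, 2.4e9):
    print(f"{f / 1e6:>8.3f} MHz  {itu_band(f):>4}  {wavelength_m(f):10.3f} m")
```

For example, 1 MHz (in the AM broadcast range) corresponds to a wavelength of roughly 300 m, while 2.4 GHz corresponds to about 12.5 cm.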

For decades, these developments focused on perfecting broadcast technologies, exploring the scope of long-range communication, and reaching ever more distant places. The story didn’t stop there, though. Scientists and engineers went several steps further. They began experimenting with cellular technology for mobile applications in the licensed spectrum, and with short-range radio and wireless technologies in the license-free spectrum, opening up new possibilities for personal and data communication.

The history of short-range and cellular radio technology is rich. For this reason, we will focus on the former for now, while a future blog will cover the latter.

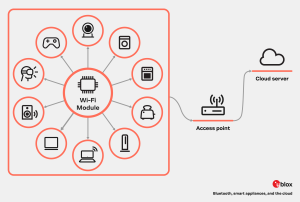

Short-range radio

When we talk about short-range wireless technologies, we mean technologies that let devices communicate within a range of typically up to 10–30 m. Bluetooth and Wi-Fi are the most common. This communication is made possible by short-range wireless chips and modules embedded in smartphones and many other devices, enabling them to connect and communicate with others nearby.

Once the long-range transmission infrastructure and broadcast systems were in place, interest in short-range communications surged about forty years ago. The U.S. Federal Communications Commission opened up spectrum so that civilian devices could transmit at 900 MHz, 2.4 GHz, and 5.8 GHz. With the development of various communication technologies, the era of short-range wireless technology was about to begin.

Wi-Fi

We are all familiar with this term, and today, the first thing we do when we arrive at a new place, be it a friend’s house, a restaurant, or a train station, is to request the Wi-Fi password. Once your phone is ‘in,’ high-speed data transfer via radio waves begins.

What were you up to in the 1980s? While many of us were immersed in 80s culture, including fashion, music, and movies, technology companies were busy building the infrastructure for wireless local area networks (WLANs). Relying on this infrastructure, manufacturers began producing tons of devices. Soon, the incompatibility between devices from different brands led to an uncertain period that yearned for a common wireless standard.

This period came to an end with an agreement in 1997. The Institute of Electrical and Electronics Engineers released the common 802.11 standard, uniting some of the largest companies in the industry and paving the way for the Wireless Ethernet Compatibility Alliance (WECA). With the 802.11 standard, the technology soon to be known as Wi-Fi was born.

In 2000, WECA began certifying products under the Wi-Fi brand, popularizing the term Wi-Fi (often glossed as “Wireless Fidelity”). The organization was renamed the Wi-Fi Alliance in 2002. In the years that followed, Alliance members devoted much effort to security, new use cases, and interoperability for Wi-Fi products.

Bluetooth

An iconic piece of technology from the 80s was the Walkman. It was everywhere, and everyone loved it. Making a mixtape with at least an hour of music was the equivalent of creating your favourite playlists on Spotify.

Invented in the late 1970s, the Walkman was so revolutionary that it remained on the market for about 40 years, with sales peaking in the first two decades.

While highly innovative, this technology had one major drawback: the cord. When you exercised or engaged in any activity that required movement, you would inevitably get stuck or tangled in the objects around you.

The idea for Bluetooth technology originated in a 1989 patent by Johan Ullman, a Swedish physician born in 1953. He obtained the patent while researching analog cordless headsets for mobile phones, possibly inspired by the inconvenience of tangled wires while using a Walkman. His work laid the foundation for wireless headsets.

Turning Ullman’s idea into reality became one of Ericsson’s most ambitious endeavors of the 1990s. Building on his patent and another one from 1992, Nils Rydbeck, then CTO of Ericsson Mobile, commissioned a team of engineers led by Sven Mattisson to develop what we know today as Bluetooth technology. To commemorate the innovation, Ericsson erected a modern runestone replica in Lund in 1999 in memory of Harald Bluetooth.

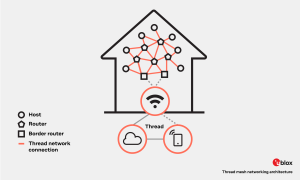

Thread

Although not defined as a short-range technology, this networking protocol is a newer tool for smart home and Internet of Things (IoT) applications. It is highly advantageous because it can provide reliable, low-power, and secure connectivity.

Thread’s origins date back to 2013, when a team at Nest Labs set out to develop a new networking protocol for smart home devices. The company had already created a protocol called Nest Weave. Much like early Wi-Fi, it had a significant shortcoming: a lack of interoperability between devices from different manufacturers.

With the advent of IoT devices, the need for a dedicated networking protocol became evident. In 2015, the Thread Group (initially consisting of seven companies, including Samsung, and later joined by Google and Apple) released the Thread Specification 1.0.

This specification defined the details of the networking protocol designed for IoT devices. Critical for manufacturers, this protocol enables the development of secure and reliable Thread-compatible devices and facilitates communication between smart devices in home environments.

This networking protocol stands out because of its mesh networking architecture, a key differentiator. The architecture enables multiple devices, or nodes, to form a mesh network in which each device can communicate with the other members of the network, either directly or by relaying through neighbouring nodes. A mesh topology keeps communication efficient and reliable, even when specific nodes fail or are unavailable.
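A plain breadth-first search over a small set of smart home devices shows why a mesh tolerates failed nodes: if one node drops out, a route through other neighbours can still be found. This is a generic graph sketch, not Thread’s actual routing algorithm, and the device names and links are invented for illustration.

```python
from collections import deque


def find_route(links, src, dst, failed=frozenset()):
    """Breadth-first search for a fewest-hops path that avoids failed nodes."""
    if src in failed or dst in failed:
        return None
    prev = {src: None}          # node -> node it was reached from
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            path = []
            while node is not None:   # walk back to the source
                path.append(node)
                node = prev[node]
            return path[::-1]
        for nxt in links.get(node, ()):
            if nxt not in prev and nxt not in failed:
                prev[nxt] = node
                queue.append(nxt)
    return None                 # no surviving route


# Hypothetical home mesh: each key lists the nodes within its radio range.
mesh = {
    "thermostat": ["hub", "lamp"],
    "hub": ["thermostat", "lamp", "lock"],
    "lamp": ["thermostat", "hub", "plug"],
    "plug": ["lamp", "lock"],
    "lock": ["hub", "plug"],
}
print(find_route(mesh, "thermostat", "lock"))
print(find_route(mesh, "thermostat", "lock", failed={"hub"}))
```

With all nodes up, the thermostat reaches the lock through the hub. If the hub fails, the search falls back to a longer path through the lamp and the plug.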

Thread technology has gained traction and support over the past decade, particularly among companies developing solutions for the smart home and IoT ecosystem. Device manufacturers, semiconductor companies, software developers, and service providers all recognize the relevance of this protocol for building connected and interoperable smart home systems.

Wave me up before you go!

The amount of data transmitted over the air has never been greater than it is today. Signal transmission between electronic devices has increased exponentially. Both long- and short-range waves enable transmission and communication to, from, and between devices, allowing them to join networks to access the Internet, for instance. Today, a myriad of radio waves surrounds us.

Over the past 34 years, each of these short-range technologies (along with the Thread protocol) has contributed to advancing connectivity in various industries, including automotive, industrial automation, and many others. Until recently, they did so independently.

Today, the challenge for manufacturers and other stakeholders is choosing the most appropriate technology for each application, such as Bluetooth or Thread. They have also realized that combining these technologies can further advance the possibilities of IoT connectivity.

Next time you connect your smartphone to your wireless headphones, ask for the network password at a coffee shop, or communicate with colleagues on a Thread network, take a moment to remember the steps needed to live in such a connected world.

The post When did short range radio waves begin to shape our daily life? appeared first on ELE Times.

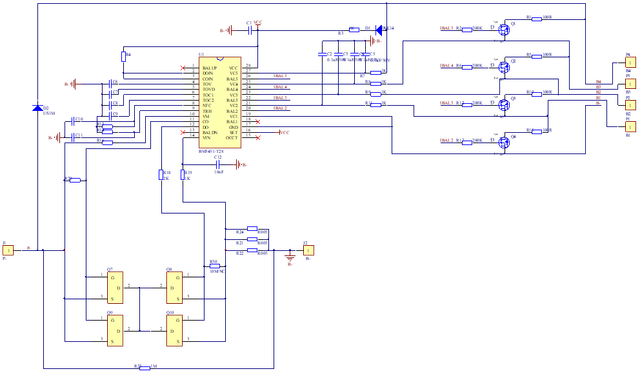

STM32CubeProgrammer 2.17 simplifies serial numbering and option byte configurations

Author : STMicroelectronics

STM32CubeProgrammer 2.17 is the very definition of a quality-of-life improvement. While it ensures support for the latest STM32s, it also brings features that make a developer’s workflow more straightforward. These include writing ASCII strings in memory, automatically incrementing serial numbers, and exporting and importing option bytes. This new release also shows how ST listens to its community, as our continued work on better support for SEGGER probes demonstrates. In its own way, each release of STM32CubeProgrammer is a conversation with STM32 developers, and we can’t wait to hear what everyone has to say.

What’s new in STM32CubeProgrammer 2.17?

New MCU Support

This latest version of STM32CubeProgrammer supports STM32C0s with 128 KB of flash. It also recognizes the STM32MP25, which includes a 1.35-TOPS NPU, and all the STM32WB0s we recently released, including the STM32WB05, STM32WB05xN, STM32WB06, and STM32WB07. We announced the STM32WB0s just a few weeks ago, which shows that STM32CubeProgrammer keeps up with the latest releases so developers can flash and debug their code on the newest STM32s as soon as possible.

New Quality-of-Life Improvements

The other updates in STM32CubeProgrammer 2.17 aim to make a developer’s job easier by tailoring our utility to their workflow. For instance, we continue to build on our previous support for SEGGER’s J-Link and Flasher probes, which now support read protection level (RDP) regression with a password. This bridges the gap between what’s possible with an STLINK probe and what’s available on the SEGGER models, so developers already using our partner’s probes won’t feel like they are missing out. Version 2.17 also adds the ability to generate serial numbers and automatically increment them within STM32CubeProgrammer, speeding up the flashing of multiple STM32s in one batch.
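Conceptually, batch serial numbering means generating a consecutive sequence and writing each value into the same memory field of successive devices. The Python sketch below illustrates only that idea. The prefix, zero-padding width, and 16-byte field size are hypothetical choices, and the sketch does not reproduce STM32CubeProgrammer’s own serial-number format or settings.

```python
from typing import List


def serial_batch(prefix: str, start: int, count: int, width: int = 6) -> List[str]:
    """Return `count` consecutive serial numbers such as 'SN-000042' (hypothetical format)."""
    return [f"{prefix}{n:0{width}d}" for n in range(start, start + count)]


def serial_to_field(serial: str, field_len: int = 16) -> bytes:
    """Encode a serial as ASCII, zero-padded to a fixed-size memory field (hypothetical layout)."""
    raw = serial.encode("ascii")
    if len(raw) > field_len:
        raise ValueError("serial number does not fit in the field")
    return raw.ljust(field_len, b"\x00")


# One serial per board in a batch of three, starting from 41.
for serial in serial_batch("SN-", 41, 3):
    print(serial, serial_to_field(serial).hex())
```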

Other quality-of-life improvements aim to make STM32CubeProgrammer more intuitive. For instance, it is now possible to export an STM32’s option bytes. Put simply, option bytes store configuration options, such as read-out protection levels, watchdog settings, power modes, and more. The MCU loads them early in the boot process, and they reside in a specific part of memory that’s only accessible by debugging tools or the bootloader. By offering the ability to export and import option bytes, STM32CubeProgrammer makes configuring MCUs much easier. Similarly, version 2.17 can now edit memory fields in ASCII, making certain sections a lot more readable.
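STM32 cores are little-endian, so when memory is displayed as 32-bit words, text appears with its bytes reversed inside each word. That is why an ASCII view helps. The sketch below decodes a two-word dump back into characters. The dump contents are invented for illustration, and the function is not part of STM32CubeProgrammer.

```python
import struct


def words_to_ascii(words, little_endian=True):
    """Render 32-bit memory words as ASCII, printing '.' for non-printable bytes."""
    fmt = "<I" if little_endian else ">I"
    raw = b"".join(struct.pack(fmt, w) for w in words)
    return "".join(chr(b) if 0x20 <= b < 0x7F else "." for b in raw)


# Hypothetical two-word dump from a little-endian STM32 memory region.
dump = [0x334D5453, 0x00000032]
print(" ".join(f"{w:08X}" for w in dump))  # word view
print(words_to_ascii(dump))                 # ASCII view
```

As words, the dump reads 334D5453 00000032. The ASCII rendering reads STM32..., with the three trailing zero bytes shown as dots.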

What is STM32CubeProgrammer?

An STM32 flasher and debugger

At its core, STM32CubeProgrammer helps debug and flash STM32 microcontrollers, and it includes features that optimize both processes. For instance, version 2.6 introduced the ability to dump the entire register map and edit any register on the fly. Previously, changing a register’s value meant changing the source code, recompiling it, and flashing the firmware. Testing new parameters or determining whether a value is causing a bug is much simpler today. Similarly, engineers can use STM32CubeProgrammer to flash all external memories simultaneously. Traditionally, flashing the external embedded storage and an SD card required developers to launch each process separately. STM32CubeProgrammer can do it in one step.

Another challenge for developers is parsing the massive amount of information passing through STM32CubeProgrammer. Anyone who flashes firmware knows how difficult it is to track all logs. Hence, we introduced custom traces that allow developers to assign a color to a particular function, so they can rapidly distinguish a specific output from the rest of the log. Debugging thus becomes a lot more straightforward and intuitive. Custom traces can also help developers coordinate their color scheme with STM32CubeIDE, another member of our unique ecosystem designed to empower creators.

STM32CubeProgrammer

What are some of its key features?

New MCU support

Most new versions of STM32CubeProgrammer support a slew of new MCUs. For instance, version 2.16 brought compatibility with the 256 KB version of the STM32U0s. The STM32U0 was the new ultra-low-power flagship for entry-level applications thanks to a static power consumption of only 16 nA in standby. STM32CubeProgrammer 2.16 also brought support for the 512 KB version of the STM32H5, as well as the STM32H7R and STM32H7S. These come with less flash so that integrators who must use external memory anyway can reduce their costs. Put simply, ST strives to update STM32CubeProgrammer as quickly as possible so our community can take advantage of our newest platforms efficiently.

SEGGER J-Link probe support

To help developers optimize workflow, we’ve worked with SEGGER to support the J-Link probe fully. This means that the hardware flasher has access to features that were previously only available on an ST-LINK module. For instance, the SEGGER system can program internal and external memory or tweak the read protection level (RDP). Furthermore, using the J-Link with STM32CubeProgrammer means developers can view and modify registers. We know that many STM32 customers use the SEGGER probe because it enables them to work with more MCUs, it is fast, or they’ve adopted software by SEGGER. Hence, STM32CubeProgrammer made the J-Link vastly more useful, so developers can do more without leaving the ST software.

Automating the installation of a Bluetooth LE stack

Until now, developers updating their Bluetooth LE wireless stack had to figure out the address of the first memory block to use, which varied based on the STM32WB and the type of stack used. For instance, installing the basic stack on the STM32WB5x would start at address 0x080D1000, whereas a full stack on the same device would start at 0x080C7000, and the same package starts at 0x0805A000 on the STM32WB3x with 512 KB of memory. Developers often had to find the start address in STM32CubeWB/Projects/STM32WB_Copro_Wireless_Binaries. The new version of STM32CubeProgrammer comes with an algorithm that determines the right start address based on the current wireless stack version, the device, and the stack to install.
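The manual lookup that the new algorithm replaces can be pictured as a table keyed by device and stack type. The sketch below contains only the three start addresses quoted in this section, and the key names are hypothetical. Because the real addresses also depend on the wireless stack version, a hand-maintained table like this would go stale with each stack release, which is why automating the choice helps.

```python
FLASH_BASE = 0x0800_0000  # start of internal flash on the STM32WB

# Start addresses quoted in the article, keyed by (device, stack type).
# The key names are hypothetical, and the real addresses also vary with
# the wireless stack version.
STACK_START = {
    ("STM32WB5x", "basic"): 0x080D1000,
    ("STM32WB5x", "full"): 0x080C7000,
    ("STM32WB3x-512KB", "full"): 0x0805A000,
}


def stack_start(device: str, stack: str) -> int:
    """Look up the flash address where a wireless stack image should begin."""
    try:
        return STACK_START[(device, stack)]
    except KeyError:
        raise ValueError(f"no known start address for the {stack} stack on {device}") from None


addr = stack_start("STM32WB5x", "full")
offset_kb = (addr - FLASH_BASE) // 1024
print(f"{hex(addr)} ({offset_kb} KB above the flash base)")
```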

A portal to security on STM32

Readers of the ST Blog know STM32CubeProgrammer as a central piece of the security solutions present in the STM32Cube Ecosystem. The utility comes with Trusted Package Creator, which enables developers to upload an OEM key to a hardware secure module and to encrypt their firmware using the same key. OEMs then use STM32CubeProgrammer to securely install the firmware onto an STM32 microcontroller that supports SFI. Developers can even use an I2C or SPI interface, which gives them greater flexibility. Additionally, the STM32H735, STM32H7B, STM32L5, STM32U5, and STM32H5 also support external secure firmware install (SFIx), meaning that OEMs can flash the encrypted binary on memory modules outside the microcontroller.

Secure Manager

Secure Manager has been officially supported since STM32CubeProgrammer 2.14 and STM32CubeMX 1.13. Currently, the feature is exclusive to our new high-performance MCU, the STM32H573, which supports secure ST firmware installation (SSFI) without requiring a hardware secure module (HSM). In a nutshell, it provides a straightforward way to manage the entire security ecosystem on an STM32 MCU through binaries, libraries, code implementations, documentation, and more. Consequently, developers enjoy turnkey solutions in STM32CubeMX while flashing and debugging them with STM32CubeProgrammer. It is thus an example of how STM32H5 hardware and Secure Manager software come together to create something greater than the sum of its parts.

Other security features for the STM32H5

STM32CubeProgrammer enables many other security features on the STM32H5. For instance, the MCU supports secure firmware installation on internal memory (SFI) and on an external memory module (SFIx), which allows OEMs to flash encrypted firmware with the help of a hardware secure module (HSM). Similarly, the utility supports certificate generation on the new MCU when using Trusted Package Creator and an HSM. Finally, the utility adds SFI and SFIx support for STM32U5 devices with 2 MB and 4 MB of flash.

Making SFI more accessible

The STM32HSM used for SFI with STM32CubeProgrammer

Since version 2.11, STM32CubeProgrammer has received significant improvements to its secure firmware install (SFI) capabilities. For instance, version 2.15 added support for the STM32WBA5. We also added a graphical user interface that highlights addresses and HSM information. The Trusted Package Creator GUI received a new layout under the SFI and SFIx tabs that exposes the information needed to set up a secure firmware install, as well as a graphical representation of the various option bytes to facilitate their configuration.

Secure secret provisioning for STM32MPx

Since version 2.12, STM32CubeProgrammer has offered a graphical user interface to help developers set up the parameters for the secure secret provisioning (SSP) available on STM32MPx microprocessors. The mechanism is similar to the secure firmware install available on STM32 microcontrollers: it uses a hardware secure module to store encryption keys and relies on secure communication between the flasher and the device. However, a microprocessor has more parameters to configure. To expedite workflows, STM32CubeProgrammer’s GUI now exposes settings that were previously available only in the CLI version of the utility.

Double authentication

Since version 2.9, STM32CubeProgrammer has supported a double authentication system when provisioning encryption keys via JTAG or the bootloader for the Bluetooth stack on the STM32WB. Put simply, the feature enables makers to protect their Bluetooth stack against updates from end users. Ordinarily, anyone who knows what they are doing can update the Bluetooth stack with ST’s secure firmware. However, a manufacturer may offer a particular environment and may therefore wish to protect it. The double authentication system thus prevents end users from accessing the update mechanism. ST published application note AN5185 with more details.

PKCS#11 support

Since version 2.9, STM32CubeProgrammer has supported PKCS#11 when encrypting firmware for the STM32MP1. PKCS#11, part of the Public-Key Cryptography Standards (PKCS) and also called Cryptoki, is a standard that governs cryptographic processes at a low level. It is gaining popularity as APIs help embedded system developers exploit its mechanisms. On an STM32MP1, PKCS#11 allows engineers to separate the storage of the private key from the encryption process used for secure secret provisioning (SSP).

SSP is the equivalent of a secure firmware install for MPUs. Before sending their code to OEMs, developers use STM32CubeProgrammer to encrypt their firmware with a public-private key system, which makes the IP unreadable by third parties. During assembly, OEMs use the provided hardware secure module (HSM), which contains a protected encryption key, to load the firmware that the MPU will decrypt internally. However, until now, developers encrypting the MPU’s code had access to the private key, and some organizations must limit access to such critical information. Thanks to the new STM32CubeProgrammer and PKCS#11, the private key remains hidden in an HSM, even while developers encrypt the firmware.
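The key idea behind PKCS#11 here is that software refers to a key only through an opaque handle, while the cryptographic operation runs inside the HSM. The toy Python class below illustrates that separation. It is hypothetical and is not the PKCS#11 API (which exposes C functions such as C_Sign that operate on object handles), and it uses HMAC-SHA256 as a stand-in for the asymmetric operations a real SSP flow would use.

```python
import hashlib
import hmac
import secrets


class ToyHsm:
    """Toy HSM: keys stay inside and are referenced only by integer handles."""

    def __init__(self) -> None:
        self._keys = {}        # handle -> secret key bytes; never returned to callers
        self._next_handle = 1

    def generate_key(self) -> int:
        handle = self._next_handle
        self._next_handle += 1
        self._keys[handle] = secrets.token_bytes(32)
        return handle          # the caller only ever sees this integer

    def sign(self, handle: int, data: bytes) -> bytes:
        # The computation happens "inside" the HSM; only the result leaves it.
        return hmac.new(self._keys[handle], data, hashlib.sha256).digest()

    def verify(self, handle: int, data: bytes, tag: bytes) -> bool:
        return hmac.compare_digest(self.sign(handle, data), tag)


if __name__ == "__main__":
    hsm = ToyHsm()
    key = hsm.generate_key()
    image = b"firmware image"
    tag = hsm.sign(key, image)
    print(key, tag.hex())
```

The developer workflow never touches the key bytes, which mirrors the organizational benefit described above: who may *use* a key and who may *see* it become separate questions.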

Supporting new STM32 MCUs

Access to the STM32MP13’s bare metal

Microcontrollers typically run real-time operating systems because of their limited resources and because event-driven paradigms often require a high level of determinism when executing tasks. Conversely, microprocessors have far more resources and handle parallel tasks better, so they use a multitasking operating system, like OpenSTLinux, our Embedded Linux distribution. However, many customers familiar with the STM32 MCU world have been asking for a way to run an RTOS on our MPUs as an alternative. In a nutshell, they want the familiar ecosystem of an RTOS and the optimizations that come from running bare-metal code, combined with the resources of a microprocessor.

Consequently, we are releasing STM32CubeMP13 today, which comes with the tools to run a real-time operating system on our MPU. We go into more detail about what’s in the package in our STM32MP13 blog post. To make this initiative possible, ST also updated its STM32Cube utilities, such as STM32CubeProgrammer. For instance, we had to ensure that developers could flash the NOR memory. Similarly, STM32CubeProgrammer enables the use of an RTOS on the STM32MP13 by supporting a one-time programmable (OTP) partition.

Traditionally, MPUs use a bootloader, like U-Boot, to load the Linux kernel securely and efficiently. The bootloader is thus a key early step in a boot process that starts by reading the OTP partition. Hence, as developers move from a multitasking OS to an RTOS, it was essential that STM32CubeProgrammer enable them to program the OTP partition so they could load their operating system. The new STM32CubeProgrammer version also demonstrates how the ST ecosystem works together to release new features.

STM32WB and STM32WBA support

Since version 2.12, STM32CubeProgrammer has brought numerous improvements to the STM32WB series, which is increasingly popular in machine learning applications, as we saw at electronica 2022. Specifically, the software brings new graphical tools and an updated wireless stack to assist developers. For instance, the tool gives more explicit guidance when it encounters errors, such as when developers try to update a wireless stack with anti-rollback activated but forget to load the previous stack. Similarly, new messages ensure users know when a stack version is incompatible with a firmware update. Finally, STM32CubeProgrammer provides new links to download STM32WB patches and get tips and tricks, so developers don’t have to hunt for them.

STM32CubeProgrammer also supports the new STM32WBA, the first wireless STM32 with a Cortex-M33 core. Made official a few months ago, the MCU opens the way to Bluetooth Low Energy 5.3 and SESIP Level 3 certification. It also has a more powerful radio that can reach up to +10 dBm output power for a more robust signal.
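For context on the +10 dBm figure: dBm expresses power relative to 1 mW, so +10 dBm is 10 mW, ten times a 0 dBm (1 mW) reference. The short sketch below does the conversion and, under an idealized free-space assumption where path loss grows with the square of distance, estimates how far 10 dB of extra link budget could stretch range. Real indoor propagation is messier, so treat the range factor as an optimistic intuition rather than a prediction.

```python
import math


def dbm_to_mw(dbm: float) -> float:
    """Convert a power level in dBm (decibels relative to 1 mW) to milliwatts."""
    return 10 ** (dbm / 10)


def mw_to_dbm(mw: float) -> float:
    """Convert milliwatts back to dBm."""
    return 10 * math.log10(mw)


def free_space_range_factor(extra_db: float) -> float:
    """Range multiplier for extra link budget, assuming free-space (1/d^2) loss."""
    return 10 ** (extra_db / 20)


if __name__ == "__main__":
    print(dbm_to_mw(10.0), dbm_to_mw(0.0))      # 10 mW vs 1 mW
    print(round(free_space_range_factor(10.0), 2))
```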

STM32H5 and STM32U5

Support for the STM32H5 began with STM32CubeProgrammer 2.13, which added compatibility with STM32H5 MCUs with anywhere from 128 KB up to 2 MB of flash. Initially, the utility brought security features like debug authentication and authentication key provisioning, which are critical when using the new lifecycle management system. The utility also supported key and certificate generation, as well as firmware encryption and signing. Over time, ST added support for the new STM32U535 and STM32U545 with 512 KB and 4 MB of flash. These MCUs benefit from RDP regression with a password, which facilitates development, and from SFI secure programming.

Additionally, STM32CubeProgrammer includes an interface for read-out protection (RDP) regression with a password for STM32U5xx. Developers can define a password and move from level 2, which turns off all debug features, to level 1, which protects the flash against certain reading or dumping operations, or to level 0, which has no protections. It will thus make prototyping vastly simpler.
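The password-based regression flow can be modeled as a small state machine. The sketch below is a hypothetical Python model, not an ST API: raising protection is always allowed, while lowering it requires the regression password. It also assumes, as is typical on STM32 devices, that dropping to level 0 triggers a flash mass erase so the firmware cannot simply be read out afterward; the reference manual of the specific part is the authority on its exact behavior.

```python
import secrets
from enum import IntEnum


class Rdp(IntEnum):
    LEVEL_0 = 0  # no protection
    LEVEL_1 = 1  # flash protected against certain read/dump operations
    LEVEL_2 = 2  # all debug features turned off


class ToyRdpDevice:
    """Hypothetical model of password-based RDP regression (not an ST API)."""

    def __init__(self, regression_password: str) -> None:
        self.level = Rdp.LEVEL_0
        self.flash_erased = False
        self._password = regression_password

    def raise_level(self, new_level: Rdp) -> None:
        # Increasing protection never requires the password.
        if new_level < self.level:
            raise ValueError("use regress() to lower the protection level")
        self.level = new_level

    def regress(self, target: Rdp, password: str) -> None:
        if target >= self.level:
            raise ValueError("regression must lower the protection level")
        # Constant-time comparison, as for any secret check.
        if not secrets.compare_digest(password, self._password):
            raise PermissionError("wrong regression password")
        if target == Rdp.LEVEL_0:
            # Assumption modeled here: reaching level 0 wipes the flash.
            self.flash_erased = True
        self.level = target
```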

STLINK-V3PWR

In many instances, developers use an STLINK probe with STM32CubeProgrammer to flash or debug their device. Hence, we quickly added support for our latest probe, the STLINK-V3PWR, the most extensive source measurement unit and programmer/debugger for STM32 devices. Users who want to see energy profiles and visualize the current draw must use STM32CubeMonitor-Power. However, STM32CubeProgrammer serves as the interface for all debug features. It can also work with all the probe’s interfaces, such as SPI, UART, I2C, and CAN.

Script mode

The software includes a command-line interface (CLI) to enable the creation of scripts. Since the script manager is part of the application, it doesn’t depend on the operating system or its shell environment. As a result, scripts are highly shareable. Another advantage is that the script manager can maintain connections to the target. Consequently, the STM32CubeProgrammer CLI can keep a connection alive throughout a session without reconnecting after every command. It can also handle local variables and even supports arithmetic or logic operations on these variables. To make the CLI even more powerful, the script manager also supports loops and conditional statements, so developers can create powerful macros to automate complex processes.
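STM32CubeProgrammer’s actual script syntax is not shown in this post, so rather than guess at it, the Python sketch below models the two benefits described above with a hypothetical ToyTarget class: a script that keeps one connection open for the whole session versus standalone commands that reconnect each time, plus a macro that uses a local variable, arithmetic, a loop, and a conditional. The addresses and values are illustrative only.

```python
class ToyTarget:
    """Hypothetical stand-in for a debug connection to an MCU."""

    def __init__(self) -> None:
        self.connections = 0   # how many times a connection was set up
        self.memory = {}       # address -> 32-bit word written so far

    def connect(self) -> None:
        self.connections += 1

    def write_word(self, address: int, value: int) -> None:
        self.memory[address] = value & 0xFFFFFFFF


def run_one_shot(target: ToyTarget, writes: list) -> None:
    # Each standalone command reconnects before doing its work.
    for address, value in writes:
        target.connect()
        target.write_word(address, value)


def run_script(target: ToyTarget, base: int, count: int) -> None:
    # One connection for the whole session.
    target.connect()
    for i in range(count):                              # loop
        address = base + 4 * i                          # arithmetic on a local variable
        value = i if i % 2 == 0 else 0xFFFFFFFF         # conditional
        target.write_word(address, value)


if __name__ == "__main__":
    a, b = ToyTarget(), ToyTarget()
    run_one_shot(a, [(0x20000000 + 4 * i, i) for i in range(8)])
    run_script(b, 0x20000000, 8)
    print(a.connections, b.connections)  # reconnect-per-command vs single session
```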

A unifying experience

STM32CubeProgrammer aims to unify the user experience. ST brought all the features of utilities like the ST-LINK Utility, the DFU tools, and others to STM32CubeProgrammer, which became a one-stop shop for developers working on embedded systems. We also designed it to work on all major operating systems and even embedded OpenJDK 8 (Liberica) to facilitate its installation. Consequently, users do not need to install Java themselves and struggle with compatibility issues before using STM32CubeProgrammer.

Qt 6 support

Since version 2.16, STM32CubeProgrammer has used Qt 6, the latest major version of the framework. As a result, STM32CubeProgrammer no longer runs on Windows 7 or Ubuntu 18.04. However, Qt 6 patches security vulnerabilities, brings bug fixes, and comes with significant quality-of-life improvements.

The post STM32CubeProgrammer 2.17 simplifies serial numbering and option byte configurations appeared first on ELE Times.

How Synopsys IP and TSMC’s N12e Process are Driving AIoT

Hezi Saar | Synopsys

Artificial intelligence (AI) is revolutionizing nearly every aspect of our lives across all industries, driving the transformation of technology from development to consumption and reshaping how we work, communicate, and interact. Meanwhile, the Internet of Things (IoT) connects everyday objects to the internet, creating a network of interconnected devices that improves efficiency and convenience in our lives.

The union of AI and IoT, known as AIoT, integrates AI capabilities into IoT devices and is poised to change our lives further and drive the semiconductor industry’s expansion for the foreseeable future. AIoT devices can analyze and interpret data in real time, enabling smart decisions and autonomous adaptation to observed conditions. Promising heightened intelligence, connectivity, and device interactivity, AIoT can handle vast data volumes without relying on cloud-based processing.

Within AIoT devices, AI is integrated into infrastructure components, including programs and chipsets, all interconnected via IoT networks. From smart cities to smart homes and industrial automation, AIoT applications require real-time data processing, which calls for high-capacity on-chip memory and ample compute power at minimal power consumption.

Read on to learn more about the opportunities and challenges of AIoT applications at the edge as well as Synopsys IP on TSMC’s N12e process and how it supports pervasive AI at the edge.

AIoT Applications at the Edge

AI is truly everywhere and can be found in data centers, cars, and high-end compute devices. However, processing data at or close to its source complements the cloud-based AI approach: it enables immediate processing and fast results for optimal service, more personalized functions for the user, better protection of information and privacy, and additional reliability.

Smartwatches, security cameras, smart fridges, automation-enabled factory machinery, smart traffic lights, and more are all considered AIoT devices. Each of these devices is unique in some way, which requires chip designers to find the right balance between performance, power usage, and cost.

For an application like smart cities, low power is the dominant factor (although performance can’t be completely ignored). For example, consider a smart streetlamp with sensing capabilities that is programmed to turn on at sunset and off at sunrise. With an average streetlamp measuring around 30 feet tall, replacing a burnt-out light bulb or any other component is a large, costly, and time-consuming task. Also, controlling when the lights are on at night and running them at lower intensity is more cost-effective and environmentally friendly, and it reduces the light pollution that streetlamps usually cause. That’s why designing these smart devices to consume as little power as possible over years of use is so important: it extends the life of the streetlamp and enables a smart city environment.

Additionally, minimizing power consumption naturally leads to lower cost, smaller size, and lighter weight. It can also help improve the user experience, increase silicon reliability, maximize the lifespan of the IoT device, and lessen environmental impact. Overall, AIoT applications are driving demand for high-performance, low-latency memory interfaces on low-leakage nodes.

AIoT Products and Their Corresponding Power-Saving Approach

Many different power-saving approaches can be built into the IP and, ultimately, the chip, depending on how the AIoT device is powered.

- Battery-Powered: Sensors that detect water, fire/smoke, intruders, etc. stay idle until an alarm, camera, or Wi-Fi trigger is detected. Often, the entire sensor needs to be replaced after the job is finished. External power gating (described below) is the best solution. Other battery-powered applications, such as door locks and key fobs, allow for battery replacement and may require USB 1.1/2.0 connectivity with a power island supplied from VBUS, as well as NVM.

- Battery-Powered with Energy Harvesting: Examples of this type include doorbells, security cameras, environment sensors, electronic price tags, remote controls, and more. Interface IP opportunities to address these products include CSI for cameras, M-PHY or eMMC for storage, SPI/PCIe for Wi-Fi, DSI for displays, and USB 2.0 for advanced products to assist with charging and firmware download.

- Portable: Users charge these products when needed, depending on the use case. For instance, wearables, personal infotainment devices, audio headsets, e-readers, etc. need to be charged every few days to several weeks, depending on how often they are used. For other devices, like laptops and phones, saving power when they are not connected to an external power source is mandatory. This calls for fast sleep/resume and, where applicable, power gating.

- Stationary: Devices for home networking, home automation, and security, as well as home hubs like the Amazon Echo Show or Google Nest, either sit in a docking station most of the time or must be plugged in all the time, with a battery backup to retain settings. Fast sleep/resume and DVFS are both useful for saving power.

The semiconductor industry considers 16nm and 12nm to be “long nodes” (nodes that will be around for many years to come) for consumer, IoT, wireless, and certain automotive applications. These nodes suit AI workloads because the FinFET process gives them strong performance while keeping them cost-effective and low power.

TSMC has invested in boosting the performance and power efficiency of these nodes, making them even more appealing for power-conscious designs. For example, N12e offers a device boost for higher density with good performance/power tradeoffs, as well as ultra-low-leakage static random-access memory (SRAM).

Not only does this provide approximately 15% power savings and the memory required to process all that data at the edge, but it is also compatible with existing design rules to minimize IP investment. That’s where Synopsys comes in.
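A quick worked example helps interpret the roughly 15% figure. Assuming, hypothetically, that the saving applied to a device’s entire average power draw, battery life on a fixed capacity scales as 1/(1 − 0.15), i.e. about 17.6% longer. The capacity and power values below are placeholders; the resulting ratio does not depend on them.

```python
def battery_life_hours(capacity_mwh: float, avg_power_mw: float) -> float:
    """Hours of operation for a given battery energy and average power draw."""
    return capacity_mwh / avg_power_mw


CAPACITY_MWH = 1000.0  # hypothetical battery energy
BASELINE_MW = 2.0      # hypothetical average device power
SAVING = 0.15          # the ~15% power saving quoted for N12e

baseline = battery_life_hours(CAPACITY_MWH, BASELINE_MW)
improved = battery_life_hours(CAPACITY_MWH, BASELINE_MW * (1 - SAVING))
gain = improved / baseline - 1  # equals SAVING / (1 - SAVING), about 0.176

if __name__ == "__main__":
    print(f"{baseline:.0f} h -> {improved:.0f} h (+{gain:.1%})")
```

Note the asymmetry: cutting power by 15% extends runtime by more than 15%, because runtime is inversely proportional to power.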

Synopsys IP reduces leakage even further through a variety of different techniques:

- Power Gating: This technique can be entered from an active state or from a disabled (“turned-off”) state. Entering from an active state requires state retention and restoration, so that the IP can resume from its current state once power gating is exited. This mode requires circuits such as always-on domain retention and power control logic. Entering power gating from a disabled state requires IP that supports power collapsing; the IP must then be restarted after power gating is exited.

- Voltage Scaling: IP is also available to scale the voltage down to reduce leakage consumption. There are two types of voltage scaling: dynamic voltage scaling and dynamic voltage and frequency scaling (DVFS). With DVFS, the IP keeps performing functional activity while the voltage is reduced, and the frequency is lowered accordingly so that timing requirements are still met.

- Retention: In this technique, the voltage is reduced to a level where registers still hold their current values, but the IP is not expected to perform any functional activity. This means there is no toggling (the IP is in IDLE mode), so no setup/hold timing sign-off is needed at that voltage.
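To put numbers on the voltage-scaling item, here is a minimal sketch using the textbook CMOS switching-power estimate P = αCV²f. The operating points (0.8 V at 200 MHz versus 0.6 V at 100 MHz), the activity factor, and the capacitance are hypothetical. The model covers dynamic power only; leakage also falls as voltage drops, but it follows a different, process-dependent curve that this sketch does not attempt to capture.

```python
def dynamic_power_w(activity: float, capacitance_f: float,
                    voltage_v: float, freq_hz: float) -> float:
    """Classic CMOS switching-power estimate: P = alpha * C * V^2 * f (watts)."""
    return activity * capacitance_f * voltage_v ** 2 * freq_hz


# Hypothetical operating points for one IP block.
nominal = dynamic_power_w(0.1, 1e-9, 0.8, 200e6)   # full speed
scaled = dynamic_power_w(0.1, 1e-9, 0.6, 100e6)    # DVFS: lower V, lower f
ratio = scaled / nominal  # (0.6 / 0.8)^2 * (100 / 200) = 0.28125

if __name__ == "__main__":
    print(f"{nominal * 1e3:.2f} mW -> {scaled * 1e3:.2f} mW ({ratio:.1%} of nominal)")
```

Because voltage enters squared, lowering it together with frequency saves far more than lowering frequency alone, which is why DVFS pairs the two.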

The IP used to design AIoT chips must be versatile to support the many different use cases and applications built on the N12e process and other low-power nodes. Higher-performance chips require a more sophisticated low-power strategy that combines the techniques described above.
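The two ways of entering power gating described in the list above differ mainly in what happens to the IP’s state. The toy Python model below (a hypothetical class, not Synopsys IP or any real power-management API) contrasts an IP block with always-on retention logic, which resumes where it left off, against one that power-collapses and must restart from its reset state.

```python
class ToyIpBlock:
    """Hypothetical IP block illustrating the two power-gating entry paths."""

    RESET_STATE = {"config": 0}

    def __init__(self, has_retention: bool) -> None:
        self.has_retention = has_retention  # always-on retention logic present?
        self.state = dict(self.RESET_STATE)
        self.powered = True
        self._saved = None                  # contents of the always-on domain

    def enter_power_gating(self) -> None:
        if self.has_retention:
            # Entry from an active state: save state into the always-on domain.
            self._saved = dict(self.state)
        self.state = {}                     # switched-off logic loses its contents
        self.powered = False

    def exit_power_gating(self) -> None:
        self.powered = True
        if self._saved is not None:
            self.state = self._saved        # restore and resume where it left off
            self._saved = None
        else:
            self.state = dict(self.RESET_STATE)  # collapsed: restart from reset


if __name__ == "__main__":
    for retention in (True, False):
        ip = ToyIpBlock(retention)
        ip.state["config"] = 42
        ip.enter_power_gating()
        ip.exit_power_gating()
        print(retention, ip.state)
```

The retention path costs always-on area and a little standby power but avoids re-initialization; the collapse path saves the most leakage but pays a full restart on wake-up.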

As AIoT devices become even more prevalent in our homes, workplaces, and cities, Synopsys and TSMC will continue to develop even more sophisticated high-performance, low-power solutions to fuel further innovation in this space.

The post How Synopsys IP and TSMC’s N12e Process are Driving AIoT appeared first on ELE Times.

Highlighting and Addressing the Dangers of Electric Cars

Courtesy: Revolutionized

As moving away from gas-powered vehicles becomes a more accessible option, some people wonder about the dangers of electric cars and whether those issues should discourage them from making the switch. First, consumers must remember that nothing they purchase, use or do in life is completely risk-free.

Driving an electric car is not automatically more dangerous than using one that runs on gas. Both types have associated risks that people can mitigate by taking the necessary precautions after learning about them.

Fires in Enclosed Spaces

The individual cells in EV batteries can experience thermal runaway events, leading to fires and explosions. These events are triggered by short circuits, which can result from battery damage, improper charging habits, and poor maintenance.

In this regard, some of the primary dangers of electric cars relate to blazes in enclosed spaces, such as underground parking garages. Researchers are eager to learn more about what happens when those fires occur and how people should reduce the risks.

One Austrian study investigated electric car fires and their effects. A main takeaway was that the potential risks were not significantly more critical than those associated with combustion engine vehicles. However, the research also indicated that the fire-related dangers of electric cars are highest in indoor, multilevel parking garages.

The researchers also identified the need to gather more information about commercial battery-electric vehicles, such as buses. Their work involved intentionally setting the cars on fire and using 30 temperature sensors to determine how quickly the blazes released heat. Those efforts showed that the heat in the area remained low enough for firefighters to tackle the fire safely.

However, that changed when the entire battery was simultaneously and fully on fire. In such cases, the heat release spiked noticeably over a few minutes, suggesting first responders must carefully monitor the fire’s state and progression when tending to it.

Additionally, this research assessed the amount of toxic gases emitted during these events. Although the researchers found comparatively higher levels of hydrogen fluoride and carbon monoxide in EV fires, the highest concentrations fortunately rose above the level of the firefighters putting out the blazes. That kept the firefighters safer and meant the gases did not block potential escape routes.

Real-Life Concerns

Unfortunately, these dangers of electric cars are not hypothetical. The fires can also spread to nearby vehicles, quickly creating larger problems. In one example at an airport, an EV caught fire and the flames spread to four surrounding automobiles.

However, it is also important to remember that these fires are not unique to electric cars. The 2023 fire at London Luton Airport, which affected 1,500 vehicles, makes a strong case for that assertion: investigations determined that it started with a diesel-powered car.

There’s also a related danger, seemingly brought about by people not understanding that electric vehicle fires are relatively rare. One father posted on social media that he was forbidden to park his EV in a hospital garage when taking his child for an appointment. A security guard told him the vehicle could explode and that none were allowed in that garage until a sprinkler system upgrade occurred.

Hospital representatives later released a statement clarifying that EV owners could still use the facility’s main parking area but not the one the parent had tried to enter. However, an image circulated showing an entrance sign reading, “NO ELECTRIC VEHICLES,” with no mention that they would eventually be allowed in the garage. That approach conveys the idea that the hospital is wholly against them without a specified reason.

In any case, the people who must primarily concern themselves with these blazes are those tasked with putting them out. EV fires have specific characteristics that prevent firefighters from dealing with them the same way they would if other vehicles were ablaze. The more professionals learn about these details and keep their knowledge current, the better protected they are from these dangers of electric cars.

Isolated Charging Points

The United States had more than 160,000 places to charge electric vehicles as of 2023. However, some people are concerned about more than the number. They also want to recharge their cars’ batteries in places where they feel safe.

One woman in the United Kingdom experienced this during a 300-mile round trip and had to drive to several charging points before finding one that made her feel safe. The stations she visited before the one she ultimately chose were poorly lit, had little to no activity from other people, and were not always staffed. Her challenges led her to start a business, ChargeSafe, which rates six charging station aspects on a five-point scale.

She also asserts that even one bad experience while charging an electric vehicle is too many. Indeed, some people, particularly women or those traveling alone, may resist stopping at stations that seem too risky. Such concerns arise less often at gas stations, since many operate 24/7 and are almost always thoroughly lit.

A Potential Purchase Barrier

Many people dislike change, and switching to an EV is a significant one for most. Gas-powered cars have become so embedded in modern society that some people would rather keep using them because they are familiar. However, research also suggests that, among women, the lack of safe places to charge could make them hesitate to purchase EVs.

The study gathered perspectives from women in the United States and Canada, examining how charging stations influenced their overall feelings about owning EVs. The results indicated that 43% of respondents in the United States and 30% in Canada had safety-related concerns.

Moreover, 40% of U.S. women said accessible, well-lit charging stations in well-populated areas would influence their vehicle purchasing decisions. The same was true for nearly half of the Canadians. Relatedly, 21% of those in the U.S. and 20% of Canadian respondents indicated tighter charging station security would positively impact their purchasing choices.

Addressing the Dangers of Electric Cars

Whereas people interested in EV ownership commonly research range, prices, and charger availability, they won’t necessarily think about the matters covered here. However, awareness is the first step toward causing or supporting positive change.

Those who already have electric cars should always follow the manufacturer’s instructions for charging the battery. Additionally, they should have the battery checked for physical damage after even minor incidents that could have caused it. Those two simple but proactive measures can reduce the chances of thermal runaway events.

From a charging safety perspective, people should pay attention to related infrastructure updates in places where they live or frequently travel, advocating for EV charging stations to be in well-lit, populated locations that appear inviting and safe.

The post Highlighting and Addressing the Dangers of Electric Cars appeared first on ELE Times.

Big Future in a Small Space: Wireless SoCs Enable Wearable Medical and Wellness Devices to Realize Their Potential

Courtesy: Renesas

The technology of personal medical monitoring is changing incredibly fast. As little as ten years ago, the normal way that a patient would keep track of general health indicators, such as heart rate and blood pressure, or specific indicators such as blood glucose, was through an invasive medical procedure such as a blood test. Such testing would often need to take place away from the home, in a local clinic or a hospital.

Fast forward to today, and patients have it much better. Anyone, not just those receiving treatment from a medical practitioner, can benefit from monitoring vital signs, as well as other indicators of long-term health such as activity and sleep. That’s because a new generation of wearable medical and consumer devices has extraordinary sensor and data processing capabilities built in. The newest types of products, such as continuous glucose monitors (CGM) and smart rings, can report on the wearer’s condition 24/7, yet are so small and light that the user is hardly aware of them, and so convenient and easy to use that they are valuable to anyone.

At the heart of this new type of wearable medical device is advanced wireless connectivity technology: a Bluetooth Low Energy system-on-chip (SoC) for linking to a smartphone, tablet or personal computer, and NFC – the technology that enables contactless payment – for authentication, pairing, configuration, and charging.

Long Battery Life, Tiny Form Factor

The most important limits on the design of both a CGM and a smart ring are the same: space and power. Both devices perform monitoring 24/7, and users expect long battery life to limit how often they have to charge them. This puts a strong emphasis on low power consumption in the wireless SoC, in both active and standby modes.

The size of the device is also a critical design parameter: its form factor has to be comfortable for all-day wear, yet the manufacturer will be eager to pack as much functionality into the device as possible to increase its value to the user. So this kind of wearable device requires wireless systems for connectivity and charging that are themselves small and that need as few external components as possible, to keep the total board footprint to a minimum.

At the same time, these devices perform a range of sophisticated connectivity functions to support both local and remote data access. Despite the size and power constraints, their Bluetooth Low Energy SoC has to provide a robust, reliable connection to the user’s smartphone for data processing and analysis, either locally or in the cloud. Meanwhile, NFC connectivity supports functions such as authentication of accessories and usage tracking, as well as wireless charging. High performance is therefore an essential requirement.

This presents a tough challenge to the product designer. But manufacturers have a solution that is readily available to them: connectivity products from Renesas that save space and consume amazingly little power.

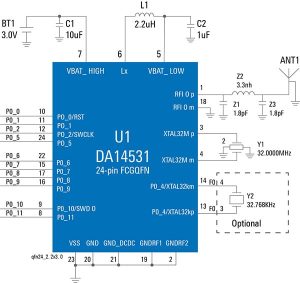

Performance and Low Power for CGM Designs

A typical example is the DA1453x series of Bluetooth SoCs, which combine very low power consumption with high integration, reducing the number of external components on the board and thus saving space in CGM designs.

For example, the Bluetooth 5.1 DA14531 is available in a tiny 2.0mm x 1.7mm package, half the size of any offering from other leading manufacturers. On top of this, it requires only six external passive components and operates with a single crystal for timing input.

Record low hibernation and active power consumption ensure long operating and shelf life with even the smallest disposable batteries: the DA14531 is compatible with alkaline, silver oxide, and coin cell batteries, and includes an internal buck-boost DC/DC converter to extend the useful life of these battery types.

This combination of small size and very low power consumption makes the DA1453x SoCs ideal for a CGM, a small wearable device that has to perform 24/7 monitoring.

Smart Ring Designs: Multiple Functions in One Low-Power Chip

Renesas Bluetooth SoCs such as the DA14695 or DA1459x are striking examples of the twin benefits of low power consumption and high integration for smart rings. The DA14695 SoC pairs a 96MHz Arm Cortex-M33F CPU core, which runs application functions such as processing signals from a smart ring, with a 96MHz Cortex-M0+ core that operates as a sensor node controller and configurable media access controller for the on-board Bluetooth Low Energy v5.2 radio.

The DA14695 offers a remarkable level of integration: on-chip features include a power management IC (PMIC), USB interface and USB charger, motor driver for haptic feedback, and a parallel interface driver for a display screen, as well as strong security protection. Yet in Deep Sleep mode, this high-performance device draws just 10µA.

Contrast this with other Bluetooth SoCs on the market which might provide a CPU, a protocol engine, and a sensor node controller but lack many of the peripheral capabilities in the DA14695, leading to a significant increase in the cost of components and in the amount of space required on the PCB.

Designers of smart rings and other wearable devices can use this type of Renesas Connectivity Solutions product to meet their toughest design requirements.
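To see what the DA14695’s 10µA Deep Sleep figure means in practice, here is a back-of-the-envelope battery-life estimate for a duty-cycled wearable. Only the sleep current comes from the text; the 3 mA active current, the 1% duty cycle, and the 20 mAh battery are hypothetical values chosen for illustration, and the model ignores regulator losses and battery self-discharge.

```python
SLEEP_UA = 10.0       # Deep Sleep current quoted for the DA14695
ACTIVE_UA = 3000.0    # hypothetical active current (3 mA)
DUTY = 0.01           # hypothetical: active 1% of the time
CAPACITY_MAH = 20.0   # hypothetical smart-ring battery


def average_current_ua(active_ua: float, sleep_ua: float, duty: float) -> float:
    """Time-weighted average current of a device that alternates active/sleep."""
    return duty * active_ua + (1 - duty) * sleep_ua


def battery_life_days(capacity_mah: float, avg_ua: float) -> float:
    """Ideal runtime in days: capacity (converted to uAh) / average current / 24 h."""
    return capacity_mah * 1000 / avg_ua / 24


avg = average_current_ua(ACTIVE_UA, SLEEP_UA, DUTY)        # 30 + 9.9 = 39.9 uA
days = battery_life_days(CAPACITY_MAH, avg)                # roughly 21 days
sleep_only_days = battery_life_days(CAPACITY_MAH, SLEEP_UA)  # roughly 83 days

if __name__ == "__main__":
    print(f"average {avg:.1f} uA -> {days:.1f} days (sleep only: {sleep_only_days:.0f} days)")
```

With these numbers, the 1% of time spent active contributes 30µA, three times the sleep floor, so in a duty-cycled design the active phase rather than the sleep current usually dominates the energy budget.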

All-in-One Charger and Data Exchange Component

A similar approach to power and space saving is enabled by Renesas’ NFC technology. NFC is best known for its use in contactless payment terminals, enabling a smartphone or smartwatch to securely exchange data packets to authorize a financial transaction.

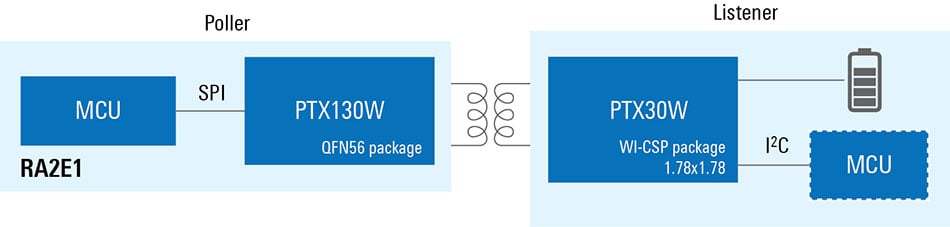

In a smart ring, this data exchange capability can also be used for contactless payments. But the same PTX30W NFC tag used for data exchange also enables NFC wireless charging.

Here again, Renesas has performed a miracle of integration to save space: the PTX30W packs an NFC tag, rectifier, limiter, battery charger circuit, and a dedicated core to handle the NFC wireless charging protocol in a miniature 1.78mm x 1.78mm WLCSP package.

The PTX30W pairs with the Renesas PTX130W, a dedicated NFC charging transmitter, called a poller, embedded in the smart ring’s charging case. The PTX130W maximizes power transfer and enables fast charging thanks to its direct antenna connection technology (DiRAC). This technology also makes the PTX130W simpler to implement in a charging case design than competing products, as it eliminates the need for EMC filters and eases antenna matching. The superior RF performance of the PTX130W enables the use of a small antenna and allows flexible placement of the poller’s and listener’s antennas.

By eliminating the need for additional components and enabling the use of a small antenna, the PTX30W provides a charging solution that can fit onto the tiny PCB of a smart ring such as the Ring One from Muse Wearables.

A Renesas Ecosystem for Fast and Efficient Product Development

High performance and seamless integration are watchwords of the Renesas Connectivity Solutions portfolio, which dovetails perfectly with the Renesas line of microcontrollers and applications processors. The portfolio also gives users access to the extensive suite of tools and resources available from Renesas for development, including the e² studio integrated development environment, Flexible Software Package (FSP) bundles for firmware, development kits, and Winning Combinations – pre-vetted sets of compatible components for specific product designs.

The post Big Future in a Small Space: Wireless SoCs Enable Wearable Medical and Wellness Devices to Realize Their Potential appeared first on ELE Times.

Qualcomm Linux sample apps – building blocks for AI inference and video in IoT applications (Part 1 of 2)

Courtesy: Qualcomm

In this post we’ll explore the first two building-block applications.

1. Multi-camera streaming

The command-line application gst-multi-camera-example demonstrates streaming from two camera sensors simultaneously. It can apply side-by-side composition of the video streams to show on a display device, or it can encode and store the streams to files.

The application pipeline looks like this:

The application supports two configurations:

- Composition and display – The qtimmfsrc plugin on camera 0 and camera 1 captures the data from the two camera sensors. qtivcomposer performs the composition, then waylandsink displays the streams side by side on the screen.

- Video encoding – The qtimmfsrc plugin on camera 0 and camera 1 captures the data from the two camera sensors and passes it to the v4l2h264enc plugin. The plugin encodes and compresses the camera streams to H.264 format, then hands them off for parsing and multiplexing using the h264parse and mp4mux plugins, respectively. Finally, the streams are handed off to the filesink plugin, which saves them as files.
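To make the two configurations concrete, here is a minimal Python sketch that assembles gst-launch-1.0-style pipeline descriptions from the plugins named above. The element names come from the article; the `camera=` property on qtimmfsrc, the output file names, and the helpers `composition_pipeline` and `encoding_pipeline` are hypothetical, not the sample app’s actual code. Caps filters and the on-screen placement of each stream are deliberately left out.

```python
"""Hypothetical sketch: pipeline strings for the two multi-camera configurations.

Element names are taken from the article; the `camera=` property on
qtimmfsrc and the output file names are assumptions.
"""


def composition_pipeline(cameras=(0, 1)):
    """Configuration 1: capture each camera, compose the streams, display them."""
    # A named composer lets every camera branch link into it with "mix."
    parts = ["qtivcomposer name=mix ! waylandsink"]
    for cam in cameras:
        parts.append(f"qtimmfsrc camera={cam} ! mix.")
    return " ".join(parts)


def encoding_pipeline(cameras=(0, 1), prefix="cam"):
    """Configuration 2: capture, encode to H.264, mux to MP4, one file per camera."""
    branches = [
        f"qtimmfsrc camera={cam} ! v4l2h264enc ! h264parse ! mp4mux "
        f"! filesink location={prefix}{cam}.mp4"
        for cam in cameras
    ]
    # Independent branches separated by spaces run inside a single pipeline.
    return " ".join(branches)


if __name__ == "__main__":
    # -e sends EOS on shutdown so that mp4mux can finalize the MP4 files.
    print("gst-launch-1.0 -e " + composition_pipeline())
    print("gst-launch-1.0 -e " + encoding_pipeline())
```

The `-e` (end-of-stream on shutdown) option matters for the encoding configuration: without an EOS event, mp4mux cannot write a complete MP4 file.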

Here’s an example of the output from the first configuration. The right-hand image is monochrome because the second camera sensor on the development kit is monochrome.

When would you use this application?

gst-multi-camera-example is a building block for capturing data from two camera sensors, with options for either composing and displaying the video streams or encoding and storing the streams to files. You can use this sample app as the basis for your own camera capture/encoding applications, including dashcams and stereo cameras.

2. Video wall – Multi-channel video decode and display

The command-line application gst-concurrent-videoplay-composition facilitates concurrent video decode and playback for AVC-coded videos. The app performs composition on multiple video streams coming from files or the network (e.g., IP cameras) for display as a video wall.

The application can take multiple video files as input (for example, 4 or 8), decode all the compressed videos, scale them, and compose them into a video wall. It requires at least one input video file in MP4 format with AVC (H.264) video.
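Scaling and composing N streams into a wall comes down to assigning each stream a tile on the display. The Python sketch below shows one simple way to do that, assuming a near-square grid and a 1920x1080 output; the function name, the grid rule, and the resolution are illustrative assumptions, and the sample app may lay out its tiles differently.

```python
import math


def video_wall_layout(n_streams, width=1920, height=1080):
    """Return one (x, y, w, h) tile per stream on a near-square grid.

    Hypothetical helper: the grid rule and default resolution are assumptions.
    """
    if n_streams < 1:
        raise ValueError("the video wall needs at least one input stream")
    cols = math.ceil(math.sqrt(n_streams))  # e.g. 4 streams -> 2 columns
    rows = math.ceil(n_streams / cols)      # enough rows for every stream
    tile_w, tile_h = width // cols, height // rows
    # Fill the grid left to right, top to bottom.
    return [
        ((i % cols) * tile_w, (i // cols) * tile_h, tile_w, tile_h)
        for i in range(n_streams)
    ]


if __name__ == "__main__":
    for tile in video_wall_layout(4):
        print(tile)
```

With 8 streams this rule produces a 3x3 grid of 640x360 tiles with one cell left empty; a 4x2 grid would be an equally reasonable choice if the empty cell is undesirable.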

The application pipeline looks like this for 4 channels:

Each channel uses plugins to perform the following processing:

- Reads compressed video data from a file using filesrc.

- Demultiplexes the file with qtdemux.

- Parses H.264 video streams using h264parse.

- Decodes the streams using v4l2h264dec.

The decoded streams from all channels are then composed together using qtivcomposer and displayed using waylandsink.
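The per-channel chain described above can be sketched as a Python function that emits a gst-launch-1.0-style description for any number of input files. This is a hypothetical illustration built from the plugin names in the article, not the sample app’s source. It relies on gst-launch’s delayed linking to connect qtdemux’s video pad, and it omits any per-tile position or scaling settings on the composer.

```python
def video_wall_pipeline(files):
    """Build a hypothetical N-channel decode-and-compose pipeline description."""
    if not files:
        raise ValueError("at least one MP4 (AVC) input file is required")
    # One shared composer feeding the display.
    parts = ["qtivcomposer name=mix ! waylandsink"]
    for path in files:
        # Per channel: read file -> demux MP4 -> parse H.264 -> decode -> composer.
        parts.append(
            f"filesrc location={path} ! qtdemux ! h264parse ! v4l2h264dec ! mix."
        )
    return " ".join(parts)


if __name__ == "__main__":
    inputs = [f"video{i}.mp4" for i in range(4)]  # hypothetical file names
    print("gst-launch-1.0 " + video_wall_pipeline(inputs))
```

Because every channel is an identical chain, going from 4 to 8 inputs only changes the length of the file list.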

Here’s an example of using the app gst-concurrent-videoplay-composition on 4 video streams:

When would you use this application?

With gst-concurrent-videoplay-composition you can decode multiple compressed video streams, then compose them into a video wall; for example, in retail spaces and digital signage. In an edge box for video surveillance, you can use it to capture input from multiple IP cameras and display it on a single screen. In a video conferencing application, you can process and display feeds from multiple people on the call, with each participant streaming a video.

The post Qualcomm Linux sample apps – building blocks for AI inference and video in IoT applications (Part 1 of 2) appeared first on ELE Times.

Powering the Future of IoT: The Role of 5G RedCap in Expanding Device Connectivity

The global adoption of 5G wireless networks has been slower than anticipated, with high costs, limited coverage, and the lack of necessity for some advanced features contributing to this lag. However, the introduction of the Reduced Capability (RedCap) standard by the 3rd Generation Partnership Project (3GPP) in 2021 aims to accelerate 5G’s expansion into new markets, including industrial, medical, home and buildings, and security sectors.

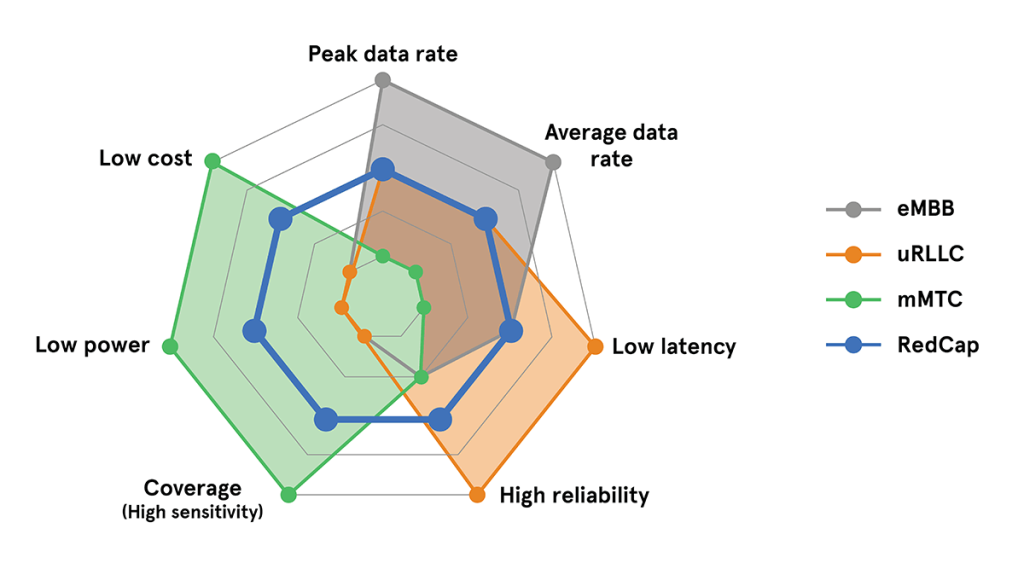

5G relies on three main pillars all running inside the 5G Core: enhanced Mobile Broadband (eMBB), ultra-Reliable Low Latency Communication (uRLLC) and massive Machine Type Communication (mMTC). 5G RedCap addresses applications that fall between these extremes. (Source: 3GPP)

What is 5G RedCap?

5G RedCap, also known as 5G NR-Light or New Radio-Lite, is a simplified version of the 5G standard that bridges the gap between 4G and 5G. It is designed for use cases with minimal hardware requirements, where ultra-high data rates, ultra-low latency, or extremely low power consumption are not essential, but reliable throughput is still necessary. RedCap devices are less complex and more cost-effective than the baseline 5G devices defined in 3GPP Release 15.

One of the key features of RedCap devices is their ability to operate with a single receive antenna, which reduces complexity and integration costs. These devices also incorporate low-power features such as radio resource management (RRM) relaxation and extended Discontinuous Reception (eDRX). RRM relaxation lets stationary or slow-moving devices perform fewer neighbor-cell measurements, and eDRX lets a device sleep for longer between the moments it checks for incoming pages. These enhancements therefore suit static objects and devices that primarily upload data to the cloud, which can benefit significantly from the resulting power savings.
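Why longer sleep cycles matter can be shown with a simple duty-cycle model: the device draws a high current while it wakes to monitor paging and a tiny sleep current for the rest of the cycle, so stretching the cycle pulls the average current toward the sleep current. The Python sketch below uses purely illustrative current, timing, and battery values (not taken from any RedCap datasheet or 3GPP specification), and it ignores uplink transmissions and the neighbor-cell measurements that RRM relaxation reduces.

```python
def average_current_ma(sleep_ma, wake_ma, wake_s, cycle_s):
    """Average current of a device that wakes for wake_s seconds every cycle_s seconds."""
    if not 0 < wake_s <= cycle_s:
        raise ValueError("wake time must be positive and no longer than the cycle")
    return (wake_ma * wake_s + sleep_ma * (cycle_s - wake_s)) / cycle_s


def battery_life_days(capacity_mah, avg_ma):
    """Idealized battery life, ignoring self-discharge and all other loads."""
    return capacity_mah / avg_ma / 24


if __name__ == "__main__":
    # Illustrative values only: 5 uA sleep, 50 mA for 100 ms per wake-up,
    # and a 1000 mAh battery.
    for cycle_s in (1.28, 81.92):
        avg = average_current_ma(sleep_ma=0.005, wake_ma=50.0, wake_s=0.1, cycle_s=cycle_s)
        print(f"cycle {cycle_s:>6} s: {avg:.3f} mA, ~{battery_life_days(1000, avg):.0f} days")
```

Under these assumed numbers, stretching the cycle from 1.28 s to 81.92 s cuts the average current by more than a factor of 50, which is why devices that rarely need to be reached gain the most from eDRX.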

Looking ahead, Enhanced Reduced Capability (eRedCap) is set to build on RedCap by targeting even lower throughput, and therefore even simpler and cheaper devices, while using the same 5G standalone (SA) network.

5G RedCap: The Next Step Beyond LTE for IoT Connectivity

5G RedCap and its enhanced version, eRedCap, represent the future of mid-end cellular IoT connectivity. These technologies are poised to replace LTE Cat 4 and LTE Cat 1 in the coming years, offering a more efficient solution for industrial, medical, and automotive markets that demand longevity. While it may be too early to discuss the phasing out of 4G, it is clear that from a cellular network and chipset perspective, further evolutions of 4G are unlikely.

RedCap increases the addressable 5G market by providing a functional middle ground between high-performance 5G and low-end cellular communication technologies like LTE-M and NB-IoT. Some use cases are already well-served by LTE, but new opportunities are emerging that are better suited to RedCap’s capabilities.

5G RedCap Set to Capture 18% of Cellular IoT Module Shipments by 2030

The market potential for 5G RedCap is significant, particularly in regions where cost is a critical factor for the widespread adoption of digital technologies. According to the Global Cellular IoT Module Forecast, 5G RedCap modules are projected to represent 18% of all cellular IoT module shipments by 2030. This projection highlights the growing importance of RedCap technology, especially in developing nations.

5G RedCap is specifically designed to address emerging use cases that are not adequately served either by existing standards such as NB-IoT, LTE Cat-M1, and 4G LTE or by full-featured 5G. With chipsets already available, 5G RedCap is poised for growth, offering moderate data rates and dependable connectivity while optimizing power consumption to significantly extend device battery life.

The flexibility and network advantages of 5G RedCap, including lower latency, higher speeds, and improved power efficiency compared to previous LTE generations, position it as a superior choice for future mass IoT deployments.

5G RedCap Powers Wearables, Surveillance, Medical Devices, and Industrial IoT: Use Cases

5G RedCap is ideally suited for a diverse array of IoT applications, such as:

- Smart Wearables: Devices like smartwatches and low-end XR glasses can benefit from RedCap’s balance of performance and power efficiency.

- Video Surveillance: RedCap’s reliable connectivity and higher data rates are ideal for video surveillance applications.

- Medical Devices: Health monitors and other medical devices can leverage RedCap’s low latency and power efficiency.

- Utility/Smart Grid/Industrial Gateways: RedCap’s dependable connectivity makes it an excellent choice for utility and industrial applications.

5G RedCap provides several important advantages for IoT applications:

- Increased Peak Data Rate: RedCap can achieve peak data rates up to three times higher than LTE Cat 4, making it ideal for applications that demand greater data throughput.

- Lower Latency: RedCap offers lower latency than existing 4G LTE technologies, supporting near real-time data communication for applications like industrial automation and smart grids.

- Lower Power Consumption: By improving power efficiency, RedCap can extend the battery life of IoT devices, which is critical for long-term deployments.

5G RedCap represents a significant leap forward in 5G technology, specifically tailored to bridge the gap between high-speed enhanced mobile broadband (eMBB), ultra-reliable low latency communications (uRLLC), and low-throughput, battery-efficient Massive Machine-Type Communication (mMTC) use cases.

With chipsets already available and the potential for significant market growth, 5G RedCap is set to play a pivotal role in future IoT deployments, offering a flexible, efficient, and cost-effective solution for a wide range of applications. As 5G continues to evolve, RedCap’s role in expanding device connectivity and driving innovation will only grow, making it an essential technology for semiconductor manufacturers, OEMs, and the broader IoT ecosystem.

The post Powering the Future of IoT: The Role of 5G RedCap in Expanding Device Connectivity appeared first on ELE Times.

Automotive Industry Transformed: Innovations in AI, Architecture, and Semiconductors Drive Future Mobility

The automotive industry is on the brink of transformative change, with the next three years set to bring more advancements than the previous decade. As cars evolve into smarter, safer, and more efficient machines, software and digital technologies are driving this revolution, reshaping vehicles from the ground up. Key trends such as software-defined vehicles, autonomous driving, and electric vehicles (EVs) are emerging simultaneously, guiding the industry toward a new era of innovation.

This rapid evolution is opening up new avenues for differentiation in a highly competitive market. However, it also presents challenges, such as talent shortages in software and integrated chip development, and the need for significant investment in infrastructure and technology. In this article, we explore the critical areas where technological transformation is impacting the automotive industry and the innovative strategies stakeholders are adopting to stay ahead of the curve.

Autonomous Driving Revolution: AI and Sensors Transform Vehicle Safety

Autonomous driving stands out as one of the most groundbreaking developments in automotive history. Advances in artificial intelligence (AI), sensor technology, and high-speed connectivity are bringing us closer to a future where self-driving cars are the norm. These advancements are also increasing the demand for software-defined vehicles that can receive remote updates to introduce new functions and features, such as enhanced driver assistance, advanced safety systems, and improved connectivity and infotainment options. This evolution promises to make driving safer and more convenient while creating new opportunities for innovation.

Autonomous driving depends heavily on a complex sensor stack and sensor fusion technology, which act as the vehicle’s perceptive systems, or “eyes and ears.” This technology includes LiDARs, radars, cameras, ultrasonic sensors, and GPS data, all of which work together to give vehicles a comprehensive understanding of their surroundings. By combining data from all these sensors, vehicles can create a more accurate environmental model, improving their decision-making and task execution capabilities. This process, known as sensor fusion, is crucial for enabling cars to navigate their environments efficiently.
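As a minimal illustration of how combining sensors yields a more accurate estimate, the Python sketch below fuses independent distance readings of the same object by inverse-variance weighting, which is the minimum-variance linear combination when each sensor’s error is independent and zero-mean. The sensor readings and noise levels are hypothetical, and production stacks typically use Kalman-style trackers or learned fusion networks rather than this one-shot weighted average.

```python
import math
from dataclasses import dataclass


@dataclass
class Reading:
    sensor: str
    distance_m: float  # measured distance to the object
    std_m: float       # 1-sigma measurement noise of this sensor


def fuse(readings):
    """Inverse-variance weighted fusion of independent distance readings."""
    if not readings:
        raise ValueError("need at least one reading")
    weights = [1.0 / r.std_m ** 2 for r in readings]  # precise sensors weigh more
    total = sum(weights)
    estimate = sum(w * r.distance_m for w, r in zip(weights, readings)) / total
    fused_std = math.sqrt(1.0 / total)  # never larger than the best single sensor
    return estimate, fused_std


if __name__ == "__main__":
    readings = [
        Reading("lidar", 25.0, 0.1),   # hypothetical values
        Reading("radar", 25.4, 0.5),
        Reading("camera", 24.0, 2.0),
    ]
    est, std = fuse(readings)
    print(f"fused distance {est:.2f} m +/- {std:.2f} m")
```

The fused uncertainty is always smaller than that of the best individual sensor, which is the quantitative sense in which fusion produces a "more accurate environmental model".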

Continuous advancements in sensor fusion algorithms, powered by AI, are enhancing object classification, scenario interpretation, and hazard prediction. These improvements are essential for real-time driving decisions and safety, making autonomous driving not only possible but also reliable. The rise of autonomous driving represents a significant shift in the industry, promising safer roads and more leisure time for drivers. However, it also demands rigorous testing and close regulation, as manufacturers and tech giants work to lay the foundation for the future of mobility and redefine transportation.