ELE Times

New Renesas MCUs conserve energy with high-res analog and OTA update support

Low-Power, Streamlined Devices Target Energy Management, Home Appliances, Building Automation and Medical Applications

- Core: 48MHz Arm Cortex-M23

- Memory: 512KB integrated, dual-bank Flash memory and 48KB SRAM

- Analog Peripherals: 24-bit Sigma-Delta ADC with digital filter, 12-bit ADC, and temperature sensor

- Packages: 100-, 80- and 64-pin LFQFP
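To make the 24-bit resolution concrete, the sketch below converts a raw sigma-delta code to an input voltage. It assumes a bipolar, two's-complement output, a 1.25V reference and a simple gain divisor. These are illustrative values, not figures from the Renesas datasheet, and the helper names are hypothetical.

```python
def sign_extend_24(raw: int) -> int:
    """Interpret the low 24 bits of `raw` as a two's-complement value."""
    raw &= 0xFFFFFF
    return raw - (1 << 24) if raw & 0x800000 else raw


def code_to_volts(raw: int, vref: float = 1.25, gain: int = 1) -> float:
    """Convert a 24-bit bipolar code to volts (assumed full scale = +/- vref / gain)."""
    code = sign_extend_24(raw)
    # One LSB spans vref / 2**23 for a bipolar 24-bit converter; divide by the gain.
    return code * vref / (gain * (1 << 23))


if __name__ == "__main__":
    for raw in (0x000000, 0x400000, 0x7FFFFF, 0x800000, 0xFFFFFF):
        print(f"0x{raw:06X} -> {code_to_volts(raw):+.9f} V")
```

With these assumed values one LSB is 1.25V / 2^23, roughly 0.15µV before gain.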

The post New Renesas MCUs conserve energy with high-res analog and OTA update support appeared first on ELE Times.

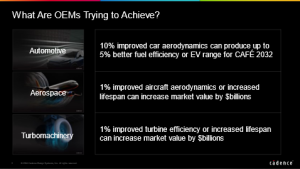

Cadence and NVIDIA Unveil Groundbreaking Generative AI and Accelerated Compute-Driven Innovations

Cadence Reality Digital Twin Platform integrated with NVIDIA Omniverse and Orion molecular design platform accelerated with NVIDIA BioNeMo will transform the future of design

Cadence Design Systems, Inc. today announced an expansion of its multi-year collaboration with NVIDIA across EDA, system design and analysis, digital biology and AI with the unveiling of two transformative solutions to reinvent design using accelerated computing and generative AI.

First, the new Cadence Reality Digital Twin Platform is the industry’s pioneering comprehensive digital twin solution to facilitate speed-of-light acceleration of the design, simulation and optimization of data centers across multiple industries. The platform virtualizes the entire data center and uses AI, high-performance computing (HPC) and physics-based simulation to improve data center energy efficiency by up to 30%.

The Cadence Reality platform’s integration with NVIDIA Omniverse brings OpenUSD data interoperability and physically based rendering to the digital twin solution—helping accelerate data center design and simulation workflows by 30X.

Second, the companies are collaborating on generative AI to dramatically accelerate approaches to drug discovery. Cadence’s cloud-native molecular design platform Orion® will now be supercharged with NVIDIA’s generative AI tool, NVIDIA BioNeMo, and NVIDIA microservices for drug discovery to broaden therapeutic design capabilities and shorten time to trusted results. The collaboration brings together decades of expertise in scientific software and accelerated computing from the two companies to deliver transformative approaches to drug discovery. With on-demand GPU access at unprecedented scale, pharmaceutical companies can quickly and reliably generate and assess design hypotheses across a range of therapeutic modalities, including biologics, peptides and small molecules.

“The broadening collaboration between NVIDIA and Cadence is having a transformative impact on everything from data center design to drug discovery,” said Dr. Anirudh Devgan, president and CEO, Cadence. “As AI rapidly becomes a keystone technology driving data center and data center workload expansion, the Cadence Reality Digital Twin Platform integration with NVIDIA Omniverse will optimize every aspect of data center design and operations, use energy more efficiently, and pave the way for a more efficient, resilient, and environmentally friendly future. Our groundbreaking efforts with NVIDIA to integrate BioNeMo with our industry-leading Orion molecular design tools hold great promise for unlocking new ideas and transforming the future of therapeutics and drug discovery. Together, NVIDIA and Cadence are leading the AI revolution.”

“Digital twins will transform manufacturing, drug discovery and countless other industries,” said Jensen Huang, founder and CEO of NVIDIA. “Using NVIDIA Omniverse and generative AI technologies, Cadence can deliver simulation and digitalization technologies to benefit individuals, companies and societies in ways we have yet to imagine.”

Growing Importance of Digital Twin Technology

Digital twin technology is becoming increasingly critical to designers and operators of complex data center systems in the AI era. By creating a virtual replica of a physical system, a digital twin can use real-time data to simulate that system’s behavior, performance and interactions under various conditions. The Cadence Reality platform provides visibility across the entire value chain, enabling data center designers and operators to simulate the performance of integrated liquid and air-cooling systems, visualize the performance of data centers and plan for what-if scenarios.

The company’s collaboration with NVIDIA also expands the Orion drug discovery platform with key capabilities, including access to BioNeMo models for structure prediction, small molecule generation and molecular property prediction. Molecules generated with BioNeMo can then be profiled, iteratively refined and further designed with Orion tools.

Today’s announcements build upon Cadence and NVIDIA’s long-standing collaboration in areas such as:

- AI-driven digital and custom IC design, including PPA (power, performance and area) optimization and schedule and cost reduction for NVIDIA GPUs with Cadence Innovus and Cadence Cerebrus solutions

- Over 20 years of partnership in hardware and software verification, including Palladium, Protium, and now Cadence Verisium technologies

- System design and analysis, including GPU-optimized Cadence Fidelity CFD Software and the revolutionary Cadence Millennium Enterprise Multiphysics Platform

These announcements also open a new chapter of Cadence’s Intelligent System Design strategy to help customers develop differentiated products across a wide range of industries and market verticals.


STMicroelectronics Reports on Resolutions to be Proposed at the 2024 Annual General Meeting of Shareholders

STMicroelectronics, a global semiconductor leader serving customers across the spectrum of electronics applications, announced the resolutions to be submitted for adoption at the Annual General Meeting of Shareholders (AGM) which will be held in Amsterdam, the Netherlands, on May 22, 2024.

The resolutions, proposed by the Supervisory Board, are:

• The adoption of the Remuneration Policy for the Supervisory Board;

• The adoption of the Company’s statutory annual accounts for the year ended December 31, 2023, prepared in accordance with International Financial Reporting Standards (IFRS). The 2023 statutory annual accounts were filed with the Netherlands Authority for the Financial Markets (AFM) on March 21, 2024 and are posted on the Company’s website (www.st.com) and the AFM’s website (www.afm.nl);

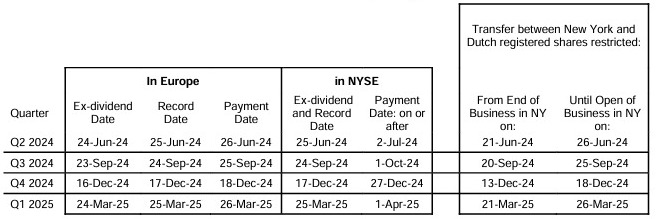

• The distribution of a cash dividend of US$ 0.36 per outstanding share of the Company’s common stock, to be distributed in quarterly installments of US$ 0.09 in each of the second, third and fourth quarters of 2024 and first quarter of 2025 to shareholders of record in the month of each quarterly payment as per the table below;

• The amendment to the Company’s Articles of Association;

• The adoption of the Remuneration Policy for the Managing Board;

• The reappointment of Mr. Jean-Marc Chery as member and Chairman of the Managing Board for a three-year term to expire at the end of the 2027 AGM;

• The approval of the stock-based portion of the compensation of the President and CEO;

• The appointment of Mr. Lorenzo Grandi as member of the Managing Board for a three-year term to expire at the end of the 2027 AGM;

• The approval of the stock-based portion of the compensation of the Chief Financial Officer;

• The approval of a new three-year Unvested Stock Award Plan for Management and Key Employees;

• The reappointment of EY as external auditor for the 2024 and 2025 financial years;

• The reappointment of Mr. Nicolas Dufourcq, as member of the Supervisory Board, for a three-year term to expire at the end of the 2027 AGM;

• The reappointment of Ms. Janet Davidson, as member of the Supervisory Board, for a one-year term to expire at the end of the 2025 AGM;

• The appointment of Mr. Pascal Daloz, as member of the Supervisory Board, for a three-year term expiring at the 2027 AGM, in replacement of Mr. Yann Delabrière whose mandate will expire at the end of the 2024 AGM;

• The authorization to the Managing Board, until the conclusion of the 2025 AGM, to repurchase shares, subject to the approval of the Supervisory Board;

• The delegation to the Supervisory Board of the authority to issue new common shares, to grant rights to subscribe for such shares, and to limit and/or exclude existing shareholders’ pre-emptive rights on common shares, until the end of the 2025 AGM;

• The discharge of the member of the Managing Board; and

• The discharge of the members of the Supervisory Board.

The record date for all shareholders to participate at the Annual General Meeting of Shareholders will be April 24, 2024. The complete agenda and all relevant detailed information concerning the 2024 AGM, as well as all related AGM materials, are available on the Company’s website (www.st.com) and made available to shareholders in compliance with legal requirements as of March 21, 2024.

Following rule amendments by the Securities and Exchange Commission (SEC) and conforming FINRA rule changes, beginning on May 28, 2024, the standard settlement cycle in the US market will become the next business day after the trade (T+1). The European settlement cycle will remain T+2.
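The practical effect of the T+1/T+2 split can be illustrated with a small business-day calculation. This is a sketch that skips weekends only; it ignores exchange holidays, so it is not a substitute for the actual US or European settlement calendars, and the function names are hypothetical.

```python
from datetime import date, timedelta


def add_business_days(start: date, n: int) -> date:
    """Advance `n` business days, skipping Saturdays and Sundays only.

    Exchange holidays are deliberately ignored to keep the sketch short.
    """
    day = start
    remaining = n
    while remaining > 0:
        day += timedelta(days=1)
        if day.weekday() < 5:  # Monday=0 ... Friday=4
            remaining -= 1
    return day


def settlement_date(trade: date, cycle_days: int) -> date:
    """Settlement date for a T+cycle_days cycle (1 for the US from May 28, 2024; 2 for Europe)."""
    return add_business_days(trade, cycle_days)


if __name__ == "__main__":
    trade = date(2024, 5, 31)  # a Friday
    print("US (T+1):", settlement_date(trade, 1))      # US (T+1): 2024-06-03
    print("Europe (T+2):", settlement_date(trade, 2))  # Europe (T+2): 2024-06-04
```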

The table below summarizes the full schedule for the quarterly dividends:

Precision at all Altitudes for Aerospace: Addressing the Challenges of Additive Manufacturing in Aerospace Production.

The aerospace industry in India is one of the fastest-growing sectors, with an increasingly strong domestic manufacturing base. To gain further competitive advantage, new technologies such as additive manufacturing have been gaining importance in the recent past. While this method reduces the cost of building low-volume parts and enables the industry to push the limits of efficiency through extremely accurate and complex designs, the quality challenges of this new manufacturing process must also be thoroughly addressed. High-precision metrology solutions are not only an opportunity to optimize the manufacturing process but also offer valuable insight for materials science and ensure the quality of the output.

Additive Manufacturing as an Opportunity in Aerospace

Air travel, a preferred mode of transportation, relies on aircraft parts meeting stringent quality standards. For instance, before a supplier commences production, up to 1,500 inspection features of a turbine blade must be verified against tight tolerance ranges at every production step. A further challenge lies in vital maintenance and repair operations (MRO), which often involve replacing complex, quality-intensive, low-volume or single parts. Traditional manufacturing processes for MRO are both time- and cost-intensive and cannot efficiently deliver the required complexity and accuracy. Consequently, additive manufacturing, commonly known as 3D printing, is increasingly integrated into the aerospace production chain in India, positioning the industry as a pioneer in additive manufacturing innovation. However, the adoption of this technology brings its own challenges, which our experience suggests can be effectively addressed through high-quality metrology solutions.
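The inspection requirement described above amounts to checking each measured feature against its tolerance band. The sketch below shows that check in its simplest form; the `Feature` class, feature names, nominal values and tolerances are hypothetical, and real inspection plans come from the part drawing and the supplier's quality requirements.

```python
from dataclasses import dataclass


@dataclass
class Feature:
    name: str
    nominal: float    # mm
    lower_tol: float  # mm, zero or negative
    upper_tol: float  # mm, zero or positive
    measured: float   # mm

    def in_tolerance(self) -> bool:
        # A feature passes if the measurement lies inside [nominal + lower, nominal + upper].
        return self.nominal + self.lower_tol <= self.measured <= self.nominal + self.upper_tol


def out_of_tolerance(features: list[Feature]) -> list[str]:
    """Return the names of all features that fail their tolerance band."""
    return [f.name for f in features if not f.in_tolerance()]


if __name__ == "__main__":
    # Hypothetical turbine-blade features; a real plan can list up to 1,500 of them.
    blade = [
        Feature("root_width", 12.000, -0.010, 0.010, 12.004),
        Feature("tip_thickness", 1.500, -0.005, 0.005, 1.507),
        Feature("chord_length", 40.000, -0.020, 0.020, 39.975),
    ]
    print(out_of_tolerance(blade))  # ['tip_thickness', 'chord_length']
```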

Hitting the Brake: The Process and Challenges of Additive Manufacturing

Powder is the building block of additively manufactured parts. The particles are small, typically ranging from a few micrometers to tens of micrometers in diameter. Their size distribution and shape influence spreadability and hence the defects that might occur during the process. Among other factors, powder recycling and aging also affect defect density. A uniformly distributed powder bed is the essential basis for a stable and reliable additive manufacturing process. Poor powder quality, unsuitable powder rheology or incorrect process parameters can cause voids to form in the final structure.

Unlike traditional manufacturing methods, the additive manufacturing process requires powders to be melted layer by layer during the build. Melt temperatures and process parameters greatly affect the crystallography and, as a consequence, part properties. After printing, the part is still attached to the build plate. It is then heat-treated for stress relief and removed from the build plate with a band saw or wire EDM. Some parts are then heat-treated again to change their microstructure. These processes can influence the characteristics and accuracy of the part, affecting its quality and safety. After that, dimensional accuracy and surface finish are critical to ensure proper assembly and a consistent fit across multiple parts. Even so, additive manufacturing is an immense opportunity, since it enables unprecedented control over material microstructures. Analyzing and understanding these structures is key to an efficient, optimized process that delivers the required quality and safety.
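Powder size distribution is commonly summarized by the D10, D50 and D90 diameters (the sizes below which 10%, 50% and 90% of particles fall) and by the span, (D90 - D10)/D50. The sketch below computes number-based values from individual particle diameters, for example as measured from microscope images. The function name and sample data are hypothetical, and laser-diffraction instruments usually report volume-weighted distributions, which this sketch does not compute.

```python
import statistics


def psd_summary(diameters_um: list[float]) -> dict[str, float]:
    """Number-based D10/D50/D90 and span from individual particle diameters (micrometers).

    Uses linearly interpolated deciles from the standard library.
    """
    if len(diameters_um) < 2:
        raise ValueError("need at least two particle measurements")
    deciles = statistics.quantiles(diameters_um, n=10, method="inclusive")
    d10, d50, d90 = deciles[0], deciles[4], deciles[8]
    return {"D10": d10, "D50": d50, "D90": d90, "span": (d90 - d10) / d50}


if __name__ == "__main__":
    # Hypothetical diameters measured on a small powder sample.
    sample = [18.2, 22.5, 25.1, 27.9, 30.4, 31.0, 33.8, 36.5, 39.9, 44.7, 48.3]
    for key, value in psd_summary(sample).items():
        print(f"{key}: {value:.2f}")
```

A wider span indicates a broader distribution, which is one of the inputs to the spreadability concerns described above.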

Precision at all Altitudes: Overcoming the Challenges

Utilizing cutting-edge measurement and inspection equipment is crucial for meeting aerospace parts’ sophisticated requirements. Our metrology solutions can be implemented throughout the manufacturing process, enabling immediate corrective actions, ensuring high-quality output and promoting the sustainable use of resources. We employ light or electron microscopes and computed tomography (CT) for continuous powder characterization, identifying the sources of quality issues in the powder bed during or after printing. Defective parts can be detected and fixed during the build, avoiding downstream costs and increasing yield. Optical 3D scanners, coordinate measuring machines (CMMs) and high-resolution CT validate accuracy, inspect finished parts and analyze internal structures, helping to define optimal settings for future processes. Comprehensive data analysis across the process chain, facilitated by IoT-enabled metrology devices and ZEISS PiWeb software, establishes correlations between process and quality data and supports an efficient, optimized process. Investing in high-quality metrology and research equipment is indispensable for ensuring safety and quality in the aerospace industry, particularly as ‘Make in India’ propels the sector’s growth, with additive manufacturing playing a vital role in material science and process optimization.

ZEISS, as a key global provider, plays a pivotal role with its Blue Line process, contributing to the industry’s success through precise metrology and quality solutions. Moreover, the company’s commitment to excellence extends beyond supplying equipment, as it actively engages in collaborative ventures. The company’s globally unique application lab not only facilitates joint customer projects and scientific studies but also serves as a dynamic hub for hands-on demonstrations. This collaborative approach fosters a rich environment for learning and knowledge sharing, ensuring that the aerospace industry benefits not only from cutting-edge technology but also from shared insights and collective expertise.

In my opinion, the aerospace industry in India stands at the forefront of innovation and technological advancements, embracing additive manufacturing as a crucial element in its production chain. By leveraging cutting-edge measurement and inspection equipment throughout the entire manufacturing process, the industry can achieve immediate corrective actions, increase yield, and streamline resource utilization. With continued investments in high-quality metrology and research equipment, the aerospace sector can ensure the safety and quality of its intricate and complex components, further solidifying its position as a leader in technological innovation.

AJAY KUMAR LOHANY

Delivery Sr. Director- Aero & Rail

Cyient


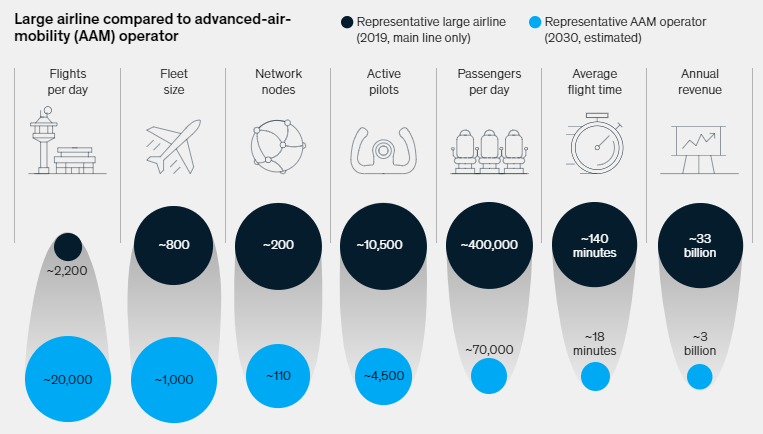

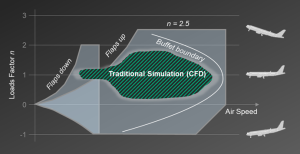

Emerging solutions in all-electric air mobility service

With projections indicating that air passenger numbers will double to 8.2 billion by 2037, the development of all-electric and hybrid-electric propulsion for Advanced Air Mobility (AAM) is evolving into a billion-dollar industry. Recent assessments by Rolls-Royce suggest that approximately 15,000 electric vertical take-off and landing (eVTOL) vehicles will be needed across 30 major cities by 2035 solely to meet the demand for intracity travel. By 2030, top players in the passenger AAM sector could operate larger fleets and significantly more daily flights than the world’s biggest airlines. These flights, averaging just 18 minutes each, will typically carry far fewer passengers than airliners (one to six, plus a pilot).

The increasing urbanization, expanding population, aging infrastructure, and the surge in e-commerce and logistics underscore the need for a contemporary, safe, and cost-effective transportation solution for both people and goods. Urban Air Mobility (UAM) presents a seamless, reliable, and swift mode of transportation, addressing present and future urban challenges. With the capacity to transform intra- and inter-city transportation, UAM offers a quicker and more effective alternative to conventional ground-based transportation methods. The adoption of Urban Air Mobility hinges on five primary factors:

- Growing demand for alternate modes of transportation in urban mobility.

- Need for convenient, efficient last-mile delivery.

- Zero-emission and noise-free mandates.

- Advancement in technologies (Energy storage, Autonomous, Connected, Power Electronics).

- Security.

Despite its rapid expansion, the UAM sector faces significant challenges that must be addressed for its future growth and success. These challenges range from developing reliable electric propulsion systems to achieving autonomous flight capabilities and establishing necessary infrastructure like vertiports and charging stations. Overcoming these hurdles is vital for unlocking UAM’s transformative potential in urban transportation.

AI Integration for UAM Enhancement

Using AI for predictive maintenance enables analysis of sensor data and other onboard sources to forecast maintenance needs, reducing downtime and increasing aircraft availability. AI-enabled maintenance inspections allow rapid issue identification through image analysis of eVTOLs and UAVs, minimizing errors and oversights. AI also supports better maintenance decisions by weighing multiple factors together, which is likely to improve outcomes. Additionally, robotic systems equipped with AI algorithms can autonomously repair or replace minor parts, enhancing safety for maintenance teams. Moreover, AI facilitates better diagnostics and targeted troubleshooting, expediting issue identification and repair suggestions. Ultimately, AI promises proactive maintenance, data integration and improved safety in UAM, ensuring aircraft are maintained effectively from takeoff to landing.

AI in Intelligent Cabin Management (ICMS)

The Intelligent Cabin Management System (ICMS), used in the aviation and rail industries, is continuously advancing thanks to emerging technologies. Enhanced facial recognition algorithms driven by artificial intelligence (AI) significantly improve efficiency and reliability in user authentication, behavior analysis, safety, threat detection and object tracking. ICMS also prioritizes monitoring passengers’ vital signs onboard for health safety.

This solution manages cabin operations with a focus on passenger safety, security and health. It can be applied to various passenger cabins in aircraft and rail and is particularly well suited to UAM applications. It restricts cabin entry to authorized crew and passengers, guides seating arrangements, enforces luggage placement regulations, ensures compliance with air travel advisories, monitors passenger behavior for preemptive intervention, identifies permitted and potentially threatening objects, flags left luggage, and detects vital health parameters for real-time monitoring and control.
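Flagging left luggage can be expressed as a rule over tracked objects: an item that stays in place with nobody nearby for too long is flagged. The sketch below assumes an upstream vision model already provides object positions and an `owner_nearby` flag; both, along with the 0.2 m and 60 s thresholds, are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Observation:
    t: float             # seconds since boarding
    x: float             # metres, cabin coordinates of the tracked object
    y: float
    owner_nearby: bool   # hypothetical output of a person/object association model


def is_left_luggage(track: list[Observation], max_move_m: float = 0.2,
                    min_unattended_s: float = 60.0) -> bool:
    """Flag an object that stays still with nobody nearby for min_unattended_s."""
    start = None  # time the current still-and-unattended run began
    for prev, cur in zip(track, track[1:]):
        moved = math.hypot(cur.x - prev.x, cur.y - prev.y) > max_move_m
        if moved or cur.owner_nearby:
            start = None  # any movement or a returning owner resets the timer
            continue
        if start is None:
            start = cur.t
        if cur.t - start >= min_unattended_s:
            return True
    return False


if __name__ == "__main__":
    bag = [Observation(0, 1.0, 2.0, True), Observation(10, 1.0, 2.0, False),
           Observation(40, 1.0, 2.0, False), Observation(80, 1.0, 2.0, False)]
    print(is_left_luggage(bag))
```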

AI in UAM Maintenance

AI-driven predictive maintenance involves analyzing sensor data and onboard sources to anticipate UAM maintenance needs, aiding in proactive scheduling and minimizing downtime. Similarly, AI-based inspections utilize image analysis to swiftly identify potential issues during regular checks, enhancing accuracy and reducing errors. Additionally, AI supports maintenance decision-making by analyzing various factors like repair costs and part availability, providing informed recommendations. Future advancements may see autonomous maintenance systems, powered by AI, performing routine tasks such as inspections and minor repairs, improving efficiency and safety. Furthermore, AI assists technicians in diagnostics and troubleshooting by analyzing data and historical records to pinpoint issues and suggest appropriate solutions, streamlining maintenance processes and ensuring UAM operational reliability.
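The decision-support idea of weighing repair cost against part availability can be sketched as ranking options by total cost including downtime. The `Option` fields, the assumption that the aircraft is grounded while waiting for parts, and the cost figures are all hypothetical; an AI-based tool would estimate such inputs from data rather than take them as fixed.

```python
from dataclasses import dataclass


@dataclass
class Option:
    name: str
    repair_cost: float     # currency units
    part_lead_days: float  # days until the required part is available
    labor_days: float      # days the aircraft is out of service for the work


def rank_options(options: list[Option],
                 downtime_cost_per_day: float) -> list[tuple[str, float]]:
    """Rank options by repair cost plus downtime cost (cheapest first).

    Assumes the aircraft stays grounded while waiting for parts and during the work.
    """
    scored = [
        (o.name, o.repair_cost + (o.part_lead_days + o.labor_days) * downtime_cost_per_day)
        for o in options
    ]
    return sorted(scored, key=lambda item: item[1])


if __name__ == "__main__":
    options = [Option("repair_in_place", 5000, 0, 2), Option("replace_unit", 3000, 5, 1)]
    for daily in (200, 1000):
        print(daily, rank_options(options, daily))
```

The preferred option flips as downtime becomes more expensive, which is the kind of trade-off the paragraph above describes.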

Conclusion

The integration of AI into UAM maintenance offers numerous benefits that significantly enhance the efficiency, safety, and reliability of UAM operations. Through proactive maintenance enabled by AI’s predictive capabilities, maintenance teams can anticipate and address potential failures before they occur, reducing unplanned downtime and enhancing operational reliability. Furthermore, AI-supported maintenance increases aircraft availability, ensuring vehicles are consistently safe and reliable, thus contributing to higher customer satisfaction and overall operational performance.

Moreover, AI-driven maintenance optimization leads to cost reduction by accurately predicting maintenance needs and minimizing unnecessary inspections and component replacements, thereby reducing labor and material costs. Additionally, AI’s continuous monitoring of UAM vehicle conditions enhances safety by detecting anomalies or safety risks in real-time, preventing accidents and ensuring timely maintenance. Overall, the application of AI in UAM maintenance represents a transformative step towards a more efficient, safe, and reliable urban air transportation system.

Ajay Kumar Lohany | Delivery Sr. Director- Aero & Rail | Cyient



Automotive PCIe: To Switch or Not to Switch?

Courtesy: Microchip

The myths and false economy of direct chip-to-chip PCIe connect in ADAS and vehicle autonomy applications.

PCIe’s Rising Role in Autonomous Driving and ADAS Technology

Before pondering the question of whether or not to switch, let’s first set the scene by considering why Peripheral Component Interconnect Express (PCIe) is becoming so popular as an interconnect technology in advanced driver assistance systems (ADAS) applications, and why it will be so crucial to realizing fully autonomous driving (AD) as the automotive industry seeks standard interfaces that deliver performance while ensuring compatibility and ease of use.

With its roots in the computing industry, PCIe is a point-to-point, bidirectional serial interconnect for high-speed components. Depending on the system architecture (PCIe’s implementation), data transfer can take place over 1, 2, 4, 8 or 16 lanes; when more than one lane is used, the link becomes a serial/parallel hybrid.

The PCIe specification is owned and managed by the PCI Special Interest Group (PCI-SIG), an association of 900+ industry companies committed to advancing its non-proprietary peripheral technology. As demand for higher I/O performance grows, the group’s scope and ecosystem reach are both expanding, and to paraphrase words from PCI-SIG’s membership page:

Current PCIe and other related technology roadmaps account for new form factors and lower power applications. Innovation on these fronts will remain true to PCI-SIG’s legacy of delivering solutions that are backward compatible, cost-efficient, high performance, processor agnostic, and scalable.

With vehicles becoming high-performance computing platforms (HPCs, and even data centers) on wheels, these words are exactly what vehicle OEMs developing ADAS and AD solutions want to hear. Also, every generation of PCIe brings performance improvements: from PCIe 1.0’s data transfer rate of 2.5GT/s (gigatransfers per second) and bandwidth of 4GB/s per direction over 16 lanes, to today’s PCIe 6.0 with 64GT/s and 128GB/s per direction over 16 lanes. Note: PCIe 7.0, slated to arrive in 2025, will have a data rate of 128GT/s and a bandwidth of 256GB/s per direction (512GB/s bidirectional) over 16 lanes.
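The per-generation figures above follow from transfer rate, lane count and encoding overhead. This sketch reproduces them under stated simplifications: it assumes one bit per lane per transfer, uses 8b/10b and 128b/130b efficiencies for PCIe 1.0 to 5.0, and ignores packet and FLIT overhead, so real payload throughput is lower than these numbers. The table and function names are hypothetical.

```python
# (transfer rate in GT/s, line-encoding efficiency) per PCIe generation.
# 1.0/2.0 use 8b/10b and 3.0-5.0 use 128b/130b. 6.0/7.0 use PAM4 with FLIT
# encoding; FLIT/FEC overhead is ignored here (efficiency 1.0), matching the
# way headline figures are usually quoted.
PCIE_GENERATIONS = {
    "1.0": (2.5, 8 / 10),
    "2.0": (5.0, 8 / 10),
    "3.0": (8.0, 128 / 130),
    "4.0": (16.0, 128 / 130),
    "5.0": (32.0, 128 / 130),
    "6.0": (64.0, 1.0),
    "7.0": (128.0, 1.0),
}


def link_bandwidth_gbytes(gen: str, lanes: int = 16) -> tuple[float, float]:
    """Return (per-direction, bidirectional) bandwidth in GB/s."""
    if lanes not in (1, 2, 4, 8, 16):
        raise ValueError("lanes must be 1, 2, 4, 8 or 16")
    rate_gt, efficiency = PCIE_GENERATIONS[gen]
    # Each transfer moves one bit per lane; divide by 8 to get bytes.
    per_direction = rate_gt * efficiency * lanes / 8
    return per_direction, 2 * per_direction


if __name__ == "__main__":
    for gen in PCIE_GENERATIONS:
        one_way, both = link_bandwidth_gbytes(gen)
        print(f"PCIe {gen} x16: {one_way:6.2f} GB/s per direction, {both:6.2f} GB/s total")
```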

PCIe’s performance power cannot be disputed, and it will certainly be required to support the kind of real-time processing of large volumes of data needed for AI- and ML-enabled ADAS and AD applications.

But, as ever, there is debate around implementing PCIe-based architectures, not least when it comes to whether the connections between PCIe-enabled components should be direct or switched.

Making the Connection

To provide higher levels of automation, vehicles must incorporate increasingly sophisticated combinations of electronic components including central processing units (CPUs), electronic control units (ECUs), graphics processing units (GPUs), system-on-chips (SoCs), “smart sensors” and high-capacity and high-speed storage devices (such as NVMe memory).

Of these components, the ECUs (of which there are many) are grouped into separate zones based on common functionality. These zonal ECUs communicate with HPC platforms using Ethernet. Within those platforms, however, high-bandwidth processing is needed to achieve real-time decision making.

Accordingly, automotive designers are using PCIe technology in much the same way as it is used in data center design. Connecting sensors with high-speed serial outputs to processing units is best addressed with the open standard developed by the Automotive SerDes Alliance (ASA).

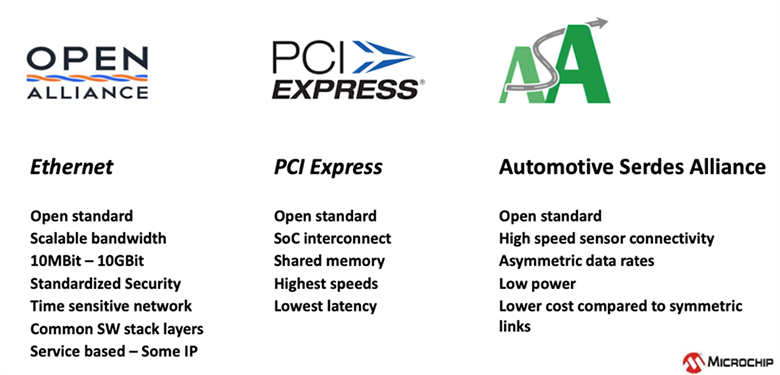

In essence, there are three pillars of automotive networking (see figure 1).

Figure 1 – Three Pillars of the Future of Automotive Networking


However, some SoC vendors say that with PCIe you can simply connect chips directly, without a switch. Well, yes, you can… but it doesn’t scale to higher ADAS levels, and it’s a false economy to do so.

An HPC system without a switch greatly increases software complexity, as each end requires its own software stack. There are also the “bigger picture” benefits of switched over unswitched PCIe to consider:

- IO Bandwidth Optimization: Packet switching reduces the SoC interconnection pin count requirement which lowers SoC power and cost.

- Peripheral Sharing: Single peripherals, such as SSD storage or Ethernet controllers, may be shared across several SoCs.

- Scalability: You can easily scale for more performance without changing the system architecture by increasing switch size, SoC count and peripheral count.

- Serviceability: PCIe has built-in error detection and diagnostic test features which have been thoroughly proven in the high-performance compute environment over many years to significantly ease serviceability.

- And as a result of the above points, a much better total cost of ownership (TCO) is possible.
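The scalability argument can be made concrete by counting links. This sketch assumes every SoC must reach every other SoC and every peripheral; it ignores root-complex/endpoint roles and lane widths, so it only illustrates how direct connection grows quadratically with SoC count while a switched topology grows linearly. The function names are hypothetical.

```python
def direct_links(socs: int, peripherals: int) -> int:
    """Links for a full mesh of SoCs, plus a dedicated link from every SoC to every peripheral."""
    return socs * (socs - 1) // 2 + socs * peripherals


def switched_links(socs: int, peripherals: int) -> int:
    """Links when each SoC and each peripheral attaches once to a large-enough switch."""
    return socs + peripherals


def ports_per_soc(socs: int, peripherals: int, switched: bool) -> int:
    """PCIe ports (and the pins behind them) each SoC has to provide."""
    return 1 if switched else (socs - 1) + peripherals


if __name__ == "__main__":
    peripherals = 2  # e.g. one SSD and one Ethernet controller (hypothetical)
    for socs in range(2, 7):
        print(f"{socs} SoCs: direct {direct_links(socs, peripherals):2d} links, "
              f"{ports_per_soc(socs, peripherals, False)} ports/SoC | "
              f"switched {switched_links(socs, peripherals):2d} links, 1 port/SoC")
```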

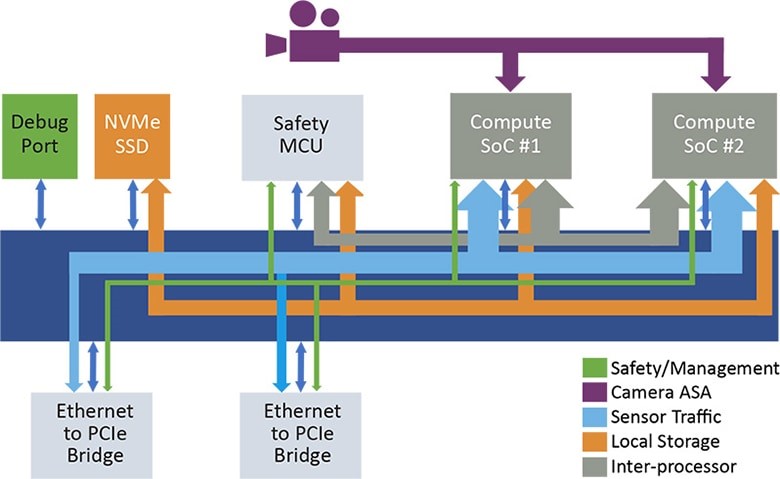

When PCIe combines forces with Ethernet and ASA, it allows for the creation of an optimized, heterogeneous system architecture (as figure 2 illustrates with respect to an ADAS example).

Figure 2 – Heterogeneous architecture for ADAS


Although the three communications technologies evolved at different times to support different needs, and have their respective pros and cons, the heterogeneous architecture makes the best of each.

As mentioned, PCIe provides point-to-point connections, meaning devices do not compete for bandwidth, which is fine if only a few devices need to connect. However, an autonomous vehicle is best realized as a set of distributed workloads, which means bandwidth must be shared among multiple subsystem components.

In this respect, PCIe switches provide an excellent solution as they are “transparent,” meaning that software and other devices do not need to be aware of the presence of switches in the hierarchy, and no drivers are required.

The Answer: Switch

PCIe is ideal for ADAS, AD and other HPC applications within a vehicle, but its point-to-point connectivity leads many to assume it should be implemented that way, for example as direct chip-to-chip links. However, integrating switching using technologies such as the Microchip Switchtec family (the world’s first automotive-qualified PCIe switches) minimizes software complexity and realizes a host of other benefits for high-performance automotive systems with multiple subsystem components that demand low latency and high data rates.


Techniques to Identify and Correct Asymmetric Wafer Map Defects Caused by Design and Process Errors

JAMES KIM, Senior Semiconductor and Process Integration Engineer | Lam Research

Asymmetries in wafer map defects are usually treated as random production hardware defects. For example, asymmetric wafer defects can be caused by particles inadvertently deposited on a wafer during any number of process steps. In this article, I want to share a different mechanism that can cause wafer defects: these defects can be structural defects caused by a biased deposition or etch process.

It can be difficult for a process engineer to determine the cause of downstream structural defects located at a specific wafer radius, particularly if these defects appear in varying directions or at different locations on the wafer. As a wafer structure is formed, process behavior at one location may differ from that at other locations, depending on the radial direction and the specific position on the wafer. Slight process differences between wafer locations can be amplified as subsequent process steps accumulate. In addition, process performance differences (such as variation in equipment performance) can also cause on-wafer structural variability.

In this study, structural defects will be virtually introduced on a wafer to provide an example of how structural defects can be created by differences in wafer location. We will then use our virtual process model to identify an example of a mechanism that can cause these types of asymmetric wafer map defects.

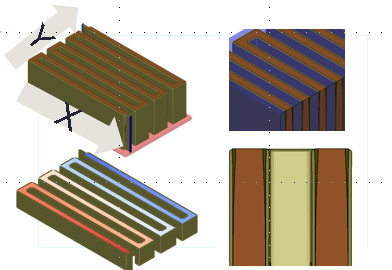

Methods Figure 1. Anisotropic liner/barrier metal

Figure 1. Anisotropic liner/barrier metaldeposition on a tilted structure caused by wafer warping

A 3D process model of a specific metal stack (Cu/TaN/Ta) on a warped wafer was created using SEMulator3D virtual fabrication (Figure 1). After the 3D model was generated, electrical analysis of 49 sites on the wafer was completed.

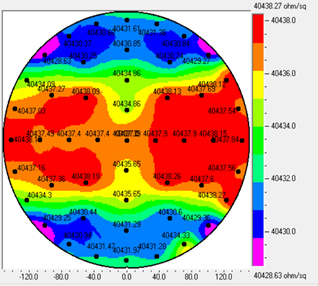

In our model, an anisotropic barrier/liner (TaN/Ta) deposition process was used. Due to wafer tilting, there were TaN/Ta deposition differences seen across the simulated high aspect ratio metal stack. To minimize the number of variables in the model, Cu deposition was assumed to fill in an ideal manner (without voids). Forty-nine (49) corresponding 3D models were created at different locations on the wafer, to reflect differences in tilting due to wafer warping. Next, electrical simulation was completed on these 3D models to monitor metal line resistance at each location. Serpentine metal line patterns were built into the model, to help simulate the projected electrical performance on the warped wafer at different points on the same radius, and across different directions on the wafer (Figure 2).

Figure 2 – Techniques to Identify and Correct Asymmetric Wafer Map Defects Caused by Design and Process Errors

Using only incoming structure and process behavior, we can develop a behavioral process model and extend our device performance predictions and behavioral trend analysis outside of our proposed process window range. In the case of complicated processes with more than one mechanism or behavior, we can split processes into several steps and develop models for each individual process step. There will be phenomena or behavior in manufacturing that can’t be fully captured by this type of process modeling, but these models provide useful insight during process window development.

Results

Of the 49 3D models, those at the far edge of the wafer were heavily tilted by wafer warpage. Interestingly, the models at the same wafer radius did not all behave the same way. The difference came from the metal pattern design: with anisotropic deposition into high aspect ratio trenches, deposition from certain directions was blocked at certain locations in the trenches (depending upon trench depth and tilt angle). This affected both the device structure and the electrical behavior at different locations on the wafer.
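The blocking mechanism described above can be approximated with simple line-of-sight geometry. The sketch below is a 2D simplification and not the SEMulator3D deposition model. It assumes a perfectly directional flux, a rectangular trench, and that only the tilt component perpendicular to the trench lines casts a wall shadow on the floor. The function name is hypothetical.

```python
import math


def exposed_floor_fraction(depth_nm: float, width_nm: float, tilt_across_deg: float) -> float:
    """Fraction of a trench floor reached by a perfectly directional (line-of-sight) flux.

    Simplified 2D shadowing: only the tilt component perpendicular to the trench
    (across the lines) matters. One sidewall then shadows a strip of the floor of
    length depth * tan(tilt). Tilt parallel to the trench moves the flux along the
    trench axis, where no wall blocks it, so it is not an input here.
    """
    if width_nm <= 0 or depth_nm < 0:
        raise ValueError("width must be positive and depth non-negative")
    shadow_nm = depth_nm * math.tan(math.radians(abs(tilt_across_deg)))
    return max(0.0, 1.0 - shadow_nm / width_nm)
```

Take a trench with an aspect ratio of 10. Under this model, a 1° tilt across the lines shadows roughly 17% of the floor, while the same tilt along the lines shadows none of it.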

Since the metal lines extended along the x-axis, tilting the wafer along the x-axis produced minimal differences in our model, creating only a small difference in the thickness of the Ta/TaN relative to the Cu. However, when the wafer was tilted along the y-axis, the high aspect ratio trench wall blocked Ta/TaN deposition because of the deposition angle. This lowered the volume of Ta/TaN relative to Cu, which decreased the metal resistance and placed it outside of our design specification.
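A first-order estimate shows why less barrier lowers resistance. Treat the Ta/TaN barrier as non-conducting, since its resistivity is far higher than copper's, and compute R = ρL/A over the remaining Cu cross-section. This is a hedged sketch. The dimensions, the bulk Cu resistivity default and the spec limits are placeholder assumptions. In real narrow lines, surface and grain-boundary scattering also raise the effective resistivity.

```python
def line_resistance_ohm(length_um, width_nm, depth_nm, side_barrier_nm, bottom_barrier_nm,
                        rho_cu_ohm_m=1.7e-8):
    """R = rho * L / A, with A the Cu cross-section left after the barrier liner.

    The Ta/TaN barrier is treated as non-conducting (first-order assumption).
    """
    cu_width_nm = width_nm - 2 * side_barrier_nm
    cu_depth_nm = depth_nm - bottom_barrier_nm
    if cu_width_nm <= 0 or cu_depth_nm <= 0:
        raise ValueError("barrier fills the trench")
    area_m2 = (cu_width_nm * 1e-9) * (cu_depth_nm * 1e-9)
    return rho_cu_ohm_m * (length_um * 1e-6) / area_m2


def in_spec(resistance_ohm, r_min_ohm, r_max_ohm):
    """True if the resistance lies inside the design window."""
    return r_min_ohm <= resistance_ohm <= r_max_ohm
```

A thinner barrier widens the Cu cross-section, so resistance drops. If it drops below the lower spec limit, the site is flagged even though the line has no void or open.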

X-axis wafer tilting had little influence on the device structure. The resistance on the far edge of the x-axis did not significantly change and remained in-spec. Y-axis wafer tilting had a more significant influence on the device structure. The resistance on the far edge of the y-axis was outside of our electrical specification (Figure 3).

Figure 3 – Techniques to Identify and Correct Asymmetric Wafer Map Defects Caused by Design and Process Errors

Conclusion

Even though wafer warpage occurs in a circular manner due to accumulated stress, unexpected structural failures can occur in different radial directions on the wafer due to variations in pattern design and process behavior across the wafer. From this study, we demonstrated that asymmetric structures caused by wafer warping can create top-bottom or left-right wafer performance differences, even though processes have been uniformly applied in a circular distribution across the wafer.

Process simulation can be used to better understand structural failures that can cause performance variability at different wafer locations. A better understanding of these structural failure mechanisms can help engineers improve overall wafer yield by taking corrective action (such as performing line scanning at specific wafer locations) or by adjusting specific process windows to minimize asymmetric wafer defects.

The post Techniques to Identify and Correct Asymmetric Wafer Map Defects Caused by Design and Process Errors appeared first on ELE Times.

Executive Blog – Companies that Embrace Digital Transformation Have More Resilient Design and Supply Chains

Sailesh Chittipeddi | Executive Vice President Operations | Renesas

Digital transformation has evolved quickly from a conceptual phase to a semiconductor industry change agent. The rapid uptake of AI-enhanced product development is only accelerating this transformation, which is further influenced by two connected trends: the movement of Moore’s Law from transistor scaling to system-level scaling, and the relatively recent redistribution of the global electronics supply chain due to the COVID-19 pandemic.

I spoke on this subject earlier this month at the Industry Strategy Symposium 2024 in Half Moon Bay, California, where leaders from across the chip industry gather annually to share their insights on technology and trend drivers and what they could mean for our respective businesses.

Between the early 1970s and around 2005, increased chip performance was largely a function of clock frequency improvements driven by advances in lithography, transistor density, and energy efficiency. As transistor counts (and die size) increased, clock frequencies became limited by interconnect delays rather than by transistor performance. To overcome this challenge, designers moved to multi-core designs to increase system performance without a steep rise in power consumption. Novel packaging techniques such as chiplets and multi-chip modules are helping to further improve system performance, particularly in AI chips.

A single chip package may comprise multiple chiplets, each housing specific functions such as high-performance logic elements, AI accelerators, high-bandwidth DDR memory, and high-speed peripherals. Very often, each of these components is sourced from a different fab, a trend that has resulted in a fragmented global supply chain. This creates its own set of challenges, as die from multiple fabs must be integrated into a package or system that must then be thoroughly tested. Test failures at this stage have enormous financial consequences. These challenges require a “shift left” mindset in product development. The shift left mentality has major ramifications for how we, as an industry, should be managing our supply chains, moving the heavy emphasis away from final system testing and quality and toward architecture and design.

Supply chain challenges during the COVID pandemic have resulted in further decentralization of the supply chain components. To illustrate the enormity of the change underway, consider that between 2022 and December 2024 construction began on 93 wafer fabs around the world. Compare that to the global construction of automated test facilities. In 2021 alone, the industry broke ground on 484 back-end test sites, which provides a measure of how committed the chip sector is to driving resiliency across the manufacturing landscape.

The Role of AI in Semiconductor Design and Manufacture

So, where does AI come into the picture?

A key area in which AI will exert its influence is the shift from an analytic to a predictive model. Today, we wait to detect a problem and then look at past data to identify its root cause and prevent it from recurring. This inefficient approach adds time, cost, unpredictability, and waste to the supply chain. AI, on the other hand, allows us to examine current data to predict future outcomes.

Instead of using spreadsheets to analyze old data, we build AI models that production engineers continuously train with new data. This “new” data is no longer merely a set of numbers or measurements but includes unstructured data such as die photos, equipment noise, time series sensor data, and videos to make better predictions.

In the end, it’s about pulling actionable information from a sea of data points. In other words, data without action is mostly useless. Why am I driving this point home? Because today, 90 percent of data created by enterprises is never used. It’s dark data. And when it comes to AI implementation, 46 percent of projects never make it from pilot to production because the complexity of the programs is not scoped appropriately.

Despite these challenges, equipment makers are already starting to implement digital transformation techniques into their product development processes. The benefits are palpable. Research from Boston Consulting Group found that companies that have built resiliency into their supply and design chains recovered from COVID-related downturns twice as fast as companies that have yet to embrace digital transformation.

At Renesas, we acquired a company called Reality AI that generates a compact machine learning model that runs on a microcontroller or microprocessor. This provides the unique ability to quickly detect deviations from normal patterns that may cause equipment problems. It allows manufacturing facilities to schedule preventive maintenance or minimize downtime associated with sudden equipment failure.
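Reality AI's actual models are not described here. As a generic illustration of detecting deviations from normal patterns on a resource-constrained device, the sketch below applies a rolling z-score to a single sensor channel. The class name, window size and threshold are assumptions. Production systems typically use richer features and learned models.

```python
import math
from collections import deque


class RollingZScoreDetector:
    """Flag samples that lie more than `threshold` standard deviations from a rolling baseline."""

    def __init__(self, window: int = 50, threshold: float = 4.0):
        self.history = deque(maxlen=window)  # recent samples judged normal
        self.threshold = threshold

    def update(self, x: float) -> bool:
        """Return True if x looks anomalous; only normal samples extend the baseline."""
        is_anomaly = False
        if len(self.history) >= 2:
            mean = sum(self.history) / len(self.history)
            var = sum((v - mean) ** 2 for v in self.history) / (len(self.history) - 1)
            std = math.sqrt(var)
            if std > 0 and abs(x - mean) / std > self.threshold:
                is_anomaly = True
        if not is_anomaly:
            self.history.append(x)
        return is_anomaly
```

Anomalous samples are kept out of the baseline, so a developing fault is not gradually absorbed into what the detector treats as normal.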

Digital Transformation Is Future-Proofing Our Industry

Digital transformation with AI is key to business success today. As the semiconductor industry undergoes a major evolution – embracing system-level design and adapting to a changing global supply chain – digital transformation and the shift left approach are powerful tools that deliver on two fronts.

The first is a productivity increase that comes from optimized tools and design processes. The closer you are to where the failure is likely to occur, the more quickly you learn and the more quickly you can fix things.

Second, and perhaps most importantly, digital transformation solves one of the biggest problems the industry has with chip design – the availability of talent. When we reduce the time taken to design a chip, we’re making our engineers far more efficient than they would be otherwise, which is increasingly important as the semiconductor industry demographic skews older.

The post Executive Blog – Companies that Embrace Digital Transformation Have More Resilient Design and Supply Chains appeared first on ELE Times.

Network RTK vs PPP-RTK: an insight into real-world performance

By Patty Felts, Product Marketing Manager, Product Center Services

Australian automation and positioning technology provider conducts static and kinematic tests

Locating people, animals, or objects on Earth with high precision requires GNSS receivers supported by GNSS correction services that account for errors caused by the atmosphere, satellite clock drift, and signal delays.

Three standard approaches to correcting these errors are Real Time Kinematic (RTK) and Precise Point Positioning (PPP) GNSS correction services, and a combination of the two, PPP-RTK. In addition to a correction service, a pairing device such as a survey-grade GNSS receiver or a mass-market smart antenna is required. Together, the correction approach and the device determine the positioning accuracy of the end-use application.

Many GNSS navigation applications require high accuracy. The accuracy of survey-grade GNSS receivers exceeds what mass-market smart antennas can provide. Of course, this comes at a price. Still, several high-precision GNSS navigation applications can do well with the accuracy offered by mass-market smart antennas. Examples include transportation, e-mobility, IoT use cases, and field robotics. Designers aim to equip devices with reliable, high-precision positioning at a reasonable cost.

GNSS users can verify and compare the capabilities of different setups by taking them on the road and testing them in real-world conditions.

Aptella (formerly branded as Position Partners), an Australasian provider of automation and positioning technology solutions, had the opportunity to test the capabilities of network RTK vs PPP-RTK GNSS correction services and present the findings to their client.

We will discuss the findings, but as a first step, let us review how the RTK, PPP, and PPP-RTK approaches operate, the equipment needed, and the participants in this exercise.

Network RTK, Precise Point Positioning GNSS, and PPP-RTK

The correction approaches mentioned above follow different paths. RTK GNSS correction services calculate and correct GNSS errors by comparing satellite signals from one or more reference stations. Any errors detected are then transmitted using IP-based communications, which can be reliable beyond a radius of 30 km from the nearest base station. Network RTK typically requires bi-directional communication between the GNSS receiver and the service, making the solution more challenging to scale. This approach can provide centimeter-level positioning accuracy in seconds.
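The error-cancellation idea behind RTK can be illustrated with its simpler relative, code-phase differential correction. The sketch below is not RTK itself: real RTK works on carrier-phase measurements and must resolve integer ambiguities, and network RTK combines several stations. It assumes the base and rover see the same per-satellite errors, so it only removes errors the two share. Satellite IDs, coordinates and function names are hypothetical.

```python
import math


def pseudorange_corrections(base_xyz, sat_xyz, base_pseudorange_m):
    """Per-satellite correction at a base station with surveyed coordinates.

    correction = true geometric range (known, because the base position is known)
                 - measured pseudorange (which contains atmospheric and clock errors)
    """
    return {
        sv: math.dist(base_xyz, sat_xyz[sv]) - pr
        for sv, pr in base_pseudorange_m.items()
    }


def apply_corrections(rover_pseudorange_m, corrections):
    """Add each satellite's correction to the rover's pseudorange.

    Satellites the base did not observe have no correction and are dropped.
    """
    return {
        sv: pr + corrections[sv]
        for sv, pr in rover_pseudorange_m.items()
        if sv in corrections
    }
```

The corrected pseudoranges would then feed the rover's normal position solution. Because the cancellation depends on shared errors, it degrades as the base and rover experience increasingly different atmospheric conditions.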

Precise Point Positioning GNSS correction services operate differently. They broadcast a GNSS error model valid over large geographic regions. Because this service requires only unidirectional communication (IP-based or via satellite L-band), it’s more scalable to multiple users, unlike RTK.

PPP high-precision positioning takes between three minutes and half an hour to provide a position estimate with an accuracy of less than 10 cm. Static applications such as surveying or mapping typically use this solution, but it can be a poor fit for dynamic applications such as unmanned aerial vehicles or mobile robotics.

More recently, both approaches have been combined into what is known as PPP-RTK GNSS correction services (or State Space Representation (SSR) correction services). This combination pairs the accuracy and fast initialization times of network RTK with the broadcast nature of Precise Point Positioning. Similar to PPP, the approach is based on a model of GNSS errors that has broad geographic validity. Once a GNSS receiver has access to these PPP-RTK correction data through one-way communication, it computes its own position.
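The sketch below illustrates the state-space idea with a hypothetical broadcast message that describes each satellite's slant delay as a plane over latitude and longitude. Every receiver gets the same coefficients over a one-way link and evaluates them at its own approximate position (for example, from a standalone GNSS fix). Real SSR/PPP-RTK services use standardized message formats and much richer error models (orbits, clocks, biases, ionosphere, troposphere). The class and field names here are illustrative only.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class RegionalDelayModel:
    """Hypothetical broadcast model: per-satellite slant delay as a plane over the region.

    delay_m = c0 + c_lat * (lat - lat_ref) + c_lon * (lon - lon_ref)
    """
    lat_ref_deg: float
    lon_ref_deg: float
    coeffs: dict[str, tuple[float, float, float]] = field(default_factory=dict)

    def delay_m(self, sv: str, lat_deg: float, lon_deg: float) -> float:
        c0, c_lat, c_lon = self.coeffs[sv]
        return c0 + c_lat * (lat_deg - self.lat_ref_deg) + c_lon * (lon_deg - self.lon_ref_deg)


def correct_locally(model, pseudoranges_m, lat_deg, lon_deg):
    """Each receiver evaluates the same broadcast model at its own position."""
    return {
        sv: pr - model.delay_m(sv, lat_deg, lon_deg)
        for sv, pr in pseudoranges_m.items()
        if sv in model.coeffs
    }
```

The server never needs to know where each receiver is, which is why one broadcast stream can serve any number of users.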

Survey-grade GNSS receiver versus mass-market smart antenna

Survey-grade receivers are devices typically used for geodetic surveying and mapping applications. They are designed to provide highly accurate and precise positioning information for civil engineering, construction, GIS data, land development, mining, and environmental management.

Today’s survey-grade modules can track multiple satellite constellations and support network RTK. These devices are typically very expensive, costing thousands of dollars each, because they are highly precise, with accuracies ranging from centimeters to millimeters.

Mass-market smart antennas are specialized receiver/antenna-integrated devices designed to receive signals from satellite constellations and GNSS correction services right out of the box. Smart antennas capture and process raw data to determine precise locations. Standalone GNSS antennas don’t have a precision rating, as this depends on the integrated GNSS receiver and correction service to which the antennas are coupled.

While mass-market smart antennas are more affordable than survey-grade GNSS receivers, there is a corresponding performance trade-off, with accuracies ranging from a few centimeters to decimeters.

The following tests used a survey-grade GNSS receiver to verify control coordinates in static mode and compare RTK versus PPP-RTK results in the kinematic mode. The GNSS smart antenna was also employed as a pairing device for these static and kinematic tests.

Participating companies

Aptella is the company that conducted the performance test and presented the results to their client. However, the participation of four other companies was crucial.

AllDayRTK operates Australia’s highest-density network of Continuously Operating Reference Stations (CORS). Its network RTK correction services were used to compare with PPP-RTK.

u-blox’s PointPerfect provided the PPP-RTK GNSS correction services used in these tests.

Both correction services were coupled with a survey GNSS receiver, Topcon HiPer VR, and a mass-market smart antenna, the Tallysman TW5790.

Testing two correction service solutions

In the Australian city of Melbourne, Aptella conducted static and kinematic tests with several objectives in mind:

- Test RTK and PPP-RTK GNSS corrections using a mass-market GNSS device like the Tallysman TW5790.

- Demonstrate the capabilities of the Tallysman smart antenna coupled with PPP-RTK corrections.

- Evaluate PointPerfect PPP-RTK GNSS corrections and assess “real world” results against published specifications.

- Determine whether these specifications meet the 30 cm @ 95% requirement of mass-market applications and e-transport safety.

- Provide insight into use cases and applications suitable for PPP-RTK corrections.

Figure 1: GNSS antenna and survey-grade receiver

These tests allowed experts to compare the accuracy of RTK and PPP-RTK GNSS correction services supported by a mass-market Tallysman smart antenna. They were also able to verify the PPP-RTK performance specifications published by u-blox.

First, a survey-grade Topcon HiPer VR GNSS receiver was used to verify the control coordinates in static mode. Once these were obtained, the Tallysman smart antenna took its place.

The table below summarizes representative results from both methods, PPP-RTK and RTK. Horizontal (planar) accuracy is similar for both, while vertical accuracy is lower with PPP-RTK than with RTK.

Horizontal errors for both RTK and PPP-RTK are in the centimeter range. Vertically, RTK also stays in the centimeter range, whereas the PPP-RTK errors were in the decimeter range.

| GNSS augmentation | Horizontal error (m) | Vertical error (m) | Horizontal 95% (m) | Vertical 95% (m) |
|---|---|---|---|---|
| RTK AllDayRTK | 0.009 | 0.010 | 0.012 | 0.018 |
| PointPerfect PPP-RTK | 0.048 | 0.080 | 0.041 | 0.074 |

Furthermore, the horizontal (plan) accuracy of the mass-market device was within published specifications and met the mass-market requirement of 30 cm @ 95%, even when obstructed. Heights, however, were less accurate than 2D horizontal coordinates, and RTK delivered better vertical accuracy than PPP-RTK.
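A requirement such as 30 cm @ 95% is typically checked in two steps: compute the horizontal error of each fix against the surveyed control point, then take the 95th percentile. The sketch below uses local east/north coordinates in meters and a nearest-rank percentile. The article does not say whether Aptella used this exact percentile definition, so treat it as one reasonable assumption. Function names are hypothetical.

```python
import math


def horizontal_errors_m(fixes_en, truth_en):
    """2D error of each (east, north) fix against the surveyed control point, in meters."""
    te, tn = truth_en
    return [math.hypot(e - te, n - tn) for e, n in fixes_en]


def percentile_nearest_rank(values, pct):
    """Nearest-rank percentile: the smallest value with at least pct% of samples at or below it."""
    if not values:
        raise ValueError("no samples")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def meets_requirement(fixes_en, truth_en, limit_m=0.30, pct=95.0):
    """True if the pct-th percentile horizontal error is within limit_m (default 30 cm @ 95%)."""
    return percentile_nearest_rank(horizontal_errors_m(fixes_en, truth_en), pct) <= limit_m
```

With 20 fixes, one outlier beyond 30 cm still passes at 95%, but two do not. This is why a 95% figure can hide occasional large excursions.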

Kinematic results

On the streets of Melbourne, Aptella experts tested RTK and PPP-RTK corrections in different kinematic modes at variable speeds, such as walking under open skies and driving in different environments.

The RTK network test setup consisted of AllDayRTK corrections and a survey-grade GNSS receiver. The PPP-RTK test setup, on the other hand, was supported by u-blox PointPerfect and the Tallysman smart antenna. The antennas for both setups were mounted on the vehicle's roof, and the vehicle was driven along different routes to encounter various GNSS conditions.

Walking in the open sky: This test involved a walk along the riverbank. The results of both setups were similar, indicating that PPP-RTK is well suited for mass-market applications.

Figure 2: Walking tests with RTK and PPP-RTK

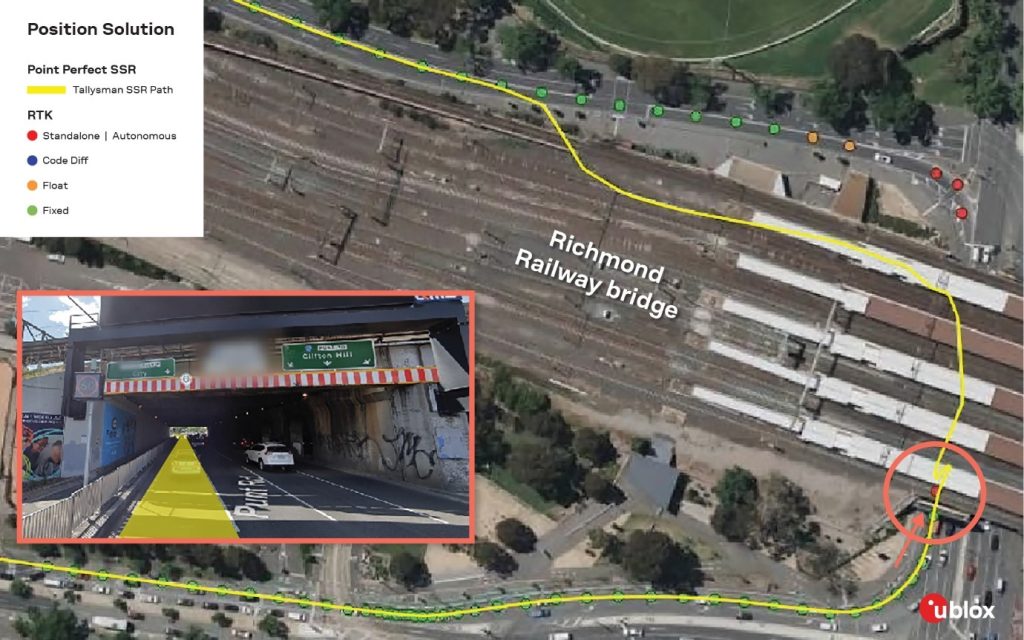

On-road driving with varying conditions: This test consisted of driving on Melbourne roads in different conditions, including open skies and partial or total obstructions to GNSS. The route included driving under bridges and areas with multipath effects. Vegetation in the area at the start of the test prevented the smart antenna’s IMU from initializing. No IMU/dead reckoning capability was used during the drive test.

The results obtained while the vehicle moved through a long tunnel under the railroad tracks were of utmost importance. In this situation, the PPP-RTK approach reported a position even in an adverse environment. In addition, PPP-RTK reconverged shortly after RTK.

Figure 3: RTK vs PPP-RTK under a railway bridge in Melbourne

Another revealing result of this second test was that the Tallysman smart antenna didn’t seem to deviate from its path when passing under short bridges.

Figure 4: RTK vs PPP-RTK under a short bridge

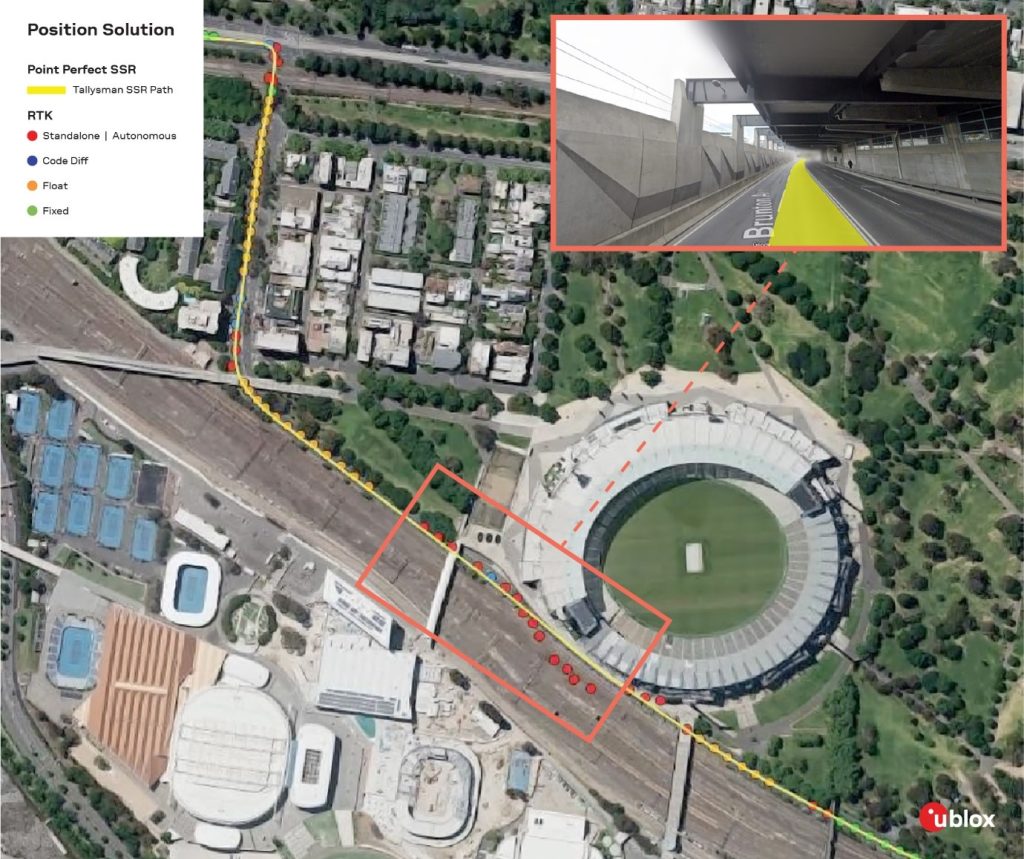

Driving through an outage: The outage test took place in an extended, challenging environment for GNSS. This occurred when the car drove under the pedestrian overpass at the Melbourne Cricket Ground. The PPP-RTK solution maintained the travel trajectory and effectively tracked the route (in yellow). On the other hand, the RTK network solution reported positions off the road and on the railway tracks. In this outage condition, RTK took a long time to reconverge to a fixed solution.
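One way to quantify “took a long time to reconverge” from a drive log is to measure, for each loss of a fixed solution, the time until a fixed solution returns. The log format below is a hypothetical simplification of what receivers report: (timestamp, solution type) pairs with “fixed”, “float” or “none”. Losses that never recover before the log ends are not counted, and neither is the initial convergence at the start of the log.

```python
def reconvergence_times_s(epochs):
    """Seconds from each loss of a fixed solution until a fixed solution returns.

    epochs: time-ordered (t_s, solution) pairs, solution in {"fixed", "float", "none"}.
    """
    times = []
    lost_at = None        # time the current loss of fix started, if any
    prev_fixed = False
    for t, solution in epochs:
        is_fixed = solution == "fixed"
        if prev_fixed and not is_fixed:
            lost_at = t                     # fixed -> float/none transition
        elif is_fixed and lost_at is not None:
            times.append(t - lost_at)       # first fixed epoch after the loss
            lost_at = None
        prev_fixed = is_fixed
    return times
```

Measuring from the loss of fix, rather than from the end of the obstruction, avoids needing ground truth about where the obstruction ends. It does, however, include the time spent under the structure.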

Figure 5: Correction services tests under a long structure

Open-sky driving: The final on-road test was conducted in an open-sky environment where the two setups performed similarly. They provided lane-level accuracy and suitability for mass-market applications. However, ground truthing and further testing are required to fully evaluate the accuracy and reliability of PPP-RTK in these conditions.

Figure 6: Correction services comparison driving through Melbourne

Final remarks

The five static and dynamic tests conducted by Aptella were instrumental in assessing the effectiveness of different setups to determine the position of stationary and moving entities.

- From the static test, Aptella concluded that PPP-RTK, coupled with the Tallysman smart antenna, provides centimeter-level horizontal accuracy and performs similarly to RTK. However, this was not the case for vertical accuracy, with PPP-RTK at the decimeter level.

- Regarding the kinematic tests, Aptella obtained significant results, particularly when the environment impeded GNSS signal reception. Even without IMU or dead reckoning, PPP-RTK performed well, with lane-level tracking. With short outages such as railway bridges and underpasses, PPP-RTK maintained an acceptable trajectory, while RTK required a long time to reconverge after emerging from these challenging conditions.

- Overall, Aptella has demonstrated that the PPP-RTK and GNSS smart antenna combination delivers results suitable for mass-market applications requiring centimeter-level horizontal accuracy.

As mentioned above, survey-grade devices are costly although highly accurate. A combination of a survey-grade GNSS receiver and a network RTK correction service is recommended for geodetic surveying use cases that require high vertical (height) accuracy.

Conversely, mass-market smart antenna devices using PPP-RTK corrections are less expensive but also less accurate. Nevertheless, they are well suited for static applications that don’t require GNSS heights at survey grade.

For many high-precision navigation applications, such as transportation, e-mobility, and mobile robotics, PPP-RTK is sufficient to achieve the level of performance these end applications require. The relative affordability of smart antenna devices, combined with PPP-RTK’s ability to broadcast a single stream of corrections to all endpoints, makes it easier to scale from a few prototypes to large fleets of mobile IoT devices.

The post Network RTK vs PPP-RTK: an insight into real-world performance appeared first on ELE Times.

Unparalleled capacitance for miniaturized designs: Panasonic Industry launches new ZL Series Hybrid capacitors

The compact and AEC-Q200-compliant EEH-ZL Series stands out with industry-leading capacitance and high Ripple Current specs

The ZL series is the latest addition to Panasonic Industry’s Electrolytic Polymer Hybrid capacitor portfolio. Relative to its compact dimensions, it offers unrivalled capacitance values and could therefore attract considerable market attention:

Capacitance: For five case sizes from ø5×5.8 mm to ø10×10.2 mm, the ZL series offers the largest capacitance in the industry and exceeds the values of competitor standard products by approximately 170%.

Ripple Current: Within the same case size, ripple current performance exceeds competitor products’ specifications, and ESR is lower.

The new ZL is AEC-Q200 compliant, meeting strict quality control standards that are particularly crucial for the automotive industry. It offers high-temperature resistance and is guaranteed to operate for 4000 h at 125°C and 135°C. With a focus on durability, the ZL series offers vibration-proof variants capable of withstanding shocks up to 30 G, making it a reliable choice.

In summary, this next-generation, RoHS-compliant Hybrid Capacitor stands as the ultimate solution for automotive and industrial applications where compact dimensions are an essential prerequisite.

Tailored for use in various automotive components including water pumps, oil pumps, cooling fans, high-current DC-DC converters, and advanced driver-assistance systems (ADAS), it also proves invaluable in industrial settings such as inverter power supplies for robotics, cooling fans, and solar power systems. Furthermore, it plays a pivotal role in industrial power supplies for both DC and AC circuits, from inverters to rectifiers, and is well suited to communication infrastructure equipment such as base stations, servers, routers, and switches.

The post Unparalleled capacitance for miniaturized designs: Panasonic Industry launches new ZL Series Hybrid capacitors appeared first on ELE Times.

DDS Option for high-speed AWGs generates up to 20 sine waves

20 independent sine waves up to 400 MHz can be controlled on one generator channel

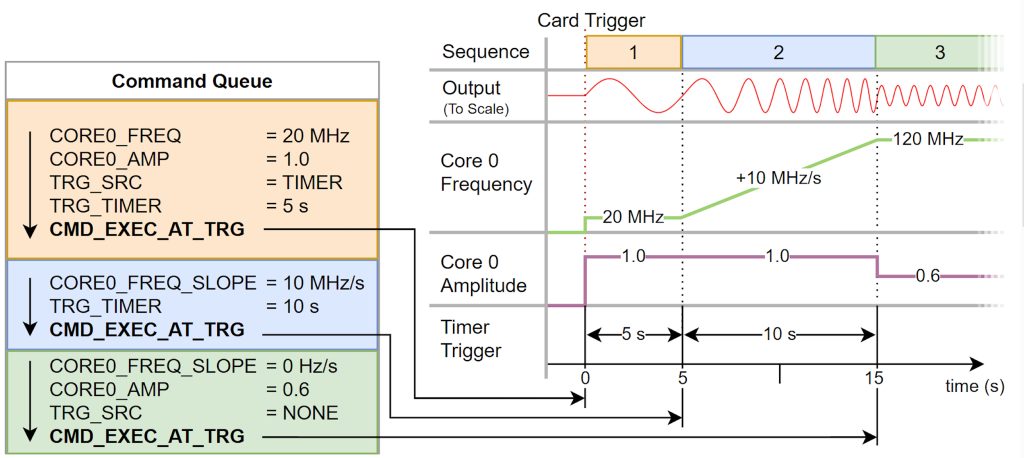

Spectrum Instrumentation has released a new firmware option for its range of versatile 16-bit Arbitrary Waveform Generators (AWGs) with sampling rates up to 1.25 GS/s and bandwidths up to 400 MHz. The new option allows users to define 20 DDS cores per AWG card, which can be routed to the hardware output channels. Each DDS core (sine wave) can be programmed for frequency, amplitude, phase, frequency slope and amplitude slope. This enables, for example, the control of lasers through acousto-optic deflectors and modulators (AODs and AOMs), as often used in quantum experiments, with just a few simple commands instead of large data array calculations. The DDS output can be synchronized with external trigger events or by a programmable timer with a resolution of 6.4 ns.

DDS (Direct Digital Synthesis) is a method for generating arbitrary periodic sine waves from a single, fixed-frequency reference clock. It is widely used in a variety of signal generation applications. The DDS functionality implemented on Spectrum Instrumentation’s AWGs is based on the principle of adding multiple ‘DDS cores’ to generate a multi-carrier (multi-tone) signal, with each carrier having its own well-defined frequency, amplitude and phase.
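The DDS principle can be sketched in a few lines. Each core keeps a phase accumulator that advances by a tuning word every clock tick, the accumulator value is mapped to a sine, and the cores are summed into one multi-tone output. This is a generic textbook model, not Spectrum's firmware or SDK. The 32-bit accumulator width and the names DdsCore, tuning_word and synthesize are assumptions, and math.sin stands in for the sine lookup table a hardware DDS would use.

```python
import math
from dataclasses import dataclass

ACC_BITS = 32  # assumed phase-accumulator width


@dataclass
class DdsCore:
    freq_hz: float
    amplitude: float
    phase_rad: float = 0.0


def tuning_word(freq_hz: float, clock_hz: float, bits: int = ACC_BITS) -> int:
    """Phase increment per clock tick: f_out = word * clock / 2**bits."""
    return round(freq_hz * (1 << bits) / clock_hz)


def synthesize(cores: list, clock_hz: float, n_samples: int, bits: int = ACC_BITS) -> list:
    """Sum of several DDS cores, one output sample per clock tick."""
    modulus = 1 << bits
    words = [tuning_word(c.freq_hz, clock_hz, bits) for c in cores]
    # Start each accumulator at the core's initial phase, expressed in accumulator counts.
    accs = [round(c.phase_rad / (2 * math.pi) * modulus) % modulus for c in cores]
    out = []
    for _ in range(n_samples):
        sample = 0.0
        for i, core in enumerate(cores):
            sample += core.amplitude * math.sin(2 * math.pi * accs[i] / modulus)
            accs[i] = (accs[i] + words[i]) % modulus  # wrap-around = phase modulo 2*pi
        out.append(sample)
    return out
```

The tuning word sets the frequency resolution. With a 32-bit accumulator, one step equals clock/2³² (about 0.29 Hz at 1.25 GHz), so tuning_word(1e6, 1.25e9) returns 3435974.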

Advantages of using DDS for arbitrary waveform generators

With the ability to switch between the normal AWG mode (which generates waveforms out of pre-programmed data) and the DDS mode (which needs only a few commands to generate sine wave carriers), the Spectrum AWGs are highly versatile and can be adapted to almost any application. In DDS mode, the AWG acts as a base for the multi-tone DDS. The unit’s built-in 4 GByte of memory and fast DMA transfer mode then allow DDS commands to be streamed at rates as high as 10 million commands per second. This unique capability provides the flexibility to perform user-defined slopes (e.g., s-shaped) as well as various modulation types (e.g., FM and AM) with simple, easy-to-use DDS commands.

DDS in Quantum Experiments

In DDS mode, only a few commands are needed to, e.g., generate a sine wave (orange block), accelerate the frequency (blue block) and lower the amplitude (green block).

For years now, Spectrum AWGs have been successfully used worldwide in pioneering quantum research experiments. Since 2021, Spectrum Instrumentation has been part of the BMBF (German Federal Ministry of Education and Research) funding program ‘quantum technologies – from basic research to market’ through the Rymax One consortium. The aim of this consortium is to build a Quantum Optimizer. The development of the DDS option was based on feedback from the consortium partners and other research institutes worldwide.

The flexibility and fast streaming mode of Spectrum’s AWGs, which also enables data to be streamed straight from a GPU, allow qubits to be controlled directly from a PC. While using an AWG in this way offers full control of the generated waveforms, the drawback is that huge amounts of data need to be calculated. This slows the critical decision-making loop. In contrast, the versatile multi-tone DDS functionality greatly reduces the amount of data that must be transferred, while still keeping full control. All the key functionality required for quantum research is built in. With just a single command, users can apply intrinsic dynamic linear slope functions to produce extremely smooth changes to frequency and amplitude.
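In essence, linear frequency and amplitude slopes add a constant increment to the frequency and amplitude at every sample while keeping the phase continuous. The floating-point sketch below illustrates that idea. It is not the AWG's command set, and the function name and parameters are hypothetical.

```python
import math


def ramped_tone(f0_hz, f_slope_hz_per_s, a0, a_slope_per_s, sample_rate_hz, n_samples):
    """Tone whose frequency and amplitude change linearly with time, with continuous phase."""
    dt = 1.0 / sample_rate_hz
    phase = 0.0
    out = []
    for n in range(n_samples):
        t = n * dt
        freq = f0_hz + f_slope_hz_per_s * t          # linear frequency slope
        amp = max(0.0, a0 + a_slope_per_s * t)       # linear amplitude slope, clamped at zero
        out.append(amp * math.sin(phase))
        # Advance phase by the instantaneous frequency, so the ramp has no phase jumps.
        phase = (phase + 2 * math.pi * freq * dt) % (2 * math.pi)
    return out
```

The phase is the running sum of the instantaneous frequency, so the output has no phase discontinuities as the frequency ramps. That continuity is what makes such sweeps smooth.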

DDS controls waveforms in Test, Measurement and Communications

In many kinds of testing systems, it is important to produce and readily control accurate waveforms. The DDS option provides an easy and programmable way for users to produce trains of waveforms, frequency sweeps or finely tunable references of various frequencies and profiles. Applications that require the fast frequency switching and fine frequency tuning that DDS offers are widespread. They can be found in industrial, medical, and imaging systems, network analysis or even communication technology, where data is encoded using phase and frequency modulation on a carrier.

Availability of DDS option

23 different AWGs are able to use the new DDS firmware option. They offer 16-bit resolution, up to 1.25 GS/s speed and up to 32 channels.

The DDS option is available now for the full range of M4i.66xx PCIe cards, M4x.66xx PXIe modules, portable LXI/Ethernet DN2.66x units and multi-channel desktop LXI/Ethernet DN6.66xx products. All previously purchased 66xx series products can be equipped with the new option through a simple firmware update. Programming can be done using the existing driver SDKs that are included in the delivery. Examples are available for Python, C++, MATLAB, LabVIEW and many more.

About Spectrum Instrumentation

Spectrum Instrumentation, founded in 1989, uses a unique modular concept to design and produce a wide range of more than 200 digitizers and generator products as PC cards (PCIe and PXIe) and stand-alone Ethernet units (LXI). In over 30 years, Spectrum has gained customers all around the world, including many A-brand industry leaders and practically all prestigious universities. The company, headquartered near Hamburg, Germany, is known for its 5-year warranty and outstanding support that comes directly from the design engineers. More information about Spectrum can be found at www.spectrum-instrumentation.com

The post DDS Option for high-speed AWGs generates up to 20 sine waves appeared first on ELE Times.

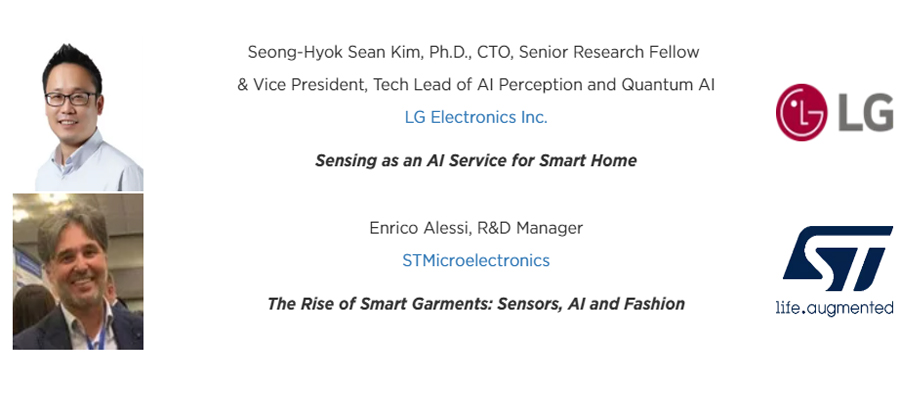

MSTC 2024 to Spotlight Latest MEMS and Sensors Advances Driven by Artificial Intelligence

Keynote speakers at the SEMI MEMS and Sensors Technical Congress (MSTC 2024) will highlight smart home and smart garment innovations driven by artificial intelligence (AI) as industry visionaries and experts gather May 1-2 at Covel Commons at University of California, Los Angeles (UCLA) to discuss the latest MEMS and sensors trends and innovations. Registration is open.

Themed “Sensorizing Our World: Technology Driving Global Solutions,” MSTC 2024 will feature keynotes and technical sessions on critical MEMS and sensors topics. Highlights also include posters showcasing novel applications from the next generation of innovators and networking opportunities for participants to help grow their businesses.

Sponsored by the SEMI MEMS & Sensors Industry Group (MSIG), MSTC 2024 will offer a deep dive into how to bring sensor products to market, from design through fabrication, testing and packaging to end-use applications. Industry experts will explore the software and systems needed to expand both legacy and emerging MEMS and sensors into new markets and business opportunities.

MSTC 2024 Keynote Speakers

MSTC 2024 Technical Sessions

MSTC 2024 will also showcase MEMS and sensors applications in the following areas:

- AI-driven Sensor Systems

- MEMS Emerging Technology & Devices

- New Frontiers in MEMS & Sensors Fabrication

- Revolutionary Sensors for Biomedical Applications

- Smart Environmental Sensors

- Positioning, Navigation & Timing

- Market and Technology Trends and Forecast

- Automotive Sensor Tech Showdown

- UCLA lab tours

- Networking reception showcasing technology application posters created by students from UCLA

The post MSTC 2024 to Spotlight Latest MEMS and Sensors Advances Driven by Artificial Intelligence appeared first on ELE Times.

300mm Fab Equipment Spending Forecast to Reach Record $137 Billion in 2027, SEMI Reports

Global 300mm fab equipment spending for front-end facilities is forecast to reach a record US$137 billion in 2027 after topping US$100 billion for the first time by 2025, on the strength of the memory market recovery and strong demand for high-performance computing and automotive applications, SEMI highlighted today in its quarterly 300mm Fab Outlook to 2027 report.

Worldwide 300mm fab equipment investment is expected to increase 20% to US$116.5 billion in 2025 and 12% to US$130.5 billion in 2026 before hitting a record high in 2027.
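As a quick arithmetic check of these growth figures, the sketch below derives the 2024 baseline implied by the quoted 20% increase and recomputes the year-over-year growth from the quoted totals. The variable names are illustrative; only the dollar amounts and percentages come from the report.

```python
# Quoted 300mm fab equipment spending, in US$ billions.
spend_2025 = 116.5   # quoted as a 20% increase over 2024
spend_2026 = 130.5   # quoted as a 12% increase over 2025
spend_2027 = 137.0   # quoted record for 2027

# 2024 baseline implied by the 20% increase (not stated directly in the report).
implied_2024 = spend_2025 / 1.20

# Growth rates recomputed from the quoted totals.
growth_2026 = spend_2026 / spend_2025 - 1
growth_2027 = spend_2027 / spend_2026 - 1

print(f"implied 2024 spending: US${implied_2024:.1f} billion")
print(f"2025 -> 2026 growth: {growth_2026:.1%}")
print(f"2026 -> 2027 growth: {growth_2027:.1%}")
```

The implied 2024 level of roughly US$97.1 billion is consistent with spending topping US$100 billion for the first time in 2025, and the final step to the US$137 billion record works out to about 5% growth.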

“Projections for the steepening ramp of 300mm fab equipment spending in the coming years reflect the production capacity needed to meet growing demand for electronics across a diverse range of markets as well as a new wave of applications spawned by artificial intelligence (AI) innovation,” said Ajit Manocha, SEMI President and CEO. “The newest SEMI report also highlights the critical importance of increases in government investments in semiconductor manufacturing to bolster economies and security worldwide. This trend is expected to help significantly narrow the equipment spending gap between re-emerging and emerging regions and the historical top-spending regions in Asia.”

Regional Growth

The SEMI 300mm Fab Outlook to 2027 report shows China continuing to lead fab equipment spending with US$30 billion in investments in each of the next four years, fueled by government incentives and domestic self-sufficiency policies.

Supported by the expansion of leading-edge nodes for high-performance computing (HPC) and the memory market recovery, Taiwanese and Korean chip suppliers are increasing their equipment investments. Taiwan is expected to rank second in equipment spending at US$28 billion in 2027, up from US$20.3 billion in 2024, while Korea is expected to rank third at US$26.3 billion in 2027, up from US$19.5 billion in 2024.

The Americas is projected to double its 300mm fab equipment investment from US$12 billion in 2024 to US$24.7 billion in 2027, while spending in Japan, Europe & the Middle East, and Southeast Asia is expected to reach US$11.4 billion, US$11.2 billion, and US$5.3 billion in 2027, respectively.
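Summing the 2027 regional projections gives a rough cross-check against the US$137 billion global total. The sketch assumes China's 2027 figure is the US$30 billion annual level cited for each of the next four years; the dictionary layout is an illustrative choice, not part of the report.

```python
# 2027 regional 300mm fab equipment spending quoted in the report (US$ billions).
regional_2027 = {
    "China": 30.0,  # assumed: the US$30B/year level cited for the next four years
    "Taiwan": 28.0,
    "Korea": 26.3,
    "Americas": 24.7,
    "Japan": 11.4,
    "Europe & Middle East": 11.2,
    "Southeast Asia": 5.3,
}

total_2027 = sum(regional_2027.values())
# Ratio behind the "double" claim for the Americas (US$12B in 2024).
americas_ratio = regional_2027["Americas"] / 12.0

print(f"sum of regional figures: US${total_2027:.1f} billion")
print(f"Americas 2027/2024 ratio: {americas_ratio:.2f}")
```

The regional figures add up to about US$136.9 billion, in line with the rounded US$137 billion headline, and the Americas ratio of roughly 2.06 matches the description of spending doubling.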

Segment Growth

Foundry segment spending is expected to decline 4% to US$56.6 billion this year due in part to the expected slowdown in mature nodes (>10nm) investment, though the segment continues to log the highest growth among all segments to meet market demand for generative AI, automotive and intelligent edge devices. The segment’s equipment spending is forecast to post a 7.6% compound annual growth rate (CAGR) to US$79.1 billion from 2023 to 2027.
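The foundry CAGR can be reproduced with the standard formula CAGR = (end / start)^(1 / years) - 1. The 2023 starting value is not stated directly, so this sketch assumes it can be backed out from the 2024 figure of US$56.6 billion, which is described as a 4% decline; the helper name `cagr` is illustrative.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two values `years` apart."""
    return (end / start) ** (1 / years) - 1

# Assumption: 2024 foundry spend (US$56.6B) is 4% below 2023, so 2023 = 56.6 / 0.96.
foundry_2023 = 56.6 / 0.96
foundry_2027 = 79.1  # quoted 2027 foundry spend, US$ billions

foundry_cagr = cagr(foundry_2023, foundry_2027, 2027 - 2023)

print(f"implied 2023 foundry spend: US${foundry_2023:.1f} billion")
print(f"implied 2023-2027 CAGR: {foundry_cagr:.1%}")
```

The implied 2023 level of about US$59.0 billion yields a CAGR of roughly 7.6%, agreeing with the figure in the report.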

The need for greater data throughput, crucial for AI servers, is driving strong demand for high-bandwidth memory (HBM) and spurring increased investment in memory technology. Among all segments, memory is ranked second and is expected to post US$79.1 billion in equipment investments in 2027, a 20% CAGR from 2023. DRAM equipment spending is expected to rise to US$25.2 billion in 2027, a 17.4% CAGR, while 3D NAND investment is projected to reach US$16.8 billion in 2027, a 29% CAGR.

The Analog, Micro, Opto, and Discrete segments are projected to increase 300mm fab equipment investments to US$5.5 billion, US$4.3 billion, US$2.3 billion, and US$1.6 billion in 2027, respectively.

The SEMI 300mm Fab Outlook to 2027 report lists 405 facilities and lines globally, including 75 high-probability facilities expected to start operation during the four years beginning in 2024. The report reflects 358 updates and 26 new fab/line projects since its last publication in December 2023.

For more information on the report or to subscribe to SEMI market data, visit SEMI Market Data or contact the SEMI Market Intelligence Team (MIT) at mktstats@semi.org.

The post 300mm Fab Equipment Spending Forecast to Reach Record $137 Billion in 2027, SEMI Reports appeared first on ELE Times.

Infineon to complete limited Share Buyback Program serving fulfillment of obligations under existing employee participation programs

Infineon Technologies AG has successfully completed its Share Buyback Program 2024, announced on 26 February 2024 in accordance with Article 5(1)(a) of Regulation (EU) No 596/2014 and Article 2(1) of Delegated Regulation (EU) No 2016/1052. As part of the Share Buyback Program 2024, a total of 7,000,000 shares (ISIN DE0006231004) were acquired. The total purchase price of the repurchased shares was € 232,872,668. The average purchase price paid per share was € 33.27.
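The average purchase price follows directly from the two totals stated above; this short calculation divides the total purchase price by the number of repurchased shares. Variable names are illustrative.

```python
# Figures stated in the announcement.
total_price_eur = 232_872_668   # total purchase price of repurchased shares
shares_repurchased = 7_000_000  # shares acquired under the 2024 program

avg_price = total_price_eur / shares_repurchased
print(f"average purchase price per share: EUR {avg_price:.2f}")
```

Rounded to the cent, the result matches the stated average of € 33.27 per share.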

Alexander Foltin, Head of Finance, Treasury and Investor Relations of Infineon

The buyback was carried out on behalf of Infineon by an independent credit institution via Xetra trading on the Frankfurt Stock Exchange, serving the sole purpose of allocating shares to employees of the company or affiliated companies, members of the Management Board of the company as well as members of the management board and the board of directors of affiliated companies as part of the existing employee participation programs.

The post Infineon to complete limited Share Buyback Program serving fulfillment of obligations under existing employee participation programs appeared first on ELE Times.

UiPath Unveils New Family of LLMs at AI Summit to Empower Enterprises to Harness Full Capabilities of GenAI

Company introduces Context Grounding to augment GenAI models with business-specific data, an IBM watsonx.ai connector, and updates for Autopilot

UiPath, a leading enterprise automation and AI software company, recently announced several new generative AI (GenAI) features in its platform designed to help enterprises realize the full potential of AI with automation by accessing powerful, specialized AI models tailored to their challenges and most valuable use cases. UiPath showcased its latest capabilities at the virtual AI Summit that took place on March 19th, 2024.

The UiPath Business Automation Platform offers end-to-end automation for business processes. Business leaders seeking to embed AI in their automation programs must keep four key factors top of mind: business context, AI model flexibility, actionability, and trust. The new AI features of the UiPath Platform address these areas, equipping customers with the tools needed to enhance the performance and accuracy of GenAI models and tools, and to tackle diverse business challenges more easily with AI and automation.

“Businesses need an assortment of AI models, the best in class for every task, to achieve their full potential. Our new family of UiPath LLMs, along with Context Grounding to optimize GenAI models with business-specific data, provide accuracy, consistency, predictability, time to value, and empower customers to transform their business environments with the latest GenAI capabilities on the market,” said Graham Sheldon, Chief Product Officer at UiPath. “These new features ensure that AI has the integrations, data, context, and ability to take action in the enterprise with automation to meet our customers’ unique needs.”

At the AI Summit, UiPath announced:

Generative Large Language Models (LLMs)

The new LLMs, DocPATH and CommPATH, give businesses models trained extensively for two specific tasks: document processing and communications, respectively. General-purpose GenAI models like GPT-4 struggle to match the performance and accuracy of models trained specifically for a given task. Instead of relying on imprecise and time-consuming prompt engineering, DocPATH and CommPATH provide businesses with extensive tools to customize AI models to their exact requirements, allowing them to understand any document and a huge variety of message types.

Context Grounding to augment GenAI models with business-specific data

Businesses need a safe, reliable, low-touch way to use their business data with AI models. To address this need, UiPath is introducing Context Grounding, a new feature within the UiPath AI Trust Layer that will enter private preview in April. UiPath Context Grounding helps businesses improve the accuracy of GenAI models by providing prompts and a foundation of business context through retrieval-augmented generation (RAG). The system extracts information from company-specific datasets, such as a knowledge base or internal policies and procedures, to create more accurate and insightful responses.

Context Grounding makes business data LLM-ready by converting it into an optimized format that can easily be indexed, searched, and injected into prompts to improve GenAI predictions. Context Grounding will enhance all UiPath GenAI experiences in UiPath Autopilots, GenAI Activities, and intelligent document processing (IDP) products such as Document Understanding.
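UiPath does not describe Context Grounding's internals beyond retrieval-augmented generation, so the following is only a generic sketch of the index, search and inject pattern described above. It uses a toy bag-of-words cosine similarity in place of a real embedding model, stops before any LLM call, and every name in it (`ToyIndex`, `grounded_prompt`, the sample policies) is hypothetical, not a UiPath API.

```python
import math
import re
from collections import Counter

def tokenize(text: str) -> list[str]:
    """Lowercase word tokens; a stand-in for a real embedding model."""
    return re.findall(r"[a-z0-9]+", text.lower())

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

class ToyIndex:
    """Hypothetical in-memory index over business documents."""

    def __init__(self, docs: list[str]) -> None:
        self.docs = docs
        # "Index" step: convert each document into a searchable vector.
        self.vectors = [Counter(tokenize(d)) for d in docs]

    def search(self, query: str, k: int = 2) -> list[str]:
        """"Search" step: return the k documents most similar to the query."""
        q = Counter(tokenize(query))
        ranked = sorted(range(len(self.docs)),
                        key=lambda i: cosine(q, self.vectors[i]), reverse=True)
        return [self.docs[i] for i in ranked[:k]]

def grounded_prompt(index: ToyIndex, question: str, k: int = 2) -> str:
    """"Inject" step: place retrieved context in the prompt ahead of the question."""
    context = "\n".join(f"- {doc}" for doc in index.search(question, k))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {question}"
    )

# Hypothetical company-specific policies acting as the knowledge base.
policies = [
    "Travel expenses above 500 EUR require manager approval.",
    "Employees accrue 25 days of paid vacation per year.",
    "Invoices must be paid within 30 days of receipt.",
]
index = ToyIndex(policies)
print(grounded_prompt(index, "How many vacation days do employees get?", k=1))
```

In a production RAG system the bag-of-words vectors would typically be replaced by learned embeddings and a vector store, and the resulting prompt would be sent to an LLM; the retrieve-then-inject structure stays the same.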

GenAI Connectors & IBM watsonx.ai

IBM used the UiPath Connector Builder to create a unique watsonx.ai connector. The new connector provides UiPath customers with access to multiple foundational models currently available in watsonx.ai. GenAI use cases, such as summarization, Q&A, task classification, and optimization for chat, can be quickly integrated into new and existing UiPath workflows and frameworks. IBM watsonx customers can also access broader UiPath platform capabilities, such as Test Automation, Process Mining and Studio workflows, all within a low/no-code UX environment. IBM’s industry-leading consulting capabilities, coupled with the UiPath Business Automation Platform, will help support successful GenAI adoption, including the right strategy for infusing AI into more powerful and complex automated workflows.

“IBM and UiPath strongly believe that AI and GenAI are rapidly changing the entire landscape of business globally,” said Tom Ivory, Senior Partner, Vice President, Global Leader of Global Automation at IBM. “We are excited that IBM’s watsonx.ai and UiPath’s Connector Builder together now help create insights, and efficiencies that result in real value for our customers.”

The IBM Watson Connector is now generally available through the Integration Service Connector Catalog.

Autopilot for Developers and Testers

UiPath Autopilot is a suite of GenAI-powered experiences across the platform that make automation builders and users more productive. Autopilot experiences for Developers and Testers are now available in preview, with general availability targeted for June. Over 1,500 organizations are using UiPath Autopilot, resulting in over 7,000 generations and over 5,500 expressions generated per week.

Autopilot for Developers empowers both professional and citizen automation developers to create automation, code, and expressions with natural language, accelerating every aspect of building automation.

Autopilot for Testers transforms the testing lifecycle, from planning to analysis, reducing the burden of manual testing and allowing enterprise testing teams to test more applications faster. Autopilot for Testers empowers testing teams to rapidly generate step-by-step test cases from requirements and any other source documents, generate automation from test steps, and surface insights from test results, allowing testers to identify the root cause of issues in minutes, not hours or days.

Prebuilt GenAI Activities for faster time-to-value

New prebuilt GenAI Activities use the UiPath AI Trust Layer and are easy to access and develop with, letting teams bring high-quality AI predictions into automation workflows for faster time to value. GenAI Activities provide access to a growing collection of GenAI use cases, such as text completion for emails, categorization, image detection, language translation, and filtering out personally identifiable information (PII), enabling enterprises to do more with GenAI. With GenAI Activities, enterprises can reduce build time and gain a competitive edge by using GenAI to customize the customer experience, optimize supply chains, forecast demand, and make informed decisions.

The post UiPath Unveils New Family of LLMs at AI Summit to Empower Enterprises to Harness Full Capabilities of GenAI appeared first on ELE Times.

Expanded Semiconductor Assembly and Test Facility Database Tracks OSAT and Integrated Device Manufacturers in 670 Facilities, SEMI and TechSearch International Report

New edition of database tracks 33% more facilities and highlights advanced packaging and factory certifications

The new edition of the Worldwide Assembly & Test Facility Database expands coverage to 670 facilities, 33% more than the previous release, including 500 outsourced semiconductor assembly and test (OSAT) service providers and 170 integrated device manufacturer (IDM) facilities, SEMI and TechSearch International announced today. The database is the only commercially available listing of assembly and test suppliers that provides comprehensive updates on packaging and testing services offered by the semiconductor industry.

The updated database includes factory certifications in critical areas such as quality, environmental, security and safety as well as data reflecting automotive quality certifications obtained by each site. The new edition also highlights advanced packaging offerings by each factory, defined as flip chip bumping and assembly, fan-out and fan-in wafer-level packaging (WLP), through silicon via (TSV), 2.5D and 3D capability.