Feed aggregator

Shifting from preventive maintenance to predictive maintenance

Courtesy: ROHM

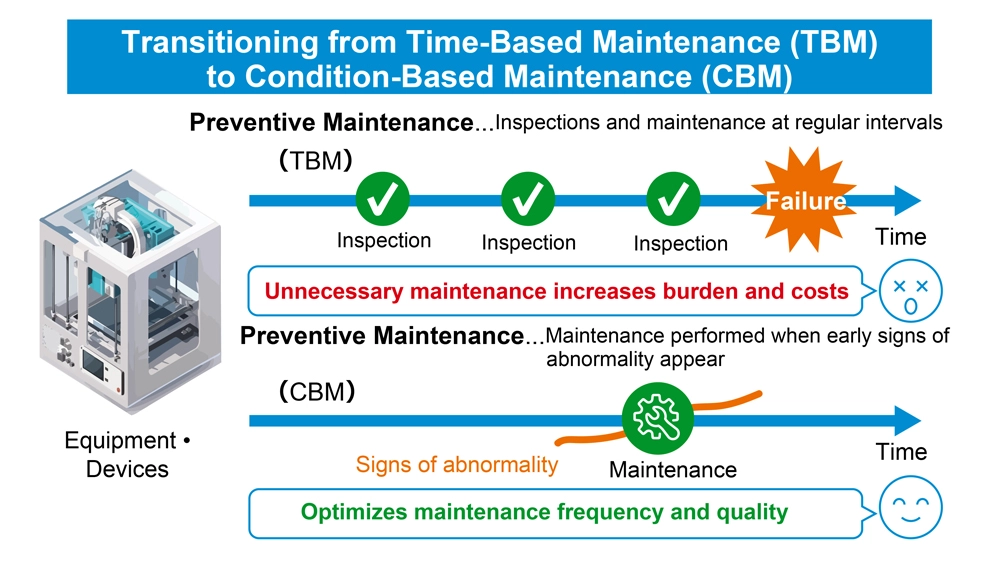

In the manufacturing industry, equipment maintenance has traditionally relied on preventive maintenance (TBM: Time-Based Maintenance), where inspections and part replacements are carried out at predetermined intervals. While effective to a degree, this approach often requires shutting down equipment that is still operating normally, resulting in unnecessary downtime, higher operating costs, and increased workload on production sites. In some cases, excessive inspection tasks can even introduce the problems they are intended to prevent—such as mistakes and human error.

Consequently, many manufacturers are now transitioning to predictive maintenance (CBM: Condition-Based Maintenance), which monitors equipment conditions in real time and performs maintenance only when early signs of abnormality are detected.

This white paper examines Solist-AI, ROHM’s AI-driven solution that enhances the efficiency and reliability of predictive maintenance.

- Transitioning from Time-Based Maintenance (TBM) to Condition-Based Maintenance (CBM)

The primary difference between preventive maintenance (TBM) and predictive maintenance (CBM) lies in how maintenance timing is determined. TBM schedules inspections and part replacements at fixed time intervals, independent of the equipment’s actual condition. In contrast, CBM performs maintenance only when truly necessary. AI plays a critical role in CBM by continuously monitoring parameters such as temperature, vibration, and current through sensors, processing large volumes of time-series data, and detecting early signs of abnormality from subtle pattern deviations.
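The condition-based decision described above can be sketched in a few lines. This is only an illustration of the idea, not ROHM's algorithm: the function names, the sample vibration values, and the three-sigma threshold are all assumptions chosen for the example.

```python
from statistics import mean, stdev

def learn_baseline(normal_readings):
    """Return (mean, standard deviation) of readings logged while the machine is healthy."""
    return mean(normal_readings), stdev(normal_readings)

def needs_maintenance(reading, baseline, threshold=3.0):
    """Flag a reading that deviates from the baseline by more than `threshold` sigmas."""
    mu, sigma = baseline
    return abs(reading - mu) / sigma > threshold

# Hypothetical vibration amplitudes (arbitrary units) recorded during normal operation.
normal = [1.02, 0.98, 1.01, 0.99, 1.00, 1.03, 0.97, 1.00]
baseline = learn_baseline(normal)

print(needs_maintenance(1.01, baseline))  # False: within normal scatter
print(needs_maintenance(1.40, baseline))  # True: far outside the learned pattern
```

Under TBM the maintenance trigger would be a calendar date; here it is the measured deviation, so a healthy machine is never stopped just because an interval has elapsed.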

By adopting predictive maintenance, manufacturers can avoid unnecessary shutdowns of equipment that is still operating normally. AI-driven optimisation of maintenance timing reduces operational costs and workload, while also preventing excessive inspections that may introduce procedural or human errors. Furthermore, because CBM ensures consistent maintenance quality without relying on technician intuition or experience, it is gaining attention as an effective solution to on-site challenges such as the shortage of skilled workers and persistent labour shortages.

- Shifting from Cloud-Based to Endpoint-Based Architecture

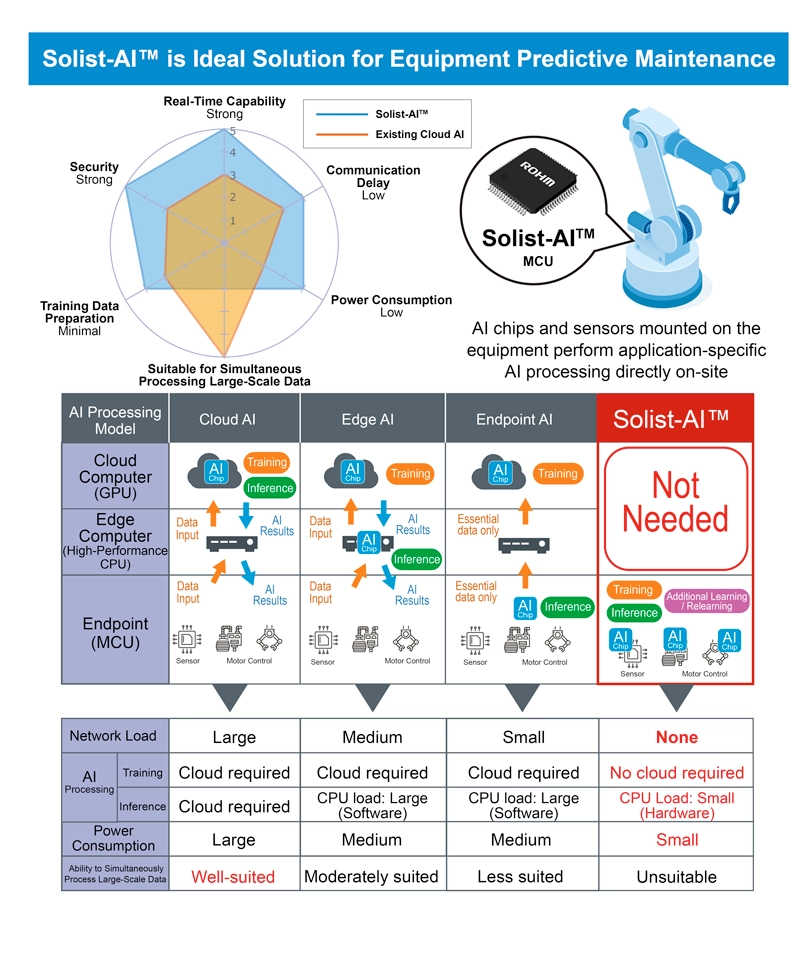

Conventional cloud-based AI systems face several challenges, including communication latency, the complexity of building and maintaining network infrastructure, and the added burden of implementing security measures to prevent data leaks during transmission.

These systems also require large volumes of training data to be collected and prepared in advance, making it difficult to account for variations in operating environments and equipment-specific differences at each site. High-precision AI processing also demands significant computational resources such as CPUs and GPUs, which inevitably increases power consumption.

This has led to the growing adoption of endpoint AI as a more practical alternative. By performing both learning and inference directly on devices equipped with sensors and MCUs, endpoint AI can adapt to the characteristics of individual machines, perform real-time processing, and maintain exceptionally low power consumption. In addition, because no data is transmitted to the cloud, it ensures a high level of security.

To support this paradigm, ROHM developed Solist-AI, an on-device AI solution optimised for edge computing, which is the core technology behind predictive maintenance. The name is derived from ‘Solution with On-device Learning IC for Standalone AI.’ Just like a ‘solist’ in music who performs independently, Solist-AI completes the entire AI workflow, from learning to inference, locally on the device without relying on the cloud or external networks.


While Solist-AI delivers significant advantages for on-device AI, it is not designed for the large-scale data processing or image-based workloads that conventional AI architectures (cloud, edge, or endpoint types) can handle. For advanced analytics, such as detailed image diagnostics, cloud-based AI remains the preferred solution.

For applications that do not require advanced analysis, Solist-AI offers a major benefit: substantial cost savings by eliminating expenses associated with network infrastructure and cloud service fees.

And with ongoing learning capabilities, Solist-AI can continuously update its understanding of equipment deterioration over time. This greatly improves responsiveness to both normal and abnormal conditions as machines age, making it a compelling alternative to traditional AI solutions.

- Solist-AI: The Optimal Solution for Predictive Maintenance

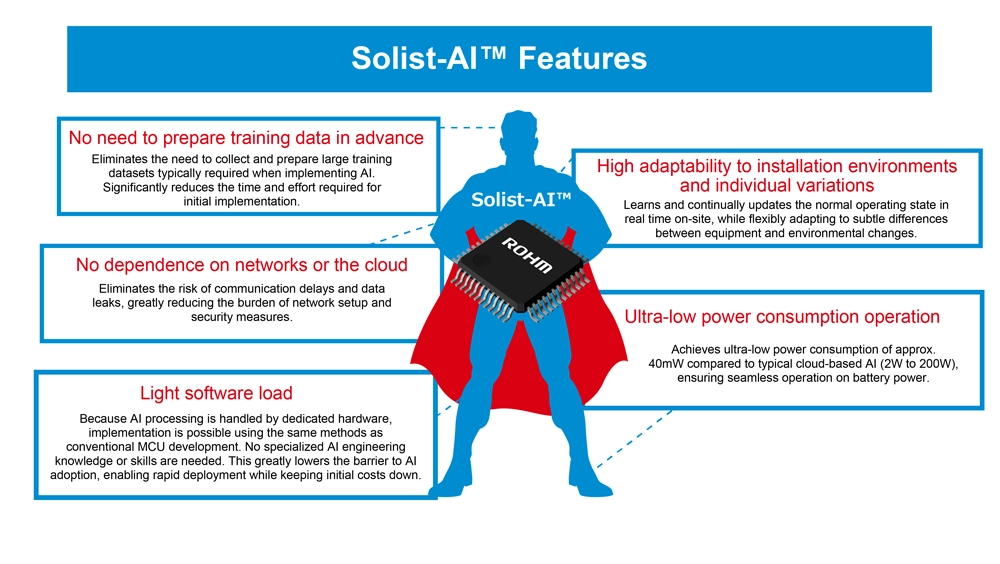

Unlike general-purpose AI processors, Solist-AI is purpose-built for targeted use cases such as failure prediction and anomaly detection. The ability to train and fine-tune models directly on-site based on the actual operating environment ensures flexible adaptation to equipment variations and changing ambient conditions.

At the heart of the solution is the Solist-AI MCU, which integrates ROHM’s proprietary AI accelerator AxlCORE-ODL (On-Device Learning). This accelerator performs a three-layer neural network and FFT (Fast Fourier Transform) processing in dedicated hardware, enabling real-time detection and notification of various anomalies and operational changes in the field.
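To make the role of the frequency transform concrete, the sketch below uses a naive discrete Fourier transform to show how a new vibration component stands out in the spectrum. It is not the AxlCORE-ODL implementation, which runs an FFT in dedicated hardware; the signals are synthetic and every name here is an assumption made for illustration.

```python
import cmath
import math

def dft_magnitudes(samples):
    """Magnitude of each DFT bin up to the Nyquist bin (naive O(N^2) transform)."""
    n = len(samples)
    mags = []
    for k in range(n // 2 + 1):
        acc = sum(x * cmath.exp(-2j * math.pi * k * i / n) for i, x in enumerate(samples))
        mags.append(abs(acc) / n)
    return mags

def dominant_bin(samples):
    """Index of the strongest non-DC frequency bin."""
    mags = dft_magnitudes(samples)
    return max(range(1, len(mags)), key=lambda k: mags[k])

N = 64
# Synthetic "healthy" vibration: a single tone in bin 4.
healthy = [math.sin(2 * math.pi * 4 * i / N) for i in range(N)]
# Synthetic "worn" vibration: the same tone plus a stronger component in bin 11.
worn = [h + 1.5 * math.sin(2 * math.pi * 11 * i / N) for i, h in enumerate(healthy)]

print(dominant_bin(healthy))  # 4
print(dominant_bin(worn))     # 11
```

In a real system the spectrum would typically be fed to the neural network as input features rather than reduced to a single dominant bin.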

The biggest advantage of Solist-AI lies in its simplicity as a standalone AI system, requiring only a sensor and MCU. Whereas conventional AI solutions depend on cloud access or network connectivity, Solist-AI operates completely independent of any network infrastructure. This allows it to be easily retrofitted into existing equipment while eliminating concerns related to communication delays and data leakage.

Using the built-in AxlCORE-ODL accelerator, Solist-AI learns from and runs inference on sensor data, such as vibration, temperature, and current, in real time. By quantifying subtle deviations from normal patterns, it identifies and alerts users to early signs of abnormality.

Operating at an exceptionally low power level of approximately 40 mW, Solist-AI stands in stark contrast to conventional cloud-based AI systems that typically consume anywhere from 2 W to 200 W. This makes it well-suited for battery-powered devices and ideal for retrofitting into existing equipment. As a result, it enhances maintenance and operational efficiency across a wide range of industrial assets, including robots, motors, and cooling fans.
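As a rough feel for what these power figures mean on a battery, the arithmetic can be sketched as follows. The 10 Wh battery capacity is a hypothetical value chosen for illustration, and the calculation ignores sensor power, converter losses, and self-discharge.

```python
def runtime_hours(battery_wh, load_w):
    """Ideal runtime of a battery of `battery_wh` watt-hours at a constant load."""
    return battery_wh / load_w

BATTERY_WH = 10.0                      # hypothetical battery capacity
SOLIST_W = 0.040                       # approx. 40 mW, from the text
CLOUD_MIN_W, CLOUD_MAX_W = 2.0, 200.0  # range quoted in the text

print(f"Solist-AI runtime: {runtime_hours(BATTERY_WH, SOLIST_W):.0f} h")
print(f"2 W system runtime: {runtime_hours(BATTERY_WH, CLOUD_MIN_W):.0f} h")
print(f"Power ratio: {CLOUD_MIN_W / SOLIST_W:.0f}x to {CLOUD_MAX_W / SOLIST_W:.0f}x")
```

With these assumptions the 40 mW device runs for about 250 hours where a 2 W system lasts 5 hours, a ratio of 50x at the low end of the quoted range and 5000x at the high end.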

In addition, a variety of support tools are available, including Solist-AI Sim, which enables preliminary verification of AI suitability, and Solist-AI Scope, which offers real-time visualisation of AI behaviour. These tools enable implementation and evaluation without requiring specialised AI expertise, greatly lowering the barrier to adoption.

Example of Integration with AE (Acoustic Emission) Sensors

Combining AE sensors and Solist-AI enables high-precision detection of abnormalities that are extremely difficult to identify manually, such as insufficient bearing lubrication or microscopic surface damage. The system analyses multiple AE sensor outputs (including peak amplitude, average value, energy, and event count) across multiple dimensions. The AI numerically quantifies the degree of abnormality, allowing early detection of emerging issues and supporting timely, condition-based maintenance. Because the progression of anomaly levels can be understood visually without specialised expertise, the solution is well suited to real-world on-site operation.
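The four AE outputs named above can be turned into a single abnormality number in many ways. The sketch below uses one simple option, a normalised distance from a healthy baseline, purely as an illustration. It is not the Solist-AI neural network; the waveforms, the event threshold, and the definition of an "event" as an upward threshold crossing are assumptions.

```python
import math

def ae_features(waveform, threshold):
    """Return [peak amplitude, average rectified value, energy, event count]."""
    rectified = [abs(v) for v in waveform]
    peak = max(rectified)
    average = sum(rectified) / len(rectified)
    energy = sum(v * v for v in waveform)
    # Count an event each time the rectified signal rises above the threshold.
    events = sum(1 for prev, cur in zip(rectified, rectified[1:]) if prev <= threshold < cur)
    return [peak, average, energy, events]

def abnormality(features, baseline):
    """Euclidean distance from the baseline, each feature scaled by its baseline value."""
    return math.sqrt(sum(((f - b) / (abs(b) or 1.0)) ** 2 for f, b in zip(features, baseline)))

# Hypothetical AE waveforms (arbitrary units).
healthy = [0.1, -0.2, 0.6, -0.1, 0.2, -0.1, 0.1, -0.2]
damaged = [0.1, -0.9, 0.2, 1.2, -0.3, 0.8, -0.1, 0.7]

baseline = ae_features(healthy, threshold=0.5)
print(abnormality(baseline, baseline))                          # 0.0
print(round(abnormality(ae_features(damaged, 0.5), baseline), 2))
```

Plotting this score over time gives the kind of trend line that on-site staff can read without interpreting the raw sensor channels.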

- Summary

ROHM’s Solist-AI is a true endpoint solution that functions independently of the cloud, delivering a practical approach to predictive maintenance. By completing both learning and inference in real time using only a sensor and MCU, it eliminates the need for network connectivity, consumes very low power, and offers robust security for safe, reliable operation. Dedicated support tools allow even personnel without AI expertise to easily deploy and evaluate the system.

A wide range of proven application examples, including detecting abnormal fan motor vibrations and identifying changes in current waveforms, demonstrate how Solist-AI substantially lowers the barriers to implementation.

Going forward, ROHM is expanding the product lineup and developing higher-performance models to support broader use across maintenance and other applications. Solist-AI brings new value to manufacturing sites by quickly detecting ‘deviations from normal’ on the factory floor and autonomously prompting appropriate actions.

To help engineers learn more about and fully leverage Solist-AI, ROHM invites users to connect through a dedicated community, ‘Solist-AI Open Lab: Getting Started’ on its technical platform, Engineer Social Hub (available in Japanese; registration/login required). In addition to accessing detailed information on Solist-AI, members can ask questions, share feedback, and discuss evaluation and implementation challenges, so we encourage you to participate.

The post Shifting from preventive maintenance to predictive maintenance appeared first on ELE Times.

How Can the High Voltage Intelligent Battery Shunt Reference Design Benefit You?

Courtesy: Element 14

Introduction

Accurate current measurement is a critical aspect of modern electrical engineering. Precision is essential everywhere – from battery management systems (BMS) to industrial automation. Traditional sensing methods are inadequate when temperatures fluctuate, leading to unreliable readings.

That’s where shunt resistors come to the rescue. These components produce a small, measurable voltage drop that reflects the amount of current flowing through them. However, their performance can be influenced by changes in temperature, which alter resistance and distort measurements. The temperature coefficient of resistance (TCR) quantifies this change, making low TCR shunt resistors essential for high precision applications.

Vishay’s WSBE8518 shunt resistor exemplifies such precision. Vishay offers the HV-IBSS-USB reference design – a sophisticated tool that simplifies testing and integration – to streamline its evaluation. This article explores the function of shunt resistors, the significance of low TCR, the impact of thermal drift, and how the HV-IBSS-USB reference design empowers engineers to achieve accurate current sensing in demanding environments.

Understanding Shunt Resistors in Current Sensing

A shunt resistor, also known as a current shunt resistor or an ammeter shunt, is a low resistance component placed in series with a load to measure voltage drop when current flows through it. This voltage drop, measured by an analogue-to-digital converter (ADC), is directly proportional to the current, enabling accurate current measurement using Ohm’s Law.

Shunt resistors are vital in precision current sensing across a wide range of high-reliability applications. These include monitoring charge and discharge cycles in BMS for electric vehicles (EVs), energy storage systems, and portable devices, as well as regulating output and detecting overcurrent in power supplies and motor control systems. In renewable energy setups such as solar inverters and wind turbines, they ensure accurate power flow measurement, while in industrial automation they provide critical feedback for diagnostics and system reliability. They are also indispensable in network UPS systems, power meters, and high-precision environments such as aerospace and defence applications, where even minor deviations in current measurement can have critical consequences.

Selecting a shunt resistor involves balancing its resistance value, power rating, and TCR. For instance, the WSBE8518, with a 100 μΩ resistance and 36 W power rating at 70 °C, produces a 50 mV drop at 500 A – making it suitable for high current applications while maintaining accuracy. The WSBE series can support up to 1825 A with no noticeable resistance shift.
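The WSBE8518 figures quoted above follow directly from Ohm's law, and a short sketch makes the trade-off between signal level and dissipation visible. The function names are illustrative; the resistance, current, and power rating are the values given in the text.

```python
def shunt_drop_v(resistance_ohm, current_a):
    """Voltage across the shunt, V = I * R."""
    return resistance_ohm * current_a

def shunt_power_w(resistance_ohm, current_a):
    """Power dissipated in the shunt, P = I^2 * R."""
    return current_a ** 2 * resistance_ohm

def current_from_drop_a(resistance_ohm, drop_v):
    """Current recovered from a measured voltage drop, I = V / R."""
    return drop_v / resistance_ohm

R_SHUNT = 100e-6        # 100 micro-ohm, from the text
POWER_RATING_W = 36.0   # rating at 70 degrees C, from the text

for current in (100.0, 500.0):
    v = shunt_drop_v(R_SHUNT, current)
    p = shunt_power_w(R_SHUNT, current)
    print(f"{current:.0f} A -> {v * 1e3:.1f} mV, {p:.1f} W")
# 100 A -> 10.0 mV, 1.0 W
# 500 A -> 50.0 mV, 25.0 W
```

At 500 A the shunt dissipates 25 W, inside the 36 W rating, while still producing a 50 mV signal that an ADC can resolve.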

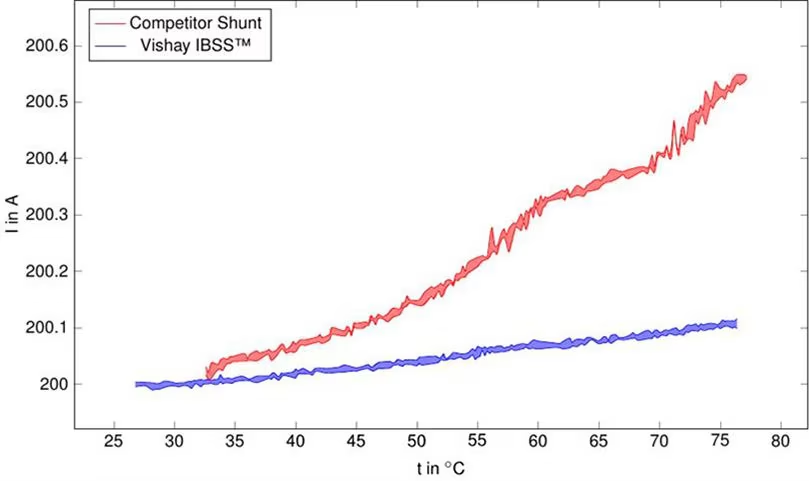

Figure 1: A comparison of current measurement stability over temperature between Vishay’s Intelligent Battery Shunt System (IBSS) and a competing shunt


Figure 1 demonstrates that as the temperature rises from 25 °C to 80 °C, the standard shunt (in red) shows a current reading drift from 200 A to over 200.6 A, while Vishay’s (in blue) remains nearly flat at 200 A, highlighting its superior thermal stability. Vishay also delivers cleaner, less noisy measurements, unlike the standard shunt’s non-linear, noisier signal response.

Why Does TCR Matter in Precision Sensing?

Resistance isn’t constant—it changes with temperature. The TCR quantifies this change, expressed in parts per million per degree Celsius (ppm/°C). In simple terms, TCR tells us how much a resistor’s value will drift as the temperature fluctuates.

If a resistor has a positive TCR, its resistance increases with rising temperature. A negative TCR means resistance decreases as it gets warmer. In either case, too much variation can compromise measurement accuracy. A low TCR is critical in precision current sensing, especially in systems where even the slightest error can cascade into larger problems. Due to increased electron scattering, most metals naturally see resistance rise with temperature. However, specialized alloys, such as the manganese-copper used in Vishay’s WSBE8518 shunt resistor, can achieve remarkably stable performance, with TCRs as low as ±10 ppm/°C. This makes them ideal for applications demanding high accuracy under wide temperature swings.

For instance, a 1 mΩ shunt with a TCR of ± 50 ppm/°C will experience a resistance change of ± 5 µΩ over a 100 °C temperature swing, which is equivalent to ± 0.5 % of its nominal resistance. That may seem insignificant, but in high precision environments such as battery charge balancing or power regulation, such deviations can skew current readings and disrupt system performance. The following equation calculates the maximum change in resistance value for a given TCR:

$$R = R_0 \left[ 1 + \alpha \left( T - T_0 \right) \right]$$

where,

R = final resistance

R0 = initial resistance

α = TCR

T = final temperature

T0 = initial temperature
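The linear TCR relation defined above is easy to check numerically. The sketch below reproduces the 1 mΩ, 50 ppm/°C example from the text; the function names and the 25 °C reference temperature are assumptions made for illustration.

```python
def resistance_at(r0_ohm, tcr_ppm_per_c, temp_c, ref_temp_c=25.0):
    """R = R0 * [1 + alpha * (T - T0)], with alpha given in ppm per degree C."""
    return r0_ohm * (1.0 + tcr_ppm_per_c * 1e-6 * (temp_c - ref_temp_c))

def percent_change(tcr_ppm_per_c, delta_t_c):
    """Resistance change in percent for a given temperature swing."""
    return tcr_ppm_per_c * 1e-6 * delta_t_c * 100.0

R0 = 1e-3  # 1 milli-ohm shunt, as in the example in the text

# 50 ppm/C over a 100 C swing: 0.5 %, i.e. 5 micro-ohm on 1 milli-ohm.
delta_ohm = resistance_at(R0, 50.0, 125.0) - R0
print(f"{delta_ohm * 1e6:.1f} micro-ohm, {percent_change(50.0, 100.0):.2f} %")

# 10 ppm/C over the same swing: 0.1 %.
print(f"{percent_change(10.0, 100.0):.2f} %")
```

Because the current reading is the measured voltage divided by the nominal resistance, the same percentage appears directly as current measurement error.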

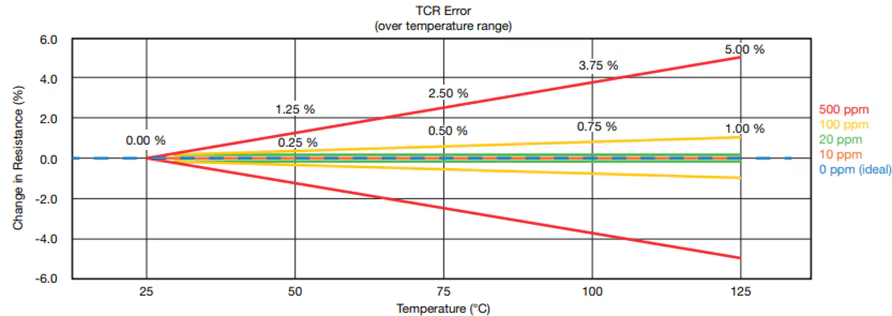

Figure 2: This graph compares different TCR levels as a percentage change in resistance versus increasing temperature from 25 °C


The benefits of low TCR are:

- Improved measurement accuracy: With resistance remaining stable across temperature variations, current sensing becomes more precise

- Better thermal stability: Minimal resistance drift, even under fluctuating ambient conditions or due to self-heating from applied power

- Ideal for high precision circuits: Supports applications that require consistent performance over wide temperature ranges

- Enhanced performance in harsh environments: Suitable for military and high-temperature electronics where reliability under thermal stress is critical

- Minimizes error in Kelvin configurations: Particularly advantageous in 4-terminal setups in which precise voltage sensing is critical

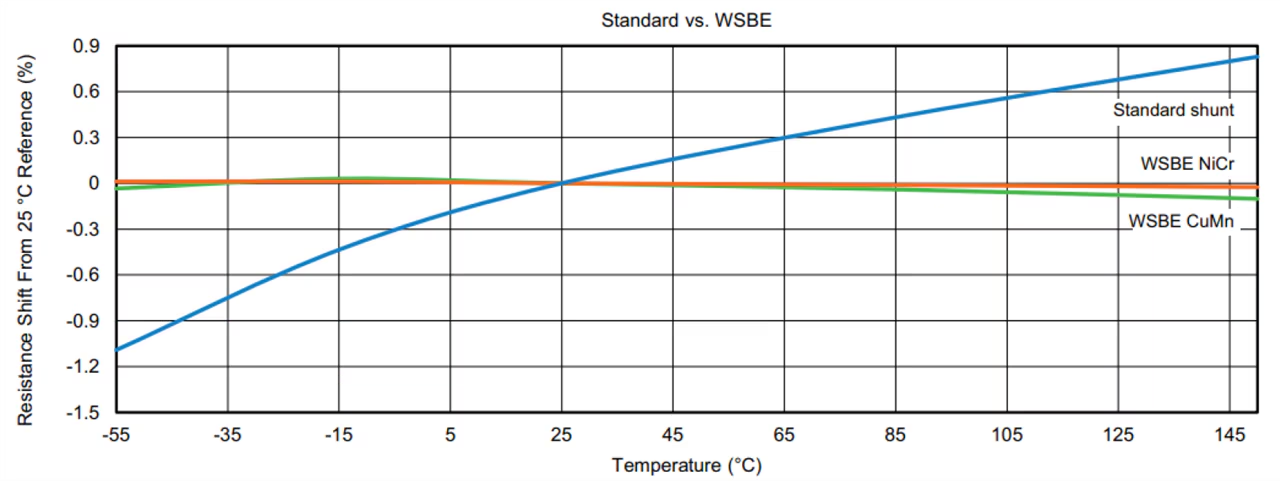

Figure 3 illustrates the superior thermal stability of WSBE series resistors (NiCr and CuMn) compared to standard shunt resistors. While the standard shunt exhibits a large resistance drift over temperature (indicative of poor TCR), the WSBE resistors maintain almost constant resistance across a broad thermal range.

Figure 3: TCR comparison chart (WSBE series vs a standard shunt resistor)


Understanding Thermal Drift in Current Sensing

Thermal drift refers to changes in a component’s electrical characteristics caused by fluctuations in temperature. In shunt resistors, thermal drift alters resistance, directly affecting current measurement accuracy. For instance, copper, with a TCR of 3900 ppm/°C, can exhibit a 39 % resistance change over a 100 °C temperature range, severely compromising precision. Low TCR materials, such as the manganese-copper alloy in the WSBE8518, reduce this effect, maintaining high measurement accuracy.

Thermal drift can also arise from self-heating due to power dissipation during operation. Vishay’s HV-IBSS-USB mitigates this by combining a low TCR shunt with an efficient circuit design, minimizing temperature-induced errors. Kelvin (4-terminal) connections further enhance accuracy by reducing the impact of high TCR copper terminals, enabling consistent and repeatable measurements.

How the Reference Design Works

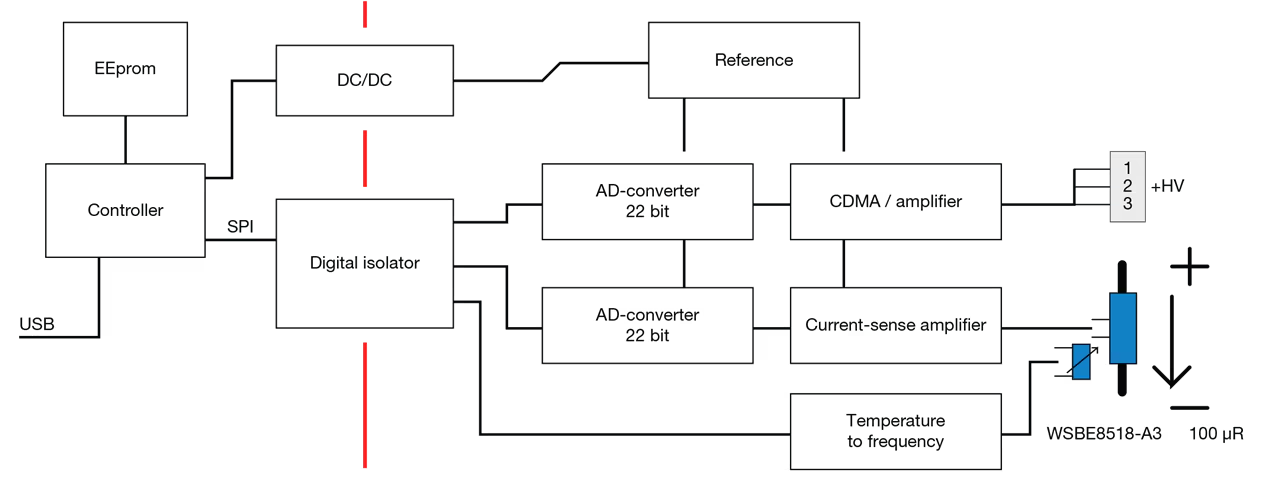

The HV-IBSS-USB reference design features two isolated domains: an HV analog front-end and an LV digital control section. Powered and interfaced via USB, the LV side hosts a microcontroller that manages data acquisition and communication. Power is transferred to the HV domain through a charge pump, where precision 22-bit sigma-delta ADCs and carefully selected analog components enable high-resolution current and voltage measurements.

The design compensates for thermal drift through automatic gain and offset calibration, ensuring accuracy across temperature variations. A dedicated voltage divider feeds the voltage signal directly to the ADC, eliminating amplifier drift. An NTC-based converter transmits a PWM signal across the isolation barrier for temperature monitoring. Data is available via a virtual COM port, auto-detected over USB, enabling seamless integration with PC-based tools.

- Current measurement: a third-order delta-sigma modulator converts the voltage drop across the WSBE8518 shunt into a digital signal, with automatic gain and offset calibration. The shunt’s TCR (10 ppm/°C) outperforms the analog circuitry’s drift (33.6 ppm/°C), ensuring high accuracy

- Voltage measurement: a 22-bit sigma-delta ADC measures voltage, divided by the CDMA2512 resistor to fit the ADC’s range

- Temperature measurement: an NTC thermistor and multivibrator convert temperature to a frequency signal, transmitted as a PWM signal to the microcontroller
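The two drift figures in the current-measurement item can be combined into a rough uncalibrated drift budget. The sketch below is an illustration only: the 55 °C rise and 200 A operating point are hypothetical, and the reference design's automatic gain and offset calibration, which is intended to reduce this error, is not modelled.

```python
import math

SHUNT_TCR_PPM = 10.0      # WSBE8518 shunt TCR in ppm per degree C, from the text
ANALOG_DRIFT_PPM = 33.6   # analog circuitry drift in ppm per degree C, from the text

def worst_case_ppm(delta_t_c):
    """Both drift terms add in the same direction."""
    return (SHUNT_TCR_PPM + ANALOG_DRIFT_PPM) * delta_t_c

def rss_ppm(delta_t_c):
    """Root-sum-square combination, assuming the two terms are independent."""
    return math.hypot(SHUNT_TCR_PPM, ANALOG_DRIFT_PPM) * delta_t_c

DELTA_T_C = 55.0    # hypothetical rise, e.g. 25 C to 80 C
CURRENT_A = 200.0   # hypothetical operating current

for label, ppm in (("worst case", worst_case_ppm(DELTA_T_C)),
                   ("root-sum-square", rss_ppm(DELTA_T_C))):
    error_a = CURRENT_A * ppm * 1e-6
    print(f"{label}: {ppm:.0f} ppm -> {error_a:.3f} A at {CURRENT_A:.0f} A")
```

Either way the shunt is the smaller contributor, which is the point the text makes: the electronics, not the resistor, set the floor that calibration then has to address.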

Figure 5: Functional block diagram of the HV-IBSS-USB


Application: EVs

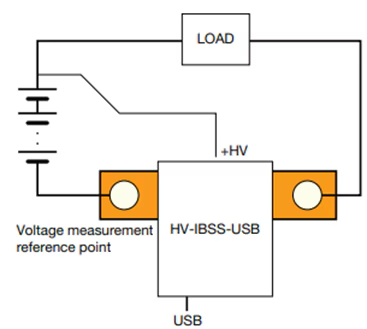

In BMS for EVs and other compact mobility vehicles such as electric two-wheelers, precise current measurement is critical for monitoring charge and discharge cycles, balancing cells, and ensuring safe, efficient operation. The HV-IBSS-USB facilitates the evaluation of the WSBE8518 shunt in these demanding environments. Installed in series with the high voltage battery pack, the shunt accurately measures currents up to 500 A, which is ideal for applications where currents routinely exceed 100 A.

The reference design’s USB-C interface enables real-time data logging, allowing engineers to analyze current flow, detect anomalies, and fine-tune battery management algorithms. The WSBE8518’s low TCR ensures accurate measurements even as operating temperatures rise during rapid charging or high load discharge. Voltage measurements spanning 10 V to 850 V and integrated temperature sensing provide additional data for detecting faults or optimizing power distribution.

A typical application circuit places the shunt between the EV battery and the drive or charging system. The HV-IBSS-USB’s HV+ and HV- terminals connect to the shunt’s Kelvin terminals, and the USB-C port links to a computer for data acquisition and analysis.

Figure 6: Typical application circuit


Conclusion

As current sensing requirements continue to tighten across EVs, energy storage, and high-power industrial systems, accuracy can no longer be left vulnerable to temperature effects and component drift. Vishay’s HV-IBSS-USB reference design, built around the ultra-low-TCR WSBE8518 shunt resistor, addresses this challenge head-on by combining material stability, Kelvin sensing, high-resolution ADCs, and intelligent calibration in a ready-to-evaluate platform. By minimizing thermal drift and simplifying high-voltage, high-current measurements, the design enables engineers to validate performance quickly, reduce development risk, and deploy more reliable battery and power management systems. In applications where every milliamp matters, the HV-IBSS-USB offers a practical pathway from precision measurement theory to real-world, production-ready accuracy.

The post How Can the High Voltage Intelligent Battery Shunt Reference Design Benefit You? appeared first on ELE Times.

The Move to 48 Volts in Transportation

Courtesy: Avnet

Key Takeaways:

- 48V systems are being adopted in mobility, industrial, data centre, and automotive applications

- Higher voltage brings multiple benefits

- New 48V motor control kit offers flexibility and efficiency

- Battery management included

Shifting to 48V isn’t just about higher voltage. What stands out here is how that shift enables lighter designs, longer battery life, and more efficient operation. Think drones that fly further, autonomous robots that run longer without a recharge, and electric vehicles that pack more punch without bulking up.
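The efficiency and weight argument comes down to simple arithmetic: for the same delivered power, a higher bus voltage means proportionally less current, and resistive loss in the wiring falls with the square of that current. The sketch below compares a 12 V and a 48 V bus; the 1200 W load and 10 mΩ wiring resistance are hypothetical values chosen for illustration.

```python
def line_current_a(power_w, voltage_v):
    """Current drawn for a given power at a given bus voltage, I = P / V."""
    return power_w / voltage_v

def conduction_loss_w(power_w, voltage_v, wiring_ohm):
    """Resistive loss in the wiring, P_loss = I^2 * R."""
    current = line_current_a(power_w, voltage_v)
    return current * current * wiring_ohm

LOAD_W = 1200.0     # hypothetical load power
WIRING_OHM = 0.010  # hypothetical harness resistance

for bus_v in (12.0, 48.0):
    i = line_current_a(LOAD_W, bus_v)
    loss = conduction_loss_w(LOAD_W, bus_v, WIRING_OHM)
    print(f"{bus_v:.0f} V bus: {i:.0f} A, {loss:.2f} W lost in wiring")
# 12 V bus: 100 A, 100.00 W lost in wiring
# 48 V bus: 25 A, 6.25 W lost in wiring
```

Quadrupling the voltage cuts the current to a quarter and the wiring loss to a sixteenth, which is what allows thinner, lighter cabling for the same power.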

For OEMs looking at a new product line or planning upgrades, that’s more than a technical specification; it’s a competitive factor. Getting ahead of this voltage transition could mean catching the next wave in smart transportation and industrial automation.

The market’s responding, not just in automotive, but across sectors like logistics, warehousing, and even consumer robotics.

Put 48V on your roadmap

For teams evaluating roadmap priorities, the takeaway is that aligning with this voltage shift isn’t optional. It’s quickly becoming a baseline expectation for future-ready platforms.

NXP has introduced a high-power 48V motor control kit, and there’s quite a bit under the hood here. You’ve got flexibility for controlling single three-phase, dual three-phase, and single six-phase setups, plus compatibility with resolver, hall, and encoder sensors. That means it’s well-positioned for teams with complex motor demands. Those motors could be driving a range of applications, from drones to autonomous or guided robots.

From a strategic standpoint, having isolated communication and redundant power supply isn’t just a feature; it’s risk mitigation. Fault protection for things like overcurrent and overheating? That’s a game-changer for operational uptime. Add functional safety to the mix. It’s built for both industrial and automotive standards, so teams don’t have to choose between performance and compliance.

Development kit availability

Launch timing is crucial. NXP’s development kit is available from December for early access, but the full rollout lands in January. Contact your Avnet representative to register your interest. NXP is also bundling standard drivers and control libraries, so the barrier to entry is lower for engineering teams.

For OEMs that need deeper customisation, there’s a premium software tier with extra safety features and source code. The architecture is modular, too: controller board, adapter board, power stage, and heat sink, with motors left out for flexibility. That setup is deliberate, letting customers tailor the kit to their own requirements, rather than locking them into a one-size-fits-all hardware solution.

The NXP development kit will be generally available from January 2026, with early access available by contacting your Avnet representative.


NXP MCUs are at the core, plus a GUI for streamlined control. It’s aimed at making deployment straightforward, whether for prototyping or scaling up production. That level of integration should make it easier for teams to hit aggressive timelines while still meeting compliance and quality bars.

Battery management for 48V systems

Let’s shift the focus to battery management. If you’re investing in next-gen mobility or industrial systems, BMS is at the heart of every decision. What’s new here is the scale. The product family now covers everything from 12 volts all the way up to 1500 volts, but the 48V range is the sweet spot for cost and flexibility. That’s not just for cars; it’s a fit for industrial energy storage, drones, and autonomous robots.

That creates a broader footprint, but what’s the real differentiator between these 48V solutions and the legacy setups? First, you’re seeing devices like the BMA 7518 that are exclusive to 48V, with others bridging up to higher voltages in industrial use. Certification’s a key lever. Everything’s aiming for ISO 26262 on the automotive side and IEC 61508 for industrial. Compliance isn’t just a checkbox; it moves the needle on market access and risk.

There’s a new software stack for active cell balancing, and “battery passport” tools are being rolled out to keep up with European compliance. Secure protocols are another layer, making sure the system’s ready for upcoming requirements. NXP is not just filling out the product line; it’s building for future-proofing and regulatory headwinds.

Early access to 48V solutions

Avnet is working with NXP to provide early access for select customers. If you’re aiming to build momentum before January, this is a real lever for market entry. By prioritising hands-on pilots and direct feedback loops, teams catch issues or feature requests early in the cycle.

If you are evaluating 48V systems, contact your Avnet representative to find out how you could benefit from these exciting developments.

The post The Move to 48 Volts in Transportation appeared first on ELE Times.

EEVblog 1730 - AC Basics Tutorial Part 8: Apparent, Reactive & Real Power

Mitsubishi Electric shipping samples of four new trench SiC MOSFET bare dies for power semiconductors

Phlux’s Noiseless InGaAs sensors attract interest for hunting and long-range shooting

Out of the loop: What's going on between SparkFun and Adafruit?

Extend the LM358 op-amp family’s output voltage range

The LM358 family of dual op amps is among those hoary industry work-horse devices that are inexpensive and still have their uses. These parts’ outputs can approach (and for the inputs even include) their negative supply rail voltage. Unfortunately, this is not the case for the positive supply rail. However, cascading the op amp with a few simple, inexpensive components can surmount this limitation of the outputs.

Figure 1 This simple rail-to-rail gain stage, consisting of Q1, Q2, R1, Rf, Rg, Rcomp, and Ccomp, is driven by the output of the LM258A op-amp. Feedback network Rf1 and Rg1 help to ensure that the inverting input feedback voltage is within the op-amp’s common-mode input range and to set a stable loop gain characteristic.


I had some LM258As on hand, which I had bought instead of the LM358As because of the slightly better input offset voltage and bias current ratings, which also spanned a wider set of temperatures. Interestingly, the input common-mode range for the non-A version of the part is characterized over temperature as Vcc – 2V for Vcc between 5 and 30V. But the A version is characterized at 30-V only. Go figure! As you’ll see, the tests I ran encountered no difficulties.

The parts’ AC characteristics are spec’d identically, suggesting that the even cheaper LM358 should encounter no stability issues. With the components shown in Figure 1, the loop gain above 100 kHz is about that of the LM258A configured as a voltage follower. Below 10 kHz, there’s approximately an extra 8 dB of gain. The following (Figures 2 through 7) are some screenshots of ‘scope traces for various tests of the circuit at 1 kHz. The scales for all traces are the same: 1 V and 200 µs per large division.

Figure 2 Here, rail-to-rail swings of the circuit’s output are apparent.

Figure 3 The circuit recovers from clipping gracefully.

Figure 4 With a 0.1 µF load, slewing problems arise.

Figure 5 A 470-ohm load in parallel with 0.1 µF is stable and doesn’t exhibit slewing problems.

Figure 6 But with 0.1 µF as the sole load, the circuit is not stable.

Figure 7 Swapping the 470-ohm Rcomp with 100-ohms restores stability with 0.1 µF as the sole load.

In conclusion, a pair of cheap transistors, an inexpensive cap, and a few non-precision resistors provide a cost-effective way to turn the LM358 family of op amps into one with rail-to-rail output capabilities.

Christopher Paul has worked in various engineering positions in the communications industry for over 40 years.

Related Content

- Simple PWM interface can program regulators for Vout < Vsense

- LM358 Datasheet and Pinout

- com: Experimenting with LM358 and OPA2182 ICs

- Tricky PWM Controller – An Analog Beauty!

- LED Lamp Dimmer Project Circuit

- Op amp one-shot produces supply-independent pulse timing

The post Extend the LM358 op-amp family’s output voltage range appeared first on EDN.

The AI design world in 2026: What you need to know

We live in an AI era, but behind the buzzword lies an intricate world of hardware and software building blocks. Like every other design, AI systems span multiple dimensions, ranging from processors and memory devices to interface design and EDA tools. So, EDN is publishing a special section that aims to untangle the AI labyrinth and thus provide engineers and engineering managers greater clarity from a design standpoint.

For instance, while AI is driving demand for advanced memory solutions, memory technology is taking a generational leap by resolving formidable engineering challenges. An article will examine the latest breakthroughs in memory technology and how they are shaping the rapidly evolving AI landscape. It will also provide a sneak peek at memory bottlenecks in generative AI, as well as thermal management and energy-efficiency constraints.

Figure 1 HBM offers higher bandwidth and better power efficiency in a similar DRAM footprint. Source: Rambus

Another article hits the “memory wall” currently haunting hyperscalers. What is it, and how can data center companies confront such memory bottlenecks? The article explains the role of high-bandwidth memory (HBM) in addressing this phenomenon and offers a peek into future memory needs.

Interconnect is another key building block in AI silicon. Here, automation is becoming a critical ingredient in generating and refining interconnect topologies to meet system-level performance goals. Then, there are physically aware algorithms that recognize layout constraints and minimize routing congestion. An article will show how the phenomena work while also showing how AI workloads have made existing chip interconnect design impractical.

Figure 2 The AI content in interconnect designs facilitates intelligent automation, which in turn, enables a new class of AI chips. Source: Arteris

No design story is complete without EDA tools, and AI systems are no exception. An EDA industry veteran writes a piece for this special section to show how AI workloads are forcing a paradigm shift in chip development. He zeroes in on the energy efficiency of AI chips and explains how next-generation design tools can help design chips that maximize performance for every watt consumed.

On the applications front, edge AI finally came of age in 2025 and is likely to make further inroads during 2026. A guide on edge AI for industrial applications encompasses the key stages of the design value chain. That includes data collection and preprocessing, hardware-accelerated processing, model training, and model compression. It also explains deployment frameworks and tools, as well as design testing and validation.

Figure 3 Edge AI addresses the high-performance and low-latency requirements of industrial applications by embedding intelligence into devices. Source: Infineon

There will be more. For instance, semiconductor fabs are incorporating AI content to modernize their fabrication processes. Take the case of GlobalFoundries joining hands with Siemens EDA for fab automation; GF is deploying advanced AI-enabled software, sensors, and real-time control systems for fab automation and predictive maintenance.

Finally, and more importantly, this special section will take a closer look at the state of training and inference technologies. Nvidia’s recent acquisition of Groq is a stark reminder of how quickly inference technology is evolving. While training hardware has captured much of the limelight in 2025, 2026 could be a year of inference.

Stay tuned for more!

The post The AI design world in 2026: What you need to know appeared first on EDN.

Australia investing $1.2bn to create Critical Minerals Strategic Reserve

Cree LED launches fully assembled L2 PCBA solutions for indoor and outdoor lighting

Adoption of Electric Motorcycles: A Challenging Ride Ahead

India’s tryst with electric two-wheelers began as early as the 1990s, but meaningful momentum only arrived decades later. Early policy interventions—such as subsidies offered by the Ministry of New and Renewable Energy (MNRE) between 2010 and 2012—laid the groundwork. The launch of the FAME (Faster Adoption and Manufacturing of Electric Vehicles) scheme further accelerated adoption, drawing both startups and legacy manufacturers into the electric mobility race.

Yet, despite this progress, one segment remains conspicuously underdeveloped: electric motorcycles. A joint NITI Aayog–TIFAC study published in June 2022 estimates that India’s two-wheeler manufacturing capacity could exceed 20 million units annually by 2030. However, electric motorcycles account for only a negligible fraction of this future potential.

Royal Enfield’s Electric Bet Rekindles Debate

As India’s foremost motorcycle manufacturer, Royal Enfield’s move into electric mobility represents more than product diversification: it marks a fundamental technological transition for a brand long associated with large-displacement engines, mechanical character, and long-distance touring capability. Entering the electric motorcycle space forces a redefinition of performance, shifting the emphasis from displacement and acoustics to torque delivery, thermal control, software, and system integration.

The company has signalled its electric intent through the Flying Flea platform, with two derivatives planned for launch after 2026. Beyond the product itself, the real story lies in the underlying technology stack Royal Enfield is building for the electric era.

According to Matt Cardenas, Head of EV Product Strategy at Royal Enfield, much of the vehicle’s technology has been developed in-house to optimise user experience. Over 200 engineers are engaged in powertrain development, with 42 patents already filed. The company has also partnered directly with NXP and Snapdragon to co-develop motorcycle-specific chips—an uncommon move in the two-wheeler industry.

For a company built on the appeal of powerful combustion motorcycles, Royal Enfield’s electric strategy suggests a broader reimagining of power itself. In the absence of exhaust notes and mechanical mass, performance is being reconstructed through torque curves, thermal discipline, and digital intelligence. How successfully this engineering-first approach translates into rider acceptance may ultimately determine whether electric motorcycles can move beyond the margins of India’s two-wheeler market.

Where Does India’s Electric Motorcycle Market Stand?

In August 2025, NITI Aayog convened a high-level meeting with major two-wheeler OEMs (Hero MotoCorp, Bajaj Auto, TVS Motor, Ola Electric, Ather Energy, and Revolt Motors) to explore strategies for improving electric motorcycle adoption.

The current market structure highlights the challenge. Internal combustion engine (ICE) motorcycles still account for nearly two-thirds of India’s two-wheeler market. Electric scooters, by contrast, have achieved around 15% penetration within the EV segment and now represent almost one-third of all two-wheelers sold. Electric motorcycles, however, contribute just 0.1% of the total two-wheeler market, according to NITI Aayog.

This is partly because electric motorcycle design faces several weighty challenges, ranging from battery mass to efficiency and the riding experience.

The Weight of the Battery Problem

Battery weight remains one of the most fundamental constraints. Unlike four-wheelers, motorcycles are highly sensitive to mass distribution. For long-distance and off-road riding in particular, maintaining balance, agility, and stability becomes increasingly complex as battery size grows.

Engineers must carefully position the battery to preserve handling characteristics while reinforcing the frame without adding excessive weight. Ergonomics also demands rethinking, as the traditional engine layout is replaced by a bulkier energy storage system. Suspension and braking systems require complete recalibration to match the altered dynamics of an electric powertrain. In effect, designing an electric motorcycle is far more complex than simply swapping an engine for a motor.

Reflecting the same idea, Ather’s leadership has noted that practical and cost considerations currently favour electric scooters over bikes in India — an observation directly linked to the challenges electric motorcycles face in matching affordability and everyday utility.

Efficiency Gains That Don’t Fully Translate

On paper, electric motors are vastly more efficient than ICEs, converting 80–90% of electrical energy into motion, compared to just 20–30% for combustion engines. Regenerative braking and fewer moving parts further enhance theoretical efficiency.

However, these advantages shrink when evaluated on a well-to-wheel basis. Petrol has a far higher energy density by weight than today’s lithium-ion batteries. A small fuel tank can deliver long range with minimal mass, while an equivalent amount of stored electrical energy adds substantial weight. For long-distance riding at sustained speeds, the heavier battery pack can negate much of the motor’s inherent efficiency advantage, resulting in shorter real-world range than ICE motorcycles.
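A rough back-of-the-envelope calculation illustrates the trade-off. The efficiency values are midpoints of the ranges quoted above; the energy densities (about 12 kWh/kg for petrol, about 0.2 kWh/kg for a lithium-ion pack) and the 10 kWh of energy at the wheel are illustrative assumptions, not figures from the NITI Aayog study.

```python
# Rough sketch: mass of stored energy needed to deliver the same energy at the wheel.
# Assumed values (not from the article): both energy densities and the 10 kWh target.

PETROL_KWH_PER_KG = 12.0    # assumed, roughly 43 MJ/kg
BATTERY_KWH_PER_KG = 0.2    # assumed pack-level lithium-ion figure

ICE_EFFICIENCY = 0.25       # midpoint of the 20-30% range quoted above
MOTOR_EFFICIENCY = 0.85     # midpoint of the 80-90% range quoted above


def storage_mass_kg(wheel_energy_kwh: float, efficiency: float, kwh_per_kg: float) -> float:
    """Mass of fuel or battery needed to deliver wheel_energy_kwh at the wheel."""
    stored_energy_kwh = wheel_energy_kwh / efficiency
    return stored_energy_kwh / kwh_per_kg


wheel_energy_kwh = 10.0  # assumed energy needed for a long ride
petrol_kg = storage_mass_kg(wheel_energy_kwh, ICE_EFFICIENCY, PETROL_KWH_PER_KG)
battery_kg = storage_mass_kg(wheel_energy_kwh, MOTOR_EFFICIENCY, BATTERY_KWH_PER_KG)

print(f"petrol:  {petrol_kg:.1f} kg")
print(f"battery: {battery_kg:.1f} kg")
```

Under these assumptions the battery comes out at roughly 59 kg against about 3 kg of petrol, even though the motor is more than three times as efficient. The sketch ignores the mass of the engine, motor, and tank, so it only indicates the order of magnitude.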

Transmission, Maintenance, and the Rider’s Bond

Electric scooters have benefited from simple, gearless drivetrains that translate easily into electric architectures. Motorcycles, especially those with gears, face greater design and manufacturing complexity. This also affects maintenance and repair, areas of particular importance to India’s motorcycle culture, where self-repair and aftermarket modifications are deeply ingrained. Limited service familiarity and proprietary components can dampen enthusiasm among seasoned riders.

The Sound of Silence

For many long-distance and enthusiast riders, motorcycles are as much about emotion as engineering. The sound, vibration, and mechanical feedback of a combustion engine form a core part of the riding experience. Electric motorcycles, with their near-silent operation, can feel less visceral, even if they deliver superior acceleration.

That said, this argument is not universal. Electric bikes offer tangible benefits: drastically reduced noise pollution, smoother power delivery, and instant torque that can feel both controlled and exhilarating. For a new generation of riders, these attributes may redefine what performance and pleasure mean on two wheels.

A Weak Global Reference Ecosystem

Globally, electric motorcycles lack the ecosystem support that helped electric scooters scale. The NITI Aayog study notes the absence of learning spillovers from international markets. While India benefited from mature Chinese supply chains and proven designs in e-scooters, no comparable global blueprint exists for electric motorcycles. As a result, manufacturers must build R&D capabilities largely from scratch.

Only a handful of players—Revolt Motors, Ultraviolette Automotive, Oben Electric, and Matter Motors—are currently active in India’s electric motorcycle space. This contrasts sharply with the success of Ola Electric and Ather Energy in the scooter segment. Internationally, the picture has also dimmed, highlighted by the bankruptcy of Italian high-performance EV motorcycle maker Energica in October 2024. While brands like Kawasaki and Zero remain prominent globally, volumes remain limited.

Also, executives from Bajaj Auto’s electric two-wheeler business have acknowledged that the industry initially struggled with supply chain readiness and cost structures, and that building a sustainable EV business requires a measured approach rather than aggressive cash burn — indirectly underscoring how complexity and cost are barriers for electrifying performance-oriented two-wheelers.

The Road Ahead

For now, innovation appears to be the only force capable of sustaining momentum in electric motorcycles. Breakthroughs in battery chemistry, thermal management, lightweight materials, and modular platforms will be critical. Until governments worldwide offer stronger policy support (through targeted subsidies, charging infrastructure, and R&D incentives), electric motorcycles are likely to remain a passion project rather than a mass-market reality.

The ride ahead, much like the machines themselves, will demand balance, patience, and relentless engineering ingenuity.

The post Adoption of Electric Motorcycles: A Challenging Ride Ahead appeared first on ELE Times.

Edge AI Chipsets: How On-Device Intelligence Is Reshaping Embedded System Design

The cloud-first model for embedded systems is becoming a legacy architecture. We’re moving away from simply piping data to remote servers and instead shifting the entire decision engine onto the bare metal. Driven by specialised Edge AI silicon, such as NPUs and accelerated RISC-V cores, this evolution allows us to bake autonomous logic directly into sensors and controllers. In a production environment, on-device AI is a functional requirement, not a luxury. As NVIDIA CEO Jensen Huang noted in his 2025 GTC keynote, “The next wave is already happening… Robotics, which has been enabled by physical AI, AI that understands the physical world, is the new era,” marking a definitive shift toward intelligence that lives where the action occurs.

Several factors make on-device AI critical today:

- Solving Latency: In robotics or power-grid monitoring, a cloud round-trip is a system failure. You need deterministic, sub-millisecond responses that only local inference provides.

- Cutting the Bandwidth Tax: Constant streaming drains batteries and budgets. Local processing means we only transmit the “meaning,” not the raw noise, making massive IoT fleets cost-effective.

- Hardened Privacy: For medical or industrial IP, data in transit is a liability. Keeping telemetry on the silicon is the most effective way to ensure confidentiality. Cristiano Amon, CEO of Qualcomm, reinforces this, stating: “When you do the processing on the device, it’s immediate. You don’t have to wait. It’s private. It’s your data. It’s your personal graph that stays with you.”

- True Autonomy: Your hardware shouldn’t brick when the Wi-Fi drops. Edge AI ensures the machine stays smart in remote or “noisy” environments.

These factors collectively make Edge AI an essential enabler of modern embedded intelligence.

Architectural Distinctions of Edge AI Chipsets

Edge AI chipsets differ from conventional microcontrollers (MCUs) and CPUs in architectural intent and operational efficiency. Core characteristics include:

- AI Accelerators (NPUs/VPUs): Dedicated engines built for neural-network inference (convolutions, matrix multiplications) that significantly exceed CPUs in speed and power efficiency.

- Heterogeneous SoC Architectures: A combination of CPU (control tasks), NPU (AI inference), and sometimes GPU (parallel processing), ensures optimised resource allocation across workloads.

- Model Optimisation: Deep learning models can be deployed on devices with limited resources without experiencing significant accuracy loss thanks to techniques like quantisation, pruning, and compression.

- Power & Thermal Management: Edge AI can function within stringent power and temperature constraints thanks to dynamic voltage and frequency scaling, low-power modes, and thermal improvements.

- Security & Reliability Features: Sensitive operations, particularly in industrial deployments and critical infrastructure, are protected through measures such as memory isolation, secure boot processes, and hardware-level tamper-resistant design.

By combining these features, edge-AI chipsets make intelligent behaviour feasible on devices previously incapable of complex decision-making.
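To make the model-optimisation point concrete, here is a minimal sketch of symmetric 8-bit quantisation, one of the techniques named in the list above. The weights are made up, and production toolchains do considerably more (per-channel scales, activation calibration), so treat this as an illustration of the idea only.

```python
# Minimal sketch of symmetric 8-bit post-training quantisation.
# Weights are illustrative; real toolchains also calibrate activations.

def quantise_int8(weights: list[float]) -> tuple[list[int], float]:
    """Map floats to integers in [-127, 127] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantised = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantised, scale


def dequantise(quantised: list[int], scale: float) -> list[float]:
    """Recover approximate float values from the int8 codes."""
    return [q * scale for q in quantised]


weights = [0.42, -1.27, 0.05, 0.90]  # hypothetical layer weights
codes, scale = quantise_int8(weights)
restored = dequantise(codes, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print(codes, scale)
print(f"largest rounding error: {max_error:.4f}")
```

Each weight now fits in one byte instead of four, and the rounding error per weight is bounded by half the scale, which is why accuracy loss is often small.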

Transforming Embedded System Design

The introduction of Edge AI fundamentally alters embedded system design:

- From Reactive to Cognitive Systems: Traditional embedded devices follow fixed, deterministic logic. Edge AI enables them to perceive, classify, and act autonomously, for example to detect defects, predict equipment failures, and monitor electronic equipment.

- Real-Time Autonomy: With local inference, devices operate independently of cloud connectivity, critical for industrial, safety-critical, or remote applications.

- Distributed Intelligence & Scalability: Large IoT deployments can now distribute AI across nodes, reducing network load and ensuring real-time responsiveness.

- Energy and Bandwidth Efficiency: Local processing cuts down on data transmission, which saves energy and money and makes the system less reliant on centralized infrastructure.

- Cross-Layer Co-Design: Hardware-software co-design is now essential. Teams must optimise model architecture, memory allocation, runtime scheduling, and power management from the outset.

Edge AI thus transforms embedded systems from simple controllers into autonomous, intelligent agents capable of learning and decision-making.

Real-World Applications

Edge AI chipsets are already revolutionising multiple sectors:

- Industrial Automation & Smart Manufacturing: Vision-based defect detection, predictive maintenance, anomaly detection, and real-time monitoring of inverters, EV chargers, and SMPS.

- Embedded Vision & IoT: Smart cameras, object detection, robotics, drones, and smart sensors with on-device analytics.

- Consumer Electronics & Wearables: Offline voice recognition, gesture detection, and biometric authentication while preserving privacy.

- Energy & Power Electronics: Autonomous monitoring of power converters, predictive fault detection, and safety-critical decisions in EV and renewable energy systems.

- Agriculture & Remote Infrastructure: Edge AI sensors classify crop health, monitor environmental conditions, and operate autonomously in rural or low-connectivity areas.

These applications illustrate that Edge AI is no longer experimental – it’s a practical enabler for real-world intelligence in embedded systems.

Challenges and Considerations

While Edge AI presents opportunities, several challenges require careful engineering:

- Resource Constraints: Limited compute, memory, and power require model optimisation, which may impact accuracy or capability.

- Hardware Heterogeneity: Diverse SoCs and NPUs make deployment across platforms complex.

- Thermal and Power Management: Continuous inference can generate heat and consume power, impacting device lifespan.

- Security & Trust: Edge devices handling sensitive data must ensure secure boot, encryption, and tamper resistance.

- Model Lifecycle Management: Updating and maintaining models across fleets of devices, especially in remote locations, is a significant operational challenge.

- Design Complexity: Effective deployment demands collaboration between ML engineers, hardware designers, and embedded software developers.

Addressing these challenges is essential for scalable, robust, and efficient Edge AI implementations.

Emerging Trends & the Road Ahead

Edge AI chipsets are evolving rapidly:

- TinyML and Micro-Edge Devices: Ultra-low-power NPUs enable AI on minimal sensors and microcontrollers.

- Chiplet-Based Modular SoCs: Modular architectures combining CPUs, AI accelerators, and memory provide scalable, upgradeable solutions.

- Cross-Layer Automation: Improved toolchains for quantisation, pruning, scheduling, and deployment reduce manual tuning and accelerate development.

- Hybrid Edge-Cloud Models: On-device inference combined with federated learning or cloud aggregation balances autonomy with long-term model improvement.

- Enhanced Security: Trusted execution environments and secure hardware primitives protect distributed edge deployments.

These trends point toward embedded systems that are intelligent, autonomous, energy-efficient, and scalable across industries.

India’s Emerging Edge AI Ecosystem

India is rapidly contributing to the global Edge AI landscape. Startups and MNCs like Netrasemi, Mindgrove Technologies, InCore Semiconductors, and MosChip Technologies are developing edge-AI SoCs, NPUs, and embedded solutions tailored for industrial, automotive, and IoT applications. With government initiatives like Digital India and Make in India, combined with academic research, the country is fostering innovation in Edge AI for both domestic and global markets.

Conclusion

Edge AI chipsets are changing what we expect from embedded devices. Work that once had to be pushed to a central system can now be handled directly where the data is produced. This allows equipment to react immediately, even in environments where connectivity is unreliable or power is limited. Designing such systems is no longer just a matter of selecting a processor and writing code; it involves careful trade-offs between performance, power use, reliability, and long-term maintenance, with security built in from the start. As AI visionary Andrew Ng recently summarised, “The future AI wealth doesn’t belong to those who own the largest GPU clusters but to those who know how to use the smallest models to solve the most specific problems… Edge computing and small models are the wealth keys.”

For engineers in the embedded domain, this is a practical turning point rather than a theoretical one. Devices are moving beyond fixed, single-purpose roles and taking on more responsibility within distributed setups. Edge AI enables the development of autonomous and efficient systems. These solutions deliver the consistent reliability required by various industries.

The post Edge AI Chipsets: How On-Device Intelligence Is Reshaping Embedded System Design appeared first on ELE Times.

Quantum-Safe Hardware: Why the Cybersecurity Deadline Is Closer Than the Quantum Breakthrough

The world’s digital infrastructure is quietly approaching a cryptographic inflexion point. For decades, global cybersecurity has relied on a small set of mathematical assumptions, most notably RSA and elliptic-curve cryptography. These assumptions underpin everything from cloud authentication and mobile networks to payment systems, defence communications, and digital identity. Quantum computing threatens not to weaken these foundations, but to invalidate them entirely.

What makes this transition uniquely dangerous is not the arrival of quantum computers themselves, but the mismatch between how fast cryptography can be broken and how slowly hardware trust systems can be replaced. This is not a software problem that can be patched overnight. It is a hardware lifecycle problem measured in decades. As quantum research accelerates and post-quantum cryptography standards solidify, a hard truth is emerging across the industry: quantum-safe hardware must be deployed well before quantum computers can break encryption. Waiting for certainty is, paradoxically, the fastest path to systemic insecurity.

From Mathematical Risk to Engineering Reality

Quantum computing represents a rare technological disruption where the threat is mathematically inevitable, not probabilistic. Shor’s algorithm does not weaken RSA or elliptic-curve cryptography; it nullifies them. Once fault-tolerant quantum computers emerge, today’s cryptographic foundations collapse outright. More critically, attacks need not be real-time: encrypted data captured today can be decrypted years later under the “harvest now, decrypt later” model.

As Ali El Kaafarani, CEO of PQShield, has consistently argued, the industry’s mistake is treating post-quantum cryptography as a future software upgrade rather than a present hardware engineering challenge. Once quantum decryption is possible, compromise is retroactive; you don’t lose today’s secrets, you lose yesterday’s. This reality has shifted focus from cryptographic theory to what can be implemented in silicon. While many PQC schemes exist on paper, only a narrow set survives real-world constraints of memory, power, latency, and side-channel resistance. Lattice-based algorithms such as Kyber and Dilithium, which NIST has standardised as ML-KEM and ML-DSA, are currently the most hardware-viable, though they still impose significant demands. Other approaches, like code-based cryptography, struggle with impractically large key sizes that overwhelm secure hardware resources.

Why Hardware, Not Software, Is the Real Battleground

Cryptography is enforced by hardware roots of trust: HSMs, secure elements, TPMs, SIMs, and embedded controllers that underpin secure boot, identity, and key protection. Post-quantum cryptography breaks the assumptions these systems were built on: larger keys and heavier computation quickly expose the limits of hardware optimised for RSA and ECC. While software can absorb this overhead, hardware-bound systems cannot.

This is where “crypto-agility” fractures. Many platforms claim algorithm agility, but in practice, it exists only at the firmware or API layer, not in silicon. As Todd Moore, Vice President of Data Security Products at Thales, has noted, true crypto-agility is about whether hardware can evolve without becoming a performance bottleneck. In high-throughput environments, first-generation “PQC-ready” hardware often works functionally but not economically, forcing a choice between degraded performance and early replacement. Crypto-agility is no longer a software feature; it is a physical property of hardware architecture.

The Myth of Universal Crypto-Agility

In response to quantum risk, many vendors describe their products as “crypto agile.” In practice, crypto-agility has often meant that software can be updated, while the underlying hardware remains fixed. That model is breaking down. True crypto-agility in the post-quantum era requires hardware that was designed with uncertainty in mind: spare compute capacity, flexible accelerators, sufficient secure memory, and firmware update paths that are themselves resistant to quantum attacks. Much of the hardware deployed today, particularly older HSMs and embedded roots of trust, does not meet this bar.

Newer platforms from vendors such as Thales and Entrust are moving toward genuinely post-quantum-ready architectures, but even here, the industry is candid that early PQC support is only a first step. In many environments, especially high-throughput systems like certificate authorities and telecom authentication servers, performance collapses when PQC is layered onto hardware never designed for it. The uncomfortable implication is that a significant portion of deployed cryptographic hardware will ultimately need physical replacement, not because it is insecure today, but because it cannot scale securely tomorrow.

The Long-Life Device Problem: Secure Boot and Identity

Quantum risk becomes existential when hardware is expected to remain trusted for 15–20 years. Industrial equipment, telecom infrastructure, vehicles, medical devices, and defence systems often outlive multiple cryptographic generations. If the firmware-signing keys protecting these systems become quantum-breakable mid-lifecycle, attackers gain the ability to install malicious updates that appear fully legitimate.

To address this, hardware designers are rethinking trust from the ground up. Secure boot chains are increasingly being designed around hybrid verification models, where classical and post-quantum signatures coexist during a long transition period. Device identity is shifting toward roots of trust that can support PQC natively, rather than bolting it on later.
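The hybrid verification model described above can be sketched as a boot check that accepts an image only when both signatures verify. The verifier callables are hypothetical placeholders for real ECDSA and ML-DSA implementations; the HMAC-based demo scheme exists only so the sketch runs and is not a real signature scheme.

```python
# Sketch of a hybrid secure-boot check: firmware is accepted only when BOTH
# a classical and a post-quantum signature verify. The verifier callables are
# hypothetical placeholders for real ECDSA / ML-DSA implementations.
import hashlib
import hmac
from typing import Callable

Verifier = Callable[[bytes, bytes], bool]  # (firmware_image, signature) -> valid?


def hybrid_verify(image: bytes, classical_sig: bytes, pq_sig: bytes,
                  verify_classical: Verifier, verify_pq: Verifier) -> bool:
    """Return True only if both signatures are valid for the image."""
    classical_ok = verify_classical(image, classical_sig)
    pq_ok = verify_pq(image, pq_sig)
    return classical_ok and pq_ok


def make_demo_scheme(key: bytes):
    """Toy stand-in built on HMAC, for demonstration only (not a real signature)."""
    def sign(image: bytes) -> bytes:
        return hmac.new(key, image, hashlib.sha256).digest()

    def verify(image: bytes, sig: bytes) -> bool:
        return hmac.compare_digest(sign(image), sig)

    return sign, verify


sign_classical, verify_classical = make_demo_scheme(b"classical-demo-key")
sign_pq, verify_pq = make_demo_scheme(b"pq-demo-key")

firmware = b"firmware v1.0"
accepted = hybrid_verify(firmware, sign_classical(firmware), sign_pq(firmware),
                         verify_classical, verify_pq)
# A forged post-quantum signature must cause rejection even if the classical one is valid.
rejected = hybrid_verify(firmware, sign_classical(firmware), b"\x00" * 32,
                         verify_classical, verify_pq)
print(accepted, rejected)
```

Requiring both checks means an attacker must break both schemes, so the device stays protected if either the classical algorithm falls to a quantum attack or the newer post-quantum scheme turns out to have a flaw.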

Equally important is the ability to migrate trust anchors in the field. Hardware that cannot rotate its cryptographic identity without physical access becomes a liability the moment quantum attacks become practical. In this sense, quantum-safe hardware is no longer just a security feature; it is a prerequisite for product longevity.

Scale Changes Everything

Post-quantum cryptography is feasible at a small scale. The real challenge emerges at volume. Larger keys and signatures mean more data moving through networks, more bytes stored in certificates, and more cycles spent on verification. In isolation, these costs are manageable. At the scale of global telecom networks, payment systems, and cloud platforms, they become systemic.

Cloud providers have already found that naïve PQC deployment can inflate handshake sizes enough to affect latency and throughput. Telecom operators face similar issues in authentication signalling, where milliseconds matter. This is why hybrid cryptography combining classical and post-quantum algorithms has become the dominant near-term strategy. Hardware acceleration is the critical enabler here. When PQC is supported at the silicon level, much of the overhead becomes manageable. When it is not, performance penalties cascade across the system.
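A short calculation shows how much a hybrid key exchange grows. The sizes are the published parameter sizes for X25519 and ML-KEM-768 (FIPS 203); choosing this particular pair of algorithms is an assumption made for illustration, since the article does not name one.

```python
# Sketch: key-exchange bytes on the wire, classical versus hybrid.
# Sizes are the published parameter sizes for X25519 and ML-KEM-768 (FIPS 203);
# the choice of these two algorithms is an assumption for illustration.

X25519_PUBLIC_KEY = 32          # bytes, sent in each direction
MLKEM768_ENCAPS_KEY = 1184      # bytes, client -> server
MLKEM768_CIPHERTEXT = 1088      # bytes, server -> client

classical_total = 2 * X25519_PUBLIC_KEY
hybrid_client = X25519_PUBLIC_KEY + MLKEM768_ENCAPS_KEY
hybrid_server = X25519_PUBLIC_KEY + MLKEM768_CIPHERTEXT
hybrid_total = hybrid_client + hybrid_server

print(f"classical key exchange: {classical_total} bytes")
print(f"hybrid key exchange:    {hybrid_total} bytes")
print(f"growth factor:          {hybrid_total / classical_total:.1f}x")
```

The hybrid client share alone (1,216 bytes) approaches a typical 1,500-byte Ethernet MTU, which is one plausible reason handshake inflation can surface as extra packets and added latency. Certificates carrying post-quantum signatures would add further to these totals.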

The Real Deadline No One Wants to Announce

Public timelines often place cryptographically relevant quantum computers in the early to mid-2030s. Internally, many security leaders plan as if the deadline is earlier. The reason is simple: hardware migration takes time. From design and validation to certification and deployment, replacing cryptographic hardware across critical infrastructure can take a decade. Waiting for definitive proof that quantum computers can break RSA guarantees that the response will come too late. A pragmatic consensus is forming across the industry. By the second half of this decade, all new cryptographic hardware procurement must be quantum-safe by design. By the end of the decade, high-value infrastructure must complete the transition. Anything beyond that risks turning today’s encrypted data into tomorrow’s breach archive.
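The timing argument can be expressed with a rule of thumb often called Mosca's inequality, which the article does not cite by name: data is at risk when the time it must stay secret plus the time needed to migrate exceeds the time until quantum attacks are practical. The sketch below uses the decade-long migration mentioned above; the other two numbers are illustrative assumptions.

```python
# Sketch of Mosca's inequality: data is at risk if
#   (years the data must stay secret) + (years needed to migrate) > (years until quantum attacks).
# The shelf life and time-to-quantum values below are illustrative assumptions.

def years_of_exposure(shelf_life: float, migration_time: float, time_to_quantum: float) -> float:
    """Positive result = years during which still-sensitive data is decryptable."""
    return max(0.0, shelf_life + migration_time - time_to_quantum)


exposure = years_of_exposure(shelf_life=10, migration_time=10, time_to_quantum=8)
print(f"exposed for {exposure:.0f} years")
```

With these inputs the result is 12 years of exposure, which shows why planners treat the migration deadline as earlier than the expected arrival of the quantum computer itself.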

As Marco Pereira, Global Head of Cybersecurity at Capgemini, has emphasised about quantum risk: “Quantum readiness isn’t about predicting a date, it’s about managing irreversible risk. Every encrypted asset today could become tomorrow’s breach if organisations delay adopting post-quantum protections.”

Quantum-safe hardware is not driven by fear of the unknown. It is driven by certainty that cryptography is bounded by physics, certainty that hardware lifecycles are slow, and certainty that data longevity outlasts algorithm lifetimes. The organisations that navigate this transition successfully will not be those that deploy post-quantum cryptography fastest in software, but those that redesign their hardware roots of trust early, with crypto-agility, scale, and long-term integrity at the core. In the quantum era, cybersecurity failures will not arrive with alarms or outages. They will arrive quietly, years after the data was first captured. The only winning strategy is to make that data permanently useless before the quantum key ever turns.

The post Quantum-Safe Hardware: Why the Cybersecurity Deadline Is Closer Than the Quantum Breakthrough appeared first on ELE Times.

SQL Server IoT 2025: Why It Matters for Embedded and OEM Systems

Courtesy: Arrow Electronics

The new SQL Server IoT 2025 is now available! If you build devices, appliances, or embedded systems that ship with a database inside, SQL Server IoT 2025 is worth a serious look. It brings the SQL Server 2025 engine into long-life, fixed-function products. You get the full engine, the same AI features, the same JSON and vector capabilities, and the same security improvements. The only difference is that it is packaged and licensed for OEM and embedded scenarios.

In my experience supporting embedded customers, the pattern is consistent. More data at the edge, tight security requirements, long product lifecycles, and pressure to support AI without adding cloud dependencies. SQL Server IoT 2025 helps you handle those problems without changing how you design your systems. You can use the same T-SQL, drivers, tools, containers, and development workflow.

AI where your device runs

The biggest change in SQL Server IoT 2025 is the built-in AI stack. The database now supports a native vector type, semantic search, hybrid search, and local or remote model execution. You can generate embeddings inside the engine, and you can run AI agents through a secure REST endpoint that SQL Server manages.

Nothing in this requires a cloud connection unless you choose to use one. You can keep models local by using Ollama or ONNX Runtime. You can also call cloud models through Azure OpenAI or OpenAI.

For embedded systems, this means you can build features that previously required a cloud round-trip. Examples include local anomaly detection, troubleshooting assistance, natural language search of manuals or logs, and smarter automation. If you already store your device data in SQL Server, the new vector features let you use that data immediately.
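Conceptually, semantic search over a vector column ranks stored rows by how close their embeddings are to the embedding of a query. The sketch below shows that idea in plain Python rather than in SQL Server's own syntax; the log messages and the three-dimensional embeddings are made up, and real embeddings have hundreds of dimensions.

```python
# Conceptual sketch of vector (semantic) search: rank stored rows by cosine
# similarity to a query embedding. Embeddings and rows are made-up examples;
# this is not SQL Server syntax.
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical device-log rows with tiny 3-dimensional embeddings.
rows = [
    ("pump pressure dropped below threshold", [0.9, 0.1, 0.0]),
    ("firmware update completed", [0.0, 0.2, 0.9]),
    ("motor temperature rising", [0.7, 0.6, 0.1]),
]

query_embedding = [0.8, 0.3, 0.0]  # stands in for an embedded user question

ranked = sorted(rows, key=lambda row: cosine_similarity(query_embedding, row[1]), reverse=True)
top_match = ranked[0][0]
print(top_match)
```

A database engine with a native vector type performs the same kind of ranking next to the data, which is what lets a device answer natural-language questions about its own logs without a cloud round-trip.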

Security that matches modern requirements

The platform is secure out of the box. SQL Server IoT 2025 carries forward the security updates from SQL Server 2025. That includes TLS 1.3, TDS 8.0, PBKDF hashing, managed identities, and stricter defaults. This helps you ship hardware that is ready for audit and compliance checks. For teams in healthcare, manufacturing, or other controlled industries, this significantly reduces design risk.

Performance improvements that help small systems

Most devices in the field run on constrained compute, so predictable behaviour under load becomes more important than raw horsepower. SQL Server IoT 2025 benefits from improvements like optimised locking, Lock After Qualification, tempdb governance, faster failover, and reduced contention during heavy workloads.

Your device can run more predictable workloads with fewer stalls. It starts faster, handles concurrency better, and gives you cleaner behaviour when something in the system misbehaves.

Better ways to move data out of the device

You also get Change Event Streaming, which pushes changes directly to Azure Event Hubs. The engine streams committed transactions without extra system tables. This helps when your design needs low-latency reporting or coordination with services outside the device.

If you use Microsoft Fabric, SQL Server IoT 2025 supports database mirroring directly into OneLake. That gives you a simple path to analytics or long-term storage without writing ETL code.

Developer workflow stays simple

Stability in the toolchain is just as important as stability in the engine. SQL Server IoT 2025 uses the same drivers, SSMS, VS Code extension, containers, and deployment workflow. You also get the new JSON type, JSON indexing, RegEx functions, Base64 utilities, and improved T-SQL functions that SQL Server 2025 introduces.

When an upgrade is worth it

If you are trying to decide whether this upgrade is worth it, these are the points that usually guide the decision:

- If your device is running SQL Server 2014 or 2016, you are past or near the end of mainstream support, and the extended support runway is shrinking fast. SQL Server IoT 2025 offers a long-life option with a modern engine, stronger security, and a cleaner feature set for long-term maintenance. You also get improvements like accelerated recovery, better indexing behaviour, and up-to-date drivers.

- If your product roadmap includes AI features or if customers are asking for analytics without sending data off the device, SQL Server IoT 2025 gives you a built-in way to handle that.

- If your company is standardising on Fabric or Azure Arc, IoT 2025 fits neatly into that architecture.

- If your design team is trying to reduce custom code around queues, logs, or sync processes, IoT 2025 reduces that work.

The post SQL Server IoT 2025: Why It Matters for Embedded and OEM Systems appeared first on ELE Times.

Microchip Releases Plastic Transient Voltage Suppressors for A&D Applications

Microchip Technology announced the release of its JANPTX family of non-hermetic plastic Transient Voltage Suppressor (TVS) devices that meet the MIL-PRF-19500 qualification, offering high-reliability protection for aerospace and defence applications. These TVS devices are the first in the industry to achieve MIL-PRF-19500 qualification in a plastic package, offering engineers a lightweight, cost-effective solution without sacrificing stringent military performance requirements. The JANPTX product line is available in voltage ranges from 5V to 175V and includes five device types across six part numbers: JANPTX1N5555UJ, JANPTX1N5558UG, JANPTX1N5629AUJ, JANPTX1N5665AUG, JANPTX1N5907UG and JANPTX1N5907UJ.

With a high peak pulse power rating of 1.5 kW and clamping response times measured at less than 100 picoseconds in internal tests, the JANPTX family is designed to help ensure the safety and reliability of sensitive electronic components in demanding environments. Designed for surface mounting, these unidirectional TVS devices deliver protection against voltage transients such as lightning strikes, electrostatic discharge (ESD), and electrical surges.
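As a rough illustration of what a 1.5 kW peak pulse power rating implies, the sketch below applies the usual TVS relationship P_PP = V_C × I_PP to estimate the peak pulse current at a given clamping voltage. The clamping voltages in the loop are hypothetical values chosen for illustration, not figures from the JANPTX datasheets.

```python
PEAK_PULSE_POWER_W = 1500.0  # 1.5 kW rating quoted in the article (10/1000 µs pulse)

def peak_pulse_current(clamping_voltage_v, peak_power_w=PEAK_PULSE_POWER_W):
    """Return the peak pulse current (A) implied by P_PP = V_C * I_PP."""
    if clamping_voltage_v <= 0:
        raise ValueError("clamping voltage must be positive")
    return peak_power_w / clamping_voltage_v

if __name__ == "__main__":
    # Hypothetical clamping voltages for illustration only; use datasheet values.
    for v_c in (10.0, 50.0, 250.0):
        print(f"V_C = {v_c:6.1f} V -> I_PP = {peak_pulse_current(v_c):6.1f} A")
```

The takeaway is that, for a fixed power rating, lower-voltage parts can carry proportionally higher surge currents.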

Key Features of the TVS Devices

- Surface-mount unidirectional TVS design

- Can suppress transients up to 1,500W at 10/1000 µs

- Capable of clamping transients in less than 100 ps

- Working voltage range: 5V to 175V

- Military qualification: MIL-PRF-19500/716

- Equivalent hermetic packages available for surface mount and thru-hole

- Weight: ~0.25 grams

Development Tools

The JANPTX devices are supported by various SPICE models, which enable the virtual prediction and simulation of a circuit’s behaviour, eliminating the need for time-consuming physical prototyping and redesigns.

The post Microchip Releases Plastic Transient Voltage Suppressors for A&D Applications appeared first on ELE Times.

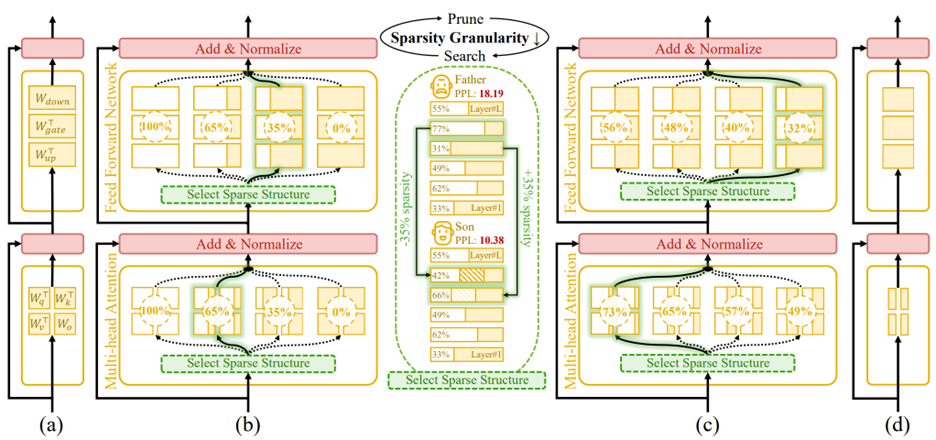

Týr-the-Pruner: Search-based Global Structural Pruning for LLMs

Courtesy: AMD

Key Takeaways:

- End-to-end global structural pruning: Týr-the-Pruner jointly optimises pruning and layer-wise sparsity allocation, avoiding two-stage global ranking pipelines.

- Multi-sparsity supernet with expectation-aware error modelling: Layers are pruned at multiple sparsity levels and evaluated collectively to capture cross-layer dependencies.

- Coarse-to-fine evolutionary search under a fixed sparsity budget: Sparsity-shift mutations preserve global constraints while progressively refining resolution (12.5% → 1.56%).

- Taylor-informed, backprop-free local pruning: First- and second-order saliency guides structured pruning with minimal functional drift.

- Near-dense accuracy with real hardware gains: Up to 50% parameter reduction retains ~97% accuracy on Llama-3.1-70B, accelerating inference on AMD Instinct GPUs.

As large language models (LLMs) scale into the tens and hundreds of billions of parameters, pruning has re-emerged as a critical lever for improving inference efficiency without sacrificing accuracy. AMD’s Týr-the-Pruner advances this frontier with a search-based, end-to-end framework for global structural pruning, delivering up to 50% parameter reduction while retaining ~97% of dense accuracy on Llama-3.1-70B—a new state of the art among structured pruning methods.

Accepted to NeurIPS 2025, the work also demonstrates tangible inference speedups on AMD Instinct GPUs, reinforcing pruning’s relevance not just as a compression technique, but as a practical path to deployment-scale efficiency.

Why global sparsity matters

Local structural pruning is appealing for its simplicity and efficiency: layers are pruned independently, often allowing even hundred-billion-parameter models to fit on a single device. However, this approach enforces uniform per-layer sparsity, overlooking how errors and redundancies propagate across layers.

Existing “global” pruning methods attempt to address this by first ranking substructures across layers and then pruning accordingly. While intuitive, this two-stage pipeline breaks end-to-end optimisation and struggles to capture inter-layer interactions.

Týr-the-Pruner flips the paradigm. Instead of ranking structures before pruning, it first constructs a multi-sparsity supernet and then searches directly for the optimal layer-wise sparsity distribution under a fixed global budget—yielding a truly end-to-end global pruning strategy.

Inside Týr-the-Pruner: How It Works

Figure 1. An overview of Týr-the-Pruner. Large language models (a) are pruned locally at multiple sparsity ratios and assembled into a supernet (b). An iterative prune-and-search strategy selects the optimal sparse structure for each layer while maintaining a target overall sparsity ratio: pruning and sparsity-shift-driven evolutionary search alternate, with the sparsity interval refined from coarse to fine (c). The result is the pruned LLM with the optimal sparsity distribution (d).

Building a Reliable Supernet

The process begins by locally pruning every layer across multiple sparsity levels. Týr employs Taylor-informed saliency (first- and second-order) alongside backprop-free weight adjustment, applied progressively to minimise performance perturbations.
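The article does not give Týr's exact saliency formula, so the following is only a generic sketch of a first- and second-order Taylor saliency estimate. It assumes a diagonal curvature approximation and scores each group of weights by the estimated loss change from zeroing it. The group names and numbers are hypothetical.

```python
def taylor_saliency(weights, grads, curvatures):
    """Estimate |loss change| from zeroing a group of weights.

    Uses dL ~ sum(-g*w + 0.5*h*w^2): a Taylor expansion with dw = -w and a
    diagonal curvature term h (an assumption of this sketch).
    """
    delta = sum(-g * w + 0.5 * h * w * w
                for w, g, h in zip(weights, grads, curvatures))
    return abs(delta)

def least_salient(groups, count):
    """Return the names of the `count` groups with the lowest saliency."""
    ranked = sorted(groups, key=lambda name: taylor_saliency(*groups[name]))
    return ranked[:count]

# Hypothetical structures: name -> (weights, gradients, diagonal curvature).
GROUPS = {
    "head_a": ([0.5, -0.5], [0.0, 0.0], [1.0, 1.0]),
    "head_b": ([0.1, 0.1], [0.0, 0.0], [1.0, 1.0]),
    "head_c": ([1.0], [-1.0], [0.0]),
}

if __name__ == "__main__":
    print(least_salient(GROUPS, 1))
```

Structures with the lowest estimated loss change are the natural candidates to prune first.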

To ensure that different pruned variants remain mutually consistent, the framework introduces expectation-aware error accumulation, addressing the otherwise ambiguous error propagation that arises when multiple pruned copies coexist within a supernet.

Coarse-to-Fine Global Search

Once the supernet is established, Týr performs an evolutionary sparsity-shift search. Each mutation preserves the global sparsity budget—for example, making one layer slightly denser while another becomes equivalently sparser. Candidate models are evaluated using distillation-based similarity metrics over hidden activations and logits.
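A budget-preserving mutation of this kind can be sketched as follows. This is an illustrative reading of the description above, not AMD's implementation: the function name, the uniform random choice of layers, and the per-layer bounds of 0 and 1 are assumptions.

```python
import random

def sparsity_shift(sparsities, step, rng=random):
    """Return a copy where one layer gets `step` denser and another `step` sparser.

    The sum of per-layer sparsities (the global budget) is unchanged.
    """
    child = list(sparsities)
    can_get_denser = [i for i, s in enumerate(child) if s - step >= 0.0]
    can_get_sparser = [i for i, s in enumerate(child) if s + step <= 1.0]
    pairs = [(d, s) for d in can_get_denser for s in can_get_sparser if d != s]
    if not pairs:
        return child  # no valid shift exists; return the parent unchanged
    denser, sparser = rng.choice(pairs)
    child[denser] -= step
    child[sparser] += step
    return child

if __name__ == "__main__":
    parent = [0.5] * 8  # hypothetical 8-layer model at 50% sparsity
    child = sparsity_shift(parent, 0.125)
    print(child, sum(child) == sum(parent))
```

Because every mutation moves the same amount of sparsity in both directions, each candidate satisfies the global budget by construction and never needs to be repaired or rejected.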

A naïve fine-grained search would be intractable: for an 80-sublayer model, even modest sparsity resolution would imply an astronomically large configuration space. Týr sidesteps this with an iterative coarse-to-fine strategy:

- The search begins with a coarse sparsity interval (12.5%) and just nine candidates per layer.

- After identifying a strong sparsity pattern, the search recentres and halves the interval (12.5% → 6.25% → 3.13% → 1.56%).

- After four iterations, Týr reaches fine-grained sparsity resolution while keeping each iteration’s effective search space manageable.

This design steadily narrows the search, accelerates convergence, and efficiently uncovers the optimal global sparsity distribution.
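The arithmetic behind this schedule can be checked with a short sketch. It computes the halving intervals and, as an unconstrained upper bound that ignores the fixed global budget, compares the size of a uniform grid at the coarsest and finest resolution for an 80-sublayer model. The helper names are hypothetical, and the article does not state how many candidates per layer the later iterations use.

```python
def interval_schedule(start=0.125, iterations=4):
    """Return the sparsity interval for each iteration, halving every time."""
    return [start / (2 ** i) for i in range(iterations)]

def grid_levels(interval):
    """Number of sparsity levels on a uniform grid from 0% to 100% inclusive."""
    return round(1.0 / interval) + 1

def order_of_magnitude(n):
    """Return floor(log10(n)) for a positive integer n."""
    return len(str(n)) - 1

if __name__ == "__main__":
    schedule = interval_schedule()
    print([x * 100 for x in schedule])  # intervals in percent
    sublayers = 80
    coarse = grid_levels(schedule[0]) ** sublayers
    fine = grid_levels(schedule[-1]) ** sublayers
    print(f"coarse grid: about 10^{order_of_magnitude(coarse)} configurations")
    print(f"fine grid:   about 10^{order_of_magnitude(fine)} configurations")
```

Searching the fine grid directly would mean exploring a space many orders of magnitude larger than the coarse one, which is why the interval is refined only around an already promising pattern.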

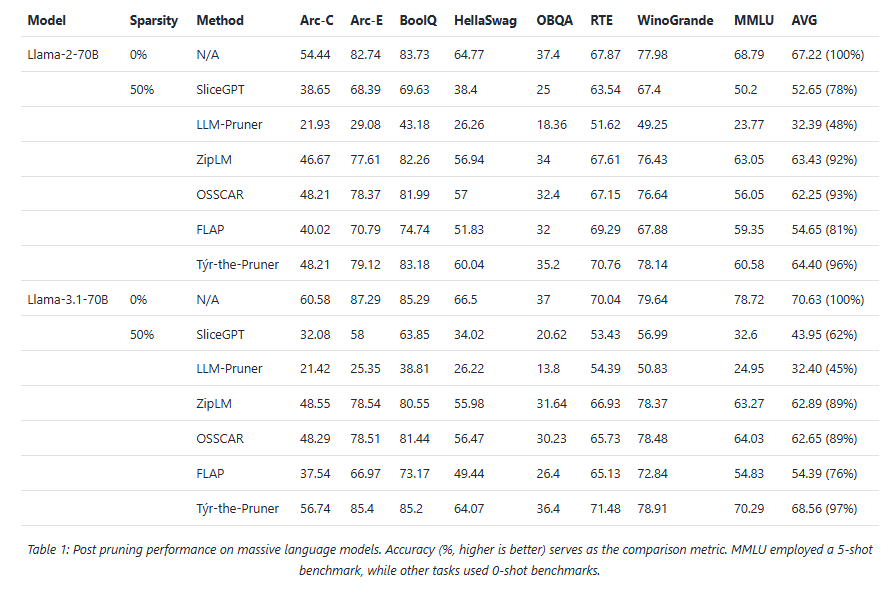

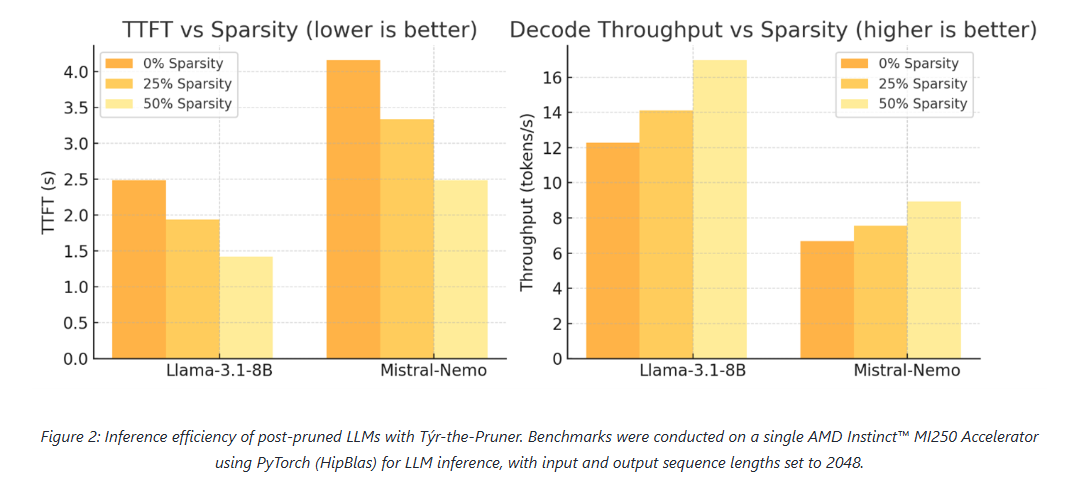

Results: Accuracy and efficiency on AMD hardware

Across models and benchmarks, Týr-the-Pruner consistently preserves near-dense accuracy while delivering meaningful efficiency gains on AMD Instinct MI250 accelerators.

At 50% sparsity, the method retains 96–97% average accuracy on 70B-scale models, outperforming structured pruning approaches such as SliceGPT, LLM-Pruner, and FLAP. On smaller models, the runtime benefits are equally compelling: for Llama-3.1-8B and Mistral-Nemo, pruning speeds up time-to-first-token by up to 1.75× and boosts decode throughput by up to 1.38×.

These results position pruning as a first-class optimisation technique for large-scale LLM inference, particularly on modern accelerator architectures.

Practical Considerations: Memory and Search Efficiency

While supernets can be large, Týr keeps memory usage close to that of a single dense model by storing pruned substructures on disk and loading only the active subnet into high-bandwidth memory. Disk footprints remain manageable—around 40 GB for 7–8B models and ~415 GB for 70B models—with older artefacts cleaned up between iterations.

The evolutionary search itself is computationally efficient. Evaluations proceed under progressively increasing token budgets (2K → 16K → 128K), converging rapidly thanks to the coarse-to-fine schedule. For 8B-scale models, a single search iteration completes in a few hours, keeping overall runtime well within practical limits.

Summary

Týr-the-Pruner represents a shift in how global structural pruning is approached. By unifying pruning and sparsity allocation into a single, end-to-end search process—and combining it with expectation-aware error modelling and coarse-to-fine optimisation—the framework achieves both high accuracy retention and real-world inference acceleration.

With up to 50% parameter reduction and ~97% accuracy preserved on Llama-3.1-70B, Týr-the-Pruner demonstrates that global pruning can be both principled and practical—setting a new benchmark for structured pruning in the era of large-scale LLM deployment.

The post Týr-the-Pruner: Search-based Global Structural Pruning for LLMs appeared first on ELE Times.

LEDS Manufactured Backwards

My college Electronics class final was to simply solder parts onto a pre-made circuit, and in my case it was an LED Christmas tree. After soldering 36 TINY AS HELL LEDs, I tested it and no lights turned on.... I decided to test an extra LED, and it turns out the legs were manufactured with the long leg as the negative side and the short leg as the positive side. I’m so cooked.