Feed aggregator

Gartner Forecasts Having 116 Million EVs on the Road in 2026

Gartner, Inc., a business and technology insights company, predicts that 116 million electric vehicles (EVs), including cars, buses, vans and heavy trucks, will be on the road in 2026.

According to the company's research, battery electric vehicles (BEVs) are forecast to continue to account for well over half of the EV installed base, but an increasing proportion of customers are choosing plug-in hybrid electric vehicles (PHEVs) (see Table 1).

Table 1. Electric Vehicle Installed Base by Vehicle Type, Worldwide, 2025-2026 (Single Units)

| Vehicle Type | 2025 Installed Base | 2026 Installed Base |
|---|---|---|
| Battery Electric Vehicles (BEV) | 59,480,370 | 76,344,452 |
| Plug-in Hybrid Electric Vehicles (PHEV) | 30,074,582 | 39,835,111 |
| Total | 89,554,951 | 116,179,563 |

Source: Gartner (December 2025)

Expert Take:

“Despite the U.S. government introducing tariffs on vehicle imports and many governments removing the subsidies and incentives for purchasing EVs, the number of EVs on the road is forecast to increase 30% in 2026,” said Jonathan Davenport, Sr Director Analyst at Gartner. “In 2026, China is projected to account for 61% of total EV installed base, and global ownership of plug-in hybrid EVs (PHEVs) is expected to rise 32% year-over-year as customers value the reassurance of a back-up petrol engine for use, should they need it.”
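The growth percentages quoted above can be checked against Table 1 with a few lines of arithmetic. The sketch below is only a sanity check on the published figures; the helper names `growth_pct` and `share_pct` are made up for illustration.

```python
# Installed-base figures copied from Table 1 (Gartner, December 2025).
installed_base = {
    "BEV": (59_480_370, 76_344_452),
    "PHEV": (30_074_582, 39_835_111),
    "Total": (89_554_951, 116_179_563),
}


def growth_pct(before: int, after: int) -> float:
    """Year-over-year growth as a percentage."""
    return (after - before) / before * 100.0


def share_pct(part: int, whole: int) -> float:
    """Share of the installed base as a percentage."""
    return part / whole * 100.0


for name, (y2025, y2026) in installed_base.items():
    print(f"{name}: {growth_pct(y2025, y2026):.1f}% growth")

total_2025, total_2026 = installed_base["Total"]
phev_2025, phev_2026 = installed_base["PHEV"]
print(f"PHEV share 2025: {share_pct(phev_2025, total_2025):.1f}%")
print(f"PHEV share 2026: {share_pct(phev_2026, total_2026):.1f}%")
```

The total comes out just under 30% and the PHEV figure at roughly 32%, consistent with the rounded numbers in the quote, and the PHEV share of the installed base edges up between the two years.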

The post Gartner Forecasts Having 116 Million EVs on the Road in 2026 appeared first on ELE Times.

Toradex Launches Two New Computer on Module Families for Ultra-Compact Industrial and IoT Applications

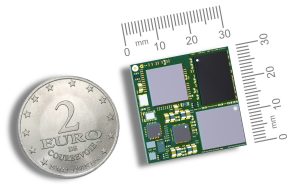

Toradex has expanded its embedded computing portfolio by launching two entirely new Computer on Module (CoM) families, OSM and Lino. The launch comprises four new modules powered by NXP i.MX 93 and i.MX 91 processors: OSM iMX93, OSM iMX91, Lino iMX93, and Lino iMX91.

The OSM and Lino families combine cost-optimized, industrial-grade reliability with ultra-compact form factors and long-term software support. They are designed for high-volume, space-constrained industrial IoT devices such as industrial controllers, gateways, smart sensors, and handheld systems. For edge AI and industrial IoT applications, the NXP i.MX 93 offers a 0.5 TOPS NPU, enabling entry-level hardware-accelerated on-device machine learning for smart sensing, analytics, and industrial intelligence. Designed for extreme temperatures from -40°C to +85°C, both the OSM and Lino families deliver industrial-grade reliability and availability through 2038, providing a future-proof foundation for next-generation IoT and edge devices.

“Both families deliver new compact, reliable, industrial Edge AI compute platforms,” said Samuel Imgrueth, CEO at Toradex. “While OSM adds a solderable standard form factor, Lino provides connector-based ease of use for rapid integration and serviceability. This empowers customers to design next generation, intelligent, space-constrained devices with confidence, scalability, and long-term support.”

The OSM family adheres to the Open Standard Module (OSM) Size-S specification, providing a 30 × 30 mm solderable, connector-less design optimized for automated assembly, rugged operation, and cost-effective scaling. It’s an ideal choice for high-volume applications of up to several hundred thousand devices a year.

The Lino family provides a cost-optimized, connector-based entry point for space-constrained devices. Its easy-to-use connector interface simplifies integration and serviceability and speeds up development, while rich connectivity options support a wide range of scalable industrial and IoT applications.

Toradex is also introducing the Verdin-Lino Adapter, allowing any Lino module to be mounted onto any Verdin-compatible carrier board. This gives customers immediate access to the powerful Verdin ecosystem and enables testing and validation using both the Verdin Development Board and existing Verdin-based custom designs.

All modules come with full Toradex software support, including a Yocto Reference Image and support for Torizon, a Yocto-based, long-term-supported Linux platform that provides secure OTA remote updates, device monitoring, remote access, and simplified EU CRA (Cyber Resilience Act) compliance. Its integration with Visual Studio Code and its rich ecosystem accelerate development while ensuring production reliability and operational security. Torizon is also the ideal starting point for your own Linux distribution.

The post Toradex Launches Two New Computer on Module Families for Ultra-Compact Industrial and IoT Applications appeared first on ELE Times.

Global IP dynamics highlight surging GaN innovation activity in Q3/2025, says KnowMade

🏰 Join us for the tour “City of Corruption Secrets: Discover the Truth Hidden Behind the Facades”

On December 14 at 12:00, we invite everyone interested to a tour that will change the way you see Kyiv.

We will follow routes that hold more than meets the eye, past buildings that could tell more than one interesting story.

The Great Leap: How AI is Reshaping Cybersecurity from Pilot Projects to Predictive Defense

Imagine your cybersecurity team as a group of highly-trained detectives. For decades, they’ve been running through digital crime scenes with magnifying glasses, reacting to the broken window or the missing safe after the fact. Now, suddenly, they have been handed a crystal ball—one that not only detects the threat but forecasts the modus operandi of the attacker before they even step onto the property. That crystal ball is Artificial Intelligence, and the transformation it’s bringing to cyber defense is less a technological upgrade and more a fundamental re-engineering of the entire security operation.

Palo Alto Networks, in partnership with the Data Security Council of India (DSCI), released the State of AI Adoption for Cybersecurity in India report. The report found that only 24% of CXOs consider their organizations fully prepared for AI-driven threats, underscoring a significant gap between adoption intent and operational readiness. The report sets a clear baseline for India Inc., examining where AI adoption stands, what organizations are investing in next, and how the threat landscape is changing. It also surfaces capability and talent gaps, outlines governance, and details preferred deployment models.

While the intent to leverage AI for enhanced cyber defense is almost universal, its operational reality is still maturing. The data reveals a clear gap between strategic ambition and deployed scale.

The report underscores the dual reality of AI: it is a potent defense mechanism but also a primary source of emerging threat vectors. Key findings include:

- Adoption intent is high, maturity is low: 79% of organizations plan to integrate AI/ML towards AI-enabled cybersecurity, but 40% remain in the pilot stage. The main goal is operational speed, prioritizing the reduction of Mean Time to Detect and Respond (MTTD/MTTR).

- Investments are Strategic: 64% of organizations are now proactively investing through multi-year risk-management roadmaps.

- Threats are AI-Accelerated: 23% of the organizations are resetting priorities due to new AI-enabled attack paradigms. The top threats are coordinated multi-vector attacks and AI-poisoned supply chains.

- Biggest Barriers: Financial overhead (19%) and the skill/talent deficit (17%) are the leading roadblocks to adoption.

- Future Defense Model: 31% of organizations see Human-AI Hybrid Defense Teams as the way AI will transform cybersecurity, and 33% require human approval for AI-enabled critical security decisions and actions.

“AI is at the heart of most serious security conversations in India, sometimes as the accelerator, sometimes as the adversary itself. This study, developed with DSCI, makes one thing clear: appetite and intent are high, but execution and operational discipline are lagging,” said Swapna Bapat, Vice President and Managing Director, India & SAARC, Palo Alto Networks. “Catching up means using AI to defend against AI, but success demands robustness. Given the dynamic nature of building and deploying AI apps, continuous red teaming of AI is an absolute must to achieve that robustness. It requires coherence: a platform that unifies signals across network, operations, and identity; Zero-Trust verification designed into every step; and humans in the loop for decisions that carry real risk. That’s how AI finally moves from shaky pilots to robust protection.”

Vinayak Godse, CEO, DSCI, said, “India is at a critical juncture where AI is reshaping both the scale of cyber threats and the sophistication of our defenses. AI-enabled attacker capabilities are rapidly increasing in scale and sophistication. Simultaneously, AI adoption for cybersecurity can strengthen security preparedness to navigate risk, governance, and operational readiness to predict, detect, and respond to threats in real time. This AI adoption study, supported by Palo Alto Networks, reflects DSCI’s efforts to provide organizations with insights to navigate the challenges emerging out of AI-enabled attacks for offense while leveraging AI for security defense.”

The report was based on a survey of 160+ organizations across BFSI, manufacturing, technology, government, education, and mid-market enterprises, covering CXOs, security leaders, business unit heads, and functional teams.

The post The Great Leap: How AI is Reshaping Cybersecurity from Pilot Projects to Predictive Defense appeared first on ELE Times.

I know it's nothing crazy but I built this little FM radio board from a kit and I'm proud because it works. I've never soldered before so please don't mind my ugly soldering skills

submitted by /u/Cold-Helicopter6534

Just wanted to share the insides of this Securesync 1200 signal generator from the 2000s and the option card I installed

submitted by /u/jacobson_engineering

Bringing up my rosco m68k

Hey folks! On my boards the official firmware boots cleanly, the memory checks pass, and UART I/O behaves exactly as it should. I’m using the official rosco tools to verify RAM/ROM mapping, decoding, and the overall bring-up process. I also managed to get a small “hello world” running over serial after sorting out the toolchain with their Docker setup. I’m also tinkering with a 6502 through-hole version, something simple for hands-on exploration of that architecture. Happy to answer any questions or discuss the bring-up process.

Weekly discussion, complaint, and rant thread

Open to anything, including discussions, complaints, and rants.

Sub rules do not apply, so don't bother reporting incivility, off-topic, or spam.

Reddit-wide rules do apply.

To see the newest posts, sort the comments by "new" (instead of "best" or "top").

My first Smart Socket :)

submitted by /u/udfsoft

eth industrial switch rx/tx

Yet still, one pair leads to a nonexistent chip and the second shows only diagnostics from the MCU. Life is brutal.

Simple Electronic Dice

I had a free evening, so decided to make this in the shed/workshop. It uses a 555 to produce rapid pulses, and a 4017 decade counter to sequence 6 LEDs rapidly.

In r/askelectronics I asked for advice about more chips I can use in the future, and got another 4000 series part which will allow me to drive a seven-segment display in the same fashion, as opposed to six individual LEDs.

Once I was happy with how the circuit behaved on the breadboard, I put it on stripboard. I decided to current limit the white LEDs with a 12 kΩ resistor. I don't know if using an opto-isolator in the way I did is good practice or not. It works, and is simple enough. I found that, for me, the best way to use a pulldown resistor for the 4017 trigger was to also connect a small 0.1 µF ceramic capacitor in parallel with the pulldown resistor.

I know that by no means is this groundbreaking, or advanced. It's probably akin to something that would have been made 30 or 40 years ago, but I only dabble as a hobby, and find soldering away, alone, for a few hours, whilst the rain hammers down outside quite therapeutic for me.
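For readers new to the 555 plus 4017 combination, the behaviour can be modelled in a few lines. This is only a rough sketch: the post does not say how the counter is reset or how a roll is triggered, so the wrap after six states and the "button held for a random number of pulses" model are assumptions, and `roll_dice` is a made-up name.

```python
import random


def roll_dice(rng: random.Random) -> int:
    """Model a 4017 clocked by a free-running 555 while a button is held.

    Assumption (not stated in the post): the counter is wired to wrap
    after six states, so each of the first six outputs lights one LED.
    """
    # The 555 runs far faster than a person can time, so the number of
    # pulses counted during one press is effectively random.
    pulses = rng.randint(1_000, 5_000)
    state = 0  # index of the currently active 4017 output
    for _ in range(pulses):
        state = (state + 1) % 6  # advance on each clock pulse, wrap at six
    return state + 1  # LED number 1..6


rng = random.Random()
print([roll_dice(rng) for _ in range(10)])
```

The randomness comes entirely from human timing: the counter itself is deterministic, which is why the pulse rate needs to be fast compared with a button press.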

Nice work!

submitted by /u/Electro-nut

EEVblog 1723 - AlienTek DM40 Multimeter/Oscilloscope Review

onsemi releases EliteSiC MOSFETs in T2PAK top-cool package

Without Kyiv Polytechnic graduates and scientists, Ukraine would not be a full member of the Antarctic club

A tremendous achievement of Ukrainian science today, in the view of the professional community, is the continuation of Antarctic research at the Akademik Vernadsky station and aboard the oceanographic vessel Noosfera. We can proudly say that KPI graduates and scientists took part both in Ukraine's acquisition of the station almost 30 years ago and in keeping it running and carrying out its observation program today.

My class AB amplifier

So, I'm developing a guitar amplifier for a friend, and I need a high-power (by my standards) amp to make it loud. So I made this one, my most powerful discrete amp to date, which can deliver 20 Vpp to an 8 ohm speaker without distortion from a 24 V supply.

I had a problem connecting everything for tests and idle current calibration because PCB is , so I had to improvise. I put a power diode into the ground terminal of the amp, connected the big clip of the function generator ground to it, then connected the small clip of the power supply ground, and the scope ground to the power supply ground clip. The result is this big tangle of wires and connectors, but it worked as intended.

The design is a variation of an amp from a 70s record player, but with a changed voltage rating and a conversion from class B to AB. It's surprisingly stable and silent when the input is floating, so I like it.
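To put the 20 Vpp figure in perspective, here is a quick back-of-the-envelope calculation of the output power. It assumes a sine-wave test signal and a purely resistive 8 ohm load, neither of which the post states explicitly.

```python
import math

v_pp = 20.0    # peak-to-peak output swing from the post, volts
r_load = 8.0   # speaker impedance from the post, ohms

v_peak = v_pp / 2.0
v_rms = v_peak / math.sqrt(2.0)  # valid for a sine wave only
p_avg = v_rms ** 2 / r_load      # average power into a resistive load
i_peak = v_peak / r_load         # peak current the output stage must supply

print(f"Vpeak = {v_peak:.1f} V, Vrms = {v_rms:.2f} V")
print(f"Average power = {p_avg:.2f} W, peak current = {i_peak:.2f} A")
```

Under those assumptions the amp delivers about 6.25 W average with 1.25 A peaks, and the 10 V peak swing leaves roughly 2 V of headroom per rail if the 24 V supply is split evenly around the output.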

The Big Allis generator sixty years ago

Think back to the 1965 electrical power blackout in the Northeast United States of just over sixty years ago. It was on November 9, 1965. There was a huge consequence for Consolidated Edison in New York City.

Their power-generating facility in Ravenswood had been equipped with a generator made by Allis-Chalmers, as shown in the following screenshots.

Figure 1 Ravenswood power generating facility and the Big Allis power generator.

That generator was the largest of its kind in the whole world at that time. Larger generators did get made in later years, but at that time, there were none bigger. It was so big that some experts opined that such a generator would not even work. Because of its size and its manufacturer’s name, that generator came to be called “Big Allis”.

Big Allis had a major design flaw. The bearings that supported the generator’s rotor were protected by oil pumps that were powered from the Big Allis generator itself.

When the power grid collapsed, Big Allis stopped delivering power, which then shut down the pumps delivering the oil pressure that had been protecting the rotor bearings.

With no oil pressure, the bearings were severely damaged as the rotor slowed down to a halt. One newspaper article described the bearings as having been ground to dust. It took months to replace those bearings and to provide their oil pumps with separate diesel generators devoted solely to maintaining the protective oil pressure.

So far as I know, Big Allis is still in service, even through the later 1977 and 2003 blackouts, so I guess that those 1965 revisions must have worked out.

John Dunn is an electronics consultant, and a graduate of The Polytechnic Institute of Brooklyn (BSEE) and of New York University (MSEE).

The post The Big Allis generator sixty years ago appeared first on EDN.

Optimized analog front-end design for edge AI

Courtesy: Avnet

Key Takeaways:

1. AI models see data differently: what makes sense to a digital processor may not be useful to an AI model, so avoid over-filtering and consider separate data paths.
2. Consider training needs: models trained at the edge will need labeled data (such as clean, noisy, good, faulty).
3. Analog data is diverse: match the amplifier to the source, and consider the bandwidth needs of the model and the path’s signal-to-noise ratio.

Machine learning (ML) and artificial intelligence (AI) have expanded the market for smart, low-power devices. Capturing and interpreting sensor data streams leads to novel applications. ML turns simple sensors into smart leak detectors by inferring why the pressure in a pipe has changed. AI can utilize microphones in audio equipment to detect events within the home, such as break-ins or an occupant falling.

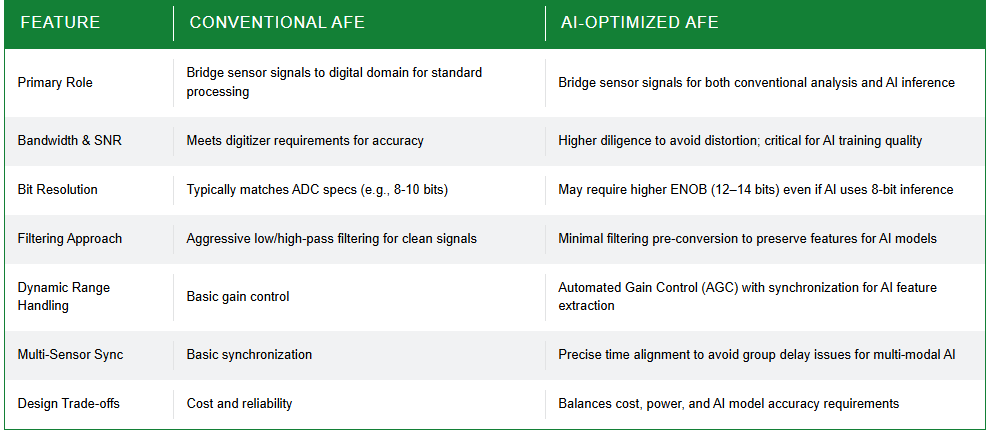

For many applications that rely on real-world data, the analog front-end (AFE) is one of the most important design elements as it functions as a bridge to the digital world. At a high level, AFEs delivering data to a machine-learning back-end have broadly similar design needs to conventional data-acquisition and signal-processing systems.

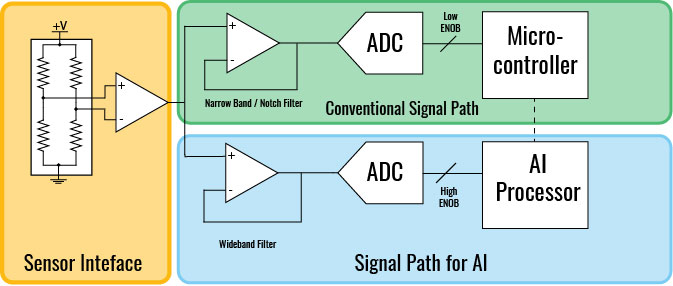

But in some applications, particularly those in transition from IoT to AIoT, the data is doing double-duty. Sensors could be used for conventional data analysis by back-end systems and also as real-time input to AI models. There are trade-offs implied by this split, but it could also deliver greater freedom in the AFE architecture. Any design freedom must still address overall cost, power efficiency, and system reliability.

The importance of bandwidth and signal-to-noise ratio

Accuracy is often an imperative with analog signals. The signal path must deliver the bandwidth and signal-to-noise ratio required by the front-end’s digitizer. When using AI, designers need to be even more diligent about avoiding distortion, because spurious signals introduced into the training data could compromise the resulting model.

The classic AFE may need to change to accommodate the sensor and digital processing sections, and the AI model’s needs which may be different. (Source: Avnet)

For signals with a wide dynamic range, it may make sense to employ automated gain control (AGC) to ensure there is enough detail in the recorded signal under all practical conditions. The changes in amplification should also be passed to the digitizer and synchronized with the sensor data so they can be recorded as features during AI training or combined by a preprocessing step into higher-resolution samples. If not, the model may learn the wrong features during training.
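One way to picture the gain-logging idea is the minimal sketch below. It is an idealized model, not a description of any particular AFE: the gain steps, the 8-bit converter, the 0.5 target level, and the function names are all assumptions chosen for illustration, and a real AGC loop reacts with some delay instead of picking the gain per sample.

```python
def quantize(x: float, bits: int = 8) -> int:
    """Convert x (full scale is -1..1) to a signed integer ADC code, with clipping."""
    levels = 2 ** (bits - 1)
    code = round(x * levels)
    return max(-levels, min(levels - 1, code))


def acquire_with_agc(signal, gains=(1, 4, 16, 64), bits=8, target=0.5):
    """Return (code, gain) pairs so the gain travels with every sample.

    gains must be listed in ascending order.
    """
    records = []
    for x in signal:
        gain = gains[0]
        for g in gains:
            # Keep the largest gain that leaves the sample below the target level.
            if abs(x) * g <= target:
                gain = g
        records.append((quantize(x * gain, bits), gain))
    return records


def reconstruct(records, bits=8):
    """Undo the gain to recover a wider-dynamic-range estimate of the input."""
    levels = 2 ** (bits - 1)
    return [code / levels / gain for code, gain in records]


signal = [0.4, 0.1, 0.02, 0.005, 0.3]  # hypothetical sensor values
records = acquire_with_agc(signal)
estimate = reconstruct(records)
for x, (code, gain), est in zip(signal, records, estimate):
    print(f"in={x:.4f} gain={gain:>2} code={code:>3} reconstructed={est:.5f}")
```

Because each record carries its gain, the pairs can either be fed to the model as two features or merged by `reconstruct` into one higher-resolution value. Dropping the gain would make the 0.4 and 0.1 inputs look identical to the model, since both produce the same ADC code.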

Interfacing AI systems with multi-sensor designs

Multi-sensor designs introduce another consideration. Devices that process biological signals or industrial condition-monitoring systems often need to process multiple types of data together. Time-synchronized data will deliver the best results as changes in group delay caused by filtering or digitization pipelines of different depths can change the relationship between signals.

The use of AI may lead the designer to make choices they might not make for simpler systems. For example, aggressive low- and high-pass filtering might help deliver signals that are easier for traditional software to interpret. But this filtering may obscure signal features that are useful to the AI.

Design Tip – Analog Switches & Multiplexers

Analog switches and multiplexers perform an important role in AFEs where multiple sensors are used in the signal chain. Typically, these devices are digitally addressed and controlled. Switches selectively connect inputs to outputs, while multiplexers route a specific input to a common output. Design considerations include on-resistance, switching speed, bandwidth, and crosstalk.

For example, high-pass filtering can be useful for removing apparent signal drift but will also remove cues from low-frequency sources, such as long-term changes in pressure. Low-pass filtering may remove high-frequency signal components, such as transients, that are useful for AI-based interpretation. It may be better to perform the filtering digitally after conversion for other downstream uses of the data.
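The effect of each filter type can be seen on a synthetic trace. The sketch below uses simple first-order digital filters as stand-ins for the analog ones; the pressure values, the filter coefficients, and the one-sample transient are invented for illustration.

```python
def high_pass(x, alpha=0.9):
    """First-order high-pass: y[n] = alpha * (y[n-1] + x[n] - x[n-1])."""
    y = [0.0]
    for n in range(1, len(x)):
        y.append(alpha * (y[-1] + x[n] - x[n - 1]))
    return y


def low_pass(x, alpha=0.1):
    """First-order low-pass: y[n] = y[n-1] + alpha * (x[n] - y[n-1])."""
    y = [x[0]]
    for n in range(1, len(x)):
        y.append(y[-1] + alpha * (x[n] - y[-1]))
    return y


# Hypothetical pressure trace: slow drift from 1.0 to 2.0 plus one short transient.
n_samples = 1000
raw = [1.0 + n / (n_samples - 1) for n in range(n_samples)]
raw[500] += 1.0  # single-sample spike

hp = high_pass(raw)
lp = low_pass(raw)

drift_raw = raw[-1] - raw[0]      # long-term change present in the input
drift_hp = hp[-1] - hp[0]         # what survives the high-pass filter
spike_raw = raw[500] - raw[499]   # transient step in the input
spike_lp = lp[500] - lp[499]      # what survives the low-pass filter

print(f"drift: raw={drift_raw:.3f}, after high-pass={drift_hp:.3f}")
print(f"spike: raw={spike_raw:.3f}, after low-pass={spike_lp:.3f}")
```

The high-pass output retains almost none of the long-term pressure change, and the low-pass output flattens the transient to a fraction of its height, which is why keeping a lightly filtered path for the AI model can be worthwhile.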

Techniques for optimizing energy efficiency in AFEs

Programmable AFEs, or interchangeable AFE pipelines, can improve energy optimization. It is common for edge devices to operate in a low-energy “always on” mode, acquiring signals at a relatively low level of accuracy while the AI model is inactive. Once a signal passes a threshold, the system wakes the AI accelerator and moves into a high-accuracy acquisition mode.

That change can be accommodated in some cases by programming the preamplifiers and similar components in the AFE to switch between low-power and low-noise modes dynamically.
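The wake-on-threshold behaviour can be sketched as a small state machine. The threshold, the number of quiet samples before dropping back to low power, and all names here are illustrative assumptions; the article does not specify them.

```python
from enum import Enum


class Mode(Enum):
    LOW_POWER = "low_power"          # coarse, always-on acquisition
    HIGH_ACCURACY = "high_accuracy"  # AI accelerator awake, low-noise AFE


def run_acquisition(samples, wake_threshold=0.5, quiet_limit=3):
    """Return the acquisition mode used for each sample.

    wake_threshold and quiet_limit are illustrative values only.
    """
    mode = Mode.LOW_POWER
    quiet = 0  # consecutive below-threshold samples while awake
    history = []
    for x in samples:
        if mode is Mode.LOW_POWER:
            if abs(x) >= wake_threshold:
                mode = Mode.HIGH_ACCURACY  # wake the accelerator
                quiet = 0
        else:
            quiet = quiet + 1 if abs(x) < wake_threshold else 0
            if quiet >= quiet_limit:
                mode = Mode.LOW_POWER  # return to low power after sustained quiet
        history.append(mode)
    return history


samples = [0.1, 0.2, 0.8, 0.9, 0.1, 0.1, 0.1, 0.1]
for x, mode in zip(samples, run_acquisition(samples)):
    print(f"{x:.1f} -> {mode.value}")
```

Requiring several quiet samples before powering down is a simple form of hysteresis; without it, a signal hovering near the threshold would toggle the AFE between modes on every sample.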

A different approach often used in biomedical sensors is to use changes in duty cycles to reduce overall energy. In the low-power state, the AFE may operate at a relatively low data rate and be powered down during quiet intervals. The risk is that the system misses important events. An alternative is to use a separate, low-accuracy AFE circuit that runs at nanowatt levels. This circuitry may be quite different to the main AFE signal path.

In audio sensing, one possibility is to use a frequency-detection circuit coupled with a comparator to listen for specific events captured by a microphone. A basic frequency detector, consisting of a simple bandpass filter and comparator, may wake the system or move the low-power AFE into a second, higher-power state, but not the full wakefulness mode that engages the back-end digital AI model.

In this state, a circuit such as a generalized impedance converter can be manipulated to sweep the frequency range and look for further peaks to see if the incoming signal meets the wakeup criteria. That multistage approach will limit the time during which the full AI model needs to be active.

Breaking down analog front-ends for AI

Further advances in AI theory enable more sophisticated analog-domain processing before digitization. Some vendors have specialized in neural-network devices that combine on-chip memory with analog computation. Another possibility for AFE-based AI that results in a lower hardware overhead is reservoir computing. This uses concepts from the theory of recurrent neural networks. A signal fed back into a randomly connected network, known as the reservoir, can act as a discriminator used by an output layer that is trained to recognize certain output states as representing an event of interest. This provides the ability to train an AFE on trigger states that are more complex than simple threshold detectors.

Another method for trading off AFE signal quality against AI capability is compressive or compressed sensing. This uses known signal characteristics, such as sparsity, to lower the sample rate and, with it, power. Though this mainly affects the choice of sampling rate in the analog-to-digital converter, the AFE still needs to be designed to accommodate the signal’s full bandwidth. At the same time, the AFE may need to incorporate stronger front-end filtering to block interferers that may fold into the measurement frequency range.
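The folding risk is easy to quantify for the simple case of uniform sampling. The sketch below computes where an out-of-band tone lands after sampling; it assumes an ideal uniform sampler, whereas practical compressive-sensing schemes often use non-uniform or randomized sampling that this does not model. The sample rate, band edge, and interferer frequencies are invented examples.

```python
def alias_frequency(f_hz: float, fs_hz: float) -> float:
    """Frequency at which a tone at f_hz appears after uniform sampling at fs_hz."""
    f_mod = f_hz % fs_hz
    return min(f_mod, fs_hz - f_mod)


fs = 1_000.0         # reduced sample rate, Hz (illustrative)
band_limit = 200.0   # upper edge of the measurement band, Hz (illustrative)

for interferer in (50.0, 2_450.0, 9_900.0):
    folded = alias_frequency(interferer, fs)
    verdict = "lands in band" if folded <= band_limit else "stays out of band"
    print(f"{interferer:>7.0f} Hz appears at {folded:>5.0f} Hz ({verdict})")
```

In this example the 9.9 kHz interferer folds to 100 Hz, inside the measurement band, which is the kind of case the stronger front-end filtering has to block before the converter.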

Optimizing AFE/AI trade-offs through experimentation

With so many choices, experimentation will be key to determining the best tradeoffs for the target system. Operating at higher bandwidth and resolution specifications is a good start. Data can be readily filtered and converted to the digital domain at lower resolutions to see how they affect AI model performance.

The results of those experiments can be used to determine the target AFE’s specifications in terms of gain, filtering, bandwidth, and the effective number of bits (ENOB) needed. Such experiments also provide opportunities to try more novel AFE processing, such as reservoir computing and compressive sensing, to gauge how well they might enhance the final system.
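A resolution sweep of this kind can start from something as small as the sketch below, which requantizes a test sine at several bit depths and estimates ENOB from the standard ideal-converter relation SINAD = 6.02 × N + 1.76 dB. The converter model is ideal, the test tone and record length are arbitrary choices, and the function names are made up; in a real experiment the requantized data would be fed to the AI model and its accuracy recorded alongside.

```python
import math


def quantize(x: float, bits: int) -> float:
    """Round x (full scale is -1..1) to the nearest level of an ideal converter."""
    step = 2.0 / (2 ** bits)
    return round(x / step) * step


def enob_of_ideal_quantizer(bits: int, n: int = 4096, cycles: int = 127) -> float:
    """Estimate ENOB from the signal-to-quantization-noise ratio of a sine."""
    # cycles and n share no common factor, so every sample hits a different phase.
    signal = [math.sin(2 * math.pi * cycles * k / n) for k in range(n)]
    noise = [quantize(s, bits) - s for s in signal]
    p_signal = sum(s * s for s in signal) / n
    p_noise = sum(e * e for e in noise) / n
    sinad_db = 10 * math.log10(p_signal / p_noise)
    return (sinad_db - 1.76) / 6.02


for bits in (8, 10, 12):
    print(f"{bits}-bit requantization -> ENOB of about {enob_of_ideal_quantizer(bits):.2f}")
```

With an ideal quantizer the estimate tracks the nominal bit depth closely; with recorded data from a real AFE it will come out lower, and the gap indicates how much resolution the signal path actually delivers.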

The post Optimized analog front-end design for edge AI appeared first on ELE Times.