Feed aggregator

Looking for the future of autonomous driving? It’s in your car today

Courtesy: Texas Instruments

How today’s semiconductors are powering the current and future landscape of autonomous driving

Semiconductors are crucial to designing new and evolving vehicles; a vehicle today has between 1,000 and 3,500 semiconductors. As drivers continue to prioritize features such as advanced driver assistance systems (ADAS), the importance of semiconductors in the vehicles of today – and tomorrow – will only increase. Looking ahead, more than 50 percent of vehicles in the US are estimated to have a full suite of ADAS features by 2050, potentially helping prevent 37 million crashes over a 30-year period.

Given this projected growth, how are today’s semiconductors powering the current and future landscape of autonomous driving?

To show off current ADAS, highlight future capabilities of these systems and discuss the semiconductors that make them possible, Mike Pienovi, general manager for high-performance processors, and Sneha Narnakaje, business manager for automotive radar, took a car with these features out for a test drive.

Enhanced sensing capabilities

Cars with ADAS functions need to see further in front and behind in order to better perceive and respond to the world around them. The driver assistance systems in these vehicles use a combination of semiconductors for sensing, processing, in-vehicle communication and power management.

Serving as the eyes and ears of these new vehicles, sensing integrated circuits enable enhanced angular and distance resolution sensing, whether on the road or in a parking lot. These vehicles use a combination of sensor modules (radar, lidar and cameras) to actively gather data from around the vehicle, with each type of sensor and their supporting sensing semiconductors best suited for particular driving or weather conditions.

Pairing these sensors with high-speed interface solutions facilitates the transfer of the rapidly growing volume of data about the vehicle’s surroundings to advanced embedded processors.

TI has portfolios of innovative highly precise automotive radar sensors as well as embedded processors with edge artificial intelligence capabilities. For camera-based systems, we continue to invest in high-speed FPD-Link SerDes interface technology, which is capable of transferring high-resolution, uncompressed video data and control information over lightweight automotive coaxial cables.

Meeting regulations and functional safety requirements for automotive systems

Manufacturers are striving to expand the capabilities and features of their vehicles while also complying with current and future safety regulations for new vehicles.

Selecting semiconductors that are designed, qualified and certified according to automotive, functional safety and cybersecurity standards is crucial to meeting the stringent demands of these regulations. Our automotive-qualified devices are designed to not only help manufacturers create safer vehicle systems, but also to provide the advanced capabilities and scalability they need to meet current and future design requirements while differentiating from their competition in terms of features and safety.

Driving closer to the future of vehicle autonomy

With portfolios like TI’s, manufacturers can elevate the ADAS features in cars while setting expectations for the future of autonomous driving. Semiconductors are enabling this journey by making it easier for manufacturers to implement ADAS such as parking and lane assistance, collision monitoring, adaptive cruise control and automatic emergency braking across new vehicles.

These enhanced semiconductors, including sensors, processors, power components and interface devices, are the building blocks for innovation. They can help manufacturers reduce design complexity and more easily meet regulatory requirements in current automotive systems while also planning for the future.

From current features like lane and parking assist to the enhanced Level 3 environmental detection capabilities now hitting the market, each innovation, no matter how seemingly minor, moves us closer to full vehicle autonomy, one mile and one parking space at a time.

The post Looking for the future of autonomous driving? It’s in your car today appeared first on ELE Times.

Inside the AI & Sensor Technology Underpinning Level 3 Driving: Beyond ADAS

From simple driver aid technologies to extremely clever, semi-autonomous cars, the automobile industry is changing quickly. The transition from conventional ADAS to Level 2+ and Level 3 autonomous driving, made possible by developments in artificial intelligence, sensor fusion, edge computing, and functional safety, is at the core of this evolution. The fundamental engineering advancements that not only enable Level 3 autonomy but also make it feasible for mass production are examined in this article.

Level 3 systems still expect the driver to take control when the system requests it, in contrast to Level 4 systems, which may function without a steering wheel or pedals and don’t require driver participation inside their designated operational domains. This comparison highlights both the technological advance over Level 2 and the constraints that mark Level 3 as a transitional stage toward complete autonomy.

Introduction to Level 3 Autonomy

Level 3 autonomy, or “Conditional Automation” as defined by SAE J3016, allows the driver to relinquish active control under certain circumstances while the vehicle takes over the dynamic driving task. As long as the Operational Design Domain (ODD) is satisfied, Level 3 systems can control lane changes, acceleration, braking, and environmental perception without human interaction, in contrast to Level 2, which requires continuous driver supervision.

With the help of UNECE regulations such as R157, nations including China, Japan, and Germany have begun to approve Level 3 deployments. This change requires a strong technical base that combines real-time decision-making algorithms, ultra-low latency processing, and sensor redundancy.

The Level 3 Vehicle Architecture

Platforms for Centralized Computing

Centralized computation systems that can process more than 20 sensor inputs in real time are replacing legacy distributed ECUs. Level 3 vehicles are increasingly being driven by high-performance SoCs such as the Qualcomm Snapdragon Ride Flex, NVIDIA DRIVE Thor, and Mobileye EyeQ Ultra. These SoCs are built around real-time safety islands, ISPs (Image Signal Processors), and AI accelerators. Controlling power consumption and heat dissipation becomes a crucial engineering challenge as these compute platforms incorporate numerous high-throughput pipelines and AI inference engines. Designers must use advanced thermal management techniques, such as power gating, dynamic voltage/frequency scaling (DVFS), heat sinks, and active cooling, to guarantee system dependability, efficiency, and adherence to automotive-grade operating temperature ranges.

Combining LiDAR, Radar, and Camera Sensors

Level 3 cars use a redundant set of sensors:

- Cameras for semantic segmentation and object classification.

- Radar for tracking velocity and depth in all weather conditions.

- LiDAR for object contour identification and high-resolution 3D mapping.

Using deep neural networks (DNNs), Bayesian networks, and Kalman filters, sensor fusion techniques combine multi-modal data into a coherent environmental model. Obstacle avoidance and situational awareness depend on this model. Real-world deployment, however, introduces problems: cross-sensor synchronization under dynamic conditions, sensor calibration drift over time, and environmental effects that degrade each sensor type differently. For the sensor suite to operate consistently, engineers must guarantee accurate temporal alignment and strong error correction.
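As a rough illustration of the Kalman-filter part of sensor fusion, the sketch below fuses a radar range and a camera range for a single object using the standard scalar Kalman update. The names `Estimate`, `kalman_update`, and `fuse_range`, and the noise variances, are assumptions made for this example; production fusion stacks track full state vectors and handle timing and calibration as well.

```python
from dataclasses import dataclass


@dataclass
class Estimate:
    mean: float      # estimated range to the object, in meters
    variance: float  # uncertainty of that estimate, in m^2


def kalman_update(prior: Estimate, measurement: float, meas_variance: float) -> Estimate:
    """Fuse one scalar measurement into the prior (standard Kalman update)."""
    gain = prior.variance / (prior.variance + meas_variance)
    mean = prior.mean + gain * (measurement - prior.mean)
    variance = (1.0 - gain) * prior.variance
    return Estimate(mean, variance)


def fuse_range(prior: Estimate, radar_m: float, camera_m: float,
               radar_var: float = 0.25, camera_var: float = 4.0) -> Estimate:
    """Apply the radar measurement, then the camera measurement.

    The default variances are hypothetical: radar range is assumed to be
    more precise than camera-derived range.
    """
    after_radar = kalman_update(prior, radar_m, radar_var)
    return kalman_update(after_radar, camera_m, camera_var)


if __name__ == "__main__":
    prior = Estimate(mean=50.0, variance=100.0)
    fused = fuse_range(prior, radar_m=42.0, camera_m=45.0)
    print(f"fused range: {fused.mean:.2f} m, variance: {fused.variance:.3f} m^2")
```

The fused estimate lands closer to the lower-variance sensor, and its variance is smaller than either measurement's alone, which is the practical benefit of combining redundant sensors.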

Safety & Redundant Actuation

Designs that adhere to ISO 26262 guarantee fail-operational capabilities. Redundant power, steering, and braking systems are essential, particularly in situations where human override is delayed. At this level, ASIL-D certified systems and functional safety monitoring are non-negotiable.

Perception and Planning Driven by AI

The Level 3 AI stack consists of:

- Perception: DNNs trained on millions of edge cases identify lanes, people, cars, and signs.

- Prediction: Probabilistic models and recurrent neural networks (RNNs) assess paths and intent.

- Planning: To create safe, driveable routes, path planning modules employ optimization solvers, RRT (Rapidly-exploring Random Trees), and A* search.
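To make the planning step more concrete, here is a minimal sketch of A* search on a small occupancy grid. The grid encoding (`#` for an obstacle), the function name `a_star`, and the 4-connected unit-cost motion model are assumptions for illustration; real planners search over continuous, kinematically feasible trajectories.

```python
import heapq


def a_star(grid, start, goal):
    """Return a shortest 4-connected path from start to goal, or None.

    grid is a list of equal-length strings; '#' marks an obstacle and any
    other character is free space. Cells are (row, col) tuples.
    """
    rows, cols = len(grid), len(grid[0])

    def heuristic(cell):
        # Manhattan distance never overestimates 4-connected unit-cost moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(heuristic(start), 0, start)]
    came_from = {start: None}
    best_cost = {start: 0}

    while open_heap:
        _, cost, cell = heapq.heappop(open_heap)
        if cell == goal:
            # Walk the parent links back to the start.
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if cost > best_cost[cell]:
            continue  # stale heap entry; a cheaper route was already found
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if not (0 <= nr < rows and 0 <= nc < cols) or grid[nr][nc] == "#":
                continue
            new_cost = cost + 1
            if new_cost < best_cost.get(nxt, float("inf")):
                best_cost[nxt] = new_cost
                came_from[nxt] = cell
                heapq.heappush(open_heap, (new_cost + heuristic(nxt), new_cost, nxt))
    return None


if __name__ == "__main__":
    grid = ["....",
            ".##.",
            "...."]
    print(a_star(grid, (0, 0), (2, 3)))
```

The heuristic is what distinguishes A* from plain uniform-cost search: it steers expansion toward the goal while still returning a shortest path, provided the heuristic never overestimates the remaining cost.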

Real-time OS kernels and hypervisors are now a feature of compute platforms to control the separation of safety-critical and non-critical workloads.

Localization and High-Definition (HD) Maps

Level 3 systems use SLAM (Simultaneous Localization and Mapping) and GNSS corrections to integrate sensor data with HD maps that are centimeter-accurate. Real-time map streaming is available from map providers such as HERE, TomTom, and Baidu. Using fleet learning algorithms, some OEMs are experimenting with crowdsourced localization.

Real-Time Inference & Edge AI

For Level 3, inference latency is a bottleneck. On-chip AI accelerators (such as NPU and DSP cores) enable real-time neural network inference at over 30 frames per second with latency at the millisecond level. AI models can be deployed on embedded automotive platforms more efficiently thanks to widely used frameworks like ONNX Runtime and NVIDIA TensorRT. These toolkits aid in the optimization, quantization, and compression of models for effective real-time operation.
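The quantization step mentioned above can be sketched in a few lines. This is a generic symmetric per-tensor INT8 scheme written in plain Python for illustration; it is not the API of ONNX Runtime or TensorRT, and the function names and sample weights are assumptions.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization of floats to codes in [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    # Round to the nearest code and clamp to the INT8 range used here.
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale


def dequantize(codes, scale):
    """Map INT8 codes back to approximate float values."""
    return [c * scale for c in codes]


if __name__ == "__main__":
    weights = [0.42, -1.27, 0.05, 0.9]  # hypothetical layer weights
    codes, scale = quantize_int8(weights)
    restored = dequantize(codes, scale)
    worst = max(abs(w - r) for w, r in zip(weights, restored))
    print(codes, f"scale={scale:.4f}", f"max error={worst:.4f}")
```

Each weight is stored in one byte instead of four, at the cost of a rounding error bounded by half the scale factor. That trade-off is why INT8 is attractive when memory bandwidth and energy are constrained.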

Mixed precision (INT8, FP16) is supported by new SoCs to balance energy efficiency and performance. Automotive-grade Linux or QNX-based systems and zero-downtime OTA updates are essential for maintaining the system’s security, responsiveness, and compliance.

Challenges Ahead

A key concern for Level 3 deployment strategy is the absence of standardised regulations across regions. Unlike the European Union, Japan, and China, which have put in place frameworks for approving and managing Level 3 systems (e.g., UNECE R157), the United States still does not have overarching federal guidelines. Approvals are left to individual states, which creates inconsistency at the national level. These inconsistencies affect OEM planning calendars, compliance validation testing, and market-entry strategies.

- ODD Constraints: Most Level 3 systems have geo-fencing or speed limitations.

- Cost & Power: Sensor suites and compute platforms escalate the BOM, budget and thermal envelope.

- Cybersecurity: Robust security measures are needed for real-time V2X communication.

- Driver Handover: UX and regulation remain significant challenges for smooth transition from AI driver to human control.
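The ODD and handover constraints in the list above can be pictured as a simple gating rule. The sketch below is hypothetical: the `OddLimits` fields, the mode names, and the numeric limits are assumptions for illustration and do not reflect any specific OEM system or regulatory text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OddLimits:
    max_speed_kph: float  # assumed speed cap for automated operation
    min_lat: float        # geofence bounding box (assumed rectangular)
    max_lat: float
    min_lon: float
    max_lon: float


def inside_odd(speed_kph, lat, lon, limits):
    """True when the vehicle is within the geofence and the speed cap."""
    in_fence = (limits.min_lat <= lat <= limits.max_lat
                and limits.min_lon <= lon <= limits.max_lon)
    return in_fence and 0.0 <= speed_kph <= limits.max_speed_kph


def next_mode(automation_engaged, speed_kph, lat, lon, limits):
    """Return 'MANUAL', 'AUTOMATED', or 'TAKEOVER_REQUEST'."""
    if not automation_engaged:
        return "MANUAL"
    if inside_odd(speed_kph, lat, lon, limits):
        return "AUTOMATED"
    # Leaving the ODD while engaged: ask the driver to take back control.
    return "TAKEOVER_REQUEST"


if __name__ == "__main__":
    limits = OddLimits(max_speed_kph=60.0, min_lat=48.0, max_lat=48.5,
                       min_lon=11.0, max_lon=12.0)
    print(next_mode(True, 50.0, 48.2, 11.5, limits))
    print(next_mode(True, 80.0, 48.2, 11.5, limits))
```

A real system would add timing (how long the driver has to respond) and a fallback maneuver if the driver does not take over, which is where the UX and regulatory questions arise.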

Level 3 autonomy is a milestone in automotive engineering, the product of AI, mechatronics, embedded systems, and regulatory science working together. Complete Level 4/5 autonomy may still be years away, but Level 3 paves the way for a future in which cars not only provide assistance but also drive themselves under supervision and sophisticated control.

The post Inside the AI & Sensor Technology Underpinning Level 3 Driving: Beyond ADAS appeared first on ELE Times.

Breaking Through AI’s Invisible Barrier With Molybdenum

Courtesy: Lam Research

- AI’s evolution could be thwarted by electrical resistance in 3D architectures

- Molybdenum, a breakthrough material, reduces resistance, improves performance

Lam Research’s ALTUS Halo is designed to address the unique challenges of molybdenum implementation in leading-edge integrated circuit types.

The AI revolution faces an invisible barrier: electrical resistance. As artificial intelligence (AI) demands ever-increasing compute, the semiconductor industry has responded by building upward, creating dense 3D architectures that pack more computing power into each square millimeter.

But this vertical scaling creates an unprecedented engineering challenge: every electrical connection through these towering structures must be perfect at the atomic scale, or AI performance degrades catastrophically.

Advanced 3D Integration and Metallization Barriers in AI Chip Manufacturing

Traditional metallization approaches are reaching their physical limits.

In conventional chip designs, creating electrical pathways meant depositing metal into dielectric etched features. These methods relied on barrier layers (e.g., titanium nitride, TiN) to prevent unwanted interactions between metals and surrounding materials.

While necessary, these barriers add electrical resistance—acceptable in simpler chips, but a fundamental roadblock in 3D architectures where signals must (soon) travel through up to 1,000 NAND layers of vertical connections.

The narrowing of lines caused by device shrinking drives the need for new materials with shorter mean-free paths—the distance electrons can travel before colliding—that match line length to achieve lower resistance.

The surge in compute demand compounds the challenge. Every suboptimal connection, every additional barrier layer, creates performance bottlenecks and thermal management challenges that can degrade overall AI system capability.

Molybdenum Innovation Provides a Breakthrough Material for Advanced AI Chip Architecture

Lam’s leadership in metallization innovation spans decades of industry inflections. Our pioneering work with tungsten atomic layer deposition (ALD) enabled the revolutionary shift from planar to 3D NAND memory. Now, as device features continue to shrink, we’re driving another fundamental transition with molybdenum, a material uniquely suited for today’s confined spaces.

Molybdenum (Mo) stands out for advanced metallization because its shorter mean-free path limits the resistance penalty that narrow lines otherwise impose.

And unlike tungsten and other metals, Mo doesn’t need an adhesion or barrier layer (like TiN), simplifying the manufacturing process while significantly reducing overall resistance.

The transition to molybdenum echoes another historic industry inflection point: the shift from aluminum to copper interconnects in the early 2000s, which Lam led. Just as that transition fundamentally changed semiconductor manufacturing, today’s move to Mo represents a similar watershed moment.

Advanced ALD Solutions for AI-Era Chips

Material selection alone isn’t enough. Our latest innovation, ALTUS Halo, represents a convergence of atomic-scale engineering and practical manufacturing solutions. The platform brings specific innovations for each critical application:

- For 3D NAND it enables void-free lateral and barrier-less fill through advanced ALD technology and precise wafer temperature control.

- For DRAM applications it drives metallization innovation with selective and conformal fill capabilities.

- For logic it offers both thermal and plasma ALD options with an integrated interface cleaning process.

The implications extend far beyond material selection and manufacturing processes.

Lam’s advances in deposition technology and grain engineering enable optimal molybdenum integration across all leading-edge applications—from 3D NAND wordlines to advanced logic interconnects and DRAM structures.

Initial atomic layers in ALD are critical for interface engineering and subsequent film growth, serving as the template for the material’s properties. The ALTUS Halo quad station module architecture is ideal for creating the most advanced fill processes with the highest productivity due to its flexibility of running different wafer temperatures, process steps and chemistry at each station.

As the industry pushes toward increasingly complex architectures, this precision engineering at the atomic scale becomes even more critical.

A Semiconductor Industry Transformation in Memory and Logic

The semiconductor industry stands at a crucial juncture. Data-intensive AI applications demand significant advancements in both memory and logic technologies. These next-generation devices require unprecedented precision in metallization, where even small improvements in resistance and thermal performance can have outsized impacts on overall system capability.

Through innovations like ALTUS Halo, Lam is enabling a fundamental transition across NAND, DRAM, and logic. As the semiconductor industry pushes physics and chemistry to their limits, our manufacturing-ready solutions will help define the future of AI computing.

The post Breaking Through AI’s Invisible Barrier With Molybdenum appeared first on ELE Times.

Exhibition by VPI lecturer Serhii Hulievych at the art museum

It is impossible to live in Ukraine and avoid the subject of war. The graphic art exhibition “Overcoming the Darkness” (“Долаючи морок”), mounted at the Khmelnytskyi Regional Art Museum, sets out to explore the paradoxes and dizzying turns of human life in times of crisis. The works are by Serhii Hulievych, a young artist and lecturer at the Department of Graphics of the NN VPI.

Transform Your Safety Strategy: Introducing Logix SIS for Enhanced Protection and Efficiency

Courtesy: Rockwell Automation

Introducing Logix SIS, a safety instrumented system from Rockwell Automation.

“The only way of discovering the limits of the possible is to venture a little way past them into the impossible.”

This quintessential quote by Arthur C. Clarke appropriately foreshadows and encapsulates the spirit of innovation and progress that has driven humanity to push past conceivable boundaries. More than ever, people look to their resources, neighbors and leaders for intuitive and adaptable solutions to their persisting problems. Today, in the realm of safety, Rockwell Automation answers the call, standing at the precipice of a new era, where the limitations of the past are giving way to future possibilities.

Barriers of Traditional Safety Systems

Complexities abound in our dynamic world of modern industry, and safety is paramount. From robust oil refineries and chemical plants to intricate manufacturing facilities, ensuring the well-being of personnel, protecting valuable assets and safeguarding the environment are at the forefront of business priorities.

Traditional safety systems, while essential, have often struggled to keep pace with the evolving demands of industry. Their inherent complexity, inflexibility and integration challenges can create barriers, hinder productivity and even compromise safety itself. The need for a more streamlined, adaptable and cost-effective approach to safety has never been more apparent.

Enter Logix SIS: A New Era of Safety

Rockwell Automation, a pioneer in industrial automation and digital transformation, has risen to this challenge with the introduction of Logix SIS. This cutting-edge safety instrumented system redefines the landscape of industrial safety.

Logix SIS is more than merely an incremental improvement over existing solutions — it’s a paradigm shift, a bold leap into the future of safety that empowers a connected approach to how businesses achieve unprecedented levels of protection, efficiency and productivity.

Unlike traditional safety systems that operate in isolation, Logix SIS seamlessly integrates with Rockwell Automation’s Integrated Architecture®, leveraging a common platform for both safety and process control. This simplification reduces the need for separate engineering and maintenance staff, which in turn reduces complexity and can help accelerate project timelines. With familiar Logix programming tools and a streamlined configuration process, you can easily design, implement and maintain your safety system.

Key Features and Benefits of Logix SIS:

- High Availability & SIL 2/SIL 3 Certification: Logix SIS is engineered for the most demanding safety applications, achieving Safety Integrity Level (SIL) 2 and SIL 3 certifications, the gold standards in industrial safety. This means you can trust Logix SIS to provide robust protection against even the most critical hazards, helping to protect your people, assets and environment.

- Scalability and Flexibility: Logix SIS is built to adapt and grow with your business. Its modular design allows you to quickly expand or modify your system as your needs evolve, ensuring your safety infrastructure remains practical and relevant in the face of change. Whether you’re adding new processes, equipment, or safety zones, Logix SIS can scale to meet your requirements, providing the flexibility and agility you need to stay ahead of the curve.

- Cost Efficiency and ROI: Logix SIS is not just a safety solution; it’s a strategic investment in your business. By leveraging existing Rockwell Automation hardware and software, eliminating the need for additional licenses, and streamlining engineering processes, Logix SIS helps you reduce the total cost of ownership and achieve a compelling return on investment.

- Improved Productivity and Uptime: Downtime is the enemy of productivity. Logix SIS’s high availability architecture, combined with advanced diagnostics and predictive maintenance capabilities, helps minimize safety-related stoppages and unplanned downtime. This delivers continuous operation, maximizes output and helps protect your bottom line.

Tomorrow’s Safety, Today

Logix SIS represents a bold step forward in industrial safety, offering a comprehensive, integrated and future-ready solution that empowers businesses to achieve their safety and operational goals. It’s a testament to the spirit of innovation and progress that has always defined Rockwell Automation.

The post Transform Your Safety Strategy: Introducing Logix SIS for Enhanced Protection and Efficiency appeared first on ELE Times.

Designing power supplies for industrial functional safety, Part 1

A power supply unit is one of the most crucial components in an electronics system, as its operation can affect the entire system’s functionality. In the context of industrial functional safety, as in IEC 61508, power supplies are considered elements and supporting services to electrical/electronic/programmable electronic (E/E/PE) safety-related systems (SRS) as well as other subsystems. With the IEC 61508’s three key requirements for functional safety (FS) compliance alongside recommended diagnostic measures, developing power supplies for industrial FS can be tiresome. For this reason, this first part of the series discusses what the basic functional safety standard states about power supplies.

The first part of this series on functional safety in power supply design focuses on insights about the safety requirements for such elements of E/E/PE SRS. This is accomplished by showing what the basic functional safety standard requires from power supplies.

Power Supplies in E/E/PE Safety-Related Systems

The IEC 61508-4 defines E/E/PE systems as systems used for control, protection, or monitoring based on one or more E/E/PE devices. This includes all elements of the system, such as power supplies, sensors, and other input devices, data highways and other communication paths, and actuators and other output devices.

Meanwhile, an SRS is defined as a designated system that both implements the required safety functions necessary to achieve or maintain a safe state for the equipment under control (EUC) and is intended to achieve—on its own or with other E/E/PE SRS and other risk reduction measures—the necessary safety integrity for the required safety functions. This is shown in Figure 1, where power supplies also serve as an example of supporting services to an E/E/PE SRS aside from the hardware and software required to carry out the specified safety function.

Figure 1 E/E/PE system—structure and terminology showing that power supplies serve as a supporting service to an E/E/PE SRS device. Source: Analog Devices

The basic functional safety standard defines common cause failure (CCF) as a failure resulting from one or more events that cause concurrent failures of two or more separate channels in a multiple-channel system, ultimately leading to system failure. One example is a power supply failure that can result in multiple dangerous failures of the SRS. This is shown in Figure 2 where a failure in the 24-V supply, assuming the 24 V input becomes shorted to its outputs 12 VCC and 5 VCC, will result in a dangerous failure of the succeeding circuits.

Figure 2 Example of a power supply CCF scenario showing how a shorting of the 24-V supply input and the 12-V or 5-V outputs would result in a dangerous failure of the downstream systems. Source: Analog Devices

CCFs are important to consider when complying with functional safety, as they affect compliance with the IEC 61508’s three key requirements: systematic safety integrity, hardware safety integrity, and architectural constraints. These standard-cited requirements regarding CCF and power supplies in certain circumstances are shown here:

- IEC 61508-1 Section 7.6.2.7 takes the possibility of CCF into account when allocating overall safety requirements. This section also requires that the EUC control system, E/E/PE SRS, and other risk reduction measures, when treated as independent for the allocation, shall not share common power supplies whose failure could result in a dangerous mode of failure of all systems.

- Similarly, under synthesis of elements to achieve the required systematic capability (SC), IEC 61508-2 Section 7.4.3.4 Note 1 states that one possible approach to achieving sufficient independence is to ensure that no common power supply failure can cause a dangerous mode of failure of all systems.

- For integrated circuits with on-chip redundancy, IEC 61508-2 Annex E also cites several normative requirements, including the separation of input and outputs, such as power supply, among others, and the use of measures to avoid dangerous failures caused by power supply faults.

While these clauses prohibit sharing common power supplies whose failure could cause a dangerous mode of failure for all systems, implementing such a practice when designing a system will result in an increased footprint, with greater board size and cost. One way to still use common power supplies is by employing sufficient power supply monitoring. By doing this, dangerous failures brought by the power supply to an E/E/PE SRS can be reduced to a tolerable level, if not eliminated, in accordance with the safety requirements. More discussion about how effective power supply monitoring can solve common cause failures can be found in the blog post “Functional Safety for Power.”

Power supply failures and diagnostics

To detect failures in the power supply, the basic functional safety standard specifies requirements and recommendations that address both systematic and random hardware failures.

In terms of the requirements for control of systematic faults, IEC 61508-2 Section 7.4.7.1 requires the design of E/E/PE SRS to be tolerant against environmental stresses including electromagnetic disturbances. This clause is cited in IEC 61508-2 Table A.16, which lists measures against power supply defects, including voltage breakdown, voltage variations, overvoltage (OV), low voltage, and other phenomena, as mandatory regardless of safety integrity level (SIL); see Table 1.

| Technique/Measure | SIL 1 | SIL 2 | SIL 3 | SIL 4 |
| --- | --- | --- | --- | --- |
| Measures against voltage breakdowns, voltage variations, overvoltage, low voltage, and other phenomena such as AC power supply frequency variation that can lead to dangerous failure | M low | M medium | M medium | M high |

Table 1 Power Supply Monitoring Requirement from IEC 61508-2 Table A.16.

IEC 61508-2 Table A.1, under the discrete hardware component, shows the faults and failures that can be assumed for a power supply when quantifying the effect of random hardware failures; this is shown in Table 2. Meanwhile, IEC 61508-2 Table A.9 shows the diagnostic measures recommended for a power supply along with the respective maximum claimable diagnostic coverage.

| Component | Low (60%) | Medium (90%) | High (99%) |
| --- | --- | --- | --- |
| Power supply | Stuck-at | DC fault model; drift and oscillation | DC fault model; drift and oscillation |

Table 2 Power supply faults and failures to be assumed according to IEC 61508-2 Table A.1.

Table 3 shows this with more details from IEC 61508-7 Section A.8. Both Table 2 and Table 3 are useful when doing a safety analysis as failure modes per component and diagnostic coverage of diagnostic techniques employed are inputs to the calculation of lambda values, thus the SIL metric: probability of dangerous failure and safe failure fraction (SFF).

| Diagnostic Measure | Aim | Description | Max DC Considered Achievable |
| --- | --- | --- | --- |
| OV protection with safety shut-off | To protect the SRS against OV. | OV is detected early enough that all outputs can be switched to a safe condition by the power-down routine or there is a switch-over to a second power unit. | Low (60%) |
| Voltage control (secondary) | To monitor the secondary voltages and initiate a safe condition if the voltage is not in its specified range. | The secondary voltage is monitored and a power-down is initiated, or there is a switch-over to a second power unit, if it is not in its specified range. | High (99%) |
| Power-down with safety shut-off | To shut off the power, with all safety-critical information stored. | OV or undervoltage (UV) is detected early enough so that the internal state can be saved in non-volatile memory if necessary, and so that all outputs can be set to a safe condition by the power-down routine, or there is a switch-over to a second power unit. | High (99%) |

Table 3 The recommended power supply diagnostic measures in IEC 61508-7 Section A.8.
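To show how diagnostic coverage feeds the safety analysis, the sketch below computes the safe failure fraction from failure rates using the usual definition, SFF = (lambda_S + lambda_DD) / (lambda_S + lambda_DD + lambda_DU), where the detected dangerous rate is lambda_DD = DC x lambda_D. The failure rates in the example are hypothetical and not taken from any datasheet or from the standard.

```python
def safe_failure_fraction(lambda_safe, lambda_dangerous, diagnostic_coverage):
    """Compute SFF from safe and dangerous failure rates and a DC value.

    lambda_safe and lambda_dangerous must share the same unit (e.g. FIT);
    diagnostic_coverage is a fraction between 0 and 1.
    """
    if not 0.0 <= diagnostic_coverage <= 1.0:
        raise ValueError("diagnostic coverage must be between 0 and 1")
    lambda_dd = diagnostic_coverage * lambda_dangerous  # dangerous, detected
    lambda_du = lambda_dangerous - lambda_dd            # dangerous, undetected
    total = lambda_safe + lambda_dd + lambda_du
    if total <= 0:
        raise ValueError("total failure rate must be positive")
    return (lambda_safe + lambda_dd) / total


if __name__ == "__main__":
    # Hypothetical supply: 100 FIT safe failures, 100 FIT dangerous failures.
    for label, dc in (("low", 0.60), ("medium", 0.90), ("high", 0.99)):
        sff = safe_failure_fraction(100.0, 100.0, dc)
        print(f"DC {label} ({dc:.0%}): SFF = {sff:.3f}")
```

Moving from a low-coverage to a high-coverage diagnostic raises the SFF without changing the underlying component, which is why the maximum claimable coverage of each measure in Table 3 matters.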

Figure 3a shows an example of a voltage control diagnostic measure. In this example, the power supply of the logic controller subsystem, typically in the form of a post-regulator or LDO, is monitored by a voltage protection circuit, specifically the MAX16126.

Any out-of-range voltage detected by the supervisor, whether it be OV or UV, will result in the disconnection of the logic controller subsystem, composed of a microcontroller and other logic devices, from the power supply as well as assertion of the MAX16126’s FLAG pin. With this, the logic controller subsystem can be switched to a safe condition. Similarly, this circuit can also be used as an OV protection with a safety shut-off diagnostic measure if UV detection is not present.
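The decision logic of such a voltage-control measure can be sketched as a window comparison. This is a generic software model for illustration only; it does not describe the internal behavior of the MAX16126, and the thresholds (chosen around a 3.3 V rail) and function names are assumptions.

```python
from enum import Enum


class SupplyState(Enum):
    OK = "ok"
    UNDERVOLTAGE = "undervoltage"
    OVERVOLTAGE = "overvoltage"


def classify_supply(voltage, uv_threshold, ov_threshold):
    """Classify a sampled rail voltage against a UV/OV window."""
    if uv_threshold >= ov_threshold:
        raise ValueError("UV threshold must be below OV threshold")
    if voltage < uv_threshold:
        return SupplyState.UNDERVOLTAGE
    if voltage > ov_threshold:
        return SupplyState.OVERVOLTAGE
    return SupplyState.OK


def monitor_step(voltage, uv_threshold=3.0, ov_threshold=3.6):
    """Return (state, load_connected, flag_asserted) for one sample.

    On any out-of-window reading the load is disconnected and the fault
    flag is asserted so the subsystem can be driven to its safe condition.
    """
    state = classify_supply(voltage, uv_threshold, ov_threshold)
    fault = state is not SupplyState.OK
    return state, not fault, fault


if __name__ == "__main__":
    for sample in (3.3, 2.5, 4.0):
        print(sample, monitor_step(sample))
```

Dropping the undervoltage branch reduces this to OV protection with safety shut-off, mirroring the distinction drawn in the text.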

On the other hand, Figure 3b shows an example of a power-down with a safety shut-off diagnostic measure. In this example, a hot-swappable system monitor, the LTC3351, connects the power supply to the logic controller subsystem while its synchronous switching controller operates in step-down mode, charging a stack of supercapacitors. If the power supply goes outside the OV or UV threshold voltages, the LTC3351 will disconnect the logic controller subsystem from the power supply, and the synchronous controller will run in reverse as a step-up converter to deliver power from the supercapacitor stack to the logic controller subsystem. This gives the logic controller subsystem enough time to save its internal state to nonvolatile memory, so that all outputs can be set to a safe condition by the power-down routine.

Figure 3 An illustration of the recommended diagnostic measures for a power supply. Source: Analog Devices

Power supply operation

Aside from CCF, power supply failures, and recommended diagnostic measures, the IEC 61508 also expresses the importance of power supply operation in the E/E/PE SRS. This can be seen in the sixth part of the standard, Annex B.3, which discusses the use of the reliability block diagram approach to evaluate probabilities of hardware failure, assuming a constant failure rate. Aside from the scope of the sensor, logic, and final element subsystems, power supply operation is also included; this is shown in the following examples.

- When a power supply failure removes power from a de-energize-to-trip E/E/PE SRS and initiates a system trip to a safe state, the power supply does not affect the PFDavg of the

- If the system is energized-to-trip or the power supply has failure modes that can cause unsafe operation of the E/E/PE SRS, the power supply should be included in the evaluation.
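The two cases above can be illustrated with a simplified calculation. The sketch uses the common first-order approximation PFDavg ≈ λ_DU · T1 / 2 for a single channel and adds the subsystem values in series. This is a simplification of the fuller equations in IEC 61508-6, and all failure rates and the proof-test interval below are hypothetical.

```python
def pfd_avg_1oo1(lambda_du_per_hour, proof_test_interval_h):
    """First-order approximation for a single channel: lambda_DU * T1 / 2."""
    return lambda_du_per_hour * proof_test_interval_h / 2.0


def system_pfd_avg(subsystem_pfds, power_supply_pfd, energize_to_trip):
    """Series sum of subsystem PFDs; the supply counts only when energize-to-trip."""
    total = sum(subsystem_pfds)
    if energize_to_trip:
        total += power_supply_pfd
    return total


T1_HOURS = 8760  # hypothetical one-year proof-test interval
sensor = pfd_avg_1oo1(1e-7, T1_HOURS)
logic = pfd_avg_1oo1(5e-8, T1_HOURS)
final_element = pfd_avg_1oo1(2e-7, T1_HOURS)
supply = pfd_avg_1oo1(1e-7, T1_HOURS)

for mode in (False, True):
    pfd = system_pfd_avg([sensor, logic, final_element], supply, energize_to_trip=mode)
    print("energize-to-trip" if mode else "de-energize-to-trip", f"{pfd:.3e}")
```

The series sum is only a reasonable approximation while each term is small. A real assessment would follow the architecture-specific equations of the standard.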

Such assumptions make power supply operation in an E/E/PE SRS critical as it can determine whether the power supply can affect the calculation for the probability of a dangerous failure, which is one of the IEC 61508’s key requirements.

SRS’s power supply

This article provided insights regarding the basic functional safety standard’s normative and informative requirements for an E/E/PE SRS’s power supply. This was done by first tackling the role of the power supply in an E/E/PE SRS. A discussion of common cause failures, which prohibit the use of common power supplies, then demonstrated how the use of power supply monitoring eliminates CCFs. Requirements regarding systematic and random hardware failures related to power supplies were also presented, along with the recommended diagnostic measures for power supplies. Finally, depending on the power supply operation (de-energize-to-trip or energize-to-trip), the probability of a dangerous failure of the SRS can be affected by the power supply, which was also covered.

Bryan Angelo Borres is a TÜV-certified functional safety engineer who currently works on several industrial functional safety product development projects. As a senior power applications engineer, he helps system integrators design functionally safe power architectures that comply with industrial functional safety standards such as the IEC 61508. Recently, he became a member of the IEC National Committee of the Philippines to IEC TC65/SC65A and IEEE Functional Safety Standards Committee. Bryan has a postgraduate diploma in power electronics and around seven years of extensive experience in designing efficient and robust power electronics systems.

Noel Tenorio is a product applications manager under multimarket power handling high performance supervisory products at Analog Devices Philippines. He joined ADI in August 2016. Prior to ADI, he worked as a design engineer in a switch-mode power supply research and development company for six years. He holds a bachelor’s degree in electronics and communications engineering from Batangas State University, as well as a postgraduate degree in electrical engineering in power electronics and a Master of Science degree in electronics engineering from Mapua University. He also had a significant role in applications support for thermoelectric cooler controller products prior to handling supervisory products.

References

- Foord, Tony and Colin Howard. “Energise or De-Energise to Trip?” Measurement and Control, Vol. 41, No. 9, November 2008.

- IEC 61508 All Parts, Functional Safety of Electrical/Electronic/Programmable Electronic Safety-Related Systems. International Electrotechnical Commission, 2010.

- Meany, Tom. “Functional Safety for Power.” Analog Devices, Inc., March 2019.

The post Designing power supplies for industrial functional safety, Part 1 appeared first on EDN.

How Cadence Is Energizing Sustainable Semiconductor Design

Courtesy: Cadence Systems

The demand for semiconductors is surging due to AI growth, data centers, and digital transformation, but the environmental cost is significant. Energy-intensive manufacturing and waste generation pose challenges that must be addressed for long-term sustainability.

Semiconductors power modern innovation, from smartphones to AI systems, yet their production impacts the environment. By integrating sustainability into design and manufacturing, the industry can reduce energy consumption, minimize waste, and conserve resources. Introducing environmental impact (E) to the traditional power, performance, area, and cost (PPAC) model during the early stages of development is key to sustainable progress.

Efforts to lower energy use, optimize fabrication processes, and utilize recycled materials are gaining traction. Innovations like energy-efficient architectures and adaptive power management deliver high performance while reducing carbon footprints, advancing a greener future for the industry.

Imec’s SSTS Program

Imec, the world’s leading independent nanoelectronics R&D hub, has long been at the forefront of sustainability in semiconductor manufacturing. Its Sustainable Semiconductor Technologies and Systems (SSTS) program brings together a coalition of industry giants, government bodies, academia, and key associations to provide actionable data and tackle the pressing environmental challenges of advanced semiconductor manufacturing.

Cadence is proud to be the first electronic design automation (EDA) partner to join this groundbreaking program. This partnership marks a pivotal first step toward establishing a secure data-sharing platform that enables access to imec.netzero data through Cadence’s state-of-the-art design tools. With this capability, engineers and designers can make informed decisions early in the design process, integrating environmental considerations from the beginning. The mission is clear—to weave sustainability into the very foundation of semiconductor innovation.

How Cadence and imec Benefit the Industry

Through this partnership, Cadence and imec will be redefining the design mindset, enabling sustainability to be a core driver during the design phase. Currently, life cycle analysis is typically done after a product is designed, so any required changes to the bill of materials or process will set back the project timeline by months. With Cadence’s “shift left” process, designers can proactively evaluate the environmental impact of their decisions during the design phase, where it matters most.

“Welcoming Cadence as the first EDA partner in our SSTS program marks a significant milestone in valorizing our data and ensuring sustainability is embedded at the heart of semiconductor innovation,” said Lars-Åke Ragnarsson, program director of Sustainable Semiconductor Technologies and Systems (SSTS) at imec.

Cadence’s Leadership in Sustainable Innovation

This partnership underscores Cadence’s position as a global leader in sustainable innovation. We have pledged to achieve net-zero greenhouse gas emissions by 2040, and we are backing up this promise with bold actions. By developing generative AI and digital twin technologies, strategically acquiring companies that drive sustainable innovation, and offering tool solutions that address both current and future environmental challenges, Cadence is paving the way for a sustainable, efficient, and technologically advanced semiconductor ecosystem.

The post How Cadence Is Energizing Sustainable Semiconductor Design appeared first on ELE Times.

Maximize positioning accuracy and battery life with LEAP : Low Energy Accurate Positioning for wearables

Courtesy: u-blox

LEAP brings pinpoint accuracy and ultra-low power consumption to smartwatches, fitness trackers, and sports wearables.

The wearables conundrum

Smartwatches, fitness trackers, and GPS-enabled sports wearables have become essential tools for millions of users tracking their daily activities and athletic performance. But with these compact devices come big challenges – especially when it comes to delivering highly accurate GNSS positioning without draining the battery.

For device designers and users alike, the demands are increasing. Accurate tracking is expected even in dense cities, deep forests, or open water. And nobody wants to charge their wearable every day. The push to squeeze more performance into ever-smaller packages has created a tension between precision and power.

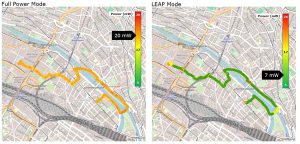

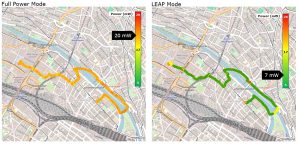

To meet this challenge head-on, u-blox has introduced LEAP (Low Energy Accurate Positioning), a powerful new GNSS technology built into the u-blox M10 platform. It enables wearables to deliver consistently accurate positioning while extending battery life, solving one of the most persistent problems in wearable design.

Why low power and accuracy usually don’t mix

Accurate GNSS positioning is hard work, especially in the kinds of environments wearables are typically used in. In open-sky conditions things aren’t so bad. But in a dense forest, an urban canyon, or on the side of a mountain, GNSS signal quality starts to drop and accuracy starts to suffer. Add in dynamic movements like arm swings or vibration, and things get even more complicated. Plus, the GNSS antennas that wearables use aren’t very big to begin with.

As a result, the device spends a lot of power trying to receive weak signals and filter out noise, which shortens battery life. This is why traditional low-power GNSS solutions tend to compromise on accuracy to conserve battery, and why high-accuracy solutions often drain the battery quickly. LEAP was designed to avoid this compromise.

The solution: How LEAP works

LEAP is a smart GNSS mode developed by u-blox to deliver optimal performance for wearables while also extending battery life.

• Smart signal selection is at the core of LEAP. Rather than using all available GNSS signals, LEAP selectively uses only those that offer the strongest signal or the most accurate data. It dynamically filters out low-elevation or noisy signals that could introduce errors, and applies multipath mitigation techniques to reduce the impact of reflected signals common in cities or wooded areas.

• External low-noise amplifier (LNA) switching also helps minimise battery usage. LEAP can automatically switch the device’s LNA on or off based on real-time signal conditions. If signal quality is already high, the LNA can be disabled to save power. When signals are weak or noisy, the LNA reactivates to maintain positioning performance.
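The two mechanisms above can be pictured with a small sketch. It is not u-blox's algorithm: the `SatSignal` type, the elevation and C/N0 thresholds, and the hysteresis band for the LNA are all hypothetical values chosen only to illustrate the idea of selecting good signals and switching the LNA on signal quality.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class SatSignal:
    sat_id: str
    elevation_deg: float  # angle above the horizon
    cn0_dbhz: float       # carrier-to-noise density, a common signal-quality metric


def select_signals(signals: List[SatSignal],
                   min_elevation_deg: float = 10.0,
                   min_cn0_dbhz: float = 30.0) -> List[SatSignal]:
    """Keep only signals that are both high enough and strong enough."""
    return [s for s in signals
            if s.elevation_deg >= min_elevation_deg and s.cn0_dbhz >= min_cn0_dbhz]


def next_lna_state(lna_on: bool, mean_cn0_dbhz: float,
                   off_above: float = 40.0, on_below: float = 34.0) -> bool:
    """Hysteresis: disable the LNA when signals are strong, re-enable when weak."""
    if lna_on and mean_cn0_dbhz > off_above:
        return False
    if not lna_on and mean_cn0_dbhz < on_below:
        return True
    return lna_on


sky = [SatSignal("G05", 62.0, 44.0), SatSignal("G12", 6.0, 41.0), SatSignal("E09", 35.0, 24.0)]
print([s.sat_id for s in select_signals(sky)])
```

The gap between `off_above` and `on_below` keeps the LNA from toggling rapidly when signal quality hovers near a single threshold.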

Together, these innovations ensure that a device using LEAP doesn’t waste power trying to receive poor quality data – and also improve accuracy, giving your device a powerful edge in the wearables space.

To further improve accuracy, LEAP includes activity-aware dynamics: tailored motion models for activities such as running, cycling, and hiking. These models account for specific movement patterns, like arm swings or stride variations, allowing the GNSS system to make smarter assumptions and corrections based on user behaviour. LEAP has even been validated for various sports like running, hiking and cycling – and further enhancements are in development.

What LEAP delivers

In side-by-side tests with standard u-blox M10 GNSS mode, LEAP reduced power consumption by up to 50% while delivering similar or better positioning accuracy. In forest environments, LEAP delivered a circular error probable at 95% (CEP95, the radius within which 95% of position fixes fall) of 8 metres, compared to 14 metres from competitor products. In open-sky tests, the improvement was from 4 metres to 2 metres. These kinds of gains matter. Whether users are running under tree cover, hiking through gorges, or biking through the city, they can trust that their wearable device is delivering accurate, energy-efficient tracking.
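A CEP95 figure of the kind quoted above can be derived from a log of horizontal position errors. The sketch below uses the nearest-rank percentile method, one of several percentile conventions. The function name and the sample error values are hypothetical, not u-blox test data.

```python
import math


def cep(errors_m, fraction=0.95):
    """Radius (nearest-rank percentile) containing `fraction` of the horizontal errors."""
    if not errors_m:
        raise ValueError("at least one error sample is required")
    if not 0 < fraction <= 1:
        raise ValueError("fraction must be in (0, 1]")
    ordered = sorted(errors_m)
    # Nearest-rank: smallest sample with at least `fraction` of all samples at or below it.
    rank = min(len(ordered), max(1, math.ceil(fraction * len(ordered))))
    return ordered[rank - 1]


# Hypothetical horizontal errors in metres for 20 fixes.
sample_errors = [float(i) for i in range(1, 21)]
print("CEP95:", cep(sample_errors), "m")
print("CEP50:", cep(sample_errors, 0.50), "m")
```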

Why it matters for designers

For wearable device designers, LEAP opens up a new range of possibilities. By delivering high-accuracy GNSS positioning at low power, it enables smaller batteries and slimmer form factors without compromising user experience. The chip package is impressively compact at just 2.39 x 2.39 mm, making it ideal for modern wearables, including those with severe space and weight constraints. Whether you’re designing a rugged GPS sports watch or a lightweight everyday fitness tracker, LEAP will fit.

It’s also a future-proof solution. Firmware upgrades, which can be delivered via external flash or a connected MCU, mean LEAP can continue to evolve after deployment. Future firmware updates could introduce new models for additional activities, further optimise power savings, or enhance positioning accuracy based on the latest innovations. This ensures that devices built with LEAP can stay competitive and adapt to emerging user needs. It supports Android systems and is fully compatible with u-blox AssistNow, enabling faster positioning and lower startup power draw. Built-in support for protocols like SUPL means LEAP can integrate seamlessly into today’s connected wearable ecosystems.

A LEAP forward for wearable GNSS

With LEAP, u-blox has redefined what’s possible for wearable GNSS. By combining low power consumption with high accuracy, it solves one of the biggest challenges in GNSS for wearables. And by making smartwatches and sports watches more capable, it gives users the freedom to explore further, train harder, and go longer between charges.

The post Maximize positioning accuracy and battery life with LEAP : Low Energy Accurate Positioning for wearables appeared first on ELE Times.

Skyworks appoints Robert Schriesheim as interim CFO

VueReal partners with distributor ACA TMetrix

A newsletter for experts: Quarterly Newsletter

Last December, the first issue of an English-language newsletter devoted to life at Igor Sikorsky Kyiv Polytechnic Institute was published. It was called the Quarterly Newsletter. But so many events take place at the university every week that even a selection limited to the most important ones would be enormous. So from the very next issue the name changed, since the newsletter began to appear more often. It is now called the Kyiv Polytechnic Newsletter and is published twice a month, or as needed. In form, it is a digest of news about the university, with short summaries of longer pieces from the university website and the websites of its departments, from the newspaper "Kyivskyi Politekhnik", and sometimes from national Ukrainian publications, translated into English, as well as information from the international university alliances of which Igor Sikorsky Kyiv Polytechnic Institute is a member. Each published item carries a web link to the original source.

At the International Conference on the History of Science, Technology and Education "Priorities of Ukrainian Science"

On 24 April, as part of the International Youth Symposium on the History of Science and Technology "Priorities of Ukrainian Science", the annual 23rd International Scientific and Practical Conference "History of the Development of Science, Technology and Education" was held online, hosted by the Faculty of Physics and Mathematics of Igor Sikorsky Kyiv Polytechnic Institute, on the theme "Fundamental Sciences as a Driver of Technological Transformations". The event was organized by the KPI Faculty of Physics and Mathematics, the G.I. Denysenko Scientific and Technical Library, the Borys Paton State Polytechnic Museum, the Council of Young Scientists of the Ministry of Education and Science of Ukraine, the G.M. Dobrov Institute for Scientific and Technological Potential and Science History Studies of the National Academy of Sciences of Ukraine, and the KPI trade union committee.

Yokogawa Test & Measurement Releases SL2000 High-Speed Data Acquisition Unit

A high-performance DAQ system that meets the latest evaluation and test needs in the automotive, mechatronics, and power electronics fields

Yokogawa Test & Measurement Corporation announces the release of the SL2000 High-Speed Data Acquisition Unit, a ScopeCorder series product with a wide range of data logging functionalities for evaluation and test applications, including high-speed sampling and analysis. The SL2000 is a modular platform that combines the functions of a mixed signal oscilloscope and a data acquisition recorder, and is designed to capture fast signal transients and long-term trends. It is suitable for applications such as R&D, validation, and troubleshooting.

The SL2000 can be used separately or in combination with the DL950 ScopeCorder, depending on the application. No other product family on the market offers this level of flexibility in handling multi-channel measurements. With the ScopeCorder product family, Yokogawa provides a multifaceted, total solution for the high-precision mechatronics and electric power markets that is contributing to the advancement and development of new technologies and applications.

Development Background

In the four years since the DL950 ScopeCorder was first brought to the market by Yokogawa Test & Measurement, there have been many technical advances in the electric vehicle (EV), renewable energy, and other industrial fields. Today, there is an ever-greater requirement for the capability to simultaneously measure multiple parameters and for the systemization of mechatronic measurements in product development. For example, in the development of motors for industrial and EV systems, one essential test for checking and improving a product is the durability test. This test takes a long time to complete and requires a highly reliable measuring instrument and high sampling rates.

Main Features

1. Enabling both high-speed sampling and multi-channel measurement

The SL2000 performs long-duration multi-channel measurements while precisely analyzing even the most detailed aspects of waveforms. With its dual capture function, the SL2000 can perform durability tests over long periods of time at speeds of up to 200 MS/s.

By using the IS8000 integrated software platform, it is easier to perform the long-term measurements required for durability testing, helping to improve the efficiency of product design and evaluation work. In addition, isolation measurement technology ensures the noise resistance required for durability testing in harsh environments.

2. Supporting simultaneous measurement of a wide variety of devices

The SL2000 has eight available slots (with up to 32 channels), for which over 20 types of input modules are available to enable measurements of electrical signals, mechanical performance parameters indicated by sensors, and decoded vehicle serial bus signals. To increase the number of measurement channels, up to five SL2000 and DL950 units can be synchronized.

Major Target Markets

• Transportation (automotive, rail, aviation, etc.)

• Power and energy (renewable energy, smart cities/homes, data centers, etc.)

• Mechatronics, including industrial robots and motors

Applications

• Durability and reliability testing of components and vehicles that requires high sampling rates and multi-channel simultaneous measurement of analog signals and in-vehicle bus signals such as CAN and CAN FD

• Simultaneous measurement and evaluation of temperature, vibration, and other mechanical signals that change relatively slowly as well as mechatronic and other such high-speed control signals

• Electrical analysis and control signal evaluation

The post Yokogawa Test & Measurement Releases SL2000 High-Speed Data Acquisition Unit appeared first on ELE Times.

STMicroelectronics combines activity tracking and high-impact sensing in miniature AI-enabled sensor for personal electronics and IoT

Industry-first inertial measurement unit (IMU) with dual MEMS accelerometer and embedded AI measures accurately up to 320g full-scale range

STMicroelectronics, a global semiconductor leader serving customers across the spectrum of electronics applications, has revealed an inertial measurement unit that combines sensors tuned for activity tracking and high-g impact measurement in a single, space-saving package. Devices equipped with this module can allow applications to fully reconstruct any event with high accuracy and so provide more features and superior user experiences. Now that it’s here, markets can expect powerful new capabilities to emerge in mobiles, wearables, and consumer medical products, as well as equipment for smart homes, smart industry, and smart driving.

The new LSM6DSV320X sensor is an industry first in a regular-sized module (3mm x 2.5mm) with embedded AI processing and continuous registration of movements and impacts. Leveraging ST’s sustained investment in micro-electromechanical systems (MEMS) design, the innovative dual-accelerometer device ensures high accuracy for activity tracking up to 16g and impact detection up to 320g.

“We continue to unleash more and more of the potential in our cutting-edge AI MEMS sensors to enhance the performance and energy efficiency of today’s leading smart applications,” said Simone Ferri, APMS Group VP, MEMS Sub-Group General Manager at STMicroelectronics. “Our new inertial module with unique dual-sensing capability enables smarter interactions and brings greater flexibility and precision to devices and applications such as smartphones, wearables, smart tags, asset monitors, event data recorders, and larger infrastructure.”

The LSM6DSV320X extends the family of sensors that contain ST’s machine-learning core (MLC), the embedded AI processor that handles inference directly in the sensor to lower system power consumption and enhance application performance. It features two accelerometers, designed for coexistence and optimal performance using advanced techniques unique to ST. One of these accelerometers is optimized for best resolution in activity tracking, with maximum range of ±16g, while the other can measure up to ±320g to quantify severe shocks such as collisions or high-impact events.
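One way an application might combine the two accelerometer channels is to trust the low-g reading until it approaches its ±16g limit and then hand over to the high-g reading. The sketch below illustrates that idea only. The function name and the 95% handover point are hypothetical and do not describe ST's firmware or driver API.

```python
LOW_G_FULL_SCALE = 16.0    # g, range of the activity-tracking accelerometer
HIGH_G_FULL_SCALE = 320.0  # g, range of the high-impact accelerometer


def fuse_axis(low_g_reading, high_g_reading, handover_fraction=0.95):
    """Prefer the finer low-g channel; switch to the high-g channel near saturation."""
    limit = handover_fraction * LOW_G_FULL_SCALE
    if abs(low_g_reading) < limit:
        return low_g_reading
    # Low-g channel is at or near its limit, so use the wide-range channel (clamped).
    return max(-HIGH_G_FULL_SCALE, min(HIGH_G_FULL_SCALE, high_g_reading))


print(fuse_axis(1.02, 1.5))    # normal activity: low-g value is used
print(fuse_axis(16.0, 87.3))   # impact: low-g channel saturated, high-g value is used
```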

By covering an extremely wide sensing range with uncompromised accuracy throughout, all in one tiny device, ST’s new AI MEMS sensor will let consumer and IoT devices provide even more features while retaining a stylish or wearable form factor. An activity tracker can provide performance monitoring within normal ranges, as well as measuring high impacts for safety in contact sports, adding value for consumers and professional/semi-pro athletes. Other consumer-market opportunities include gaming controllers, enhancing the user’s experience by detecting rapid movements and impacts, as well as smart tags for attaching to items and recording movement, vibrations, and shocks to ensure their safety, security, and integrity.

With its wide acceleration measurement range, ST’s sensor will also enable new generations of smart devices for sectors such as consumer healthcare and industrial safety. Potential applications include personal protection devices for workers in hazardous environments, assessing the severity of falls or impacts. Other uses include equipment for accurately assessing the health of structures such as buildings and bridges.

The sensor’s high integration simplifies product design and manufacture, enabling advanced monitors to enter their target markets at competitive prices. Designers can create slim, lightweight form factors that are easy to wear or attach to equipment.

The post STMicroelectronics combines activity tracking and high-impact sensing in miniature AI-enabled sensor for personal electronics and IoT appeared first on ELE Times.

5 myths about AI from a software standpoint

Courtesy: Avnet

Myth #1: Demo code is production-ready

AI demos always look impressive, but getting that demo into production is an entirely different challenge. Productionizing AI requires effort to ensure it’s secure, optimized for your hardware, and tailored to meet your specific customer needs.

The gap between a working demonstration and real-world deployment often includes considerations like performance, scalability and maintainability. One of the biggest hurdles is maintaining AI models over time, particularly if you need to retrain the application and update the inference engine across thousands of deployed devices. Ensuring long-term support, handling versioning and managing updates without disrupting service add layers of complexity that go far beyond an initial demo.

Additionally, the real-world environment for AI applications is dynamic. Data shifts, changing user behavior, and evolving business needs all require frequent updates and fine-tuning.

Organizations must implement robust pipelines for monitoring model drift, collecting new data and retraining models in a controlled and scalable way. Without these mechanisms in place, AI performance can degrade over time, leading to inaccurate or unreliable outputs.
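As a minimal illustration of drift monitoring, the sketch below compares the distribution of one input feature at training time with recent field data using the population stability index (PSI). PSI is one of several possible drift metrics and is not named in the article. The bin count and the sample data are hypothetical, and the commonly quoted rule of thumb that a PSI above roughly 0.25 signals a significant shift should be treated as a starting point only.

```python
import math


def psi(reference, live, bins=10, eps=1e-6):
    """Population stability index between a reference sample and a live sample."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # avoid zero width when all values are equal

    def histogram(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[max(0, min(bins - 1, idx))] += 1  # clamp out-of-range values
        # eps keeps empty bins from producing log(0) or division by zero
        return [max(c / len(values), eps) for c in counts]

    ref_p, live_p = histogram(reference), histogram(live)
    return sum((l - r) * math.log(l / r) for r, l in zip(ref_p, live_p))


training_feature = [i / 100 for i in range(100)]      # roughly uniform on [0, 1)
field_feature = [0.5 + i / 200 for i in range(100)]   # shifted toward the upper half
print(f"PSI vs. itself: {psi(training_feature, training_feature):.3f}")
print(f"PSI vs. field data: {psi(training_feature, field_feature):.3f}")
```

In a deployed pipeline, a check like this would run per feature on a schedule and trigger data collection or retraining when the metric crosses an agreed threshold.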

Emerging techniques like federated learning allow decentralized model updates without sending raw data back to a central server, helping improve model robustness while maintaining data privacy.
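The core aggregation step of federated learning can be sketched as a sample-weighted average of client model weights, in the spirit of the widely used federated averaging (FedAvg) scheme. The function name and the toy two-parameter "models" are hypothetical. Real systems add secure aggregation, client sampling, and many training rounds.

```python
def federated_average(client_updates):
    """Average client weights, weighting each client by its number of local samples.

    client_updates: list of (num_samples, weights) tuples with equal-length weights.
    """
    total_samples = sum(n for n, _ in client_updates)
    dim = len(client_updates[0][1])
    return [
        sum(n * weights[i] for n, weights in client_updates) / total_samples
        for i in range(dim)
    ]


# Two hypothetical devices report locally trained weights; raw data never leaves them.
updates = [(100, [1.0, 2.0]), (300, [3.0, 6.0])]
print(federated_average(updates))
```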

Myth #2: All you need is Python

Python is an excellent tool for rapid prototyping, but its limitations in embedded systems become apparent when scaling to production. In resource-constrained environments, languages like C++ or C often take the lead for their speed, memory efficiency and hardware-level control. While Python has its place in training and experimentation, it rarely powers production systems in embedded AI applications.

In addition, deploying AI software requires more than just writing Python scripts. Developers must navigate dependencies, version mismatches and performance optimizations tailored to the target hardware.

While Python libraries make development easier, achieving real-time inference or low-latency performance often necessitates re-implementing critical components in optimized languages like C++ or even assembly for certain accelerators. ONNX Runtime and TensorRT provide performance improvements for Python-based AI models, bridging some of the efficiency gaps without requiring full rewrites.

Myth #3: Any hardware can run AI

The myth that “any hardware can run AI” is far from reality. The choice of hardware is deeply intertwined with the software requirements of AI.

High-performance AI algorithms demand specific hardware accelerators, compatibility with toolchains and memory capacity. Choosing mismatched hardware can result in performance bottlenecks or even an inability to deploy your AI model.

For example, deploying deep learning models on edge devices requires selecting chipsets with AI accelerators like GPUs, TPUs or NPUs. Even with the right hardware, software compatibility issues can arise, requiring specialized drivers and optimization techniques.

Understanding the balance between processing power, energy consumption, and cost is crucial to building a sustainable AI-powered solution. While AI is now being optimized for TinyML applications that run on microcontrollers, these models are significantly scaled down, requiring frameworks like TensorFlow Lite for Microcontrollers for deployment.
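A quick back-of-the-envelope check shows why models must be scaled down for microcontrollers. The sketch below estimates the storage needed for a model's weights at different numeric precisions and compares it with a flash budget. The parameter count and the 512 KiB budget are hypothetical, and the estimate ignores activations, runtime code, and framework overhead.

```python
BYTES_PER_WEIGHT = {"float32": 4, "float16": 2, "int8": 1}


def weights_size_bytes(num_parameters, dtype):
    """Storage needed for the weights alone at the given precision."""
    return num_parameters * BYTES_PER_WEIGHT[dtype]


def fits(num_parameters, dtype, budget_bytes):
    """True if the weights alone fit within the given memory budget."""
    return weights_size_bytes(num_parameters, dtype) <= budget_bytes


FLASH_BUDGET = 512 * 1024  # hypothetical 512 KiB reserved for the model
for dtype in BYTES_PER_WEIGHT:
    size_kib = weights_size_bytes(250_000, dtype) / 1024
    print(f"{dtype}: {size_kib:.0f} KiB, fits: {fits(250_000, dtype, FLASH_BUDGET)}")
```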

Myth #4: AI is quick to implement

AI frameworks like TensorFlow or PyTorch are powerful, but they don’t eliminate the steep learning curve or the complexity of real-world applications. If it’s your first AI project, expect delays.

Beyond the framework itself, one of the biggest challenges is creating a toolchain that integrates one of these frameworks with the IDE for your chosen hardware platform. Ensuring compatibility, optimizing models for edge devices, integrating with legacy systems, and meeting market-specific requirements all add to the complexity.

For applications outside the smartphone or consumer tech domain, the lack of pre-existing solutions further increases development effort.

Myth #5: Any OS can run AI

Operating system choice matters more than you think. Certain AI platforms work best with specific distributions and can face compatibility issues with others.

The myth that “any OS will do” ignores the complexity of kernel configurations, driver support and runtime environments. To avoid costly rework or hardware underutilization, ensure your OS aligns with both your hardware and AI software stack.

Additionally, real-time AI applications, such as those in automotive or industrial automation, often require an OS with real-time capabilities. This means selecting an OS that supports deterministic execution, low-latency processing, and security hardening.

Developers must carefully evaluate the trade-offs between flexibility, support, and performance when choosing an OS for AI deployment. Some AI accelerators require specific OS support.

What’s Next for AI at the edge?

We’re already seeing large language models (LLMs) give way to small language models (SLMs) in constrained devices, putting the power of generative AI into smaller products. If this is the direction you’re going, talk to the experts at Witekio.

The post 5 myths about AI from a software standpoint appeared first on ELE Times.

Experimenting and learning LLC resonant power supplies.

Learning about LLC resonant power supplies and MicroPython for Pico W.

🎥 KPI students visited the ARDENZ Brovary Boiler Equipment Plant

As part of cooperation with the Thermal Energy Cluster of Ukraine, lecturers and students of the Department of Thermal and Alternative Energy at the Educational and Research Institute of Atomic and Thermal Energy (НН ІАТЕ) of Igor Sikorsky Kyiv Polytechnic Institute visited the ARDENZ Brovary Boiler Equipment Plant.

My first attempt at clean cable wiring for my weather station project

The ESP32 C3 is connected to a DHT11 and a 4x 8x8 MAX7219 LED matrix. The cable management wasn't remotely as relaxing as I imagined it in my fantasy.

Aeluma and Thorlabs unveil large-diameter wafer manufacturing platform for quantum computing and communication

Wireless SoCs drive IoT efficiency

Built on a 22-nm process, Silicon Labs’ SiXG301 and SiXG302 wireless SoCs deliver improved compute performance and energy efficiency. As the first members of the Series 3 portfolio, they target both line- and battery-powered IoT devices.

Designed for line-powered applications such as LED smart lighting, the SiXG301 integrates an LED pre-driver and a 32-bit Arm Cortex-M33 processor running at up to 150 MHz. It supports concurrent multiprotocol operation with Bluetooth, Zigbee, and Matter over Thread, and includes 4 MB of flash and 512 kB of RAM. Currently in production with select customers, the SiXG301 is expected to be generally available in Q3 2025.

Extending the Series 3 platform to battery-powered applications, the SiXG302 features a power-efficient architecture that consumes just 15 µA/MHz when active—up to 30% lower than comparable devices. It is well-suited for battery-powered wireless sensors and actuators using Matter or Bluetooth. Sampling is expected to begin in 2026.
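The 15 µA/MHz figure can be turned into a rough battery-life estimate. In the sketch below only the 15 µA/MHz active-current figure comes from the text. The clock frequency, duty cycle, sleep current, and battery capacity are hypothetical, and radio transmit/receive current is ignored, so real battery life would be shorter.

```python
ACTIVE_UA_PER_MHZ = 15.0  # active current per MHz, as quoted for the SiXG302


def average_current_ua(clock_mhz, duty_cycle, sleep_current_ua):
    """Time-weighted average of active and sleep current for a duty-cycled MCU."""
    active_current_ua = ACTIVE_UA_PER_MHZ * clock_mhz
    return duty_cycle * active_current_ua + (1.0 - duty_cycle) * sleep_current_ua


def battery_life_days(capacity_mah, avg_current_ua):
    """Ideal battery life, ignoring self-discharge and voltage droop."""
    return capacity_mah * 1000.0 / avg_current_ua / 24.0


# Hypothetical sensor node: 40 MHz clock, awake 1% of the time, 2 uA sleep, 220 mAh coin cell.
avg = average_current_ua(clock_mhz=40.0, duty_cycle=0.01, sleep_current_ua=2.0)
print(f"average current: {avg:.2f} uA, battery life: {battery_life_days(220.0, avg):.0f} days")
```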

The SiXG301 and SiXG302 families will initially include two types of devices: ‘M’ variants (SiMG301 and SiMG302) for multiprotocol support, and ‘B’ variants (SiBG301 and SiBG302) optimized for Bluetooth LE.

The post Wireless SoCs drive IoT efficiency appeared first on EDN.