Feed aggregator

New, Imaginative AI-enabled satellite applications through Spacechips

As demand grows for smaller satellites with sophisticated computational capabilities and reliable, robust onboard processors that can last a five-to-ten-year mission, so does the pressure on the latest ultra‑deep‑submicron FPGAs and ASICs and their power delivery networks. These high-performance processors have demanding low-voltage, high-current power requirements, and their system design is further complicated by the need to manage thermal and radiation conditions in space.

To meet these demands, Spacechips has introduced its AI1 transponder, a small on-board processor card containing an ACAP (Adaptive Compute Acceleration Platform) AI accelerator. The smart, reconfigurable receiver and transmitter delivers up to 133 tera operations per second (TOPS). This enables real-time, autonomous computing for several domains: Earth observation; in-space servicing, assembly and manufacturing (ISAM); signals intelligence (SIGINT); intelligence, surveillance and reconnaissance (ISR); and telecommunications. It also provides the reliability and longevity needed to complete longer missions.

“Many spacecraft operators simply don’t have sufficient bandwidth in the RF spectrum to download all of the data they’ve acquired for real-time processing,” said Dr. Rajan Bedi, CEO of Spacechips. “An alternative solution is accomplishing the processing in-orbit and simply downlink the intelligent insights.”

New levels of processing power spawn imaginative new applications in space and on Earth

Today’s low-Earth-orbit observation spacecraft can establish direct line of sight over a specific region only about once every ten minutes. If satellites were trained to fill those blind spots using AI algorithms, emergency management teams could make faster, better-informed decisions when direct line-of-sight communication with Earth is not possible. Spacechips is harnessing these powerful AI compute engines to apply in-orbit AI to a variety of Earth-bound and space-related problems:

- Tracking space debris to avoid costly collisions

- Monitoring mission-critical spacecraft system health

- Identifying severe weather patterns

- Reporting critical crop production rainfall data

Figure 1 On-orbit AI can detect temperature anomalies such as wildfires, volcanic activity, or industrial accidents with the Spacechips AI1 processor. This helps emergency management teams make faster, better-informed decisions about which fire-prone areas are the most vulnerable.


Vicor Factorized Power Architecture delivers high current, low voltage

Given the constrained operating environment of space, AI-enabled computing has an acute need for precision power management. That need is compounded by two trends. The expanding number, scope and variety of missions call for different kinds of spacecraft. Spacecraft also rely increasingly on some form of solar generation to supply adequate power.

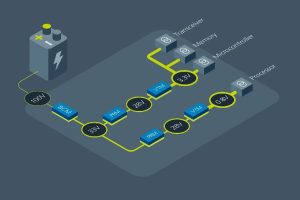

This led Spacechips to partner with Vicor to incorporate Vicor Factorized Power Architecture (FPA), built from high-density power modules, into the Spacechips AI1 Transponder Board. FPA is a power delivery architecture that separates the functions of DC-DC conversion into independent modules. Vicor’s radiation-tolerant modules divide the work as follows:
- The bus converter module (BCM) provides isolation and step-down to 28V.
- The pre-regulator module (PRM) provides regulation for the voltage transformation module (VTM).
- The VTM, or current multiplier, performs the 28V-to-0.8V DC transformation.
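The FPA split makes the power arithmetic easy to check. To a first approximation, a VTM is a fixed-ratio transformer: Vout = K·Vin, and, ignoring losses, input current is K times output current. The sketch below rests on three assumptions:
- an ideal VTM with K = 0.8/28;
- a hypothetical 100 A core load;
- a hypothetical 1 mΩ distribution path.

It shows why carrying power at 28 V and converting to 0.8 V near the load keeps resistive losses low. None of these numbers are Vicor or Spacechips specifications.

```python
from dataclasses import dataclass


@dataclass
class IdealVTM:
    """First-order model of a fixed-ratio current multiplier (VTM).

    k is the transformation ratio Vout/Vin; efficiency is an assumed
    lumped value (1.0 means lossless).
    """
    k: float
    efficiency: float = 1.0

    def output_voltage(self, v_in: float) -> float:
        return self.k * v_in

    def input_current(self, i_out: float) -> float:
        # Power balance: Vin * Iin * eff = Vout * Iout and Vout = K * Vin,
        # so Iin = K * Iout / eff.
        return self.k * i_out / self.efficiency


def conduction_loss(power_w: float, bus_voltage: float, resistance_ohm: float) -> float:
    """I^2 * R loss when power_w is carried at bus_voltage through resistance_ohm."""
    current = power_w / bus_voltage
    return current ** 2 * resistance_ohm


if __name__ == "__main__":
    vtm = IdealVTM(k=0.8 / 28.0)        # assumed ratio: 28 V in -> 0.8 V out
    load_current = 100.0                # hypothetical core current, A
    load_power = vtm.output_voltage(28.0) * load_current

    print(f"Output voltage: {vtm.output_voltage(28.0):.2f} V")
    print(f"Input current at 28 V: {vtm.input_current(load_current):.2f} A")

    r_path = 0.001                      # hypothetical 1 mOhm distribution path
    print(f"Loss carrying {load_power:.0f} W at 28 V: "
          f"{conduction_loss(load_power, 28.0, r_path) * 1000:.2f} mW")
    print(f"Loss carrying {load_power:.0f} W at 0.8 V: "
          f"{conduction_loss(load_power, 0.8, r_path):.2f} W")
```

For the same power and path resistance, the two losses differ by a factor of (28/0.8)² ≈ 1,225. That is the usual argument for distributing at a higher voltage and converting as close to the load as possible.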

The value of the Vicor solution, according to Bedi, is its small size and high power density. Reducing size and weight improves efficiency and flexibility, especially in high-performance computing applications.

By adopting Vicor’s FPA power delivery system, Bedi is helping telecommunications and SIGINT operators perform real-time, on-board processing. That processing autonomously changes RF frequency plans, channelization, modulation and communication standards based on live traffic needs. Vicor power converter modules also feature a dual powertrain, which gives space applications that cannot tolerate faults built-in redundancy: either side of the powertrain can drive the load at 100 percent.
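A dual powertrain in which either side can carry the full load can be assessed with a standard parallel-redundancy (1-out-of-2) model: the load stays powered as long as at least one side works. The sketch assumes independent failures and a constant failure rate per side. The failure rate is a placeholder, not a Vicor reliability figure.

```python
import math


def side_reliability(failure_rate_per_hour: float, hours: float) -> float:
    """Probability that one powertrain side survives, assuming a constant failure rate."""
    return math.exp(-failure_rate_per_hour * hours)


def dual_redundant_reliability(r_side: float) -> float:
    """1-out-of-2 redundancy: the system fails only if both sides fail."""
    return 1.0 - (1.0 - r_side) ** 2


if __name__ == "__main__":
    mission_hours = 10 * 365 * 24      # ten-year mission
    lam = 1e-6                         # placeholder failures per hour, per side
    r = side_reliability(lam, mission_hours)
    print(f"Single side over 10 years: {r:.4f}")
    print(f"Dual redundant over 10 years: {dual_redundant_reliability(r):.4f}")
```

Real flight analyses also account for common-mode failures, such as a shared input or a radiation event affecting both sides. The independence assumption here ignores them.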

Figure 2 Vicor Factorized Power Architecture (FPA) separates the functions of DC-DC conversion into independent modules. Using radiation-tolerant modules, the BCM bus converter provides the isolation, the PRM regulator provides the regulation and the VTM current multiplier performs the DC transformation. This allows for better efficiency, flexibility and higher power density, especially in high-performance computing applications.


“Vicor FPA delivers a much more elegant, efficient solution in a very small form factor,” Bedi said. “The benefits of Vicor FPA are simply an order of magnitude superior to everything else on the market.”

Together, Spacechips and Vicor have designed the most power-dense, reliable processor board on orbit. The AI1 board is rad-tolerant, rugged and compact. It sets a new standard for power processing, enabling the next generation of computing and application design for New Space.

The post New, Imaginative AI-enabled satellite applications through Spacechips appeared first on ELE Times.

Fighting Fire with Fire: How AI is Tackling Its Own Energy Consumption Challenge to Boost Supply Chain Resilience

AI is no longer just a futuristic idea; today it is an established technology across every industry. AI is being used to automate tasks, make quicker decisions, and build digital products that were once considered impossible to create. But as AI becomes more common, a major problem is starting to show up: it uses a lot of energy. Training large models and keeping them running day and night requires huge amounts of computing power. That in turn puts pressure on power grids, data centres, and the supply chains that support them.

This creates a clear paradox. AI demands significant energy, yet it can also help organisations manage energy more efficiently. With data centre power requirements expected to rise sharply, procurement teams, engineers, and supply chain leaders must reconsider how AI systems are designed, deployed, and supported. Encouragingly, low-power AI architectures and smarter data-centre management tools are emerging to tackle the issue from within the ecosystem.

Energy Consumption Profile of AI Technologies

AI’s energy demand has surged as newer models grow larger and more compute-intensive. Boston Consulting Group reports that U.S. data centers consumed about 2.5% of national electricity in 2022, a share projected to rise to nearly 7.5% (around 390 TWh) by 2030.
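Two figures implied by the BCG projection are worth making explicit:
- the annual growth rate of the data-centre share;
- the total U.S. consumption that the 390 TWh figure implies.

This is simple arithmetic on the numbers quoted above, not additional data from the report.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate that takes start_value to end_value."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


if __name__ == "__main__":
    share_2022, share_2030 = 0.025, 0.075   # shares quoted in the text
    dc_twh_2030 = 390.0                     # quoted 2030 data-centre consumption

    growth = implied_cagr(share_2022, share_2030, 2030 - 2022)
    print(f"Share grows about {growth:.1%} per year")
    print(f"Implied total U.S. consumption in 2030: {dc_twh_2030 / share_2030:,.0f} TWh")
```

With the quoted figures, the share grows by roughly 15% per year, and the implied national total is about 5,200 TWh in 2030.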

The biggest contributors include:

- Model Training

Training today’s large models isn’t a simple overnight job. It involves running thousands of GPUs or specialised AI chips in parallel for weeks at a stretch. The compute load is enormous, and the cooling systems have to run constantly just to stop the hardware from overheating. Together, they draw a surprising amount of power.

- Data Center Operations

People often assume most of the power goes into the servers, but the cooling and air-handling equipment consume almost as much. As AI traffic grows, data centers are forced to maintain tight temperature and latency requirements, which makes the power bill climb even faster.

- Inference at Scale

Running models in real time, or inference, now accounts for most of AI’s total energy consumption. With generative AI being used in search engines, content creation tools, manufacturing systems, and supply chain operations, continuous inference tasks place constant pressure on electricity infrastructure.
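A back-of-the-envelope estimate ties the training and cooling points together. Facility energy is roughly: accelerator count × average power per accelerator × run time × PUE. PUE (power usage effectiveness) folds in cooling and other overhead. Every input below is a hypothetical placeholder chosen for illustration, not a measurement of any real model.

```python
def training_energy_mwh(num_accelerators: int, avg_power_w: float,
                        hours: float, pue: float) -> float:
    """Facility-level energy for a training run, in MWh.

    avg_power_w is the average draw per accelerator (including its share of
    host/server power); pue scales IT energy up to facility energy.
    """
    it_energy_wh = num_accelerators * avg_power_w * hours
    return it_energy_wh * pue / 1e6


if __name__ == "__main__":
    # Hypothetical run: 4,096 accelerators at 700 W for three weeks, PUE 1.4.
    energy = training_energy_mwh(4096, 700.0, 21 * 24, 1.4)
    print(f"Estimated facility energy: {energy:,.0f} MWh")
```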

AI-Driven Strategies for Energy Efficiency

To mitigate AI’s energy consumption, several AI-driven strategies have been developed:

- Energy-Efficient Model Architectures

Modern AI research increasingly focuses on architectures that deliver higher performance with lower computational load. Techniques gaining wide adoption include:

- Pruning: Removing redundant neurons and parameters to shrink model size.

- Quantization: Reducing precision (e.g., FP32 → INT8) with minimal accuracy loss.

- Knowledge Distillation: Compressing large teacher models into compact student models.

These approaches can cut training and inference energy by 30–60%, making them critical for enterprise-scale deployments.
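Two of these techniques are easy to show in miniature, using plain Python lists in place of tensors:
- Symmetric per-tensor INT8 quantization: one scale factor maps the largest absolute weight to 127.
- Unstructured magnitude pruning: the smallest-magnitude fraction of weights is set to zero.

Production frameworks add calibration, per-channel scales and fine-tuning after pruning; none of that is shown in this sketch.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor INT8 quantization: w ~= q * scale, q in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    return [v * scale for v in q]


def magnitude_prune(weights: List[float], sparsity: float) -> List[float]:
    """Zero out the fraction `sparsity` of weights with the smallest magnitude."""
    k = int(len(weights) * sparsity)
    smallest = sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:k]
    drop = set(smallest)
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


if __name__ == "__main__":
    w = [0.42, -1.30, 0.07, 0.91, -0.03, 0.55]
    q, s = quantize_int8(w)
    print("int8:", q, "scale:", round(s, 5))
    print("max abs error:", max(abs(a - b) for a, b in zip(w, dequantize(q, s))))
    print("pruned (50%):", magnitude_prune(w, 0.5))
```

Each INT8 value needs a quarter of the storage of an FP32 value. The rounding error per weight is at most half a quantization step.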

- Adaptive Training Methods

Adaptive training methods dynamically adjust GPU allocation, batch size, and learning rate based on convergence behavior. Instead of running training jobs at maximum power regardless of need, compute intensity scales intelligently. This prevents over-provisioning, lowers operational costs, and reduces carbon footprint, particularly in cloud-based AI development workflows.
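As a hypothetical illustration of the idea, the rule below halves a job's GPU allocation once the training loss has stopped improving meaningfully over a recent window. The window length, improvement threshold and halving policy are all assumptions made for this example. Real schedulers use richer signals such as throughput, gradient noise and deadlines.

```python
from typing import Sequence


def next_gpu_allocation(loss_history: Sequence[float], current_gpus: int,
                        window: int = 3, min_rel_improvement: float = 0.01,
                        min_gpus: int = 1) -> int:
    """Hypothetical plateau rule: halve the GPU count when the relative loss
    improvement over the last `window` steps falls below a threshold."""
    if len(loss_history) <= window:
        return current_gpus                      # not enough history yet
    earlier, latest = loss_history[-window - 1], loss_history[-1]
    if earlier <= 0:
        return current_gpus
    rel_improvement = (earlier - latest) / earlier
    if rel_improvement < min_rel_improvement:
        return max(min_gpus, current_gpus // 2)  # plateau: release capacity
    return current_gpus


if __name__ == "__main__":
    print(next_gpu_allocation([1.0, 0.8, 0.6, 0.5, 0.45], current_gpus=8))
    print(next_gpu_allocation([1.0, 0.5, 0.5, 0.499, 0.499], current_gpus=8))
```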

- AI-Powered Data Center Energy Management

AI is increasingly being integrated into hyperscale data-centre control systems because it can monitor operations faster than humans can. It tracks power usage, detects irregularities, and predicts demand spikes so workloads and cooling can be adjusted before issues arise.

Google applied this approach in its facilities and found that machine-learning–based cooling adjustments reduced energy use by about 15–20%. According to Urs Hölzle, this improvement came from predicting load changes and tuning cooling in advance.

- Cooling System Optimization

Cooling is one of the largest energy loads in data centres. AI-driven cooling systems, especially those using offline reinforcement learning, have achieved 14–21% energy savings while maintaining thermal stability.

Techniques include:

- Predicting thermal hotspots

- Dynamic airflow and coolant modulation

- Intelligent chiller sequencing

- Liquid-cooled rack optimization

As AI model density increases, these innovations are essential for maintaining operational uptime.
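A common way to express the effect of cooling savings is PUE (power usage effectiveness): total facility power divided by IT equipment power. The sketch uses hypothetical loads for a 1 MW IT hall to show how a cut in cooling power moves PUE. It is arithmetic only and does not model any specific facility.

```python
def pue(it_kw: float, cooling_kw: float, other_kw: float) -> float:
    """Power usage effectiveness = total facility power / IT power."""
    if it_kw <= 0:
        raise ValueError("IT load must be positive")
    return (it_kw + cooling_kw + other_kw) / it_kw


if __name__ == "__main__":
    it, cooling, other = 1000.0, 400.0, 100.0      # hypothetical kW
    before = pue(it, cooling, other)
    after = pue(it, cooling * (1 - 0.20), other)   # assume a 20% cooling cut
    print(f"PUE before: {before:.2f}, after: {after:.2f}")
    print(f"Facility power saved: {(before - after) * it:.0f} kW")
```

In this example, a 20% cut in cooling power trims total facility power by only about 5% (80 kW of 1,500 kW). It therefore matters whether a quoted saving refers to cooling energy or to the whole facility.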

- Predictive Analytics for Lead Time Optimization

AI forecasting tools optimize procurement, lead time, and logistics by predicting:

- Seasonal energy price fluctuations

- Grid availability

- Renewable energy generation patterns

- Peak demand windows

These insights allow organizations to schedule compute-heavy workloads, such as model training, during low-cost or low-emission energy periods, directly improving supply chain resilience.
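Scheduling a compute-heavy job into a low-cost period reduces to a small search problem. Given an hourly price (or carbon-intensity) forecast, find the contiguous block of hours with the lowest total. The sliding-window sketch below assumes the job is uninterruptible and draws constant power. The forecast values are made up.

```python
from typing import Sequence, Tuple


def cheapest_window(forecast: Sequence[float], duration_h: int) -> Tuple[int, float]:
    """Return (start_hour, total) of the contiguous window with the lowest sum."""
    if not 0 < duration_h <= len(forecast):
        raise ValueError("duration must be between 1 and the forecast length")
    window = sum(forecast[:duration_h])
    best_start, best_total = 0, window
    for start in range(1, len(forecast) - duration_h + 1):
        # Slide the window one hour: add the newest hour, drop the oldest.
        window += forecast[start + duration_h - 1] - forecast[start - 1]
        if window < best_total:
            best_start, best_total = start, window
    return best_start, best_total


if __name__ == "__main__":
    price_per_mwh = [62, 58, 41, 35, 33, 38, 55, 71, 80, 76, 60, 48]  # hypothetical
    start, total = cheapest_window(price_per_mwh, 4)
    print(f"Start at hour {start}; summed price over the window: {total}")
```

The sliding update keeps the search linear in the forecast length. The same function works unchanged on a carbon-intensity forecast.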

Strategic Implications for Procurement and Supply Chain Management

Energy-efficient AI is not merely an IT requirement; it is a supply chain strategy. Organizations are rethinking how they source hardware, design workflows, and plan operations.

1. Procurement of Energy-Efficient Semiconductors

The demand for low-power AI accelerators and CPUs, such as Arm Neoverse platforms, is rising sharply. Procurement leaders must prioritize vendors who offer:

- High performance-per-watt

- Advanced power management features

- Hardware-level AI acceleration

Selecting the right semiconductor partners reduces long-term operational costs and aligns with sustainability commitments.
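Performance-per-watt comparisons are easiest to act on when converted into energy cost for a fixed workload. In the sketch below, everything is hypothetical: the accelerator names, throughput and power figures, workload size and electricity price. Substitute measured values from your own benchmarks.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Accelerator:
    name: str                # hypothetical part name
    throughput_tops: float   # sustained throughput on the target workload
    power_w: float           # average board power at that throughput

    @property
    def tops_per_watt(self) -> float:
        return self.throughput_tops / self.power_w


def annual_energy_cost(acc: Accelerator, workload_tera_ops_per_year: float,
                       price_per_kwh: float) -> float:
    """Energy cost to run a fixed yearly workload on one accelerator type."""
    seconds = workload_tera_ops_per_year / acc.throughput_tops
    kwh = acc.power_w * seconds / 3.6e6      # W*s -> kWh
    return kwh * price_per_kwh


def rank_by_efficiency(parts: List[Accelerator]) -> List[Accelerator]:
    return sorted(parts, key=lambda a: a.tops_per_watt, reverse=True)


if __name__ == "__main__":
    candidates = [
        Accelerator("vendor-A", 400.0, 350.0),
        Accelerator("vendor-B", 250.0, 150.0),
        Accelerator("vendor-C", 600.0, 700.0),
    ]
    workload = 1e10                          # hypothetical tera-ops per year
    for acc in rank_by_efficiency(candidates):
        cost = annual_energy_cost(acc, workload, 0.12)
        print(f"{acc.name}: {acc.tops_per_watt:.2f} TOPS/W, ${cost:,.0f}/yr")
```

For a fixed workload, energy is workload ÷ (TOPS/W). The fastest part is therefore not necessarily the cheapest to run.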

2. Enhancing Supply Chain Resilience

Energy availability and cost volatility can trigger delays, downtime, and disruptions. AI-based energy optimization enhances resilience by:

- Predicting shortages

- Reducing load on critical systems

- Identifying alternative low-power workflows

- Optimizing backup generation or renewable energy use

This is particularly vital for semiconductor fabs, logistics hubs, and manufacturing plants that rely on uninterrupted power.

3. Wafer Fab Scheduling Analogies

The semiconductor industry offers a useful analogy: wafer fabrication requires meticulous scheduling to optimize throughput while managing energy-intensive processes. AI-driven energy management requires:

- Workload balancing

- Thermal and power constraint management

- Predictive scheduling

- Minimization of idle compute cycles
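The workload-balancing item has a classic, simple heuristic: longest-processing-time-first (LPT) list scheduling. It assigns each job, largest first, to the currently least-loaded machine. The sketch below applies it to spreading jobs' power draw across racks. Job names and kW figures are hypothetical, and real schedulers also weigh thermal limits, deadlines and data locality.

```python
import heapq
from typing import Dict, List, Tuple


def balance_jobs(jobs: Dict[str, float], num_racks: int) -> List[Tuple[float, List[str]]]:
    """LPT heuristic: place each job (largest first) on the least-loaded rack.

    Returns a list of (total_load, job_names), one entry per rack.
    """
    heap = [(0.0, r) for r in range(num_racks)]   # (current load, rack index)
    heapq.heapify(heap)
    assignment: List[List[str]] = [[] for _ in range(num_racks)]
    loads = [0.0] * num_racks
    for name, load in sorted(jobs.items(), key=lambda kv: kv[1], reverse=True):
        current, rack = heapq.heappop(heap)
        assignment[rack].append(name)
        loads[rack] = current + load
        heapq.heappush(heap, (loads[rack], rack))
    return [(loads[r], assignment[r]) for r in range(num_racks)]


if __name__ == "__main__":
    jobs_kw = {"train-a": 42.0, "train-b": 35.0, "etl": 18.0,
               "infer-1": 12.0, "infer-2": 11.0, "batch": 9.0}
    for load, names in balance_jobs(jobs_kw, 3):
        print(f"{load:5.1f} kW  {names}")
```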

Several trends are shaping the next wave of AI energy innovation:

1. Specialized Low-Power AI Chips

Arm, NVIDIA, AMD, and start-ups are designing AI chips focused on maximum efficiency per watt, which is critical for both data centers and edge AI systems.

2. Green Data Centers

Operators are investing in:

- Renewable power

- Liquid immersion cooling

- Waste heat reuse

- Modular micro-data centers

These reduce operational emissions and increase grid independence.

3. Regulatory Pressures

Governments are introducing stricter carbon reporting, energy consumption caps, and sustainability requirements—pushing organizations toward greener AI adoption.

4. Market Volatility

Energy price fluctuations directly impact the cost of training and deploying AI. Organizations must adopt agile, energy-aware planning to maintain competitiveness.

Conclusion

AI is in a strange position right now. On one hand, it consumes a huge amount of energy; on the other, it’s one of the best tools we have for cutting energy waste. When companies use more efficient model designs, smarter data-center management systems, and predictive tools that anticipate resource needs, they can bring down operating costs and make their supply chains more stable.

Using sustainable AI isn’t just a “good to have” anymore; it’s becoming a key factor in staying competitive. As businesses push deeper into digital operations, the combination of AI innovation and energy-conscious thinking will play a major role in determining which organisations stay resilient and which ones fall behind.

The post Fighting Fire with Fire: How AI is Tackling Its Own Energy Consumption Challenge to Boost Supply Chain Resilience appeared first on ELE Times.

Beyond the Bill: How AI-Enabled Smart Meters Are Driving Lead Time Optimization and Supply Chain Resilience in the Energy Grid

Introduction

Smart meters have significantly evolved since their initial implementation for consumer billing. In the contemporary networked industrial landscape, where semiconductor fabrication facilities, data centers, and manufacturing plants rely on a consistent, high-quality electrical supply, AI-enabled smart meters have become essential instruments. These meters, integrated with edge analytics, IoT infrastructures, and cloud-based machine learning engines, produce high-resolution data that informs procurement, operational planning, and supply chain resilience.

For the semiconductor industry, where a single hour of downtime in a wafer fab can cost between $1 million and $5 million, energy reliability is not merely operational; it is existential. Predictive analytics from AI-enabled smart meters give both utilities and semiconductor fabs visibility into three kinds of warning signs: consumption anomalies, voltage instability, and equipment stress patterns. These have traditionally led to delays, yield losses, and unplanned shutdowns.

As Dr. Aaron Shields, Director of Grid Strategy at VoltEdge, remarks: “For semiconductor fabs, energy intelligence is no different from process intelligence. AI-enabled metering is now a supply chain stabilizer, not just a measurement tool.”

Smart Meters as Intelligent, High-Resolution Energy Nodes


Contemporary AI-driven smart meters possess integrated processors, edge AI chips, and secure communication protocols. These qualities convert them into “micro-decision engines” capable of executing:

- Local anomaly detection

- High-frequency load forecasting

- Voltage quality assessment

- Distributed energy resource (DER) coordination

- Event-driven grid signalling

This is especially important for semiconductor ecosystems, which need very careful monitoring because they are very sensitive to voltage drops, harmonics, and micro-interruptions.
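A sketch of the kind of local anomaly detection a meter can run: scan a stream of RMS voltage readings in per-unit (1.0 = nominal) and report contiguous runs below a threshold. The 0.9 pu default follows the common power-quality definition of a voltage sag, as used for example in IEEE Std 1159. The sampling interval, minimum duration and sample data are assumptions. A real meter would also classify each event by duration and depth.

```python
from typing import List, NamedTuple, Optional, Sequence


class SagEvent(NamedTuple):
    start: int      # index of the first sample below the threshold
    end: int        # index of the last sample below the threshold
    min_pu: float   # deepest RMS value reached during the event


def detect_sags(rms_pu: Sequence[float], threshold: float = 0.9,
                min_samples: int = 1) -> List[SagEvent]:
    """Report contiguous runs of RMS samples (per-unit) below `threshold`."""
    samples = list(rms_pu)
    events: List[SagEvent] = []
    start: Optional[int] = None
    # A trailing sentinel at the threshold closes a sag that runs to the end.
    for i, v in enumerate(samples + [threshold]):
        if v < threshold and start is None:
            start = i
        elif v >= threshold and start is not None:
            if i - start >= min_samples:
                events.append(SagEvent(start, i - 1, min(samples[start:i])))
            start = None
    return events


if __name__ == "__main__":
    readings = [1.00, 0.99, 0.86, 0.72, 0.81, 0.97, 1.01, 0.89, 1.00]  # hypothetical
    for e in detect_sags(readings):
        print(f"Sag from sample {e.start} to {e.end}, minimum {e.min_pu:.2f} pu")
```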

Semiconductor fabs typically run:

- 5,000–50,000 process tools,

- under strict schedule windows,

- where wafer fab scheduling depends on consistent energy flow to keep lithography, etching, CMP, and deposition tools stable.

AI-enabled smart meters supply real-time, tool-level and grid-level data that feeds these scheduling algorithms, reducing cycle time disruptions.

AI Applications for Grid Optimization and Semiconductor Supply Chain Stability

Through a number of methods, AI-enabled smart meters improve supply chain resilience in the utility and semiconductor manufacturing industries.

Predictive Maintenance & Equipment Lead Time Planning

AI detects early signatures of:

- transformer fatigue,

- feeder overloads,

- harmonic distortions,

- and breaker stress.

Utilities can then predict how many spare parts they will need and speed up the delivery of important parts. Semiconductor fabs likewise gain advance warning for facility equipment—HVAC loads, chillers, pumps, and vacuum systems.
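Turning an early failure warning into a purchasing decision usually comes down to a reorder-point calculation. The textbook form is ROP = d·L + z·σ·√L, where:
- d is the average daily demand for a part;
- L is the supplier lead time in days;
- σ is the standard deviation of daily demand;
- z is the service-level factor (about 1.65 for roughly 95%).

The sketch also shows one hypothetical way to fold in an AI-raised risk flag: treat flagged units as extra expected demand. That adjustment and all numbers are assumptions for illustration, not a method described in the article.

```python
import math


def reorder_point(daily_demand: float, lead_time_days: float,
                  demand_std: float, z: float = 1.65) -> float:
    """Classic reorder point: expected lead-time demand plus safety stock.

    Assumes independent, normally distributed daily demand.
    """
    safety_stock = z * demand_std * math.sqrt(lead_time_days)
    return daily_demand * lead_time_days + safety_stock


if __name__ == "__main__":
    # Hypothetical breaker modules: 0.4/day average, 21-day lead time.
    base = reorder_point(0.4, 21, 0.3)
    # Hypothetical adjustment: analytics flag two units at elevated risk,
    # so treat them as additional expected demand within the lead time.
    adjusted = base + 2
    print(f"Reorder when stock falls to {math.ceil(base)} (baseline)")
    print(f"Reorder when stock falls to {math.ceil(adjusted)} (with risk flags)")
```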

Demand Forecasting with Industry-Specific Models

AI models like LSTM, transformer networks, and hybrid ARIMA-ML pipelines look at things like:

- Patterns in the production cycle

- Peak fab energy windows

- Changes in seasonal demand

- Large tool start-up currents

- Grid-level changes

Better energy forecasting helps fab procurement leaders get power contracts, make better energy-based costing models, and cut down on delays caused by volatility.
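LSTM and transformer forecasters are normally benchmarked against a simple statistical baseline. Holt's linear exponential smoothing, sketched below, tracks a level and a trend and extrapolates them forward. It is shown here as that kind of baseline, not as an implementation of the models named above. The smoothing constants and the hourly load series are hypothetical.

```python
from typing import List, Sequence


def holt_forecast(series: Sequence[float], alpha: float, beta: float,
                  horizon: int) -> List[float]:
    """Holt's linear (double) exponential smoothing.

    level_t = alpha * y_t + (1 - alpha) * (level_{t-1} + trend_{t-1})
    trend_t = beta * (level_t - level_{t-1}) + (1 - beta) * trend_{t-1}
    forecast_{t+h} = level_t + h * trend_t
    """
    if len(series) < 2:
        raise ValueError("need at least two observations")
    level, trend = series[0], series[1] - series[0]   # simple initialisation
    for y in series[1:]:
        prev_level = level
        level = alpha * y + (1 - alpha) * (level + trend)
        trend = beta * (level - prev_level) + (1 - beta) * trend
    return [level + h * trend for h in range(1, horizon + 1)]


if __name__ == "__main__":
    fab_load_mw = [41.2, 41.9, 42.3, 43.0, 43.8, 44.1, 44.9]  # hypothetical hourly MW
    print(holt_forecast(fab_load_mw, alpha=0.5, beta=0.3, horizon=3))
```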

Risk Mitigation During Market Volatility

Changes in energy prices have a direct effect on the costs of making chips. AI-driven intelligent metering offers:

- Early warnings of grid instability

- Risk maps highlighting feeders that could trigger fab downtime

- Real-time dashboards for emergency preparedness

This improves the stability of the semiconductor supply chain amid energy price volatility or grid congestion events.

Case Study 1: European Utility + Semiconductor Fab Partnership Reduces Lead Times by 28%

A prominent European utility implemented AI-integrated smart meters throughout the industrial area containing a semiconductor fabrication facility with a capacity of 300,000 wafers per month. Historically, unpredictable transformer failures forced the fab to activate emergency procurement workflows.

AI-driven meter analytics identified transformer strain 18 days earlier than conventional SCADA systems did.

This gave the utility’s purchasing team the ability to:

- Reorder transformer modules ahead of time

- Reduce urgent shipment costs

- Avoid fab shutdowns

Result:

- 28% reduction in transformer component lead times

- Zero unplanned fab downtime in eight months

- 12% improvement in wafer fab scheduling adherence

Case Study 2: Indian Fab Achieves 22% Faster Spare-Part Fulfilment Using Smart Meter Predictive Analytics

AI-enabled smart meters were installed from substation to tool-level feeders at a semiconductor fab park in India. Unusual starting-current spikes in the CMP and deposition sections were detected by predictive analytics, suggesting impending breaker degradation.

The fab’s supply chain leaders integrated this data into their ERP procurement engine.

Impact:

- Spare-part availability increased by 24%

- Maintenance response times improved by 22%

- Downtime during voltage sags reduced by 17%

The park’s engineering head noted: “Intelligence from smart meters now directs our procurement schedule. We strategize weeks in advance, rather than hours.”

Strategic Insights for Procurement Leaders Across Energy & Semiconductor Sectors

- Granular Consumption Data for Precise Procurement

Meter data helps forecast:

- Spare-transformer needs

- HVAC load cycles

- Cleanroom energy peaks

- Fuel windows for backup generators

This facilitates long-term vendor agreements and minimizes unanticipated orders.

- Smarter Vendor Evaluation

Tool uptime and voltage stability data allow semiconductor fabs to evaluate how supplier components behave under real load conditions.

- Lead Time Optimization Through Predictive Insights

Early detection of energy-side failures prevents:

- Late wafer batches,

- Extended cycle times, and

- Tool requalification delays.

Utility supply chains also reduce buffer stocks while improving availability.

- Operational Resilience and Risk Mitigation

AI-enabled data supports:

- Contingency planning

- Load re-routing

- Rapid DER activation

- Process tool safeguarding

This is crucial in a sector where milliseconds of voltage fluctuation can scrap millions in wafers.

Future Trends: Where Energy Intelligence Meets Semiconductor Precision

- AI-Orchestrated Load Scheduling for Fabs

Predictive models will align fab tool scheduling with energy stability windows.

- Digital Twins Using Smart Meter Data

Utilities and fabs will run simulations to test equipment stress scenarios before making procurement decisions.

- Edge AI Advancements

Next-generation meters will host larger models that independently diagnose harmonic distortions critical to lithography and etching tools.

- Real-Time ROI Dashboards

CFOs in the semiconductor sector will be able to track energy risk reduction as a measurable return on investment.

Conclusion

Artificial intelligence-enabled smart meters are essential for the modernization of the electricity grid and the stabilization of the semiconductor supply chain. Procurement directors, supply chain strategists, and fabrication engineers can make informed, proactive decisions with access to real-time analytics, predictive maintenance metrics, and load forecasting information. Smart meters are increasingly essential for maintaining production schedules, reducing lead times, and remaining competitive globally, as wafer manufacture requires consistent, high-quality power.

The post Beyond the Bill: How AI-Enabled Smart Meters Are Driving Lead Time Optimization and Supply Chain Resilience in the Energy Grid appeared first on ELE Times.

Inside the Digital Twin: How AI is Building Virtual Fabs to Prevent Trillion-Dollar Mistakes

Introduction

Semiconductor manufacturing often feels like modern alchemy: billions of tiny transistors squeezed onto a chip smaller than a fingernail, stitched through thousands of precise steps. Shifting a line by nanometres can ruin the batch.

The stakes are enormous. One day of unplanned downtime in a top fab can wipe out over $20 million. Problems aren’t always dramatic shutdowns; sometimes process drift quietly eats into yields, totalling billions in lost revenue each year. Factor in fragile supply chains, and you see the industry’s looming “trillion-dollar risk.”

AI-powered digital twins are living mirrors of the factory. Continuously updated with real data, they run endless “what-if” scenarios, catching errors before they become costly. It’s like rehearsing production virtually, avoiding real-world mistakes.

- What is a Digital Twin in Semiconductor Manufacturing?

Now, a digital twin in a fab isn’t just a fancy simulation; it’s a virtual replica of the whole facility, kept in lockstep with the real thing. Traditional simulations? They’re kind of frozen in time. Digital twins, on the other hand, are always moving and always learning. They pull in data from thousands of sensors, tool logs, and manufacturing systems to reflect what’s actually happening on the floor.

Their scope is huge. For instance:

- Wafer Fab Scheduling: Figuring out the best sequence and queue times across hundreds of tools, shaving days off wafer cycles that can otherwise drag past 90 days.

- Tool Behavior Simulation: Watching how lithography, etching, and deposition tools drift or wear out, and guessing when they’ll need attention.

- Predictive Maintenance: Catching potential failures before they hit, avoiding downtime that could grind production to a halt.

The cool part? They keep getting smarter. Every wafer that runs through the fab teaches the twin a little more, helping it predict better and suggest fixes before things go sideways. Over time, fabs move from constantly reacting to actually staying ahead of the game.
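The "what-if" value of a twin shows up even in a toy model. The sketch below replaces a real fab twin with a single M/M/1 queue: random arrivals, one tool and exponential service times. For this model, mean time in system is 1/(mu - lambda), where mu is the service rate and lambda the arrival rate. It is a deliberately crude stand-in, but it reproduces the behaviour planners worry about: as tool utilization approaches 100%, queue time climbs steeply. The tool rates are hypothetical.

```python
def mm1_time_in_system(arrival_rate: float, service_rate: float) -> float:
    """Mean time a lot spends at an M/M/1 station (waiting + processing)."""
    if arrival_rate >= service_rate:
        raise ValueError("utilization >= 100%: queue grows without bound")
    return 1.0 / (service_rate - arrival_rate)


if __name__ == "__main__":
    service_rate = 10.0                       # hypothetical lots/hour for one tool
    for arrival_rate in (5.0, 8.0, 9.0, 9.5, 9.9):
        utilization = arrival_rate / service_rate
        hours = mm1_time_in_system(arrival_rate, service_rate)
        print(f"utilization {utilization:.0%}: {hours * 60:.0f} min per lot")
```

In this model, moving from 90% to 95% utilization doubles the time a lot spends at the tool. A twin lets planners explore this kind of trade-off before committing capacity.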

- How AI Makes Digital Twins Smarter

AI is what really changes things: it turns digital twins from mere reflections into autonomous control systems capable of independent decision-making.

For instance:

- Defect Prediction: Machine learning spots tiny defect patterns humans or simple rules miss. A lithography misalignment, for instance, is caught before it ruins wafers.

- Automated Calibration: Reinforcement learning algorithms fine-tune deposition or etch times, keeping precision high with minimal human input.

- Fab Simulation: You can stress-test entire fabs virtually (temperature, vibration, purity changes) to see how production fares.
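Fabs have long automated recipe calibration with run-to-run (R2R) control. Its simplest form, the EWMA controller, is a useful reference point before reaching for reinforcement learning. The sketch makes three assumptions:
- a linear process model y = a + b·u, where y is the output and u is the recipe setting (such as etch time);
- a known gain b;
- an unknown offset a, which the controller estimates with an exponentially weighted moving average.

This is a swapped-in classical technique, not the RL approach described above, and all values are hypothetical.

```python
from typing import List


class EwmaR2RController:
    """Single-input EWMA run-to-run controller for y = a + b * u."""

    def __init__(self, target: float, gain_b: float, weight: float,
                 offset_guess: float = 0.0):
        self.target = target
        self.b = gain_b
        self.lam = weight              # EWMA weight, 0 < lam <= 1
        self.a_hat = offset_guess      # current estimate of the process offset

    def recipe(self) -> float:
        # Choose u so the model predicts the target: target = a_hat + b * u.
        return (self.target - self.a_hat) / self.b

    def update(self, u: float, y: float) -> None:
        # Blend the offset implied by this run into the running estimate.
        self.a_hat = self.lam * (y - self.b * u) + (1 - self.lam) * self.a_hat


def simulate(runs: int, true_offset: float = 5.0) -> List[float]:
    """Noise-free simulation against a hypothetical 'true' process."""
    ctrl = EwmaR2RController(target=25.0, gain_b=2.0, weight=0.5)
    outputs = []
    for _ in range(runs):
        u = ctrl.recipe()
        y = true_offset + 2.0 * u      # the tool's actual response
        ctrl.update(u, y)
        outputs.append(y)
    return outputs


if __name__ == "__main__":
    for run, y in enumerate(simulate(8), start=1):
        print(f"run {run}: output {y:.3f} (target 25.000)")
```

With a weight of 0.5 and no noise, the error halves on every run. In practice the weight trades responsiveness against sensitivity to measurement noise.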

The impact is real:

- 30% faster validation, qualifying new processes quicker.

- 25% better yield forecasts, cutting waste.

- Avoided downtime costs of over $2 million per tool per day (SEMI data).

AI tools like Bayesian models and reinforcement learning push fabs toward self-regulation—the factory learns to heal itself. The workflow below shows how AI twins turn raw data into better supply chain outcomes.

Figure 1: AI-powered digital twins connect fab data, predictive analytics, and real-time simulation to deliver measurable gains in yield, procurement efficiency, and supply chain resilience.

- Procurement & Supply Chain Resilience

The engineering benefits of digital twins are clear, but they also bring unexpected value to procurement and supply chain planning, functions exposed as fragile during the pandemic-era chip shortage.

- Supplier Qualification: Before approving new photoresists, gases, or wafers, digital twins simulate their impact on yield, reducing the risk of supplier-induced disruptions.

- Equipment Sourcing: AI predicts the saturation point of tools, enabling fabs to place orders in advance and avoid expensive overstocking.

- Virtual Commissioning: Tools can be simulated in the digital twin before acquisition, helping confirm return on investment and compatibility with current production lines.

Case Study: When advanced lithography tools ran short in 2021, a major foundry used its twin to re-sequence wafer queues. This move held throughput steady, saved nearly $5 million in delay costs, and kept chips on schedule.

The globalization of supply chains necessitates this foresight. A firm in Taiwan can now anticipate the effects of a European chemical delay weeks in advance and adjust wafer production schedules to mitigate losses.

- Future Outlook: Virtual Fabs, Resilience, and ESG

The goal is the “virtual-first fab.” Every new process or recipe must be fully tested in the twin before going live. That method sharply lowers risk and cuts the cost of old-school trial-and-error.

Beyond efficiency, twins are crucial for sustainability targets:

- Scrap: Less wafer loss helps factories cut material scrap by 5–10%.

- Energy: Better tuning and scheduling can drop energy use by 3–7% per wafer.

- Waste: Fewer reworks directly cuts chemical and water usage.

Rahimian and other experts say that smart fabs of the future will combine efficiency with resilience, making supply chains stronger and better for the environment.

- Challenges on the Road Ahead

Despite their promise, setting up and maintaining a digital twin system is tough.

- Data Silos: Merging data from specialized, dissimilar tools is the core issue. Many factories use older equipment that lacks common data formats.

- Computational Demands: Running high-fidelity twins can require exascale computing, which smaller operations can’t afford.

- Adoption Hurdles: The industry needs simple rules for interoperability. Plus, veteran engineers must trust AI over their experience.

Solving this requires equipment makers, software firms, and chip producers to collaborate. Groups like SEMI are already pushing for common standards.

- Visualizing the Future

To see the full potential, think of a digital dashboard for a modern fab operator:

A heatmap shows when the wafer queue will fill up, so congestion can be headed off before it starts. 3D models forecast tool wear weeks in advance. A supply chain radar tracks every bottleneck, such as a neon shortage or a logistics delay, in real time.

These visuals change factories from reactive spaces to proactive ecosystems. Every worker can now anticipate and adapt to changes, instead of constantly fighting crises.

Conclusion

The semiconductor industry drives nearly every modern device, yet its manufacturing risks are enormous. Digital twins are becoming essential because they let engineers spot yield problems early, see where supply issues may emerge, and keep sustainability efforts on track. These models aren’t just virtual copies of factories; they give teams clearer insight into how to run tools, materials, and workflows more efficiently and with fewer surprises. As digital-twin technology matures, it’s set to influence how leading fabs plan, test, and refine production. The message is clear: manufacturing’s future isn’t only physical. It’s virtual-first, AI-validated, and designed to prevent trillion-dollar mistakes.

The post Inside the Digital Twin: How AI is Building Virtual Fabs to Prevent Trillion-Dollar Mistakes appeared first on ELE Times.

ZK-DPL DC-DC Buck-Boost-Module

My little lovely ZK-DPL has arrived. It is an adjustable DC-DC buck-boost power supply module with a digital display for output voltage/current and multiple input options (USB, Micro-USB, pads). It is designed for stepping voltages up or down (e.g., 5V to 3.3V, 9V, 12V, 24V) at 3W max output. Working on finding some cool things to do with it :)
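Quick sanity check on what that 3W limit means in practice. Available output current is just I = P / V, and the draw from the USB input depends on the converter's efficiency. The 85% efficiency below is a guess for illustration, not a ZK-DPL spec.

```python
def max_output_current(power_limit_w: float, v_out: float) -> float:
    """Largest output current allowed by a power limit at a given voltage."""
    return power_limit_w / v_out


def input_current(p_out_w: float, v_in: float, efficiency: float) -> float:
    """Input current needed to deliver p_out_w at the assumed efficiency."""
    return p_out_w / efficiency / v_in


if __name__ == "__main__":
    for v in (3.3, 9.0, 12.0, 24.0):
        print(f"{v:>4} V out: up to {max_output_current(3.0, v) * 1000:.0f} mA")
    print(f"5 V USB input at full 3 W: ~{input_current(3.0, 5.0, 0.85) * 1000:.0f} mA")
```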

Inductive sensor simplifies position sensing

Melexis introduces a 16-bit inductive sensor for robotics, industrial, and mobility applications. The new dual-input MLX90514 delivers absolute position with high noise immunity through its SSI output protocol.

Applications such as robotic joint control, industrial motor commutation, and e-mobility drive systems require sensors with high-precision feedback, compact form factor, low-latency response, resilience to environmental stressors, and seamless integration, Melexis explained.

These sensors also need to overcome the limitations of optical encoders and magnetic-based solutions, the company said, which the MLX90514 addresses with its contactless inductive measurement principle. This results in stable and repeatable performance in demanding operational environments.

The inductive measurement principle operates without a permanent magnet, reducing design effort, and the sensor’s PCB-based coil design simplifies assembly and reduces costs, Melexis added.

In addition, the sensor is robust against dust, mechanical wear, and contamination compared with optical solutions. The operating temperature ranges from ‐40°C to 160°C.

(Source: Melexis)


The inductive sensor’s dual-input architecture simultaneously processes signals from two coil sets to compute vernier angles on-chip. This architecture consolidates position sensing in a single IC, reducing external circuitry and enhancing accuracy.

The MLX90514 also ensures synchronized measurements, and supports enhanced functional safety for applications such as measuring both input and output positions of a motor in e-bikes, motorcycles, or robotic joints, according to the company.
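The vernier (nonius) principle behind a dual-coil design can be shown in a few lines. Assume one coil set produces N electrical periods per mechanical revolution and the other N - 1. Each track alone is ambiguous. The difference of the two electrical angles, however, advances exactly once per revolution, which gives a coarse absolute angle. That coarse angle then selects the correct period of the N-period track. This is a generic textbook sketch with hypothetical period counts. It does not describe the MLX90514's internal algorithm.

```python
from typing import Tuple


def vernier_angle(theta_n_deg: float, theta_n_minus_1_deg: float, periods: int) -> float:
    """Absolute mechanical angle from two tracks with `periods` and `periods - 1`
    electrical periods per revolution (electrical angles in degrees, 0-360)."""
    # The difference of the two electrical angles turns once per revolution.
    coarse = (theta_n_deg - theta_n_minus_1_deg) % 360.0
    # Use the coarse angle to pick which period of the N-period track we are in.
    k = round((periods * coarse - theta_n_deg) / 360.0)
    return ((theta_n_deg + 360.0 * k) / periods) % 360.0


def electrical_angles(mech_deg: float, periods: int) -> Tuple[float, float]:
    """Ideal outputs of both tracks for a given mechanical angle."""
    return (periods * mech_deg) % 360.0, ((periods - 1) * mech_deg) % 360.0


if __name__ == "__main__":
    a, b = electrical_angles(123.4, periods=8)
    print(f"track N: {a:.1f} deg, track N-1: {b:.1f} deg -> "
          f"{vernier_angle(a, b, 8):.2f} deg")
```

The rounding step tolerates an error of up to ±180/N mechanical degrees in the coarse estimate. This is why vernier designs keep each track's error small relative to one period of the finer track.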

Targeting both absolute rotary and linear motion sensing, the MLX90514 provides up to 16-bit position resolution and zero-latency measurement with accuracy better than 0.1° for precise control in demanding environments. Other features include a 5-V supply voltage, coil diameters from 20 mm to 300 mm, and linear displacements of up to 400 mm.

The inductive sensor offers plug-and-play configuration. Engineers only need to program a limited set of parameters, specifically offset compensation and linearization, for faster system integration.

The MLX90514 inductive sensor interface IC is available now. Melexis offers a free online Inductive Simulator tool, which allows users to design and optimize coil layouts for the MLX90514.

The post Inductive sensor simplifies position sensing appeared first on EDN.

Haptic drivers target automotive interfaces

Cirrus Logic Inc. is bringing its expertise in consumer and smartphone haptics to automotive applications with its new family of closed-loop haptic drivers. The CS40L51, CS40L52, and CS40L53 devices mark the company’s first haptic driver solutions that are reliability qualified to the AEC-Q100 automotive standard.

(Source: Cirrus Logic Inc.)

The CS40L51/52/53 devices integrate a high-performance 15-V Class D amplifier, boost converter, voltage and current monitoring ADCs, waveform memory, and a Halo Core DSP. In addition to simplifying design, the family’s real-time closed-loop control improves actuator response and expands the frequency bandwidth for improved haptic effects, while the proprietary algorithms dynamically adjust actuator performance, delivering precise and high-definition haptic feedback.

The CS40L51/52/53 haptic drivers are designed to deliver a more immersive and intuitive tactile user experience in a range of in-cabin interfaces, including interactive displays, steering wheels, smart surfaces, center consoles, and smart seats. These devices can operate across varying conditions.

The advanced closed-loop hardware and algorithm improve the response time of the actuator and expand usable frequency bandwidth to create a wider range of haptic effects. Here’s the lineup:

- The CS40L51 offers advanced sensor-less velocity control (SVC), real-time audio to haptics synchronization (A2H), and active vibration compensation (AVC) for immersive, high-fidelity feedback.

- The CS40L52 features the advanced closed-loop control SVC that optimizes the system haptic performance in real time by reducing response time, increasing frequency bandwidth and compensating for manufacturing tolerances and temperature variation.

- The CS40L53 provides a click compensation algorithm to enable consistent haptic feedback across systems by adjusting haptic effects based on the actuator manufacturing characteristics.

The haptic drivers are housed in a 34-pin wettable flank QFN package. They are AEC-Q100 Grade-2 qualified for automotive applications, with an operating temperature from –40°C to 105°C.

Engineering samples of CS40L51, CS40L52, and CS40L53 are available now. Mass production will start in December 2025. Visit www.cirrus.com/automotive-haptics for more information, including data sheets and application materials.

The post Haptic drivers target automotive interfaces appeared first on EDN.

Nordic adds expansion board for nRF54L series development

Nordic Semiconductor introduces the nRF7002 expansion board II (nRF7002 EBII), bolstering the development options for its nRF54L Series multiprotocol system-on-chips (SoCs). The nRF7002 EBII plug-in board adds Wi-Fi 6 capabilities to the nRF54L Series development kits (DKs).

Based on Nordic’s nRF7002 Wi-Fi companion IC, the nRF7002 EBII lets developers using the nRF54L Series multiprotocol SoCs take advantage of Wi-Fi 6, including power-efficiency improvements for battery-powered Wi-Fi operation and management of large IoT networks. Target IoT applications include smart home and Matter-enabled devices, industrial sensors, smart city infrastructure, wearables, and medical devices.

(Source: Nordic Semiconductor)

The nRF7002 EBII provides seamless hardware and software integration with the nRF54L15 and nRF54LM20 development kits. The EBII supports dual-band Wi-Fi (2.4 GHz and 5 GHz) and advanced Wi-Fi 6 features such as target wake time (TWT), OFDMA, and BSS Coloring, enabling interference-free, battery-powered operation, Nordic said.

The new board also features a dual-band chip antenna for robust connectivity across Wi-Fi bands. The onboard nRF7002 companion IC offers Wi-Fi 6 compliance, as well as backward compatibility with 802.11a/b/g/n/ac Wi-Fi standards. It supports both STA and SoftAP operation modes.

The nRF7002 EBII can be easily integrated with the nRF54L Series development kits via a dedicated expansion header. Developers can use SPI or QSPI interfaces for host communication and use integrated headers for power profiling, making the board suited for energy-constrained designs.

The nRF7002 EBII will be available through Nordic’s distribution network in the first quarter of 2026. It is fully supported in the nRF Connect SDK, Nordic’s software development kit.

The post Nordic adds expansion board for nRF54L series development appeared first on EDN.

Latest issue of Semiconductor Today now available

Wolfspeed powering Toyota’s EV platforms with silicon carbide components

5N Plus added to S&P/TSX Composite Index

BluGlass appoints CEO Jim Haden as executive director, replacing non-exec director Jean Michel Pelaprat

Fixed a flaky toaster oven button.

This button has been working intermittently. I pulled it out and noticed it was less "clicky" than the others. Had spares on a scrap board. Works perfectly now. The hardest part was getting into that area of the toaster.

Developing high-performance compute and storage systems

Peripheral Component Interconnect Express, or PCI Express (PCIe), is a widely used bus interconnect interface. It is found in servers and is increasingly used as a storage and GPU interconnect. The Peripheral Component Interconnect Special Interest Group (PCI-SIG) introduced the first version of PCIe, PCIe 1.0a, in 2003. It was created to replace PCI, the original parallel communications bus.

With PCI, data is transmitted in parallel across many wires. The host and all connected devices share the same signals: multiple devices use a common set of address, data, and control lines and must compete for the same bandwidth.

With PCIe, however, communication is serial and point-to-point. Data is sent over dedicated lines to each device, which enables higher bandwidth and faster data transfer. Signals travel over lanes, and each lane consists of two differential pairs: one for transmitting data and the other for receiving it. A 16-lane (x16) link is common for high-bandwidth devices such as GPUs. PCIe is scalable, however: links can be narrower, and a system can provide 64 lanes or more in total.

The PCIe standard continues to evolve, doubling the data transfer rate per generation. The latest is PCIe Gen 7, with 128 GT/s per lane in each direction, meeting the needs of data-intensive applications such as hyperscale data centers, high-performance computing (HPC), AI/ML, and cloud computing.
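A little arithmetic turns the per-generation transfer rates into usable bandwidth. This is a minimal sketch that covers PCIe 1.0 through 5.0 only. It assumes the standard 8b/10b line code for Gen 1 and Gen 2 and 128b/130b for Gen 3 through Gen 5, and it ignores packet and protocol overhead. Gen 6 and Gen 7 switch to PAM4 signaling with FLIT-based encoding, so they are left out rather than approximated. The names are hypothetical.

```cpp
// Raw per-lane signaling rate and line-code efficiency for one PCIe generation.
struct PcieGen {
    int gen;
    double gtPerSec;            // raw transfer rate per lane, GT/s
    double encodingEfficiency;  // payload bits per transmitted bit
};

constexpr PcieGen kGens[] = {
    {1, 2.5, 8.0 / 10.0},     // 8b/10b
    {2, 5.0, 8.0 / 10.0},     // 8b/10b
    {3, 8.0, 128.0 / 130.0},  // 128b/130b
    {4, 16.0, 128.0 / 130.0},
    {5, 32.0, 128.0 / 130.0},
};

// Approximate one-direction bandwidth in GB/s for a link of `lanes` lanes,
// counting only line-code overhead (not headers or flow control).
double linkGBps(const PcieGen& g, int lanes) {
    return g.gtPerSec * g.encodingEfficiency * lanes / 8.0;
}
```

`linkGBps(kGens[4], 16)` gives about 63 GB/s per direction for a Gen 5 x16 link. The table also shows why Gen 3 still counts as a doubling over Gen 2 even though its raw rate rises only from 5 to 8 GT/s: the leaner 128b/130b code recovers most of the difference.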

PCIe clocking

Another differentiating factor between PCI and PCIe is the clocking. PCI uses LVCMOS clocks, whereas PCIe uses differential high-speed current-steering logic (HCSL) and low-power HCSL clocks. These clocks can be configured with the spread-spectrum clocking (SSC) scheme.

SSC works by spreading the clock signal's energy across a band of frequencies rather than concentrating it at a single peak frequency. This reduces the large electromagnetic spikes at specific frequencies that could cause electromagnetic interference (EMI) in other components, which helps protect their signal integrity.

On the flip side, SSC introduces jitter (timing variations in the clock signal), because the scheme frequency-modulates the clock. PCIe devices are therefore permitted a certain amount of jitter, within limits that preserve signal integrity.

In PCIe systems, a reference clock (REFCLK), typically a 100-MHz HCSL clock, serves as a common timing reference for all devices on the bus. In the SSC scheme, the REFCLK signal is modulated with a low-frequency (30- to 33-kHz) sinusoidal signal.
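The modulation described above can be written down directly. This sketch assumes a down-spread profile, in which the clock only moves below its nominal frequency, with a 0.5% depth, the limit commonly cited for PCIe REFCLK. The sinusoidal shape follows the description above, although real clock generators may use other profiles, such as triangular. The function name is hypothetical.

```cpp
#include <cmath>

// Instantaneous REFCLK frequency (Hz) under sinusoidal down-spread SSC.
// f0: nominal frequency (Hz); spread: fractional down-spread (0.005 = 0.5%);
// fm: modulation frequency (Hz); t: time (s).
double sscFrequency(double f0, double spread, double fm, double t) {
    const double kPi = 3.14159265358979323846;
    // (1 - cos) / 2 swings between 0 and 1, so the clock moves
    // between f0 and f0 * (1 - spread) once per modulation period.
    return f0 * (1.0 - spread * 0.5 * (1.0 - std::cos(2.0 * kPi * fm * t)));
}
```

With f0 = 100 MHz, spread = 0.005, and fm = 33 kHz, the clock sweeps between 100 MHz and 99.5 MHz once every 30.3 µs and averages 99.75 MHz.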

Several PCIe clocking architectures are used to balance performance, complexity, and cost while ensuring correct operation. They are common clock (CC), common clock with spread (CCS), separate reference clock with no spread (SRNS), and separate reference clock with independent spread (SRIS). Both the common-clock and separate-reference families include a variant that uses SSC (CCS and SRIS, respectively), with differing amounts of modulation.

Each clocking scheme has its own advantages and disadvantages in terms of jitter and transmission complexity. CC is the simplest and cheapest option to use in designs. Here, the transmitter and receiver share the same REFCLK, and a PLL multiplies the REFCLK frequency to create the high-speed clock signals needed for data transmission. With the separate clocking schemes, each PCIe endpoint and root complex has its own independent clock source.
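The four architectures differ along two axes: whether the link partners share one REFCLK, and whether that clock is spread. That makes them fit in a small lookup table. This is an illustrative sketch only, and the struct and function names are hypothetical. A real selection also weighs the jitter and cost trade-offs described above.

```cpp
// The four PCIe reference-clock architectures, described by two choices.
struct ClockArch {
    const char* name;
    bool sharedRefclk;  // true: both link partners use one REFCLK source
    bool spread;        // true: the REFCLK uses spread-spectrum clocking
};

constexpr ClockArch kClockArchs[] = {
    {"CC", true, false},     // common clock
    {"CCS", true, true},     // common clock with spread
    {"SRNS", false, false},  // separate refclk, no spread
    {"SRIS", false, true},   // separate refclk, independent spread
};

// Return the architecture that matches the two design choices.
const ClockArch* pickClockArch(bool sharedRefclk, bool spread) {
    for (const ClockArch& a : kClockArchs) {
        if (a.sharedRefclk == sharedRefclk && a.spread == spread) {
            return &a;
        }
    }
    return nullptr;  // not reached: the table covers all four combinations
}
```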

PCIe switches

To manage the data traffic on the lanes between different components within a system, PCIe switches are used. They allow multiple PCIe devices, from network and storage cards to graphics and sound cards, to communicate with one another and the CPU simultaneously, optimizing system performance. In cloud computing and AI data centers, PCIe switches connect multiple NICs, GPUs, CPUs, NPUs, and other processors in the servers, all of which require a robust and improved PCIe infrastructure.

PCIe switches play a key role in next-generation open, hyperscale data center specifications, which a growing community of developers around the world is rapidly advancing. This is particularly important with the advent of ML- and AI-centric data centers, underpinned by HPC systems. PCIe switches are also instrumental in many industrial setups, wired networking, and communications systems, and wherever many high-speed devices must be connected and their data traffic managed effectively.

A well-known brand of PCIe switches is the Switchtec family from Microchip Technology. The Switchtec PCIe switch IP manages the data flow and peer-to-peer transfers between ports, providing flexibility, scalability, and configurability in connecting multiple devices.

The Switchtec Gen 5.0 PCIe high-performance product lineup delivers very low system latency, as well as advanced diagnostics and debugging tools for troubleshooting and fast product development, making it highly suitable for next-generation data center, ML, automotive, communications, defense, and industrial applications, as well as other sectors. Tier 1 data center providers are relying on Switchtec PCIe switches to enable highly flexible compute and storage rack architectures.

Reference design and evaluation kit for PCIe switches

To enable rapid, PCIe-based system development, Microchip has created a validation board reference design, shown in Figure 1, using the Switchtec Gen 5 PCIe Switch Evaluation Kit, known as the PM52100-KIT.

Figure 1: Microchip’s Switchtec Gen 5 PCIe switch reference design validation board (Source: Microchip Technology Inc.)

The reference design helps developers implement the Switchtec Gen 5 PCIe switch into their own systems. The guidelines show designers how to connect and configure the switch and how to reach the best balance for signal integrity and power, as well as meet other critical design aspects of their application.

Fully tested and validated, the reference design streamlines development and speeds time to market. The solution optimizes performance, costs, and board footprint and reduces design risk, enabling fast market entry with a finished product. See the solutions diagram in Figure 2.

Figure 2: Switchtec Gen 5 PCIe solutions setup with other key devices (Source: Microchip Technology Inc.)

As with all Switchtec PCIe switch designs, full access to the Microchip ChipLink diagnostics tool is included, which allows parameter configuration, functional debug, and signal integrity analysis.

As with all PCIe integrations, clock and timing are important aspects of the design. Clock solutions must be highly reliable for demanding end applications, and the Microchip reference design includes complete PCIe Gen 1–5 timing solutions that include the clock generators, buffers, oscillators, and crystals.

Microchip’s ClockWorks Configurator and product selection tool allow easy customization of the timing devices for any application. The tool is used to configure oscillators and clock generators with specific frequencies, among other parameters, for use within the reference design.

The Microchip PM52100-KIT

For firsthand experience of the Switchtec Gen 5 PCIe switch, Microchip provides the PM52100-KIT (Figure 3), a physical board with 52 ports. The kit enables users to experiment with and evaluate the Switchtec family of Gen 5 PCIe switches in real-life projects. The kit was built with the guidance provided by the Microchip reference design.

Figure 3: The Microchip PM52100-KIT (Source: Microchip Technology Inc.)

The kit contains an evaluation board with the necessary firmware and cables. Users can download the ChipLink diagnostic tool by requesting access via a myMicrochip account.

With the ChipLink GUI, which is available for Windows, Mac, and Linux systems, users can easily access the board’s hardware functions and monitor system status information. It also allows access to the registers in the PCIe switch and configuration of the high-speed analog settings for signal integrity evaluation. The ChipLink diagnostic tool features advanced debug capabilities that simplify overall system development.

The kit operates with a PCIe host and supports the connection of multiple host entities to multiple endpoint devices.

The PCIe interface includes an edge connector for linking to the host, several Amphenol Mini Cool Edge I/O connectors for connecting the host to endpoints, and connectors for add-in cards. The 0.60-mm Amphenol connector supports high-speed signaling up to Gen 6 PCIe and 64G PAM4, as well as Gen 5 and Gen 4 PCIe. Its design minimizes signal loss and reflections at higher frequencies, which maintains signal integrity.

The board’s PCIe clock interface supports a common reference clock (with or without SSC), SRNS, and SRIS. In the common-clock scheme, a single stable, low-jitter clock is shared by both ends of the link. The second most common clocking scheme is SRNS, in which an independent clock is supplied to each end of the PCIe link; the Microchip kit supports this as well.

Among the kit’s peripherals are two-wire (TWI)/SMBus interfaces; TWI bus access and connectivity to the temperature sensor, fan controller, voltage monitor, GPIO, and TWI expanders; SEEPROM for storage and PCIe switch configuration; and 100 M/GE Ethernet. The kit also includes GPIOs for TWI, SPI, SGPIO, Ethernet, and UART interfaces. UART access is provided through a USB Type-B connector and a three-pin connector header.

The included PSX Software Development Kit (integrating GHS’s MULTI development environment) enables the development and testing of custom PCIe switch functions. An EJTAG debugger supports test and debug of custom PSX firmware, and a 14-pin EJTAG connector header provides PSX probe connectivity.

Switchtec Gen 5 52-lane PCIe switch reference design

Microchip also offers a Switchtec 52-lane Gen 5 PCIe Switch Reference Design (Figure 4). As with the other reference design, it is fully validated and tested, and it provides the components and tools necessary to thoroughly assess and integrate this design into your systems.

This board includes a 32-bit microcontroller (ATSAME54, based on the Arm Cortex-M4 processor with a floating-point unit) to be configured with Microchip’s MPLAB Harmony software, as well as a CEC1736 root-of-trust controller. The CEC1736 is a 96-MHz Arm Cortex-M4F controller that is used to detect and provide protection for the PCIe system against failure or malicious attacks.

Figure 4: Switchtec Gen 5 52-lane PCIe switch reference design board (Source: Microchip Technology Inc.)

Microchip and the PCIe standard

Microchip continues to be actively involved in the advancement of the PCIe standard, and it regularly participates in PCI-SIG compliance and interoperability events. With its turnkey PCIe reference designs and field-proven interoperable solutions, a high-performance design can be streamlined and brought to market very quickly.

To view the full details of this reference design, bill of materials, and to download the design files, visit https://www.microchip.com/en-us/tools-resources/reference-designs/switchtec-gen-5-pcie-switch-reference-design.

The post Developing high-performance compute and storage systems appeared first on EDN.

A digital filter system (DFS), Part 2

Editor’s note: In this Design Idea (DI), contributor Bonicatto designs a digital filter system (DFS). This is a benchtop filtering system that can apply various filter types to an incoming signal. The filtering range is up to 120 kHz.

In Part 1 of this DI, the DFS’s function and hardware implementation are discussed.

In Part 2 of this DI, the DFS’s firmware and performance are discussed.

Firmware

The firmware, although a bit large, is not too complicated. It is broken into six files to make browsing the code a bit easier. Let’s start with the code for the LCD screen and its attached touch detector.

LCD and touchscreen code

This is a good place to show the LCD screens, since they are discussed below.

The LCD screen in the DFS. From the top left: Splash Screen, Main Screen, Filter Selection Screen, Cutoff or Center Frequency Screen, Digital Gain Screen, About Screen, and Running Screen.

A quick discussion of the screens will give you a good overview of the operation and capabilities of the DFS. The first screen is the opening or Splash Screen—not much here to see, but there is a battery charge icon on the upper right.

Touching this screen anywhere will move you to the Main Screen. The Main Screen shows you the current settings and allows you to jump to the appropriate screen to adjust the filter type, cutoff, or center frequency of the filter, and the digital gain. It also has the “RUN” button that starts the filtering function based on the current settings.

If you touch “Change Filter Type” on the Main Screen, you will jump to the Filter Selection Screen. This lets you select the filter type you would like to use and whether you want 2-pole or 4-pole filtering. (Selecting a 2-pole filter will give you a roll-off of 12 dB per octave, or 40 dB per decade, while the 4-pole filter will provide a roll-off of 24 dB per octave, or 80 dB per decade.) When you touch the “APPLY” button, your settings (in green) will be applied to your filter, and you will jump back to the Main Screen.
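The roll-off figures follow from the Butterworth magnitude response, |H(f)|² = 1 / (1 + (f/fc)^(2n)) for an n-pole low-pass filter. That response falls asymptotically at 20n dB per decade, or about 6n dB per octave. The quick sketch below checks the numbers. The function name is hypothetical, and the code models the ideal analog prototype rather than the DFS's digital implementation.

```cpp
#include <cmath>

// Gain in dB (negative = attenuation) of an n-pole Butterworth low-pass
// at frequency f, for cutoff fc: |H|^2 = 1 / (1 + (f/fc)^(2n)).
double butterworthGainDb(int poles, double f, double fc) {
    return -10.0 * std::log10(1.0 + std::pow(f / fc, 2.0 * poles));
}
```

`butterworthGainDb(2, 10.0, 1.0)` is about -40 dB, and `butterworthGainDb(4, 10.0, 1.0)` is about -80 dB. One further octave, to 20 fc, costs roughly another 12 dB and 24 dB, respectively. At f = fc, both return about -3.01 dB.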

If you want to change the cutoff/center frequency, select that option on the Main Screen to open the Cutoff or Center Frequency Screen. You can set any frequency up to 120 kHz. Hitting “Enter” will bring you back to the Main Screen.

If you want to change the digital gain (the gain applied to each sample when the filter is run, between the ADC and the DAC), touch “Change Output Gain” on the Main Screen. This gain ranges from 0.01 to 100. Again, “Enter” will take you back to the Main Screen.

On the Main Screen, selecting “About” will take you to a screen showing lots of interesting information, such as your filter settings, your current filter’s coefficients, battery voltage, and charge level. (If you do not have a battery installed, you may see fully charged numbers because the system is reading the charger voltage. There is a #define at the top of the main code that you can set to false if you don’t have a battery installed; the battery line then won’t be shown.) The last item shown is the incoming USB voltage.

On the Main Screen, touching “RUN” will start the system: it takes in your signal at the input BNC, filters it, and sends it out the output BNC. The Running Screen also shows you the current filter settings.

Now, let’s take a quick look at the mechanics of creating the screens. The LCD uses a few libraries to assist in the display of primitive shapes and colors, text, and to provide some functions for reading the selected touchscreen position. When opening the Arduino code, you will find a file labeled “TFT_for_adjustable_filter_7.ino”. This file contains the code for most of the screen displays and touchscreen functions. The code is too long to list here, but here are a few lines of code setting the background color and displaying the letters “DFS”:

```cpp
tft.fillScreen(tft.color565(0xe0, 0xe0, 0xe0));  // Grey background
tft.setFont(&FreeSansBoldOblique50pt7b);         // 50-pt bold-oblique GFX font
tft.setTextColor(ILI9341_RED);
tft.setTextSize(1);                              // draw the font at its native size
tft.setCursor(13, 100);                          // x, y of the text baseline
tft.print("DFS");
```

A lot of the functions look like this, as they are used to present the data and create the keypads for filter selection, frequency setting, and digital gain setting. This file also contains the functions that monitor the touchscreen and connect each touch to the appropriate function.

The touchscreen, although included on the LCD, is essentially independent of it. One issue that needed to be solved was that the touchscreen is not accurately aligned over the LCD screen: pixel (0,0) does not line up with touch position (0,0) on the touchscreen.

Also, the LCD has 240×320 pixels, and the touch screen has, roughly, 3900×900 positions. So there needs to be some calibration and then a conversion function to convert the touch point to the LCD’s pixel coordinates. You’ll see in the code that, after calibration, the conversion function is very easy using the Arduino map() function.
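Here is a minimal sketch of that calibration-plus-map() step, written as plain C++ so that it runs off-target. The rescaling function reproduces the classic integer arithmetic of Arduino's map(). The calibration struct, its raw edge values, and the helper names are hypothetical and are not taken from the DFS code.

```cpp
#include <cassert>

// Same integer arithmetic as Arduino's map(): rescale x linearly
// from [inMin, inMax] to [outMin, outMax] (no clamping).
long mapRange(long x, long inMin, long inMax, long outMin, long outMax) {
    assert(inMax != inMin);
    return (x - inMin) * (outMax - outMin) / (inMax - inMin) + outMin;
}

// Hypothetical calibration: raw touch readings measured at the LCD's edges.
struct TouchCal {
    long rawXMin, rawXMax, rawYMin, rawYMax;
};

struct Point {
    long x, y;
};

long clampLong(long v, long lo, long hi) {
    return v < lo ? lo : (v > hi ? hi : v);
}

// Convert a raw touch reading to pixel coordinates on a 240 x 320 LCD.
Point touchToPixel(long rawX, long rawY, const TouchCal& cal) {
    Point p;
    p.x = mapRange(rawX, cal.rawXMin, cal.rawXMax, 0, 239);
    p.y = mapRange(rawY, cal.rawYMin, cal.rawYMax, 0, 319);
    // Readings slightly outside the calibrated area land on the edge pixels.
    p.x = clampLong(p.x, 0, 239);
    p.y = clampLong(p.y, 0, 319);
    return p;
}
```

If a touch axis runs opposite to the LCD axis, swapping the min and max raw values in the calibration flips the mapping, because map()-style rescaling works with reversed ranges.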

Other files

There is also a file that contains the initialization code for the ADC. This sets up the sample rate, resolution, and other needed items. Note that this code runs at startup and again whenever the number of poles in the filter changes, because the sample rate must change. Another file contains the code to initialize the DAC, which is also called when the program starts.

Also included is a file with code to pull in factory calibration data for linearizing the ADC. This calibration data is created at the factory and is stored in the microcontroller (micro)—nice feature.

Filtering code

Now to the more interesting code. There is code to route you to the screens and their selections, but let’s skip that and look at how the filtering is accomplished. After you have selected a filter type (low-pass, high-pass, band-pass, or band-stop), the number of poles of the filter (2 or 4), the cutoff or center frequency of the filter, or adjusted the internal digital output gain, the code to calculate the Butterworth IIR filter coefficients is called.

These coefficients are calculated for a direct form II filter algorithm. The coefficients for the filters are floats, which allow the system to create filters accurately throughout a 1 Hz to 120 kHz range. After the coefficients are calculated, the filter can be run. When you touch the “RUN” button, the code begins the execution of the filter.
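The coefficient calculation and the direct form II update can be sketched as shown below for the 2-pole low-pass case. The DFS's own formulas aren't reproduced here. Instead, this sketch uses the widely used bilinear-transform biquad formulas from Robert Bristow-Johnson's Audio EQ Cookbook, where Q = 1/√2 gives a Butterworth response. The struct, class, and function names are hypothetical. In that cookbook, the high-pass, band-pass, and band-stop versions keep the same denominator and change only the numerator (b) coefficients.

```cpp
#include <cmath>

// Biquad coefficients normalized so that a0 = 1.
struct BiquadCoeffs {
    float b0, b1, b2;  // feed-forward (numerator)
    float a1, a2;      // feedback (denominator)
};

// 2-pole low-pass via the bilinear transform (Audio EQ Cookbook form).
// q = 1/sqrt(2) gives a Butterworth (maximally flat) response.
BiquadCoeffs lowPassCoeffs(float fc, float fs, float q = 0.70710678f) {
    const float kPi = 3.14159265f;
    const float w0 = 2.0f * kPi * fc / fs;  // cutoff in radians per sample
    const float cosw0 = std::cos(w0);
    const float alpha = std::sin(w0) / (2.0f * q);
    const float a0 = 1.0f + alpha;
    BiquadCoeffs c;
    c.b0 = (1.0f - cosw0) / 2.0f / a0;
    c.b1 = (1.0f - cosw0) / a0;
    c.b2 = c.b0;
    c.a1 = -2.0f * cosw0 / a0;
    c.a2 = (1.0f - alpha) / a0;
    return c;
}

// Direct form II: one delay line w[n] is shared by the feedback and
// feed-forward halves, so each section needs only two state values.
class BiquadDF2 {
public:
    explicit BiquadDF2(const BiquadCoeffs& coeffs) : c_(coeffs) {}

    float process(float x) {
        const float w0 = x - c_.a1 * w1_ - c_.a2 * w2_;         // feedback half
        const float y = c_.b0 * w0 + c_.b1 * w1_ + c_.b2 * w2_;  // feed-forward half
        w2_ = w1_;
        w1_ = w0;
        return y;
    }

private:
    BiquadCoeffs c_;
    float w1_ = 0.0f;  // w[n-1]
    float w2_ = 0.0f;  // w[n-2]
};
```

Floats work here, but with cutoffs far below the sample rate, the poles sit close to z = 1. The low-pass filter's DC gain then depends on small differences between coefficients, which is worth keeping in mind when testing low cutoffs.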

The first thing that happens in the filter routine is that the ADC is started in a free-running mode. That means it initiates a new ADC reading, waits for the reading to complete, signals (through a register bit) that there is a new reading available, and then starts the cycle again. So, the code must monitor this register bit, and when it is set, the code must quickly get the new ADC reading and filter it, and then send that filtered value to the DAC for output.

After this, it checks for clipping in the ADC or DAC. The input to the DFS is AC-coupled. Then, when it enters the analog front-end (AFE), it is recentered at about 1.65 V. If any ADC sample gets too close to 0 or too close to 4095 (it’s a 12-bit ADC), it is flagged as clipping, and the front-panel input clipping LED will light for ~0.5 seconds. This could happen if the input signal is too large or the input gain dial has been turned up too far.

Similarly, the DAC output is checked to see if it gets too close to 0 or to 2047 (we’ll explain why the DAC is 11 bits in a moment). If it is, it is flagged as clipping, and the output clipping LED will light for ~0.5 seconds. Clipping of the DAC could happen because the digital gain has been set too high. Note that the output signal could be set too high, using the output gain dial, and it could be clipping the signal, but this would not be detected as the amplified analog back-end (ABE) signal is not monitored in the micro.

Now to why the DAC is 11 bits. In the micro, it is actually a 12-bit DAC, but in an effort to get the sample rate as fast as possible, I discovered that the DAC had an unspec’d slew rate limit of somewhere around 1 volt/microsecond.

To work around this, I divide the signal being passed to the DAC by two so the DAC’s output voltage doesn’t have to slew so far. This is compensated for in the ABE: the output gain adjustment’s actual gain is 2 to 10, but it is represented as 1 to 5. [To those of you who are questioning why I didn’t set the reference to 1.65 V (instead of 3.3 V) and use the full 12 bits, the answer is that this micro has a couple of issues in its analog reference system that precluded using 1.65 V.]
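The benefit of halving the DAC swing can be checked with the standard peak slew-rate formula for a sine wave, 2πfA. This back-of-the-envelope sketch assumes a full-scale sine centered at mid-rail with a 3.3-V reference (a peak amplitude of 1.65 V), at the 120-kHz maximum filter frequency. It also uses the roughly 1-V/µs limit observed above. The function name is hypothetical.

```cpp
// Peak slew rate (V/us) of a sine wave with the given frequency (Hz)
// and peak amplitude (V): d/dt [A sin(2*pi*f*t)] peaks at 2*pi*f*A.
double sineSlewVPerUs(double freqHz, double peakVolts) {
    const double kPi = 3.14159265358979323846;
    return 2.0 * kPi * freqHz * peakVolts / 1e6;  // V/s -> V/us
}
```

`sineSlewVPerUs(120e3, 1.65)` is about 1.24 V/µs, which is above the observed limit. `sineSlewVPerUs(120e3, 0.825)` is about 0.62 V/µs, comfortably below it.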

One last task in the filter “running” loop is to check whether “Stop” has been pressed. This is a simple input-bit read, so it takes only a few cycles.
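One possible shape for the complete running loop is sketched below: poll the free-running ADC, filter, write the DAC, check for clipping, and check Stop. The sketch runs on a host rather than the micro. The hardware hooks are hypothetical std::function stand-ins for the ready bit, the ADC and DAC registers, and the Stop input. The clip margin and the output clamping are assumptions, not values from the DFS code, and the LEDs' ~0.5-second hold time is left out.

```cpp
#include <functional>

// Hypothetical stand-ins for the micro's registers and inputs.
struct FilterIo {
    std::function<bool()> adcReady;     // "new reading available" bit
    std::function<int()> adcRead;       // 12-bit result, 0..4095
    std::function<void(int)> dacWrite;  // 11-bit value, 0..2047
    std::function<bool()> stopPressed;  // front-panel Stop input
};

constexpr int kAdcMax = 4095;
constexpr int kDacMax = 2047;
constexpr int kClipMargin = 16;  // assumed "too close to the rail" band

bool nearRail(int v, int maxCount) {
    return v <= kClipMargin || v >= maxCount - kClipMargin;
}

// Runs until Stop is pressed and returns the number of samples processed.
// `filter` maps an ADC count to a DAC count (filtering, gain, and offset).
long runFilterLoop(FilterIo& io, const std::function<int(int)>& filter,
                   bool& adcClipped, bool& dacClipped) {
    long samples = 0;
    while (!io.stopPressed()) {
        if (!io.adcReady()) {
            continue;  // keep polling until the ADC flags a new reading
        }
        const int in = io.adcRead();
        int out = filter(in);
        if (out < 0) out = 0;              // assumption: clamp to DAC range
        if (out > kDacMax) out = kDacMax;
        io.dacWrite(out);
        if (nearRail(in, kAdcMax)) adcClipped = true;   // input clip LED
        if (nearRail(out, kDacMax)) dacClipped = true;  // output clip LED
        ++samples;
    }
    return samples;
}
```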

A couple of notes on filtering: You may have noticed that there is an extra filter you can select – the “Pass-Thru”. This takes in the ADC reading, “filters” it by only multiplying the sample by the selected digital gain. It then outputs it to the DAC. I find this useful for checking the system and setup.

Another note on filtering is that you will see, in the code, that a gain offset is added when filtering. The reason for this is that, when using a high-pass or band-pass filter, the DC level is removed from the samples. We need to recenter the result at around 2048 counts. But we can’t just add 2048, as the digital gain also comes into play. You’ll see this compensation calculated using the gain and applied in the filtering routines.
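Here is one way to express that compensation, as a sketch. Suppose the input sits at a mid-scale DC level and the filter passes a fraction dcGain of DC: 1 for low-pass, band-stop, and Pass-Thru, and 0 for high-pass and band-pass. The constant to add after scaling by the digital gain then follows directly. The function names and the exact form are assumptions, not the DFS code.

```cpp
// Offset to add after filtering and digital gain so the output is
// centered at midScale counts.
// dcGain: the filter's gain at 0 Hz (1 = passes DC, 0 = blocks DC).
float recenterOffset(float digitalGain, float dcGain, float midScale = 2048.0f) {
    // The input's DC level (midScale) reaches the output as
    // digitalGain * dcGain * midScale; add whatever is missing.
    return midScale - digitalGain * dcGain * midScale;
}

// Output sample in counts: gain-scaled filter output plus the offset.
float outputSample(float filtered, float digitalGain, float dcGain,
                   float midScale = 2048.0f) {
    return digitalGain * filtered + recenterOffset(digitalGain, dcGain, midScale);
}
```

For a high-pass filter with a gain of 2, the offset is +2048. For a low-pass filter with the same gain, it is -2048, because the doubled DC level must be pulled back down.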

Performance

I managed to get the ADC and DAC to work at 923,645 samples per second (sps) when using any of the 2-pole filters. This gives a Nyquist frequency of about 462 kHz. Since the highest selectable filter frequency is 120 kHz, we get an oversample rate of almost 4. For 4-pole filters, the system runs at 795,250 sps, for an oversample rate of around 3.3.
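A few lines of arithmetic check the rate figures above. In this sketch, the oversample figure is the Nyquist frequency divided by the 120-kHz maximum filter frequency, which reproduces the numbers given. The struct and function names are hypothetical.

```cpp
// Timing budget for a given ADC/DAC sample rate.
struct RateBudget {
    double nyquistHz;   // half the sample rate
    double oversample;  // Nyquist frequency / highest selectable filter frequency
    double periodUs;    // time available per sample for read, filter, write
};

RateBudget budget(double sampleRate, double maxFilterHz = 120000.0) {
    RateBudget b;
    b.nyquistHz = sampleRate / 2.0;
    b.oversample = b.nyquistHz / maxFilterHz;
    b.periodUs = 1e6 / sampleRate;
    return b;
}
```

`budget(923645.0)` gives a Nyquist frequency of 461,822.5 Hz, an oversample figure of about 3.85, and roughly 1.08 µs per sample for the whole read-filter-write loop. `budget(795250.0)` gives about 3.31 and 1.26 µs.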

The 4-pole filters are slower as they are created by cascading two 2-pole filters. [To check these sample rates and see if the loop has enough time to complete before the following sample is read, I toggle the D4 digital output as I pass through the loop. I then monitor this on a scope.]
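Cascading works because the transfer functions of series sections multiply. For a true 4th-order Butterworth response, the two 2-pole sections need different Q values: 1/(2 sin(π/8)) ≈ 1.3066 and 1/(2 sin(3π/8)) ≈ 0.5412. Two copies of the 2-pole Butterworth section (Q ≈ 0.7071) would not give that response. The article doesn't say which approach the DFS uses. This sketch, with hypothetical names, builds the cascade from bilinear-transform low-pass sections using the Audio EQ Cookbook formulas. It computes the coefficients in double and stores them as float.

```cpp
#include <cmath>
#include <complex>

constexpr double kPi = 3.14159265358979323846;

// One 2-pole direct form II section with normalized coefficients (a0 = 1).
struct Section {
    float b0 = 0, b1 = 0, b2 = 0, a1 = 0, a2 = 0;
    float w1 = 0, w2 = 0;  // delay-line state

    float process(float x) {
        const float w0 = x - a1 * w1 - a2 * w2;
        const float y = b0 * w0 + b1 * w1 + b2 * w2;
        w2 = w1;
        w1 = w0;
        return y;
    }

    // Magnitude response at normalized frequency w (radians per sample).
    double magnitudeAt(double w) const {
        const std::complex<double> z1 = std::polar(1.0, -w);  // z^-1
        const std::complex<double> z2 = z1 * z1;              // z^-2
        return std::abs((double(b0) + double(b1) * z1 + double(b2) * z2) /
                        (1.0 + double(a1) * z1 + double(a2) * z2));
    }
};

// 2-pole low-pass section with quality factor q (Audio EQ Cookbook formulas).
Section lowPassSection(double fc, double fs, double q) {
    const double w0 = 2.0 * kPi * fc / fs;
    const double cosw0 = std::cos(w0);
    const double alpha = std::sin(w0) / (2.0 * q);
    const double a0 = 1.0 + alpha;
    Section s;
    s.b0 = static_cast<float>((1.0 - cosw0) / 2.0 / a0);
    s.b1 = static_cast<float>((1.0 - cosw0) / a0);
    s.b2 = s.b0;
    s.a1 = static_cast<float>(-2.0 * cosw0 / a0);
    s.a2 = static_cast<float>((1.0 - alpha) / a0);
    return s;
}

// 4-pole Butterworth low-pass: two cascaded sections with the Butterworth Qs.
struct LowPass4 {
    Section s1, s2;
    LowPass4(double fc, double fs)
        : s1(lowPassSection(fc, fs, 1.0 / (2.0 * std::sin(kPi / 8.0)))),
          s2(lowPassSection(fc, fs, 1.0 / (2.0 * std::sin(3.0 * kPi / 8.0)))) {}

    float process(float x) { return s2.process(s1.process(x)); }
};
```

A cookbook low-pass section has a gain of exactly Q at its cutoff. The cascade's gain at fc is therefore 1.3066 × 0.5412 ≈ 0.7071, the expected -3 dB point.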

The noise floor of the output signal is fairly good, but as I built this on a protoboard with no ground planes, I think it could be lower if this were built on a PCB with a good ground plane. The limiting factor on my particular board, though, is the spurious free dynamic range (SFDR).

I do get harmonic spurs at various frequencies, but I can get up to a 63 dB SFDR. This is not far from the SFDR spec of the ADC. I did notice that the amplitude of these harmonic spurs changed when I moved cables inside the enclosure, so good cable management may improve this.

Use of AI

Recently, I’ve been using AI in design, and I like to include a quick note on whether AI helped. Here’s how it worked out. The code to initialize the ADC was quite difficult, mostly because there was a lot of misinformation about this online.

I used Microsoft Copilot and ChatGPT to assist. Both fell victim to some of the online misinformation, but by pointing out some of the errors, I got these AI systems close enough that I could complete the ADC initialization myself.

To design the battery charge indicator on the splash screen, I used the same AI. It was a pure waste of time as it produced something that didn’t look like a battery – it was easier and faster to design it myself.

The code for the filter coefficients was difficult to track down online, and ChatGPT did a much better job of searching than Google.

For circuit design, I tried to use AI for the Sallen-Key filter designs, but it was a failure, just a waste of time. Stick to the IC supplier tools for filter design.

Tried to use AI to design a nice splash screen, but I wasted more time iterating with the chatbot than it took to design the screen.

I also tried to get AI to design an enclosure with a viewable LCD, BNC connectors, etc. To be honest, I didn’t give it many chances, but I couldn’t get it to stop focusing on a flat rectangular box.

Modifications

The code is actually easy to read, and you may want to modify it to add features (like a circular buffer sample delay) or modify things like the filter coefficient calculations. I think you’ll find this DFS has a number of uses in your lab/shop.

The schematic, code, 3D print files, links to various parts, and more information and notes on the design and construction can be downloaded at: https://makerworld.com/en/@user_1242957023/upload

Damian Bonicatto is a consulting engineer with decades of experience in embedded hardware, firmware, and system design. He holds over 30 patents.

Phoenix Bonicatto is a freelance writer.

Related Content

- A digital filter system (DFS), Part 1

- How much help are free AI tools for electronic design?

- Non-linear digital filters: Use cases and sample code

- A beginner’s guide to power of IQ data and beauty of negative frequencies – Part 1

- A Sallen-Key low-pass filter design toolkit

- Designing second order Sallen-Key low pass filters with minimal sensitivity to component tolerances

- Toward better behaved Sallen-Key low pass filters

- Design second- and third-order Sallen-Key filters with one op amp

The post A digital filter system (DFS), Part 2 appeared first on EDN.

Open World Foundation Models Generate Synthetic Worlds for Physical AI Development

Courtesy: Nvidia

Physical AI models, which power robots, autonomous vehicles, and other intelligent machines, must be safe, generalize to dynamic scenarios, and be capable of perceiving, reasoning, and operating in real time. Unlike large language models that can be trained on massive datasets from the internet, physical AI models must learn from data grounded in the real world.

However, collecting sufficient data that covers this wide variety of scenarios in the real world is incredibly difficult and, in some cases, dangerous. Physically based synthetic data generation offers a key way to address this gap.

NVIDIA recently released updates to NVIDIA Cosmos open-world foundation models (WFMs) to accelerate data generation for testing and validating physical AI models. Using NVIDIA Omniverse libraries and Cosmos, developers can generate physically based synthetic data at incredible scale.

Cosmos Predict 2.5 now unifies three separate models — Text2World, Image2World, and Video2World — into a single lightweight architecture that generates consistent, controllable multicamera video worlds from a single image, video, or prompt.

Cosmos Transfer 2.5 enables high-fidelity, spatially controlled world-to-world style transfer to amplify data variation. Developers can add new weather, lighting and terrain conditions to their simulated environments across multiple cameras. Cosmos Transfer 2.5 is 3.5x smaller than its predecessor, delivering faster performance with improved prompt alignment and physics accuracy.

These WFMs can be integrated into synthetic data pipelines running in the NVIDIA Isaac Sim open-source robotics simulation framework, built on the NVIDIA Omniverse platform, to generate photorealistic videos that reduce the simulation-to-real gap. Developers can reference a four-part pipeline for synthetic data generation:

- NVIDIA Omniverse NuRec neural reconstruction libraries for reconstructing a digital twin of a real-world environment in OpenUSD, starting with just a smartphone.

- SimReady assets to populate a digital twin with physically accurate 3D models.

- The MobilityGen workflow in Isaac Sim to generate synthetic data.

- NVIDIA Cosmos for augmenting generated data.
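The four stages above can be pictured as a chain in which each step hands its output to the next. The following is a minimal sketch of that data flow only: every function and the `Scene` container are hypothetical stand-ins, not the NuRec, SimReady, MobilityGen, or Cosmos APIs, and "rendering" is reduced to naming frames.

```python
from dataclasses import dataclass, field


@dataclass
class Scene:
    """Hypothetical container passed between pipeline stages."""
    name: str
    assets: list = field(default_factory=list)
    frames: list = field(default_factory=list)


def reconstruct(capture_name):
    # Stage 1 (stand-in for NuRec): turn a smartphone capture into a digital twin.
    return Scene(name=f"twin_of_{capture_name}")


def populate(scene, assets):
    # Stage 2 (stand-in for SimReady assets): add physically accurate 3D models.
    scene.assets.extend(assets)
    return scene


def generate(scene, n_frames):
    # Stage 3 (stand-in for MobilityGen in Isaac Sim): produce synthetic frames.
    scene.frames = [f"{scene.name}/frame_{i:04d}" for i in range(n_frames)]
    return scene


def augment(scene, styles):
    # Stage 4 (stand-in for Cosmos): restyle every frame under each style.
    return [f"{frame}@{style}" for frame in scene.frames for style in styles]


scene = populate(reconstruct("warehouse_scan"), ["pallet", "forklift"])
dataset = augment(generate(scene, n_frames=3), styles=["day", "night"])
print(len(dataset), dataset[0])
```

Keeping each stage a separate function reflects the modular intent of the reference pipeline: any stage can be swapped (for example, a different reconstruction source) without touching the others.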

From Simulation to the Real World

Leading robotics and AI companies are already using these technologies to accelerate physical AI development.

Skild AI, which builds general-purpose robot brains, is using Cosmos Transfer to augment existing data with new variations for testing and validating robotics policies trained in NVIDIA Isaac Lab.

Skild AI uses Isaac Lab to create scalable simulation environments where its robots can train across embodiments and applications. By combining Isaac Lab robotics simulation capabilities with Cosmos’ synthetic data generation, Skild AI can train robot brains across diverse conditions without the time and cost constraints of real-world data collection.

Serve Robotics uses synthetic data generated from thousands of simulated scenarios in NVIDIA Isaac Sim. The synthetic data is then used in conjunction with real data to train physical AI models. The company has built one of the largest autonomous robot fleets operating in public spaces and has completed over 100,000 last-mile meal deliveries across urban areas. Serve’s robots collect 1 million miles of data monthly, including nearly 170 billion image-lidar samples, which are used in simulation to further improve robot models.

See How Developers Are Using Synthetic Data

Lightwheel, a simulation-first robotics solution provider, is helping companies bridge the simulation-to-real gap with SimReady assets and large-scale synthetic datasets. With high-quality synthetic data and simulation environments built on OpenUSD, Lightwheel’s approach helps ensure robots trained in simulation perform effectively in real-world scenarios, from factory floors to homes.

Data scientist and Omniverse community member Santiago Villa is using synthetic data with Omniverse libraries and Blender software to improve mining operations by detecting large boulders that can halt production.

Undetected boulders entering crushers can cause delays of seven minutes or more per incident, costing mines up to $650,000 annually in lost production. Using Omniverse to generate thousands of automatically annotated synthetic images across varied lighting and weather conditions dramatically reduces training costs while enabling mining companies to improve boulder detection systems and avoid equipment downtime.
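Automatic annotation falls out of the generator itself: because the simulator places each boulder, its bounding box is known without manual labeling. The sketch below is plain Python rather than Omniverse Replicator code; the parameter ranges, the `SyntheticSample` record, and the single-boulder, square-box scene are all hypothetical simplifications, and a real renderer would compute the 2D box by projecting the 3D asset into the camera.

```python
import random
from dataclasses import dataclass


@dataclass
class SyntheticSample:
    image_id: int
    sun_elevation_deg: float
    weather: str
    bbox: tuple  # (x_min, y_min, x_max, y_max) in pixels
    label: str = "boulder"


def sample_scene(image_id, rng, width=1280, height=720):
    """Randomize conditions and place one boulder; its box is known exactly."""
    size = rng.randint(60, 300)          # hypothetical boulder size in pixels
    x = rng.randint(0, width - size)     # keep the whole boulder in frame
    y = rng.randint(0, height - size)
    return SyntheticSample(
        image_id=image_id,
        sun_elevation_deg=rng.uniform(5.0, 85.0),
        weather=rng.choice(["clear", "overcast", "dust", "rain"]),
        bbox=(x, y, x + size, y + size),
    )


rng = random.Random(42)  # fixed seed so the dataset can be regenerated exactly
dataset = [sample_scene(i, rng) for i in range(1000)]
print(dataset[0])
```

Seeding the random generator makes the dataset reproducible, which helps when comparing detector versions trained on the same synthetic images.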

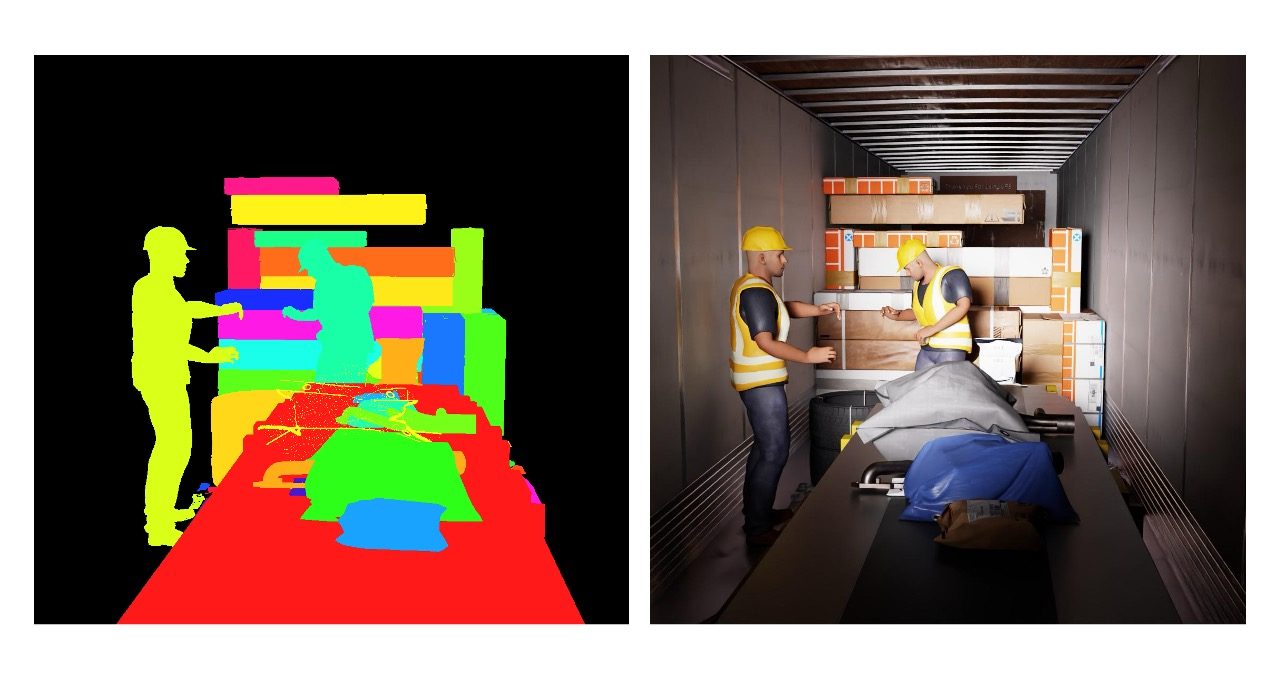

FS Studio partnered with a global logistics leader to improve AI-driven package detection by creating thousands of photorealistic package variations in different lighting conditions using Omniverse libraries like Replicator. The synthetic dataset dramatically improved object detection accuracy and reduced false positives, delivering measurable gains in throughput speed and system performance across the customer’s logistics network.

Robots for Humanity built a full simulation environment in Isaac Sim for an oil and gas client using Omniverse libraries to generate synthetic data, including depth, segmentation and RGB images, while collecting joint and motion data from the Unitree G1 robot through teleoperation.

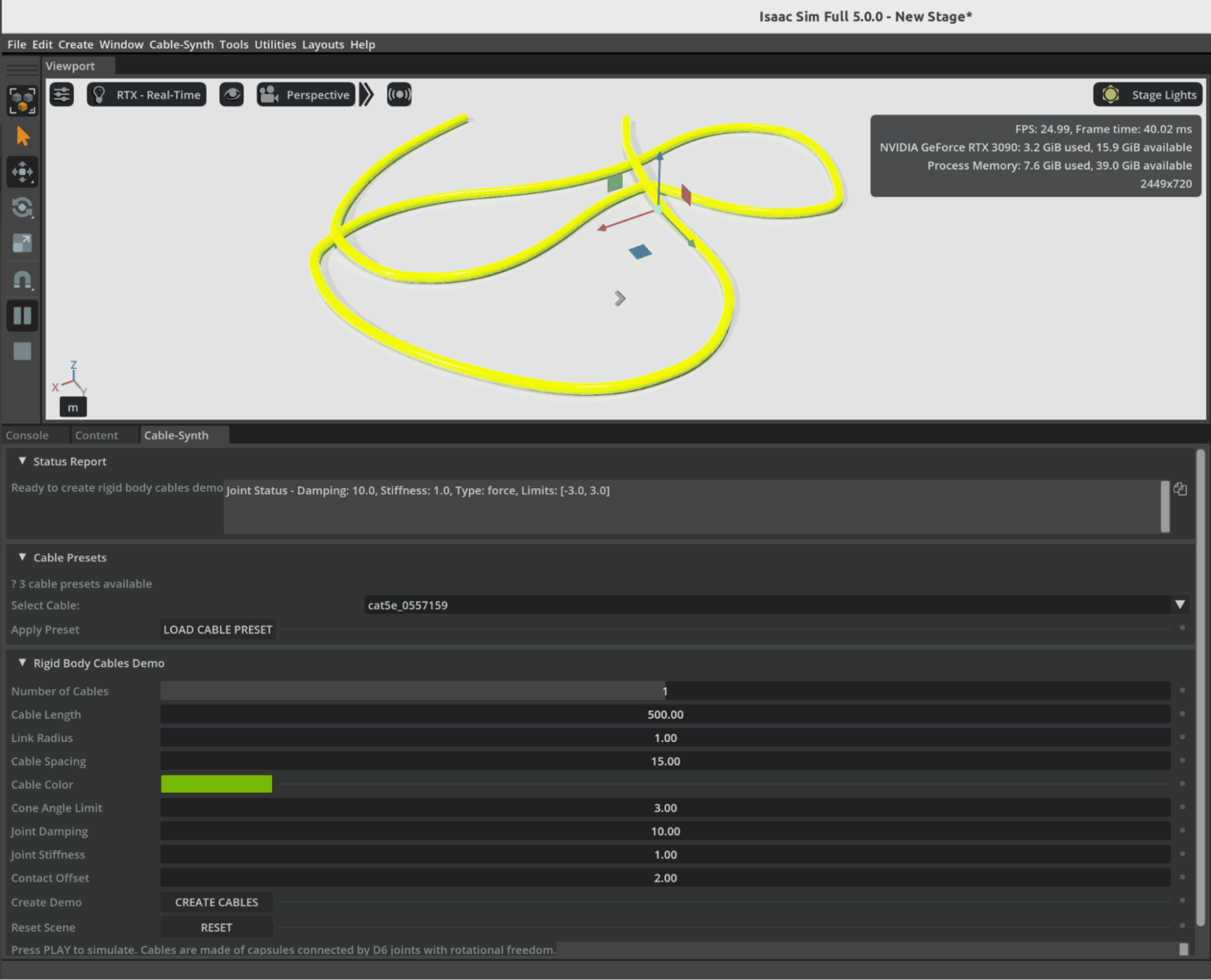

Omniverse Ambassador Scott Dempsey is developing a synthetic data generator that builds various cables from real-world manufacturer specifications, using Isaac Sim to generate synthetic data that is then augmented with Cosmos Transfer to create photorealistic training datasets for applications that detect and handle cables.
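One way a specification-driven cable generator could work is to treat datasheet values as constraints on random geometry. The sketch below is hypothetical throughout: the `CableSpec` fields, the part number, and the 2D polyline model are illustrative assumptions, not Dempsey's implementation or an Isaac Sim API. It caps each random turn so the centerline never bends tighter than the specified minimum bend radius.

```python
import math
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class CableSpec:
    """Hypothetical subset of a manufacturer datasheet."""
    part_number: str
    outer_diameter_mm: float
    min_bend_radius_mm: float
    jacket_colors: tuple


def cable_centerline(spec, length_mm, rng, segment_mm=10.0):
    """Random 2D polyline whose turns never bend tighter than the spec allows.

    Turning by angle theta over a segment of length s approximates an arc of
    radius s / theta, so capping theta at s / R_min keeps the radius >= R_min.
    """
    max_turn = segment_mm / spec.min_bend_radius_mm
    x, y, heading = 0.0, 0.0, 0.0
    points = [(x, y)]
    for _ in range(int(length_mm // segment_mm)):
        heading += rng.uniform(-max_turn, max_turn)
        x += segment_mm * math.cos(heading)
        y += segment_mm * math.sin(heading)
        points.append((x, y))
    return points


spec = CableSpec("HYPO-123", outer_diameter_mm=6.0, min_bend_radius_mm=48.0,
                 jacket_colors=("black", "grey"))
rng = random.Random(7)
variants = [
    {"color": rng.choice(spec.jacket_colors),
     "centerline": cable_centerline(spec, length_mm=500.0, rng=rng)}
    for _ in range(5)
]
print(len(variants), len(variants[0]["centerline"]))
```

Each 500 mm cable is split into 50 segments, giving 51 centerline points; a renderer would then sweep a circle of `outer_diameter_mm` along that path to build the mesh.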

Conclusion

As physical AI systems continue to move from controlled labs into the complexity of the real world, the need for vast, diverse, and accurate training data has never been greater. Physically based synthetic worlds, driven by open-world foundation models and high-fidelity simulation platforms like Omniverse, offer a powerful solution to this challenge. They allow developers to safely explore edge cases, scale data generation far beyond what real-world collection allows, and accelerate the validation of robots and autonomous machines destined for dynamic, unpredictable environments.

The examples from industry leaders show that this shift is already well underway. Synthetic data is strengthening robotics policies, improving perception systems, and drastically reducing the gap between simulation and real-world performance. As tools like Cosmos, Isaac Sim, and OpenUSD-driven pipelines mature, the creation of rich virtual worlds will become as essential to physical AI development as datasets and GPUs have been for digital AI.

In many ways, we are witnessing the emergence of a new engineering paradigm, one in which intelligent machines first learn in virtual environments grounded in real physics and only then step confidently into the physical world. Omniverse is not just a place to simulate; it is becoming the training ground for the next generation of autonomous systems.

The post Open World Foundation Models Generate Synthetic Worlds for Physical AI Development appeared first on ELE Times.

Ascent Solar closes up to $5.5m private placement

The Space Journey of Odesa Native Georgy Dobrovolsky

Our newspaper has already reported on how this year's World Space Week was celebrated at the Borys Paton State Polytechnic Museum. The program included a lecture dedicated to the memory of Ukrainian-born cosmonaut Georgy Dobrovolsky, a pioneer of long-duration crewed spaceflight and commander of the crew of the Soyuz-11 spacecraft.

How Well Will the Automotive Industry Adopt the Use of AI for its Manufacturing Process

Gartner believes that by 2029, only 5% of automakers will maintain strong AI investment growth, a decline from over 95% today.

“The automotive sector is currently experiencing a period of AI euphoria, where many companies want to achieve disruptive value even before building strong AI foundations,” said Pedro Pacheco, VP Analyst at Gartner. “This euphoria will eventually turn into disappointment as these organizations are not able to achieve the ambitious goals they set for AI.”

Gartner predicts that only a handful of automotive companies will sustain ambitious AI initiatives beyond the next five years. Organizations with strong software foundations, tech-savvy leadership, and a consistent, long-term focus on AI will pull ahead of the rest, creating a competitive AI divide.

“Software and data are the cornerstones of AI,” said Pacheco. “Companies with advanced maturity in these areas have a natural head start. In addition, automotive companies led by execs with strong tech know-how are more likely to make AI their top priority instead of sticking to the traditional priorities of an automotive company.”

Fully-Automated Vehicle Assembly Predicted by 2030

The automotive industry is also heading for radical operational efficiency. As automakers rapidly integrate advanced robotics into their assembly lines, Gartner predicts that by 2030, at least one automaker will achieve fully automated vehicle assembly, marking a historic shift in the automotive sector.

“The race toward full automation is accelerating, with nearly half of the world’s top automakers (12 out of 25) already piloting advanced robotics in their factories,” said Marco Sandrone, VP Analyst at Gartner. “Automated vehicle assembly helps automakers reduce labor costs, improve quality, and shorten production cycle times. For consumers, this means better vehicles at potentially lower prices.”

While automated assembly may reduce the direct need for human labor, new roles in AI oversight, robotics maintenance, and software development could offset job losses if reskilling programs are prioritized.

The post How Well Will the Automotive Industry Adopt the Use of AI for its Manufacturing Process appeared first on ELE Times.

Electronics manufacturing and exports grow manifold in the last 11 years

Central government-led schemes, including the Production Linked Incentive (PLI) scheme for large-scale electronics manufacturing (LSEM) and the PLI scheme for IT hardware, have boosted both manufacturing and exports in the broader electronics category and in the mobile phone segment.

Mobile phone manufacturing in India has risen sharply. In the last 11 years, the number of mobile manufacturing units has increased from 2 to more than 300. Since the launch of the PLI scheme for LSEM, mobile manufacturing has grown from ₹2.2 lakh crore in 2020-21 to ₹5.5 lakh crore.

Minister of State for Electronics and Information Technology Jitin Prasada, replying to a question, informed the Rajya Sabha on Friday that as a result of policy efforts, electronics manufacturing has grown almost six times in the last 11 years, from ₹1.9 lakh crore in 2014-15 to ₹11.32 lakh crore in 2024-25.
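The headline figures can be checked with a little arithmetic. The sketch below computes the growth multiple and the compound annual growth rate (CAGR); treating 2014-15 to 2024-25 as ten annual steps is an assumption about how the period is counted, and the helper names are illustrative.

```python
def growth_multiple(start, end):
    """How many times larger the end value is than the start value."""
    return end / start


def cagr(start, end, years):
    """Compound annual growth rate between two values `years` apart."""
    return (end / start) ** (1.0 / years) - 1.0


# Electronics production in lakh crore rupees, as quoted in the text.
start_2014_15, end_2024_25 = 1.9, 11.32
years = 10  # assumption: 2014-15 to 2024-25 counted as ten annual steps

print(f"multiple: {growth_multiple(start_2014_15, end_2024_25):.2f}x")
print(f"CAGR:     {cagr(start_2014_15, end_2024_25, years):.1%}")
print(f"mobile multiple: {growth_multiple(2.2, 5.5):.1f}x")
```

This gives a multiple of about 5.96x, consistent with "almost six times", and a CAGR of roughly 19.5% a year. The end year for the mobile figures is not stated, so only their multiple (2.5x) can be computed, not a growth rate.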

The booming industry has generated employment for approximately 25 lakh (2.5 million) people, and electronics exports have grown eightfold since 2014-15.

According to information submitted in the Rajya Sabha by Union Minister for Electronics and Information Technology Shri Ashwini Vaishnaw, the Government is providing the latest design tools to 394 universities and start-ups to encourage India’s young engineers. Using these tools, chip designers from more than 46 universities have designed chips and fabricated them at Semiconductor Labs, Mohali.

All major semiconductor design companies have also set up design centers in India. The most advanced chips, such as 2 nm chips, are now being designed in India by Indian designers.

The post Electronics manufacturing and exports grow manifold in the last 11 years appeared first on ELE Times.