Feed aggregator

The Schiit Modi Multibit: A little wiggling ensures this DAC won’t quit

Sometimes, when an audio component dies, the root cause is something electrical. Other times, the issue instead ends up being fundamentally mechanical.

Delta-sigma modulation-based digital-to-analog conversion (DAC) circuitry dominates the modern high-volume audio market by virtue of its ability (among other factors) to harness the high sample rate potential of modern fast-switching and otherwise enhanced semiconductor processes. Quoting from Wikipedia’s introduction to the as-usual informative entry on the topic (which, as you’ll soon see, also encompasses analog-to-digital converters, i.e., ADCs):

Delta-sigma modulation is an oversampling method for encoding signals into low bit depth digital signals at a very high sample-frequency as part of the process of delta-sigma analog-to-digital converters (ADCs) and digital-to-analog converters (DACs). Delta-sigma modulation achieves high quality by utilizing a negative feedback loop during quantization to the lower bit depth that continuously corrects quantization errors and moves quantization noise to higher frequencies well above the original signal’s bandwidth. Subsequent low-pass filtering for demodulation easily removes this high frequency noise and time averages to achieve high accuracy in amplitude, which can be ultimately encoded as pulse-code modulation (PCM).

Both ADCs and DACs can employ delta-sigma modulation. A delta-sigma ADC encodes an analog signal using high-frequency delta-sigma modulation and then applies a digital filter to demodulate it to a high-bit digital output at a lower sampling-frequency. A delta-sigma DAC encodes a high-resolution digital input signal into a lower-resolution but higher sample-frequency signal that may then be mapped to voltages and smoothed with an analog filter for demodulation. In both cases, the temporary use of a low bit depth signal at a higher sampling frequency simplifies circuit design and takes advantage of the efficiency and high accuracy in time of digital electronics.

Primarily because of its cost efficiency and reduced circuit complexity, this technique has found increasing use in modern electronic components such as DACs, ADCs, frequency synthesizers, switched-mode power supplies and motor controllers. The coarsely-quantized output of a delta-sigma ADC is occasionally used directly in signal processing or as a representation for signal storage (e.g., Super Audio CD stores the raw output of a 1-bit delta-sigma modulator).
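To make the quoted description concrete, here is a minimal first-order, 1-bit delta-sigma modulator in Python. It is an illustrative sketch, not how any particular DAC chip is built: real converters use higher-order loops and proper decimation filters, and the block-averaging function `boxcar_decimate` (a name chosen here) is a deliberately crude stand-in for the low-pass filter. The integrator plus negative feedback is what pushes the quantization error out of band, so averaging the coarse ±1 stream recovers the fine-grained input level.

```python
def delta_sigma_1bit(samples):
    """First-order, 1-bit delta-sigma modulator.

    `samples` are floats in [-1.0, 1.0]; returns a list of +1/-1 output bits.
    """
    integrator = 0.0
    feedback = 0.0
    bits = []
    for x in samples:
        integrator += x - feedback          # integrate the error between input and last output
        bit = 1 if integrator >= 0 else -1  # 1-bit quantizer
        bits.append(bit)
        feedback = float(bit)               # negative feedback of the quantized value
    return bits


def boxcar_decimate(bits, factor):
    """Crude low-pass filter plus decimation: average each block of `factor` bits."""
    return [sum(bits[i:i + factor]) / factor
            for i in range(0, len(bits) - factor + 1, factor)]


# A constant input of 0.25, oversampled 64x, decimated back to 4 output samples.
bits = delta_sigma_1bit([0.25] * 256)
print(boxcar_decimate(bits, 64))  # [0.25, 0.25, 0.25, 0.25]
```

Each output bit on its own is a poor approximation of 0.25, but the bit pattern's density encodes the value exactly once enough bits are averaged.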

Oversampled interpolation vs quantization noise shaping

That said, plenty of audio purists object to the inherent interpolation involved in the delta-sigma oversampling process. Take, for example, this excerpt from the press release announcing Schiit’s $249 first-generation Modi Multibit DAC, today’s teardown patient, back in mid-2016:

Multibit DACs differ from the vast majority of DACs in that they use true 16-20 bit D/A converters [editor note: also known as resistor ladder, specifically R-2R, D/A converters] that can reproduce the exact level of every digital audio sample. Most DACs use inexpensive delta-sigma technology with a bit depth of only 1-5 bits to approximate the level of every digital audio sample, based on the values of the samples that precede and follow it.

Here’s more on the Modi Multibit 1 bill of materials, from the manufacturer:

Modi Multibit is built on Schiit’s proprietary multibit DAC architecture, featuring Schiit’s unique closed-form digital filter on an Analog Devices SHARC DSP processor. For D/A conversion, it uses a medical/military grade, true multibit converter specified down to 1/2LSB linearity, the Analog Devices AD5547CRUZ.
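To show why ladder linearity specs like “1/2LSB” are demanding, the sketch below models a binary-weighted (R-2R-style) DAC with a single hypothetical error source: a fractional error on the MSB weight only. It interprets “linearity” as differential nonlinearity (DNL) at the midscale major carry, purely for illustration; the AD5547 data sheet defines its own INL/DNL specifications, and real ladders have errors in every leg. The function names are invented for this example.

```python
def ladder_output_lsb(code, n_bits, msb_error=0.0):
    """Output of a binary-weighted (e.g. R-2R) DAC in units of the ideal LSB.

    msb_error is a hypothetical fractional error on the MSB weight only;
    all other bit weights are assumed perfect.
    """
    total = 0.0
    for bit in range(n_bits):
        if code & (1 << bit):
            weight = float(1 << bit)
            if bit == n_bits - 1:
                weight *= 1.0 + msb_error
            total += weight
    return total


def major_carry_dnl(n_bits, msb_error):
    """DNL in LSBs of the midscale step 0111...1 -> 1000...0 (the major carry)."""
    below = (1 << (n_bits - 1)) - 1
    above = 1 << (n_bits - 1)
    step = ladder_output_lsb(above, n_bits, msb_error) - ladder_output_lsb(below, n_bits, msb_error)
    return step - 1.0


def max_msb_error(n_bits, dnl_limit_lsb=0.5):
    """Largest MSB weight error keeping the major-carry DNL within the limit."""
    return dnl_limit_lsb / (1 << (n_bits - 1))


print(max_msb_error(16) * 1e6)                 # about 15.3 ppm for a 16-bit ladder
print(major_carry_dnl(16, max_msb_error(16)))  # 0.5
```

In this simplified model the midscale step grows by `msb_error * 2**(n_bits - 1)` LSBs, so a 16-bit ladder must hold its MSB weight to roughly 15 parts per million to stay within half an LSB.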

Then again, plenty of other audio purists object to the seemingly inferior lab test results of multibit DACs versus delta-sigma alternatives (including those from Schiit itself), particularly given the notably higher prices of multibit offerings. Those same detractors, exemplifying one end of the “objectivist” vs “subjectivist” opinion spectrum, would likely find it appropriate that in the “Schiit stack” whose photo I first shared a few months ago (and which I’ll discuss in detail in another post to come shortly):

I coupled a first-generation Modi Multibit (bottom) with a Vali 2++ tube-based headphone amplifier (top), both Schiit devices delivering either “enhanced musicality” (if you’re a subjectivist) or “desultory distortion” (for objectivists). For what it’s worth, I don’t consistently align with either camp; I was just curious to audition the gear and compare the results against more traditional delta-sigma DAC and discrete- or op amp-based amplifier alternatives!

A sideways wiggle did the trick

That said, I almost didn’t succeed in getting the Modi Multibit into the setup at all. My wife had bought it for me off eBay as a Valentine’s Day gift in (claimed gently) used condition back in late January; it took me a few more months to get around to pressing it into service. Cosmetically, it indeed looked nearly brand new. But when I flipped the backside power switch…nothing. This in spite of the fact that the AC transformer feeding the device still seemed to be functioning fine:

The Modi Multibit was beyond the return-for-refund point, and although the seller told me it had been working fine when originally shipped to us, I resigned myself to the seemingly likely reality that it’d end up being nothing more than a teardown candidate. But after disassembling it, I found nothing scorched or otherwise obviously fried inside. So I snapped a bunch of dissection photos, put it back together, and reached out to the manufacturer to see whether it was still repairable out of warranty (it was). Then, on a hunch, I plugged it back into the AC transformer and wiggled the power switch back and forth sideways. Bingo; it fired right up! I’m now leaving the power switch permanently in the “on” position and controlling AC power to it and the other devices in the setup via a separately switchable multi-plug mini-strip:

What follows are the photos I’d snapped when I originally took the Modi Multibit apart, starting with some outside-chassis shots and as-usual accompanied by a 0.75″ (19.1 mm) diameter U.S. penny for size comparison purposes. Front first; that round button, when pressed, transitions between the USB, optical S/PDIF, and RCA (“coaxial”) S/PDIF inputs you’ll see shortly, selectively illuminating the associated front panel LED-of-three to the right of the button at the same time:

Left side:

Back, left-to-right are the:

- Unbalanced right and left channel analog audio outputs

- RCA (“coaxial”) digital S/PDIF input

- Optical digital S/PDIF input

- USB digital input

- The aforementioned flaky power switch, and

- 16V AC input

Before continuing, a few words about the “wall wart”. It’s not, if you haven’t yet caught this nuance, a typical full AC-to-DC converter. Instead, it steps down the premises AC voltage to either 16V (which is actually, you may have noticed from the earlier multimeter photo, more like 20V unloaded) for the Modi Multibit or 24V for the Vali 2++, with the remainder of the power supply circuitry located within the audio component itself:

Fortunately, since the 16V and 24V transformer output plugs are dissimilar, there’s no chance you’ll inadvertently blow up the Modi Multibit by plugging the Vali 2++ wall wart into it!

Onward, right side:

Bottom:

And last but not least, the top, including the distinctive “Multibit” logo, perhaps obviously not also found on delta-sigma-implementing Schiit DACs:

Let’s start here, with those four screw heads you see, one in each corner:

With them removed, the aluminum top piece pops right off:

Next up, the two screw heads on the back panel:

And finally, the three at the steel bottom plate:

At this point, the PCB slides out, although you need to be a bit careful in doing so to prevent the steel frame’s top bracket from colliding with tall components along the PCB’s left edge:

Here’s a close-up of the PCB topside’s left half:

AC-to-DC conversion circuitry dominates the far left portion of the PCB. The large IC at the center is C-Media Electronics’ CM6631A USB 2.0 high-speed true HD audio processor. Below it is the associated firmware chip, with an “MD218” sticker on top. The original firmware, absent the sticker, had a minor (and effectively inaudible, but you know how picky audiophiles can be) MSB zero-crossing glitch artifact that Schiit subsequently fixed, also sending replacement firmware chips to existing device owners (or alternatively updating them in-house for non-DIYers).

And here’s the PCB topside’s right half:

Now for the other side:

In the bottom left quadrant are two On Semiconductor MC74HC595A 8-bit serial-input/serial- or parallel-output shift registers with latched 3-state outputs. Above them is the aforementioned “resistor ladder DAC”, Analog Devices’ AD5547. Above it and to either side is a pair of Analog Devices AD8512A dual precision JFET amplifiers. And above them is STMicroelectronics’ TL082ID dual op amp.

Shift your eyes to the right, and you’ll not be able to miss the largest IC on this side of the PCB. It’s the Analog Devices ADSP-21478 SHARC DSP, also called out previously in Schiit’s press release. Above it is an AKM Semiconductor AK4113 six-channel 24-bit stereo digital audio receiver chip for the Modi Multibit’s two S/PDIF inputs. And on the other side…

is an SST (now Microchip Technology) 39LF010 1Mbit parallel flash memory, presumably housing the SHARC DSP firmware.

Wrapping up, here are some horizontal perspectives of the front, back, and left-and-right sides:

And that’s “all” I’ve got for you today! In the future, I plan to compare the first-generation Modi Multibit against its second-generation successor, which switches to a Schiit-developed USB interface, branded as Unison and based on a Microchip Technology controller, and also includes a NOS (non-oversampling) mode option, along with stacking it up against several of Schiit’s delta-sigma DAC counterparts. Until then, let me know your thoughts in the comments!

—Brian Dipert is the Principal at Sierra Media and a former technical editor at EDN Magazine, where he still regularly contributes as a freelancer.

Related Content

- Class D: Audio amplifier ascendancy

- Audio amplifiers: How much power (and at what tradeoffs) is really required?

- A holiday shopping guide for engineers: 2025 edition

- Audio Amplifiers from Class A, B, D to T

The post The Schiit Modi Multibit: A little wiggling ensures this DAC won’t quit appeared first on EDN.

Building automotive data logging with F-RAM flash combo

Advances in the automotive industry continue to make cars safer, more efficient, and more reliable than ever. As motor vehicles become more advanced, so do the silicon components that form the backbone of their advanced features. Case in point: data logging systems are increasingly required and increasingly prevalent.

In particular, the event data recorder (EDR) and the data storage system for autonomous driving (DSSAD) have attracted significant attention due to recent legislation worldwide. While both systems provide safe and reliable storage of driving data, there are a few key distinctions (Table 1).

Table 1 Here is a comparison between EDR and DSSAD data loggers. Source: Infineon

As regulations governing automotive data logging evolve, so do the specifications for the memory that stores the data. For instance, in the United States, these storage requirements were recently revised to “extend the EDR recording period for timed data metrics from 5 seconds of pre-crash data at a frequency of 2 Hz to 20 seconds of pre-crash data at a frequency of 10 Hz”. The new requirements take effect on September 1, 2027, for most manufacturers, with a few exceptions for altered vehicles and small-volume production lines.
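A quick worked check of what the revised U.S. requirement means per timed data metric, using only the figures in the quoted rule (the helper name is chosen for this sketch). The per-metric sample count grows twentyfold before any additional data elements are considered:

```python
def samples_per_metric(window_s, rate_hz):
    """Samples one timed EDR data element contributes to a pre-crash record."""
    return round(window_s * rate_hz)


old = samples_per_metric(5, 2)    # previous: 5 s of pre-crash data at 2 Hz
new = samples_per_metric(20, 10)  # revised: 20 s of pre-crash data at 10 Hz
print(old, new, new // old)       # 10 200 20
```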

Regulations such as these are not unique to the United States but are echoed worldwide. The United Nations Economic Commission for Europe (UNECE) has recently sought to standardize automotive data logging requirements across its member countries with key pieces of regulation. These regulations provide guidelines for EDR in passenger vehicles and heavy-duty vehicles, and for DSSAD as it pertains to Automated Lane Keeping Systems (ALKS). As these regulations grow and are adopted, the demand for suitable hardware storage becomes paramount in the automotive industry.

Data storage requirements

The National Highway Traffic Safety Administration (NHTSA) in the United States gives insight into existing EDR solutions, describing them as having “a random-access memory (RAM) buffer the size of one EDR record to locally store data before the data is written to memory. The data is typically stored using electrically erasable programmable read-only memory (EEPROM) or data flash memory”.

This document also provides an overview of concerns and industry feedback regarding the requirement changes. The feedback indicated that “while the proposed 20 seconds of pre-crash data could be recorded by EDRs, some EDRs may require significant hardware and software changes to meet these demands”.

On the other hand, DSSAD requires storage of all events over a set period. While the previously referenced NHTSA document applies only to EDR, a similar solution could fulfill these requirements by buffering incoming signals before transferring them to long-term storage in a non-volatile memory.

Given the strain on current systems from growth in requirements, the quest for an optimized solution becomes pertinent. All things considered, the ideal system must provide power-loss robustness for buffered data and enough space for long-term storage. With these requirements in mind, this article will discuss how using F-RAM and NOR flash together meets the modern challenges of data logging.

Flash F-RAM combo

Ferroelectric random-access memory (F-RAM) stores information in a ferroelectric capacitor. The dipoles within this material, oriented by the direction of the applied electric field, maintain their orientation after power is removed. This type of memory is characterized by fast write speeds and high endurance (~10^14 cycles).

These characteristics give F-RAM a unique advantage over other non-volatile memory technologies. However, densities for F-RAM are low, ranging from a few kilobits to tens of megabits, limiting its scope for high density applications.

NOR flash is another type of memory, which uses a MOSFET to store electric charge within a non-metallic region of the transistor’s gate. Its operation is typically more complex than that of F-RAM (for instance, it requires an erase operation), and it may offer additional features such as password protection or a one-time programmable secure silicon region. NOR flash offers fine-grained random access for reads but requires coarse-grained access for writes.

Multiple bits must be erased simultaneously and then programmed in order to “write” to the device. Thus, device operations are generally slower than on F-RAM, and endurance is comparatively limited (~10^6 cycles). However, NOR flash holds the advantage of a larger storage size, with densities up to a few gigabits.
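The erase-then-program constraint can be illustrated with a toy model. This is a simplified sketch, not a device driver or vendor API: `NorSector` and its methods are hypothetical, and it ignores page sizes, timing, and command sequences. It captures the key rule of NOR flash, that programming can only clear bits (1 to 0), so any write needing a 0-to-1 change forces a whole-sector erase that costs one endurance cycle. An F-RAM write, by contrast, would simply overwrite the bytes in place.

```python
class NorSector:
    """Toy model of one NOR flash sector (illustrative only, not a device API).

    Erasing sets every byte to 0xFF; programming can only clear bits (1 -> 0),
    so changing any bit from 0 back to 1 requires erasing the whole sector.
    """

    def __init__(self, size):
        self.data = bytearray(b"\xff" * size)
        self.erase_count = 0  # each erase consumes one endurance cycle

    def erase(self):
        self.data[:] = b"\xff" * len(self.data)
        self.erase_count += 1

    def program(self, offset, payload):
        for i, value in enumerate(payload):
            self.data[offset + i] &= value  # bits can only go from 1 to 0

    def write(self, offset, payload):
        """Read-modify-write: erase only if some bit must go from 0 to 1."""
        needs_erase = any((self.data[offset + i] & value) != value
                          for i, value in enumerate(payload))
        if needs_erase:
            merged = bytearray(self.data)                 # read back the whole sector
            merged[offset:offset + len(payload)] = payload
            self.erase()                                  # slow, wear-inducing step
            self.program(0, merged)                       # reprogram everything
        else:
            self.program(offset, payload)


s = NorSector(4096)
s.write(0, b"\x0f")   # 0xFF -> 0x0F: program only, no erase
s.write(0, b"\xf0")   # needs 0 -> 1 bits: forces a sector erase
print(s.erase_count)  # 1
```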

This article will show how F-RAM + NOR flash compares to RAM + NOR flash as a solution for EDR and DSSAD. For this analysis, data was logged continuously in a ring buffer within the front-end device. When an EDR event was triggered, or when the buffer filled in the DSSAD case, the information was transferred to the back-end device for long-term storage (Figure 1).

Figure 1 The block diagram shows front-end and back-end storage in a logging operation. Source: Infineon
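The front-end/back-end flow described above can be sketched in a few lines of Python. This is a minimal model under stated assumptions: packets are opaque objects, capacity is counted in packets, a `deque` stands in for the front-end F-RAM (or RAM) array, and a plain list stands in for the NOR back end. All names are hypothetical. For brevity, the EDR trigger copies the window immediately; a real EDR would keep logging the post-crash interval before committing.

```python
from collections import deque


class FrontEndRing:
    """Front-end ring buffer holding only the most recent `capacity` packets."""

    def __init__(self, capacity):
        self.buf = deque(maxlen=capacity)  # oldest packet is dropped automatically

    def log(self, packet):
        self.buf.append(packet)

    def is_full(self):
        return len(self.buf) == self.buf.maxlen

    def drain(self):
        """Return the buffered packets, oldest first, and empty the buffer."""
        packets = list(self.buf)
        self.buf.clear()
        return packets


def edr_step(front, back_end, packet, event_triggered):
    """EDR: log continuously; copy the window to the back end only on an event."""
    front.log(packet)
    if event_triggered:
        back_end.append(list(front.buf))


def dssad_step(front, back_end, packet):
    """DSSAD: migrate the whole buffer to the back end every time it fills."""
    front.log(packet)
    if front.is_full():
        back_end.append(front.drain())


edr_front, edr_store = FrontEndRing(300), []
for t in range(500):
    edr_step(edr_front, edr_store, t, event_triggered=(t == 499))
# edr_store[0] holds packets 200..499: only the latest window survives

dssad_front, dssad_store = FrontEndRing(4), []
for t in range(10):
    dssad_step(dssad_front, dssad_store, t)
# dssad_store == [[0, 1, 2, 3], [4, 5, 6, 7]]; packets 8 and 9 are still buffered
```

The difference in write patterns matters later: the EDR front end is overwritten continuously, while the back end is written only on events (EDR) or once per filled buffer (DSSAD).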

To evaluate performance, Table 2 below uses requirements from both EDR and DSSAD for the comparison. These specifications for EDR and DSSAD were based on the UN regulations for heavy duty vehicles and for ALKS DSSAD requirements, respectively. To discuss how these systems work, it’s important to review the specifications from these documents and highlight the assumptions made by the comparison.

Table 2 The performance comparison between EDR and DSSAD is conducted across multiple technologies. Source: Infineon

For logging systems that use EDR, data elements are required to be logged continuously and transferred to long term storage solely in the case of an event, such as a car accident. A ring buffer in the front-end device accomplishes this effectively. To determine the size of this buffer, the expected EDR data rate is needed alongside the storage time requirements.

The required data elements were taken from the legislation and used in the calculation, along with the relevant time interval (commonly 20 seconds of pre-crash data and 10 seconds of post-crash data) and logging frequency (4 Hz, 10 Hz, or a single instance). One important assumption was a fixed EDR data packet size of 12 bytes.

The UNECE document has no requirement for using a set number of bytes for storage or for storing any information outside of the parameter data. This comparison assumes an 8-byte time stamp of metadata will be included alongside an estimated 3 bytes for parameter data and 1 byte for parameter identification. Using the previous calculations and assumptions, the expected buffer size was calculated to be 790 Kbits.

In the case of the event, the entire buffer would be transferred to the back-end storage. It is required that “the EDR non-volatile memory buffer shall accommodate the data related to at least five different events”. Thus, five events worth of storage were allocated, resulting in a back-end EDR buffer size of 3.95 Mbits.
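The sizing method above can be sketched as follows, assuming the article’s 12-byte packet (8-byte time stamp, 3 bytes of parameter data, 1-byte parameter ID). The article does not list the data elements behind its 790 Kbits, so the element mix below is invented purely for illustration and does not reproduce that figure; the five-event back-end multiplication, however, does reproduce the quoted 3.95 Mbits.

```python
PACKET_BYTES = 8 + 3 + 1  # time stamp + parameter data + parameter ID (article's assumptions)


def element_packets(rate_hz, window_s):
    """Packets one data element contributes; rate_hz=None means a single instance."""
    return 1 if rate_hz is None else round(rate_hz * window_s)


def edr_front_end_bits(elements):
    """Ring-buffer size in bits for a list of (rate_hz, window_s) data elements."""
    packets = sum(element_packets(rate, window) for rate, window in elements)
    return packets * PACKET_BYTES * 8


def edr_back_end_bits(front_end_bits, events=5):
    """Back-end storage for `events` complete buffer transfers (at least five required)."""
    return events * front_end_bits


# Hypothetical element mix, for illustration only:
elements = ([(10, 20)] * 20      # 20 elements at 10 Hz over 20 s pre-crash
            + [(4, 20)] * 10     # 10 elements at 4 Hz over 20 s pre-crash
            + [(10, 10)] * 5     # 5 elements at 10 Hz over 10 s post-crash
            + [(None, 0)] * 10)  # 10 single-instance elements
print(edr_front_end_bits(elements))  # 509760
print(edr_back_end_bits(790_000))    # 3950000, i.e. the article's 3.95 Mbits
```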

On the other hand, DSSAD requires all data elements to be stored rather than a set amount of data within an event window. Therefore, a relatively small front-end buffer can be used, with its contents migrated to the back-end device once it fills. It was assumed that the buffer must be large enough to hold all events arriving while a NOR sector erase is in progress, since the erase must complete before data can be transferred to NOR flash.

For this analysis, the maximum DSSAD rate is assumed to be 10 events/second. Furthermore, each packet was estimated to be a fixed size of 25 bytes. This would include the time and date stamp (estimated as 8 bytes) and parameter data (estimated as 1 byte) which are required in the regulation, alongside the GPS coordinates (estimated as 16 bytes), which are not required but are included as metadata in this analysis. This results in a front-end DSSAD buffer size of 5.3 Kbits.

Meanwhile, for the back-end device, six months of DSSAD data storage was assumed. The size of the back-end buffer is the average expected DSSAD rate multiplied by the six-month period and the packet size. Using an estimated DSSAD rate of 4 events/minute and the fixed 25-byte packet size, the back-end buffer was calculated to be 210 Mbits.
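The DSSAD figures can be checked with a short calculation using the article’s 25-byte packet. The back-end result reproduces the quoted 210 Mbits, assuming six months is taken as 182.5 days. The article does not spell out how the 5.3 Kbits front-end figure was derived; one reading, assumed here, is the peak rate of 10 events/second sustained over the 2.68-second erase time quoted later for the 256-Mb SEMPER device, which yields 5,360 bits, close to the stated value.

```python
PACKET_BYTES = 8 + 1 + 16  # time/date stamp + parameter data + GPS metadata = 25 bytes


def front_end_bits(peak_events_per_s, erase_time_s):
    """Buffer that absorbs peak-rate events while a NOR sector erase completes."""
    return peak_events_per_s * erase_time_s * PACKET_BYTES * 8


def back_end_bits(avg_events_per_min, days):
    """Long-term storage for `days` of events at the average rate."""
    return avg_events_per_min * 60 * 24 * days * PACKET_BYTES * 8


print(front_end_bits(10, 2.68))    # about 5360 bits, close to the quoted 5.3 Kbits
print(back_end_bits(4, 365 / 2))   # 210240000.0 bits, i.e. about 210 Mbits
```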

Memory endurance characteristics

The sum of the buffer sizes determined the required densities for each of the components in Table 2. For this analysis, the F-RAM and NOR flash endurance characteristics were represented by Infineon’s SEMPER NOR flash and EXCELON F-RAM devices. Endurance was assumed to be infinite for the front-end SRAM device, but data packets are considered lost during a power failure because SRAM is volatile.

This comparison model attempted to find the smallest density that could meet a life expectancy endurance requirement of 20 years. The results of this comparison are shown in Table 2.

As shown in the table, the key advantage of the F-RAM + NOR flash solution appears in a power-loss situation. In the worst case, buffering data in volatile RAM without a backup battery would result in the loss of significant vehicle data during a crash.

In this case, if an erase is required at the start of an event, the system could lose all 20 seconds of pre-crash data plus the 2.68 seconds it takes to perform an erase on a 256-Mb SEMPER NOR device, if power is lost just as the erase completes. The corresponding data loss is calculated from the assumed EDR and DSSAD data rates over this time.

Despite the high rate of cycling through the ring buffer, the EXCELON F-RAM was able to meet the endurance requirements and match the life expectancy of the RAM + SEMPER NOR flash solution.

As for other potential front-end solutions, using other non-volatile technologies such as EEPROM or RRAM for the front end would likely require higher densities because of their lower endurance compared to F-RAM. Furthermore, the fast write time of EXCELON F-RAM ensures that data packets sent to the front-end device immediately before a power loss are stored properly.
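The endurance argument can be sketched numerically under simplifying assumptions: continuous logging at the article’s peak DSSAD rate (10 events/s × 25 bytes = 2,000 bit/s), a 20-year lifetime, and perfectly even wear across cells. The 10^6-cycle figure used for comparison is an assumed, roughly EEPROM-class endurance chosen for illustration, not a value from the article or a specific data sheet; the function names are hypothetical.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def total_bits_written(write_rate_bps, lifetime_years):
    return write_rate_bps * lifetime_years * SECONDS_PER_YEAR


def min_density_for_endurance(write_rate_bps, lifetime_years, endurance_cycles):
    """Smallest array (bits) whose cells stay within their endurance rating,
    assuming writes are spread perfectly evenly over every cell."""
    return total_bits_written(write_rate_bps, lifetime_years) / endurance_cycles


def ring_cycles_per_cell(write_rate_bps, buffer_bits, lifetime_years):
    """Overwrites each location sees when a ring buffer wraps continuously."""
    return total_bits_written(write_rate_bps, lifetime_years) / buffer_bits


rate = 10 * 25 * 8  # peak DSSAD rate from the article: 2000 bit/s
print(ring_cycles_per_cell(rate, 5360, 20))        # ~2.4e8 cycles, far below ~1e14
print(min_density_for_endurance(rate, 20, 1e14))   # well under 1 bit: not the limiting factor
print(min_density_for_endurance(rate, 20, 1e6))    # 1262304.0 bits for a 1e6-cycle memory
```

Under these assumptions, F-RAM’s density is set by the buffer size the application needs, whereas a 10^6-cycle memory would need roughly 1.26 Mbits just to spread the wear, which is the effect the paragraph above describes.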

Why memory matters in data logging

Given the growth of EDR and DSSAD and the legal implications associated with these systems, reliable data storage is paramount, and legislative requirements reflect this. For instance, the regulations call for “adequate protection against manipulation like data erasure of stored data such as anti-tampering design”. While there are different ways to secure logged data on a system, a simple and robust method is to enforce protection in hardware.

The future of autonomous driving depends on logging for legal records, safety, and cutting-edge features. As systems become more complex, memory technologies are frequently challenged for performance, requiring creative solutions to satisfy the requirements.

Kyle Holub is an applications engineer at Infineon Technologies.

Related Content

- FRAM MCUs For Dummies

- Cypress Sees a Future for FRAM

- 7 Ways a Data Logger Can Save You Money

- Why FRAMs suit data logging in EDR for airbags, ADAS

- Why FRAM memories are suitable for data logging in ADAS designs

The post Building automotive data logging with F-RAM flash combo appeared first on EDN.

Daniil Potomakhin, a student at the Institute of Telecommunication Systems, is European powerlifting vice-champion

Recently, Daniil Potomakhin, a student at the Educational and Research Institute of Telecommunication Systems (ITS) of Igor Sikorsky Kyiv Polytechnic Institute, who represented Ukraine as a member of the national team at the European Powerlifting Championship, won a silver medal and became the continent’s vice-champion. The championship was held in Valletta, Malta, with athletes from 15 countries taking part. Daniil told a correspondent of "Kyivskyi Politekhnik" how the competition went and about his path to success:

CEA-Leti and ST demo path to fully monolithic silicon RF front-ends with 3D sequential integration

Imec presents record WSe2-based 2D-pFETs for extending logic technology roadmap

NTT reports first RF operation of AlGaN transistors with Al-content over 0.75

CHIPX to establish 8-inch GaN-on-SiC wafer fab in Malaysia

What Are Memory Chips—and Why They Could Drive TV Prices Higher From 2026

As the rupee continues to depreciate, crossing the symbolic threshold of 90, India’s electronics manufacturing industry is set to take a blow. India imports nearly 70 percent of the components used in TVs, from open-cell panels and glass substrates to memory chips, so continued increases in memory chip prices, combined with a rupee weaker than 90, are set to raise TV prices by around 3-4 percent by January 2026. The situation is further aggravated by rising demand for High-Bandwidth Memory (HBM) for AI servers, which is driving a global chip shortage.

Chipmakers are also focusing largely on high-margin AI chips, reducing the supply of chips for legacy devices like TVs. Like any high-end device, smart TVs depend heavily on memory chips for purposes ranging from storing preferences to delivering services.

What are Memory Chips?

Memory chips are integrated circuits that, in TVs, store firmware, settings, apps, and user data. They primarily use Electrically Erasable Programmable Read-Only Memory (EEPROM) for basic adjustments (like picture settings) and modern eMMC flash memory (like that in smartphones) for the smart TV OS, apps, and video buffering. EEPROM retains data even when power is off, which makes it essential for storing configuration data and the system’s fundamental settings.

Why are they so important?

Although these chips store only small amounts of data, that data is crucial to a smart TV’s operation, so they act as the brains of the TV’s memory. Most importantly, they not only hold data but also ensure that the TV starts up correctly, and they store the TV’s operating system and software. In short, like any memory, they run smart features, remember preferences, and help display content smoothly.

7-10% Price Hike

According to media reports, Super Plastronics Pvt Ltd (SPPL)—a TV manufacturing company that holds licences for several global brands, including Thomson, Kodak and Blaupunkt—has said that memory chip prices have surged by nearly 500 per cent over the past three months. The company’s CEO, Avneet Singh Marwah, added that television prices could rise by 7–10 per cent from January, driven largely by the memory chip crunch and the impact of a depreciating rupee.

According to a recent Counterpoint Research report, India’s smart TV shipments fell 4 per cent year-on-year in Q2 2025, weighed down by saturation in the smaller-screen segment, a lack of fresh demand drivers, and subdued consumer spending.

The post What Are Memory Chips—and Why They Could Drive TV Prices Higher From 2026 appeared first on ELE Times.

Anritsu & HEAD Launch Acoustic Evaluation Solution for Next-Gen Automotive eCall Systems

ANRITSU CORPORATION and HEAD acoustics have jointly launched an advanced acoustic evaluation solution for next-generation automotive emergency call systems (“NG eCall”).

The new solution is compliant with ITU-T Recommendation P.1140, enabling precise assessment of voice communication quality between vehicle occupants and Public Safety Answering Points (PSAPs), supporting faster and more effective emergency response.

With NG eCall over 4G (LTE) and 5G (NR) now mandatory in Europe as of 1 January 2026, ensuring high-quality, low-latency voice communication during vehicle emergencies has become essential. After a collision, calls are conducted hands-free inside the vehicle cabin, where high noise levels, echoes, and other acoustic challenges can significantly degrade speech clarity. Reliable voice performance is therefore critical to accurately conveying the situation and enabling rapid rescue operations.

The solution integrates Anritsu’s MD8475B (for 4G LTE base-station simulation) or MT8000A (for both 4G LTE and 5G NR simulation) with HEAD acoustics’ ACQUA voice quality analysis platform. This combination enables comprehensive evaluation of transmitted (microphone) and received (speaker) audio under a wide range of realistic operating conditions.

Example Evaluation Scenarios

• Echo and double-talk situations where speaker output re-enters the microphone or simultaneous speech may affect intelligibility

• Cabin noise simulations representing real driving environments, including road, wind, and engine noise

By delivering a reliable and repeatable approach to voice-quality assessment, Anritsu reinforces its commitment to supporting automotive manufacturers and suppliers in the development of NG eCall and advanced in-vehicle audio systems, contributing to a safer and more secure mobility ecosystem.

The post Anritsu & HEAD Launch Acoustic Evaluation Solution for Next-Gen Automotive eCall Systems appeared first on ELE Times.

After I repaired my laptop, I had a handful of spare parts left over. I think the manufacturer simply kept them as a backup, just in case :)

submitted by /u/SpaceRuthie

Siren circuit I made

Last year at a social get-together, I got immensely bored and heard a fire truck siren in the distance. I began brainstorming ways to model the ramping-up and ramping-down of the Q-siren and came up with this simple VCO design with a large capacitor. Like the physical sirens, the circuit has a power button (to ramp up the frequency) and a brake button (to quickly reduce the frequency). A fun side effect of the way I designed the controls is that when both buttons are pressed, the steady-state frequency settles lower than it otherwise would, which mimics what would probably happen if you tried accelerating the turbine while the brake was engaged. (I have never heard this actually happen, but it’s a fun thought.) I’m sad that I’m not allowed to post a video on here, but if someone asks for one I’ll figure out a way to share it.
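The poster didn’t share a schematic, so the following is only a hypothetical model of how such controls could behave: the power button charges the VCO’s control capacitor through one resistor, the brake discharges it through a smaller one, and the VCO frequency is assumed proportional to the capacitor voltage. Every component value and the `simulate_siren` name are invented for illustration. With both buttons held, the two resistors form a divider, so the voltage (and pitch) settles at `v_supply * r_brake / (r_power + r_brake)`, well below the power-only level, which matches the “both buttons” behavior described above.

```python
def simulate_siren(power, brake, v_supply=9.0, r_power=22e3, r_brake=10e3,
                   c=470e-6, hz_per_volt=100.0, dt=1e-3, steps=20_000):
    """Hypothetical RC control voltage for a siren VCO (all values invented).

    power(t) / brake(t) return True while that button is held at time t (s).
    The power button charges the capacitor through r_power (slow ramp up);
    the brake discharges it through r_brake (faster ramp down).
    The VCO is assumed linear: frequency = hz_per_volt * capacitor voltage.
    """
    v = 0.0
    freqs = []
    for n in range(steps):
        t = n * dt
        current = 0.0
        if power(t):
            current += (v_supply - v) / r_power  # charging path
        if brake(t):
            current -= v / r_brake               # discharging path
        v += current / c * dt                    # forward-Euler step of dV/dt = I/C
        freqs.append(hz_per_volt * v)
    return freqs


power_only = simulate_siren(lambda t: True, lambda t: False)
both = simulate_siren(lambda t: True, lambda t: True)
# With both held, v settles at v_supply * r_brake / (r_power + r_brake) = 2.8125 V,
# i.e. about 281 Hz, below what the power button alone heads toward (900 Hz).
```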

I don't think it's supposed to look like this

The temperature sensor of the heating station gave up and now it heats up indefinitely. Perfect for making your PCBs very crispy and crunchy.

Intend to buy huge lot of electronic components.

I have been offered a huge lot of electronic components from a former TV repair shop that was active from 1973 to 2015: resistors, capacitors, transistors, ICs, and many other components, plus HV transformers (TV), switches, knobs, inductors, subassemblies, ... Most of it is sorted in over 40 Raaco bins, and the rest is partially sorted or unsorted. They are asking 400 euros and I have to decide by noon tomorrow. I think I will buy it, but it will take time to move it all and sort it again.

Weekly discussion, complaint, and rant thread

Open to anything, including discussions, complaints, and rants.

Sub rules do not apply, so don't bother reporting incivility, off-topic, or spam.

Reddit-wide rules do apply.

To see the newest posts, sort the comments by "new" (instead of "best" or "top").


Vintage white ceramic ICs are absolutely beautiful!

Black thermoset resin packaging is probably far superior from an industrial standpoint, but I’m in love with the beauty of white ceramic IC packages from around the 1970s.

Professor Viktor Demydovych Romanenko has passed away

It is with deep sorrow that we announce the untimely passing of Professor Viktor Demydovych Romanenko, an outstanding scientist and educator and Deputy Director for Research and Teaching at the Educational and Research Institute for Applied System Analysis.

The 1972 LEDs are Red

This is in response to "light them up" from mr. blueball. I finally figured out how to light it up with an AA battery. These are RED LEDs. Please forgive me for any sacred electronic transgressions I may have committed in making this picture; I did not intend to harm or decrease the value of these amazing objects. I am a biologist, dammit, not an engineer. In 1972, I visited my father's lab. After turning off the lights, he started turning on rows and rows of red, green, and yellow LEDs. It was an amazing sight. Thank you to all commenters for the great information and feedback on my first post titled: Interesting old Monsanto LED's 1972.

Building high-performance robotic vision with GMSL

Robotic systems depend on advanced machine vision to perceive, navigate, and interact with their environment. As both the number and resolution of cameras grow, the demand for high-speed, low-latency links capable of transmitting and aggregating real-time video data has never been greater.

Gigabit Multimedia Serial Link (GMSL), originally developed for automotive applications, is emerging as a powerful and efficient solution for robotic systems. GMSL transmits high-speed video data, bidirectional control signals, and power over a single cable, offering long cable reach and deterministic, microsecond-level latency with an extremely low bit error rate (BER). It simplifies the wiring harness and reduces the total solution footprint, making it ideal for vision-centric robots operating in dynamic and often harsh environments.
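To put “high-speed” in numbers, the sketch below estimates uncompressed camera bandwidth and how many such streams might share one serial link. The 6 Gbps link rate and the 80% usable fraction are illustrative assumptions, not figures from this article; check the data sheet of the specific serializer/deserializer for its actual forward-channel rate and overhead. Blanking intervals and protocol overhead are ignored.

```python
def raw_video_gbps(width, height, fps, bits_per_pixel):
    """Uncompressed pixel payload for one camera, ignoring blanking and protocol overhead."""
    return width * height * fps * bits_per_pixel / 1e9


def cameras_per_link(link_gbps, per_camera_gbps, usable_fraction=0.8):
    """Cameras that fit on one link if only `usable_fraction` of its rate is usable."""
    return int(link_gbps * usable_fraction // per_camera_gbps)


per_cam = raw_video_gbps(1920, 1080, 30, 12)  # 1080p30, 12-bit RAW: about 0.75 Gbps
print(per_cam)
print(cameras_per_link(6.0, per_cam))          # 6 with the assumed 6 Gbps link and 80% margin
```

Raising resolution or frame rate scales the per-camera figure linearly, which is why camera count and resolution growth quickly push toward faster links or link aggregation.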

The following sections discuss where and how cameras are used in robotics, the data and connectivity challenges these applications face, and how GMSL can help system designers build scalable, reliable, and high-performance robotic platforms.

Where are cameras used in robotics?

Cameras are at the heart of modern robotic perception, enabling machines to understand and respond to their environment in real time. Whether it’s a warehouse robot navigating aisles, a robotic arm sorting packages, or a service robot interacting with people, vision systems are critical for autonomy, automation, and interaction.

These cameras are not only diverse in function but also in form—mounted on different parts of the robot depending on the task and tailored to the physical and operational constraints of the platform (see Figure 1).

Figure 1 An example of a multimodal robotic vision system enabled by GMSL. Source: Analog Devices

Autonomy

In autonomous robotics, cameras serve as the eyes of the machine, allowing it to perceive its surroundings, avoid obstacles, and localize itself within an environment.

For mobile robots—such as delivery robots, warehouse shuttles, or agricultural rovers—this often involves a combination of wide field-of-view cameras placed at the corners or edges of the robot. These surround-view systems provide 360° awareness, helping the robot navigate complex spaces without collisions.

Other autonomy-related applications use cameras facing downward or upward to read fiducial markers on floors, ceilings, or walls. These markers act as visual signposts, allowing robots to recalibrate their position or trigger specific actions as they move through structured environments like factories or hospitals.

In more advanced systems, stereo vision cameras or time of flight (ToF) cameras are placed on the front or sides of the robot to generate three-dimensional maps, estimate distances, and aid in simultaneous localization and mapping (SLAM).

The location of these cameras is often dictated by the robot’s size, mobility, and required field of view. On small sidewalk delivery robots, for example, cameras might be tucked into recessed panels on all four sides. On a drone, they’re typically forward-facing for navigation and downward-facing for landing or object tracking.

Automation

In industrial automation, vision systems help robots perform repetitive or precision tasks with speed and consistency. Here, the camera might be mounted on a robotic arm—right next to a gripper or end-effector—and the system can visually inspect, locate, and manipulate objects with high accuracy. This is especially important in pick-and-place operations, where identifying the exact position and orientation of a part or package is essential.

Other times, cameras are fixed above a work area—mounted on a gantry or overhead rail—to monitor items on a conveyor or to scan barcodes. In warehouse environments, mobile robots use forward-facing cameras to detect shelf labels, signage, or QR codes, enabling dynamic task assignments or routing changes.

Some inspection robots, especially those used in infrastructure, utilities, or heavy industry, carry zoom-capable cameras mounted on masts or articulated arms. These allow them to capture high-resolution imagery of weld seams, cable trays, or pipe joints—tasks that would be dangerous or time-consuming for humans to perform manually.

Human interaction

Cameras also play a central role in how robots engage with humans. In collaborative manufacturing, healthcare, or service industries, robots need to understand gestures, recognize faces, and maintain a sense of social presence. Vision systems make this possible.

Humanoid and service robots often have cameras embedded in their head or chest, mimicking the human line of sight to enable natural interaction. These cameras help the robot interpret facial expressions, maintain eye contact, or follow a person’s gaze. Some systems use depth cameras or fisheye lenses to track body movement or detect when a person enters a shared workspace.

In collaborative robot (cobot) scenarios, where humans and machines work side by side, machine vision is used to ensure safety and responsiveness. The robot may watch for approaching limbs or tools, adjusting its behavior to avoid collisions or pause work if someone gets too close.

Even in teleoperated or semi-autonomous systems, machine vision remains key. Front-mounted cameras stream live video to remote operators, enabling real-time control or inspection. Augmented reality overlays can be added to this video feed to assist with tasks like remote diagnosis or training.

Across all these domains, the camera’s placement—whether on a gripper, a gimbal, the base, or the head of the robot—is a design decision tied to the robot’s function, form factor, and environment. As robotic systems grow more capable and autonomous, the role of vision will only deepen, and camera integration will become even more sophisticated and essential.

Robotics vision challenges

As vision systems become the backbone of robotic intelligence, opportunity and complexity grow in parallel. High-performance cameras unlock powerful capabilities—enabling real-time perception, precise manipulation, and safer human interaction—but they also place growing demands on system architecture.

It’s no longer just about moving large volumes of video data quickly. Many of today’s robots must make split-second decisions based on multimodal sensor input, all while operating within tight mechanical envelopes, managing power constraints, avoiding electromagnetic interference (EMI), and maintaining strict functional safety in close proximity to people.

These challenges are compounded by the environments robots face. A warehouse robot may shuttle in and out of freezers, enduring sudden temperature swings and condensation. An agricultural rover may crawl across unpaved fields, absorbing constant vibration and mechanical shock. Service robots in hospitals or public spaces may encounter unfamiliar, visually complex settings, where they must quickly adapt to safely navigate around people and obstacles.

Solve the challenges with GMSL

GMSL is uniquely positioned to meet the demands of modern robotic systems. The combination of bandwidth, robustness, and integration flexibility makes it well-suited for sensor-rich platforms operating in dynamic, mission-critical environments. The following features highlight how GMSL addresses key vision-related challenges in robotics.

High data rate

The GMSL2 and GMSL3 product families support forward-channel (video path) data rates of 3 Gbps, 6 Gbps, and 12 Gbps, covering a wide range of robotic vision use cases. These flexible link rates allow system designers to optimize for resolution, frame rate, sensor type, and processing requirements (Figure 2).

Figure 2 Sensor bandwidth ranges with GMSL capabilities. Source: Analog Devices

A 3 Gbps link is sufficient for most surround-view cameras using 2 MP to 3 MP rolling shutter sensors at 60 frames per second (FPS). It also supports other common sensing modalities, such as ToF sensors, light detection and ranging (LIDAR) units with point-cloud outputs, and radar sensors transmitting detection data or compressed image-like returns.

The 6 Gbps mode is typically used for the robot’s main forward-facing camera, where higher resolution sensors (usually 8 MP or more) are required for object detection, semantic understanding, or sign recognition. This data rate also supports ToF sensors with raw output, or stereo vision systems that either stream raw output from two image sensors or output a processed point cloud stream from an integrated image signal processor (ISP). Many commercially available stereo cameras today rely on this data rate for high frame-rate performance.
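To make the tiering above concrete, the sketch below estimates raw sensor bandwidth as megapixels × bits per pixel × frame rate × sensor count and picks the smallest GMSL link rate that covers it. It is a back-of-the-envelope sketch, not an ADI sizing tool: the RAW12 pixel depth, the example frame rates, and the 80% usable-capacity headroom (a stand-in for blanking and protocol overhead) are assumptions chosen for illustration.

```python
# Back-of-the-envelope GMSL link sizing (illustrative assumptions only).
GMSL_LINK_RATES_GBPS = (3.0, 6.0, 12.0)  # forward-channel rates named in the article


def video_bandwidth_gbps(megapixels: float, fps: float,
                         bits_per_pixel: int = 12, num_sensors: int = 1) -> float:
    """Raw pixel payload in Gbps, ignoring blanking and protocol overhead."""
    return megapixels * 1e6 * bits_per_pixel * fps * num_sensors / 1e9


def smallest_link(required_gbps: float, headroom: float = 0.8):
    """Lowest link rate whose usable share (assumed 80%) carries the stream."""
    for rate in GMSL_LINK_RATES_GBPS:
        if required_gbps <= rate * headroom:
            return rate
    return None  # needs more than one link, compression, or a lower frame rate


if __name__ == "__main__":
    cases = {
        "3 MP surround-view, 60 fps": video_bandwidth_gbps(3, 60),
        "8 MP front camera, 30 fps": video_bandwidth_gbps(8, 30),
        "2 MP raw stereo pair, 60 fps": video_bandwidth_gbps(2, 60, num_sensors=2),
    }
    for name, gbps in cases.items():
        print(f"{name}: {gbps:.2f} Gbps -> {smallest_link(gbps)} Gbps link")
```

Under these assumptions a 3 MP, 60 fps camera (about 2.16 Gbps) fits a 3 Gbps link, while an 8 MP front camera at 30 fps or a raw 2 MP stereo pair at 60 fps (about 2.88 Gbps each) lands on the 6 Gbps tier. A real design should start from the sensor's actual line timing and the serializer data sheet rather than this idealized payload figure.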

At the high end, 12 Gbps links enable support for 12 MP or higher resolution cameras used in specialized robotic applications that demand advanced object classification, scene segmentation, or long-range perception. Interestingly, even some low-resolution global shutter sensors require higher speed links to reduce readout time and avoid motion artifacts during fast capture cycles, which is critical in dynamic or high-speed environments.
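The readout argument can be checked with simple arithmetic: the minimum time to move one frame across the link is the frame's bit count divided by the link rate, and that time bounds how soon the next capture can follow. The sketch assumes a hypothetical 1920 × 1200 global shutter sensor at 10 bits per pixel and ignores link overhead, so its results are idealized lower bounds rather than measured readout times.

```python
# Minimum time to move one frame across a link (idealized, no overhead).
def frame_transfer_ms(width: int, height: int, bits_per_pixel: int,
                      link_gbps: float) -> float:
    """Milliseconds needed to push one full frame through the link."""
    frame_bits = width * height * bits_per_pixel
    return frame_bits / (link_gbps * 1e9) * 1e3


def max_back_to_back_fps(transfer_ms: float) -> float:
    """Upper bound on frame rate if each frame must fully cross the link first."""
    return 1e3 / transfer_ms


if __name__ == "__main__":
    # Hypothetical 2.3 MP global shutter sensor streaming RAW10.
    for rate in (3.0, 6.0, 12.0):
        t = frame_transfer_ms(1920, 1200, 10, rate)
        print(f"{rate:>4} Gbps: {t:.2f} ms per frame, <= {max_back_to_back_fps(t):.0f} fps")
```

With these assumptions the per-frame transfer drops from about 7.68 ms at 3 Gbps to about 1.92 ms at 12 Gbps, which is why even a modest-resolution sensor can benefit from a faster link when capture cycles are short.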

Determinism and low latency

Because GMSL uses frequency-domain duplexing to separate the forward (video and control) and reverse (control) channels, it enables bidirectional communication with deterministic low latency, without the risk of data collisions.

Across all link rates, GMSL maintains impressively low latency: the added delay from the input of a GMSL serializer to the output of a deserializer typically falls in the lower tens of microseconds—negligible for most real-time robotic vision systems.

The deterministic reverse-channel latency enables precise hardware triggering from the host to the camera—critical for synchronized image capture across multiple sensors, as well as for time-sensitive, event-driven frame triggering in complex robotic workflows.

Achieving this level of timing precision with USB or Ethernet cameras typically requires the addition of a separate hardware trigger line, increasing system complexity and cabling overhead.
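The value of determinism shows up when several cameras must expose at the same instant. If each trigger path has a known, fixed latency, the host can fire each trigger early by that latency and the exposures line up; if the latency jitters, the residual skew cannot be compensated away. The sketch below models this with hypothetical latency bounds; the microsecond values are illustrative assumptions, not measured GMSL, USB, or Ethernet figures, and `TriggerPath` is a made-up helper type.

```python
from dataclasses import dataclass


@dataclass
class TriggerPath:
    """Latency bounds from host trigger to sensor exposure start (hypothetical)."""
    name: str
    min_latency_us: float
    max_latency_us: float

    @property
    def nominal_us(self) -> float:
        return (self.min_latency_us + self.max_latency_us) / 2

    @property
    def half_jitter_us(self) -> float:
        return (self.max_latency_us - self.min_latency_us) / 2


def schedule_triggers(paths, capture_time_us: float) -> dict:
    """Send each trigger early by its nominal latency so captures target one instant."""
    return {p.name: capture_time_us - p.nominal_us for p in paths}


def worst_case_skew_us(paths) -> float:
    """Largest possible spread between capture instants after compensation.

    Each capture lands within +/- half_jitter of the target, so the worst
    spread is one camera late and another early: the two largest half-jitters.
    """
    if len(paths) < 2:
        return 0.0
    halves = sorted((p.half_jitter_us for p in paths), reverse=True)
    return halves[0] + halves[1]


if __name__ == "__main__":
    fixed = [TriggerPath("cam0", 12.0, 12.0), TriggerPath("cam1", 15.0, 15.0)]
    jittery = [TriggerPath("cam0", 500.0, 2500.0), TriggerPath("cam1", 500.0, 2500.0)]
    print(schedule_triggers(fixed, capture_time_us=1000.0))
    print("fixed-latency skew:", worst_case_skew_us(fixed), "us")
    print("jittery skew:", worst_case_skew_us(jittery), "us")
```

With fixed latencies the compensated skew is zero even though the two paths differ; with the hypothetical 500 µs to 2500 µs jitter, two cameras can still land up to 2000 µs apart, which is the gap a dedicated hardware trigger line is added to close.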

Small footprint and low power

One of the key value propositions of GMSL is its ability to reduce cable and connector infrastructure.

GMSL itself is a full-duplex link, and most GMSL cameras utilize the power-over-coax (PoC) feature, allowing video data, bidirectional control signals, and power to be transmitted over a single thin coaxial cable.

This significantly simplifies wiring, reduces the overall weight and bulk of cable harnesses, and eases mechanical routing in compact or articulated robotic platforms (Figure 3).

Figure 3 A typical GMSL camera architecture using the MAX96717. Source: Analog Devices
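Carrying power over the same coax means the cable's resistance eats into the camera's supply. The sketch below treats the camera as a constant-power load and solves for the voltage that actually reaches it, from V_cam = V_supply − (P / V_cam) · R. The 12 V supply, 2 W load, and 4 Ω loop resistance (cable plus PoC filter) used in the usage note are hypothetical values, not figures from the MAX96717 data sheet or any particular cable.

```python
import math


def poc_operating_point(supply_v: float, load_w: float, loop_ohms: float):
    """Camera-side voltage, current, and cable loss for a constant-power load.

    Solves V_cam = V_s - (P / V_cam) * R, i.e. V_cam**2 - V_s*V_cam + P*R = 0,
    and returns the higher root (the normal operating point).
    """
    disc = supply_v ** 2 - 4 * load_w * loop_ohms
    if disc < 0:
        # No real solution: the cable cannot deliver this power at this voltage.
        raise ValueError("loop resistance too high: supply would collapse")
    v_cam = (supply_v + math.sqrt(disc)) / 2
    current = load_w / v_cam
    cable_loss_w = current ** 2 * loop_ohms
    return v_cam, current, cable_loss_w
```

For example, `poc_operating_point(12.0, 2.0, 4.0)` gives roughly 11.29 V at the camera, about 177 mA of current, and about 0.13 W dissipated in the cable and filter. Raising the supply voltage shrinks the current, and therefore the I²R loss, for the same camera power.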

In addition, the GMSL serializer is a highly integrated device that combines the video interface (for example, MIPI-CSI) and the GMSL PHY into a single chip. The power consumption of the GMSL serializer, typically around 260 mW in 6 Gbps mode, is favorably low compared to alternative technologies with similar data throughput.

All these features translate to smaller board areas, reduced thermal management requirements (often eliminating the need for bulky heatsinks), and greater overall system efficiency, particularly for battery-powered robots.
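The 260 mW serializer figure is easier to weigh when normalized to energy per bit and scaled to a whole robot. The sketch below does that arithmetic at the full 6 Gbps line rate; the four-camera count and the 8-hour shift are hypothetical assumptions, and only serializer power is counted (not the deserializer, sensor, or ISP).

```python
def energy_per_bit_pj(power_mw: float, link_gbps: float) -> float:
    """Energy per transmitted bit in picojoules."""
    return (power_mw * 1e-3) / (link_gbps * 1e9) * 1e12


def serializer_energy_wh(power_mw: float, cameras: int, hours: float) -> float:
    """Serializer-only energy drawn from the battery over a shift."""
    return power_mw * 1e-3 * cameras * hours


if __name__ == "__main__":
    print(f"{energy_per_bit_pj(260, 6):.1f} pJ/bit")  # ~43.3 pJ/bit
    print(f"{serializer_energy_wh(260, cameras=4, hours=8):.2f} Wh per 8 h shift")
```

At 260 mW and 6 Gbps this works out to roughly 43 pJ per bit, and four such serializers would draw about 8.3 Wh over a hypothetical 8-hour shift.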

Sensor aggregation and video data routing

GMSL deserializers are available in multiple configurations, supporting one, two, or four input links, allowing flexible sensor aggregation architectures. This enables designers to connect multiple cameras or sensor modules to a single processing unit without additional switching or external muxing, which is especially useful in multicamera robotics systems.

In addition to the multiple inputs, GMSL SERDES also supports advanced features to manage and route data intelligently across the system. These include:

- I2C and GPIO broadcasting for simultaneous sensor configuration and frame synchronization.

- I2C address aliasing to avoid address conflicts in I2C passthrough.

- Virtual channel reassignment, allowing multiple video streams to be mapped cleanly into the frame buffers inside the systems on chip (SoCs).

- Video stream duplication and virtual channel filtering, enabling selected video data to be delivered to multiple SoCs—for example, to support both automation and interaction pipelines from the same camera feed or to support redundant processing paths for enhanced functional safety.
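The routing features listed above can be pictured as a few lookup tables. The sketch below is a toy model, not ADI's register map, driver API, or configuration flow: `LinkConfig` and `DeserializerModel` are hypothetical names, and the addresses (sensor at 0x10, aliases 0x20 to 0x23) are illustrative. It shows why aliasing matters when four identical cameras share one sensor address, and how each link's virtual channel 0 can be reassigned to a distinct channel at the SoC.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LinkConfig:
    """Hypothetical per-link routing settings (not a real register map)."""
    link: int         # deserializer input port, e.g. 0-3
    alias_addr: int   # unique 7-bit I2C address the SoC uses for this camera
    sensor_addr: int  # sensor's physical address (often identical on every camera)
    source_vc: int    # virtual channel the camera emits
    dest_vc: int      # virtual channel presented to the SoC


class DeserializerModel:
    """Toy model of I2C aliasing, broadcast, and virtual-channel reassignment."""

    def __init__(self, links):
        aliases = [c.alias_addr for c in links]
        dest_vcs = [c.dest_vc for c in links]
        if len(set(aliases)) != len(aliases):
            raise ValueError("I2C alias addresses must be unique")
        if len(set(dest_vcs)) != len(dest_vcs):
            raise ValueError("destination virtual channels must be unique")
        self._by_alias = {c.alias_addr: c for c in links}
        self._vc_map = {(c.link, c.source_vc): c.dest_vc for c in links}

    def route_i2c(self, addr: int):
        """Translate an alias into (link, physical address); None if unknown."""
        cfg = self._by_alias.get(addr)
        return None if cfg is None else (cfg.link, cfg.sensor_addr)

    def broadcast_targets(self, sensor_addr: int):
        """One write fanned out to the same sensor address on every link."""
        return sorted((c.link, sensor_addr) for c in self._by_alias.values())

    def remap_vc(self, link: int, source_vc: int) -> int:
        """Virtual channel the SoC sees for a stream arriving on a given link."""
        return self._vc_map[(link, source_vc)]


if __name__ == "__main__":
    # Four identical cameras: same sensor address 0x10, all emitting VC 0.
    cams = [LinkConfig(link=i, alias_addr=0x20 + i, sensor_addr=0x10,
                       source_vc=0, dest_vc=i) for i in range(4)]
    des = DeserializerModel(cams)
    print(des.route_i2c(0x22))   # alias 0x22 reaches the sensor on link 2
    print(des.remap_vc(3, 0))    # link 3's VC 0 appears as VC 3 at the SoC
    print(des.broadcast_targets(0x10))
```

The uniqueness checks capture the design constraint behind both features: without distinct aliases the host could not address one camera, and without distinct destination channels the SoC could not tell the streams apart.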

Originally developed for automotive advanced driver assistance systems (ADAS) applications, GMSL has been field-proven in environments where safety, reliability, and robustness are non-negotiable. Robotic systems, particularly those operating around people or performing mission-critical industrial tasks, can benefit from the same high standards.

| Feature/Criteria | GMSL (GMSL2/GMSL3) | USB (for example, USB 3.x) | Ethernet (for example, GigE Vision) |
|---|---|---|---|
| Cable Type | Single coax or shielded twisted pair (STP) (data + power + control) | Separate USB + power + general-purpose input/output (GPIO) | Separate Ethernet + power (PoE optional) + GPIO |
| Max Cable Length | 15+ m with coax | 3 m reliably | 100 m with Cat5e/Cat6 |
| Power Delivery | Integrated (PoC) | Requires a separate supply or USB-PD | Requires PoE infrastructure or a separate cable |
| Latency (Typical) | Tens of microseconds (deterministic) | Millisecond-level, OS-dependent | Millisecond-level, buffered + OS/network stack |
| Data Rate | 3 Gbps/6 Gbps/12 Gbps (uncompressed, per link) | Up to 5 Gbps (USB 3.1 Gen 1) | 1 Gbps (GigE), 10 Gbps (10 GigE, uncommon in robotics) |
| Video Compression | Not required (raw or ISP output) | Often required for higher resolutions | Often required |
| Hardware Trigger Support | Built in via reverse channel (no extra wire) | Requires extra GPIO or USB communications device class (CDC) interface | Requires extra GPIO or sync box |
| Sensor Aggregation | Native via multi-input deserializer | Typically point-to-point | Typically point-to-point |
| EMI Robustness | High (designed for automotive EMI standards) | Moderate | Moderate to high (depends on shielding, layout) |
| Environmental Suitability | Automotive-grade temperature range, ruggedized | Consumer-grade unless hardened | Varies (industrial options exist) |
| Software Stack | Direct MIPI-CSI integration with SoC | OS driver stack + USB video device class (UVC) or proprietary software development kit (SDK) | OS driver stack + GigE Vision/GenICam |
| Functional Safety Support | ASIL-B devices, data replication, deterministic sync | Minimal | Minimal |
| Deployment Ecosystem | Mature in ADAS, growing in robotics | Broad in consumer/PC, limited industrial options | Mature in industrial vision |
| Integration Complexity | Moderate (requires SERDES and routing configuration) | Low for development (plug and play); high for production | Moderate (needs switch/router configuration and sync wiring) |

Table 1 A comparison between GMSL, USB, and Ethernet in terms of trade-offs in robotic vision. Source: Analog Devices

Most GMSL serializers and deserializers are qualified to operate across a –40°C to +105°C temperature range, with built-in adaptive equalization that continuously monitors and adjusts transceiver settings in response to environmental changes.

This provides system architects with the flexibility to design robots that function reliably in extreme or fluctuating temperature conditions.

In addition, most GMSL devices are ASIL-B compliant and exhibit extremely low BERs. Under compliant link conditions, GMSL2 offers a typical BER of 10⁻¹⁵, while GMSL3, with its mandatory forward error correction (FEC), can reach a BER as low as 10⁻³⁰. This exceptional data integrity, combined with safety certification, significantly simplifies system-level functional safety integration.
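A BER becomes more tangible when converted to an expected time between bit errors: one error every 1 / (bit rate × BER) seconds on a continuously busy link. The sketch below pairs the quoted GMSL2 BER of 10⁻¹⁵ with a 6 Gbps link and the GMSL3 BER of 10⁻³⁰ with a 12 Gbps link; those pairings, full line-rate utilization, and the independence of errors are simplifying assumptions for illustration.

```python
SECONDS_PER_HOUR = 3600.0
SECONDS_PER_YEAR = 365.25 * 24 * SECONDS_PER_HOUR


def mean_time_between_errors_s(link_gbps: float, ber: float) -> float:
    """Expected seconds between bit errors on a continuously busy link."""
    return 1.0 / (link_gbps * 1e9 * ber)


if __name__ == "__main__":
    t2 = mean_time_between_errors_s(6, 1e-15)
    t3 = mean_time_between_errors_s(12, 1e-30)
    print(f"6 Gbps at BER 1e-15: one error every {t2 / SECONDS_PER_HOUR:.1f} hours")
    print(f"12 Gbps at BER 1e-30: one error every {t3 / SECONDS_PER_YEAR:.2e} years")
```

Under these assumptions a GMSL2 link at 6 Gbps would see roughly one bit error every 46 hours, while a GMSL3 link at 12 Gbps would see one on the order of every 10¹² years, effectively never over a robot's service life.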

Ultimately, GMSL’s robustness leads to reduced downtime, lower maintenance costs, and greater confidence in long-term system reliability—critical advantages in both industrial and service robotics deployments.

Mature ecosystem

GMSL benefits from a mature and deployment-ready ecosystem, shaped by years of high-volume use in automotive systems and supported by a broad network of global ecosystem partners.

This includes a comprehensive portfolio of evaluation and production-ready cameras, compute boards, cables, connectors, and software/driver support—all tested and validated under stringent real-world conditions.

For robotics developers, this ecosystem translates to shorter development cycles, simplified integration, and a lower barrier to scale from prototype to production.

GMSL vs. legacy robotics connectivity

In recent years, GMSL has become increasingly accessible beyond the automotive industry, opening new possibilities for high-performance robotic systems.

As the demands on robotic vision grow with more cameras, higher resolution, tighter synchronization, and harsher environments, traditional interfaces like USB and Ethernet often fall short in terms of bandwidth, latency, and integration complexity.

GMSL is now emerging as a preferred upgrade path, offering a robust, scalable, and production-ready solution that is gradually replacing USB and Ethernet in many advanced robotics platforms. Table 1 compares the three technologies across key metrics relevant to robotic vision design.

An evolution in robotics

As robotics moves into increasingly demanding environments and across diverse use cases, vision systems must evolve to support higher sensor counts, greater bandwidth, and deterministic performance.

While legacy connectivity solutions will remain important for development and certain deployment scenarios, they introduce trade-offs in latency, synchronization, and system integration that limit scalability.

GMSL, with its combination of high data rates, long cable reach, integrated power delivery, and bidirectional deterministic low latency, provides a proven foundation for building scalable robotic vision systems.

By adopting GMSL, designers can accelerate the transition from prototype to production, delivering smarter, more reliable robots ready to meet the challenges of a wide range of real-world applications.

Kainan Wang is a systems applications engineer in the Automotive Business Unit at Analog Devices in Wilmington, Massachusetts. He joined ADI in 2016 after receiving an M.S. in electrical engineering from Northeastern University in Boston, Massachusetts. Kainan has worked on 2D/3D imaging solutions, from hardware development and systems integration to application development. Most recently, his work has focused on expanding ADI automotive technologies into markets beyond automotive.

Related Content

- ADI’s GMSL technology eyes a place on ADAS bandwagon

- ADI unveils embedded software development tools

- Advancing GMSL as an open automotive standard

- CES 2025: Wirelessly upgrading SDVs

The post Building high-performance robotic vision with GMSL appeared first on EDN.

Dell Technologies’ 2026 Predictions: AI Acceleration, Sovereign AI & Governance

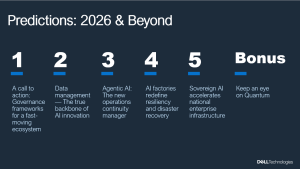

Dell Technologies hosted its Predictions: 2026 & Beyond briefing for the Asia Pacific Japan & Greater China (APJC) media, where the company’s Global Chief Technology Officer & Chief AI Officer, John Roese, and APJC President, Peter Marrs, outlined the transformative technology trends and Dell’s strategies for accelerating AI adoption and innovation in the region.

John Roese’s vision on the trends set to shape the technology industry in 2026 and beyond (Image Credits: Dell Technologies)

According to Roese, the rapid acceleration of AI is set to profoundly reengineer the entire fabric of enterprise and industry, driving new ways of operating, building, and innovating at an unprecedented scale and pace.

Focus on scalability and real adoption

A key trend is the shift in focus towards scaling AI for tangible business outcomes. “Conversations are on very real adoption, and AI is creating a truly transformational opportunity,” said Marrs. “We are working with customers across the region to build AI at scale.”

Marrs noted that growing deployment of agentic AI is an example of this transformation, with organizations such as Zoho in India already working with Dell to accelerate agentic AI adoption by delivering contextual, privacy-first and multimodal enterprise AI solutions. “AI has become more accessible for all companies in the region, and what we’ve been doing is successfully building foundations with customers to deploy AI at scale.”

Roese highlighted that the industry is now entering the autonomous agent era, where agentic AI is evolving from a helpful assistant to an integral manager of complex, long-running processes. “We expect that as people go on the agentic journey into 2026, they will be surprised by how much more agents do for them than they anticipated. Its very presence will bring value to make humans more efficient, and make the non-AI work, work better,” he noted.

As the industry continues to build and deploy more enterprise AI, Roese also emphasized the need for businesses to rethink how they manage their AI factories and make them resilient.

Sovereign AI and governance as the foundation for innovation

With the light-speed acceleration of AI development, there is a degree of volatility. Roese predicted that the demand for robust governance frameworks and private, controlled AI environments will become undeniable, urging the industry to build on both internal and external AI guardrails that allow organizations to innovate safely and sustainably.

“Last year, we predicted that ‘Agentic’ would be the word of 2025. This year, the word ‘Governance’ is going to play a much bigger role,” Roese said. “The technology and its use cases are not going to be successful if you do not have discipline and governance around how you operate your AI strategy as either an enterprise, a region, or a country.”

At the national level, the rapid rise of sovereign AI ecosystems will continue as AI becomes critical to state-level interests. Marrs discussed this trend’s momentum, noting that, as in many countries in the region, enterprises are also actively building their own frameworks to drive local innovation, with strong foundations already in place.

Building the ecosystem for impact and progress

Marrs reiterated the importance of a collaborative ecosystem in nurturing a skilled talent pool and advancing the region’s AI competitiveness, citing the APJ AI Innovation Hub as an initiative that is delivering impact through the combination of Dell’s capabilities, talent, and ecosystem.

“By working with experts, government, and industry peers, we’ve made unbelievable headway in fostering skill development and advancing our collective expertise,” said Marrs. “Together, we are accelerating Asia’s leadership as an AI region, identifying key steps to bolster the region’s growth. Dell is excited about how we’re participating and helping with this transformation.”

The post Dell Technologies’ 2026 Predictions: AI Acceleration, Sovereign AI & Governance appeared first on ELE Times.