Feed Aggregator

IR Obstruction Detector

Nice day-off project. I know it's a pretty dated style, but I just really love using nothing more than a pin-out diagram for the components and going from there. I start by placing the button and a regulator, then the smoothing capacitors, then the power LED and its current-limiting resistor. This was made on stripboard, so each column is common, except where I cut the traces under the 555 socket to prevent pins 1-8, 2-7, etc. from being shorted together.

I don't think that any part of the circuitry in a metro station elevator should look like this

submitted by /u/Elvenblood7E7

Toshiba Improves MOSFET Performance With Advanced Packaging

Just cleaned about 5 square meters of vintage mixer PCBs.

Mmm capacitor juice and grime. Series Ten Mixer btw :3

Increasing bit resolution with oversampling

Increasing ADC resolution

Many electronic designs contain an ADC, or more than one, to read various signals and voltages. Often, these ADCs are included as part of the microcontroller (MCU) being used. This means that once you pick your MCU, you have also chosen the maximum resolution (determined by the number of bits in the ADC and the reference voltage) you will have for taking a reading.
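As a rough illustration of how the bit count and the reference set the resolution: one ADC step (1 LSB) is commonly taken as the full-scale range divided by 2^N for an N-bit converter. The function name `lsb_size` and the 12-bit / 3.3 V figures below are illustrative assumptions, not values from the article.

```python
def lsb_size(full_scale: float, bits: int) -> float:
    """Return the size of one ADC step (LSB) in the units of full_scale.

    Assumes the common convention LSB = full_scale / 2**bits.
    """
    if bits <= 0:
        raise ValueError("bits must be positive")
    return full_scale / (2 ** bits)


if __name__ == "__main__":
    # Hypothetical MCU ADC: 12 bits with a 3.3 V reference.
    print(f"12-bit, 3.3 V ref: {lsb_size(3.3, 12) * 1e6:.1f} uV per step")
    # The 8-bit temperature example: 256 codes spanning 256 degrees gives 1 degree per step.
    print(f"8-bit, 256-degree span: {lsb_size(256.0, 8)} degrees per step")
```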

What happens if, later in the design, you find out you need slightly more resolution from the ADC? Not to worry, there are some simple ways to improve the resolution of the sampled data. I discussed one method in a previous EDN Design Idea (DI), “Adaptive resolution for ADCs,” which talked about changing the reference voltage, so I won’t discuss that here. Another way of improving the resolution is through the concept of oversampling.

Let’s first look at a method that is essentially a simplified version of oversampling…averaging. (Most embedded programmers have used averaging to improve their readings, sometimes with the thought of minimizing the effects of bad readings and not thinking about improving resolution.)

So, suppose you’re taking a temperature reading from a sensor once a second. Now, to get a better resolution of the temperature, take the reading every 500 ms and average the two readings together. This will give you another ½-bit of resolution (we’ll show the math later). Let’s go further—take readings every 250 ms and average four readings. This will give you a whole extra bit of resolution.

If you have an 8-bit ADC scaled to read 0 to 255 degrees with 1-degree resolution, averaging four readings gives you a virtual 9-bit ADC capable of returning readings of 0 to 255.5 degrees with 0.5-degree resolution. Averaging 16 readings creates a virtual 10-bit ADC from your 8-bit ADC, and averaging 64 readings creates a virtual 11-bit ADC by adding three extra bits, giving a resolution of one part in 2048 (in the temperature sensor example, 256/2048 = 0.125 degrees).
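In firmware, this kind of averaging is often done with integers: sum 4^n readings and right-shift the total by n, which yields a code with n more bits than the ADC. The sketch below assumes that convention (the function name is hypothetical). It only gains real resolution if the input carries at least roughly 1 LSB of noise; a perfectly steady input produces identical readings and no new information.

```python
from typing import Sequence


def oversample(readings: Sequence[int], extra_bits: int) -> int:
    """Combine 4**extra_bits ADC readings into one code with extra_bits more bits.

    Sums the readings, then right-shifts by extra_bits. For example, four 8-bit
    readings with extra_bits=1 give a 9-bit code.
    Note: the shift truncates; for extra_bits >= 1, add 1 << (extra_bits - 1)
    to the sum before shifting if you want rounding instead.
    """
    needed = 4 ** extra_bits
    if len(readings) != needed:
        raise ValueError(f"need exactly {needed} readings, got {len(readings)}")
    return sum(readings) >> extra_bits


if __name__ == "__main__":
    # Four 8-bit readings of a temperature sitting between 100 and 101 degrees.
    code = oversample([100, 101, 101, 100], extra_bits=1)
    # Each 9-bit step is 0.5 degree in the 0-255 degree example.
    print(f"{code} -> {code * 0.5} degrees")  # prints "201 -> 100.5 degrees"
```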

A formula for averaging

The formula for extra bits versus the number of samples averaged is:

Number of samples averaged = M

Number of virtual bits created = b

$$M = 4^{b}$$

If you want to solve for b given M:

$$b = \log_4(M) = \frac{\log_2(M)}{\log_2(4)} = \frac{\log_2(M)}{2}$$
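The same relationship as two small helper functions, useful for checking how many samples a given resolution target costs. The function names are illustrative.

```python
import math


def extra_bits(samples_averaged: int) -> float:
    """b = log4(M) = log2(M) / 2 virtual bits gained by averaging M samples."""
    return math.log2(samples_averaged) / 2


def samples_needed(bits: int) -> int:
    """M = 4**b samples needed to gain b virtual bits."""
    return 4 ** bits


if __name__ == "__main__":
    for m in (2, 4, 16, 64):
        print(f"M = {m:3d} -> {extra_bits(m):.1f} extra bits")
```

These reproduce the article's figures: two samples give half a bit, four give one bit, 16 give two, and 64 give three.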

You may be scratching your head, wondering where that formula comes from. First, let’s think about the readings we are averaging. They consist of two parts. The first is the true, clean reading the sensor is trying to give us. The second part is the noise that we pick up from extraneous signals on the wiring, power supplies, components, etc. (These two signal parts combine in an additive way.)

We will assume that this noise is Gaussian (statistically normally distributed, often shown as a bell curve), white (uncorrelated from one sample to the next), and uncorrelated with our sample rate. Now, when taking the average, we first sum up the readings. The clean readings from the sensor simply add up. In the noise part, though, it is the variances that add: the standard deviation of the sum is the square root of the sum of the variances, which for M equal-variance samples is sqrt(M) times the standard deviation of one sample. In other words, the clean part increases linearly with the number of readings, and the noise part increases only as the square root of the number of readings.
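A quick Monte Carlo check of the square-root claim: sum M independent Gaussian noise samples many times and measure the spread of those sums. The function name, σ = 1, the trial count, and the seed are arbitrary choices for illustration.

```python
import math
import random
import statistics


def std_of_sum(m: int, sigma: float = 1.0, trials: int = 5000, seed: int = 1) -> float:
    """Empirical standard deviation of the sum of m independent Gaussian noise samples."""
    rng = random.Random(seed)
    sums = [sum(rng.gauss(0.0, sigma) for _ in range(m)) for _ in range(trials)]
    return statistics.pstdev(sums)


if __name__ == "__main__":
    # A constant signal s summed m times would grow to m * s (linearly),
    # while the noise spread should track sqrt(m) * sigma.
    for m in (1, 4, 16):
        print(f"M = {m:2d}: std of sum = {std_of_sum(m):.2f}, sqrt(M) = {math.sqrt(m):.2f}")
```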

What this means is that not only is the resolution increased, but the signal-to-noise ratio (SNR) also improves by M/sqrt(M), which reduces to sqrt(M). In simpler terms, the SNR of the averaged reading improves by the square root of the number of samples averaged. So, if we take four readings, the SNR of the average improves by a factor of 2, the equivalent of one more bit in the ADC (an 8-bit ADC performs as a 9-bit ADC).
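Written out as a short derivation, this links the SNR argument back to the formula for virtual bits. The symbols s (the clean signal in one reading) and σ (the noise standard deviation of one reading) are notation introduced here for illustration:

$$\mathrm{SNR}_{1} = \frac{s}{\sigma}, \qquad \mathrm{SNR}_{M} = \frac{M\,s}{\sqrt{M}\,\sigma} = \sqrt{M}\,\mathrm{SNR}_{1}$$

Each extra ADC bit halves the step size, a factor of 2 in amplitude resolution, so the number of bits gained is

$$b = \log_2\!\left(\sqrt{M}\right) = \frac{\log_2(M)}{2} \quad\Longleftrightarrow\quad M = 4^{b}$$

which is the same relationship between M and b given earlier.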

I have used averaging in many pieces of firmware, but it's not always the best solution. As mentioned before, your sensor connection is passing your ADC a good signal with some noise added to it. Viewed as a filter, a simple average has a slow roll-off in the frequency domain and poor stopband attenuation. Both issues mean that averaging lets a good portion of the noise into your signal. So, we may have increased the resolution of the reading, but we have not removed as much of the noise as we could.
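To see the slow roll-off and weak stopband concretely, one can evaluate the frequency response of an M-point moving average straight from its definition. This is a sketch; the function name and M = 16 are illustrative. The response has nulls at multiples of 1/M cycles per sample, but between them the first sidelobe is only about 13 dB below the passband, so a good deal of out-of-band noise still gets through.

```python
import cmath
import math


def moving_average_gain(f: float, m: int) -> float:
    """|H(f)| of an m-point moving average; f is in cycles per sample (0 to 0.5)."""
    # H(f) = (1/m) * sum_{k=0}^{m-1} exp(-j * 2*pi * f * k)
    h = sum(cmath.exp(-2j * math.pi * f * k) for k in range(m)) / m
    return abs(h)


if __name__ == "__main__":
    m = 16
    for f in (0.0, 0.5 / m, 1.0 / m, 1.5 / m, 2.5 / m):
        g = moving_average_gain(f, m)
        # Guard against log10(0) at the exact nulls.
        db = 20 * math.log10(g) if g > 1e-12 else float("-inf")
        print(f"f = {f:.4f} cycles/sample: gain = {g:.3f} ({db:.1f} dB)")
```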

Reducing the noise

To reduce this noise, which is spread over the full frequency spectrum coming down the sensor wire, you may want to apply a true lowpass filter (LPF) to the signal. This can be a hardware LPF applied before the ADC, a digital LPF applied after the ADC, or both. (Oversampling makes the design of these filters easier, as the roll-off can be less steep.)

There are many types of digital filters, but the two major ones are finite impulse response (FIR) and infinite impulse response (IIR) filters. I won't go into the details of these filters here, but will just say that they can be designed by trading off passband frequency, roll-off rate, ripple, phase shift, etc.
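As a hedged illustration of the two families, here is a windowed-sinc FIR lowpass (Hamming window) and a single-pole IIR lowpass (an exponential moving average). These are generic textbook forms chosen for brevity, not the filters from the author's designs; the tap count, cutoff, and `alpha` are parameters you would pick from your own passband, roll-off, and ripple requirements.

```python
import math
from typing import List, Sequence


def fir_lowpass_taps(num_taps: int, cutoff: float) -> List[float]:
    """Windowed-sinc FIR lowpass taps (Hamming window), normalized to unity DC gain.

    cutoff is in cycles per sample (0 < cutoff < 0.5); num_taps must be >= 2.
    """
    mid = (num_taps - 1) / 2
    taps = []
    for n in range(num_taps):
        x = n - mid
        # Ideal lowpass impulse response, with the x == 0 limit handled explicitly.
        ideal = 2 * cutoff if x == 0 else math.sin(2 * math.pi * cutoff * x) / (math.pi * x)
        window = 0.54 - 0.46 * math.cos(2 * math.pi * n / (num_taps - 1))
        taps.append(ideal * window)
    total = sum(taps)
    return [t / total for t in taps]


def fir_filter(samples: Sequence[float], taps: Sequence[float]) -> List[float]:
    """Direct-form FIR: y[n] = sum_k taps[k] * x[n - k], treating x before the start as 0."""
    out = []
    for n in range(len(samples)):
        acc = 0.0
        for k, t in enumerate(taps):
            if n - k >= 0:
                acc += t * samples[n - k]
        out.append(acc)
    return out


def iir_lowpass(samples: Sequence[float], alpha: float) -> List[float]:
    """Single-pole IIR lowpass: y[n] = y[n-1] + alpha * (x[n] - y[n-1])."""
    out = []
    # Start from the first sample to avoid a startup transient.
    y = samples[0] if samples else 0.0
    for x in samples:
        y += alpha * (x - y)
        out.append(y)
    return out
```

For example, `fir_lowpass_taps(31, 0.05)` gives 31 symmetric taps (linear phase) at the cost of 31 multiplies per output sample, while the IIR needs one multiply per sample in exchange for a gentler roll-off and nonlinear phase.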

A more advanced approach to oversampling

So, let's look at a more advanced oversampling system. Figure 1 shows a typical layout for a more "formal," and better, oversampling design.

Figure 1 A typical oversampling block diagram with an antialiasing filter, ADC, digital LPF, and decimation (down-sampling).

We start by filtering the incoming signal with an analog hardware LPF (often referred to as an antialiasing filter). This filter is typically designed with its cutoff just above the highest frequency of interest in the incoming signal.

The ADC then samples the signal at a rate many times (M times) the Nyquist rate for the frequency of interest. Then, in the system's firmware, the incoming sample stream is low-pass filtered again with a digital filter (typically an FIR or IIR) to further remove the signal's Gaussian noise as well as the quantization noise created during the ADC conversion. (Various filter designs can also be useful for other kinds of noise, such as impulse noise, burst noise, etc.) Oversampling spreads the noise over the wide oversampled bandwidth, and our digital lowpass filter can remove much of it.

Next, we decimate the signal's data stream. Decimation (also known as down-sampling) is simply keeping only every 2nd, or 3rd, or 4th, up to every Mth sample, and discarding the rest. Thanks to the oversampling and the lowpass filters, this is safe: little noise is aliased into the lower-sample-rate signal. Decimation effectively reduces the bandwidth represented by the remaining samples, and further processing requires less processing power because there are far fewer samples.
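Filtering and decimation are often fused so that the FIR is evaluated only at the samples that will be kept. A minimal sketch, assuming you already have lowpass FIR taps designed for your cutoff; the function name is hypothetical.

```python
from typing import List, Sequence


def fir_decimate(samples: Sequence[float], taps: Sequence[float], m: int) -> List[float]:
    """Lowpass-filter with an FIR and keep every m-th output sample.

    Only the retained outputs are computed, so this does roughly 1/m of the
    multiply-accumulates of a filter-everything-then-discard loop.
    """
    if m < 1:
        raise ValueError("decimation factor m must be >= 1")
    out = []
    # Start at the first index where the whole filter window lies inside the data.
    for n in range(len(taps) - 1, len(samples), m):
        acc = 0.0
        for k, t in enumerate(taps):
            acc += t * samples[n - k]
        out.append(acc)
    return out
```

With `taps = [0.25] * 4` and `m = 4`, this reduces to the "average four readings, report one" scheme described earlier; a longer, properly designed lowpass filter in place of the four equal taps gives the better stopband that the formal design is after.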

It works

This stuff really works. I once worked on a design that required us to receive very small signals transmitted on a power line at less than 1 W. The signal was attenuated by capacitors on the lines, various transformers, and all the customers' devices plugged into the power line. The signal to be received was around 10 µV riding on the 240-VAC line. We ended up oversampling at around 75 million times the Nyquist rate and were able to successfully receive the transmissions more than 100 miles from the transmitter.

Damian Bonicatto is a consulting engineer with decades of experience in embedded hardware, firmware, and system design. He holds over 30 patents.

Phoenix Bonicatto is a freelance writer.

Related Content

- Adaptive resolution for ADCs

- Understanding noise, ENOB, and effective resolution in ADCs

- How do ADCs work?

- Understand key ADC specs

The post Increasing bit resolution with oversampling appeared first on EDN.

Deep Learning Architecture Definition, Types and Diagram

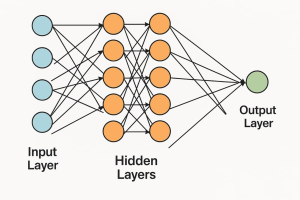

Deep learning architecture refers to the design and arrangement of neural networks that enable machines to learn from data and make intelligent decisions. Inspired by the structure of the human brain, these architectures comprise many interconnected layers of nodes, with successive layers building increasingly abstract representations. As data passes through these layers, the network learns to recognize patterns, extract features, and perform tasks such as classification, prediction, or generation. Deep learning architectures have brought about a paradigm shift in image recognition, natural language processing, and autonomous systems, giving computers the precision and adaptability to interpret inputs that once required human intelligence.

Deep Learning Architecture Diagram:

Diagram Explanation:

This illustration describes a feedforward network, a simple deep learning model in which data travels in one direction only, from input to output. It begins with an input layer, where each node represents one feature and is fully connected to the nodes in the first hidden layer. The hidden layers (two layers of five nodes each) transform the data with weights and activation functions; every node in one layer connects to every node in the next, and this dense connectivity helps the network learn complicated patterns. The output layer, fully connected to the last hidden layer, produces the final prediction, typically using a sigmoid activation for binary classification or softmax for multi-class classification. The arrows represent weights, which are adjusted during training to minimize the cost function.
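A minimal forward pass for the network described above, in plain Python: an input layer, two fully connected hidden layers of five nodes each, and a single sigmoid output for binary classification. The number of input features (3), the ReLU hidden activation, and the random, untrained weights are assumptions for illustration; training (adjusting the weights to minimize a cost function) is not shown.

```python
import math
import random
from typing import List, Tuple

Matrix = List[List[float]]
Layer = Tuple[Matrix, List[float]]  # (weights[out][in], bias[out])


def dense(x: List[float], weights: Matrix, bias: List[float]) -> List[float]:
    """Fully connected layer: each output node sees every input node."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


def relu(v: List[float]) -> List[float]:
    return [max(0.0, a) for a in v]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def random_layer(n_in: int, n_out: int, rng: random.Random) -> Layer:
    """Untrained layer with small random weights and zero bias."""
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def forward(x: List[float], layers: List[Layer]) -> float:
    """Input -> hidden (ReLU) -> hidden (ReLU) -> single sigmoid output."""
    h = x
    for weights, bias in layers[:-1]:
        h = relu(dense(h, weights, bias))
    out_w, out_b = layers[-1]
    return sigmoid(dense(h, out_w, out_b)[0])


if __name__ == "__main__":
    rng = random.Random(0)
    n_features = 3  # hypothetical input size
    layers = [random_layer(n_features, 5, rng), random_layer(5, 5, rng), random_layer(5, 1, rng)]
    print(f"P(class = 1) = {forward([0.2, -1.0, 0.5], layers):.3f}")
```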

Types of Deep Learning Architecture:

- Feedforward Neural Networks (FNNs)

The simplest neural networks, used for classification and regression. Data flows in one direction, from input to output, and these networks form the basis for more complicated architectures.

- Convolutional Neural Networks (CNNs)

CNNs process image data by applying convolutional layers to detect spatial features. They are widely used in image classification, object detection, and medical image analysis because they can capture local patterns.

- Recurrent Neural Networks (RNNs)

RNNs are well suited to sequential data such as time series or text. Their loops carry a hidden state that retains information from previous computations, which proves useful in speech recognition and language modeling.

- Long Short-Term Memory Networks (LSTMs)

LSTMs, a type of RNN, can learn long-term dependencies because they use gates to control the flow of information through the cell. Their main uses include machine translation, music generation, and text prediction.

- Variational Autoencoders (VAEs)

A VAE extends the traditional autoencoder with probabilistic elements (it learns a distribution over the latent space rather than a single point), which allows it to generate new data samples. VAEs are used in generative modeling of images and text.

- Generative Adversarial Networks (GANs)

GANs work by pitting two networks, a generator and a discriminator, against each other to create realistic data. They are known for producing high-quality images, deepfakes, and art.

- Transformers

Transformers use self-attention to process all positions of a sequence in parallel, making them excellent models for natural language processing. Models like BERT, GPT, and T5 use the Transformer as their backbone.

- Graph Neural Networks (GNNs)

GNNs operate on graph-structured data, such as social networks or molecular structures. They learn representations by aggregating information from neighboring nodes, which makes them powerful for relational reasoning.

- Autoencoders

These are unsupervised models that learn to compress and then reconstruct data. Autoencoders are also used for dimensionality reduction, anomaly detection, and image denoising.

- Deep Belief Networks (DBNs)

DBNs are networks with multiple layers of restricted Boltzmann machines. They are used for unsupervised feature learning and pretraining of deep networks, which are then fine-tuned with supervised learning.
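To make the Transformer entry concrete, here is a minimal scaled dot-product self-attention step, softmax(QKᵀ/√d)V. As a simplifying assumption, the input itself is used as queries, keys, and values; real Transformers first apply learned projection matrices and use multiple attention heads, which this sketch omits.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(row: List[float]) -> List[float]:
    peak = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x: Matrix) -> Matrix:
    """softmax(X X^T / sqrt(d)) X, using X itself as queries, keys, and values."""
    d = len(x[0])
    scale = 1.0 / math.sqrt(d)
    out = []
    for q in x:
        # Score this token against every token in the sequence.
        scores = [scale * sum(qi * ki for qi, ki in zip(q, k)) for k in x]
        weights = softmax(scores)
        # Output is the attention-weighted average of all value vectors.
        out.append([sum(w * v[j] for w, v in zip(weights, x)) for j in range(d)])
    return out
```

Each output row depends on the whole input but not on the other output rows, so all positions can be computed in parallel, which is the property the Transformer entry highlights.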

Conclusion:

Deep learning architectures are the backbone of modern AI systems. Each type, be it a simple feedforward network or an advanced transformer, possesses unique strengths suited to particular applications. With the continuing evolution of deep learning, hybrid architectures and efficient models are poised to spark breakthroughs in healthcare, autonomous systems, and generative AI.

The post Deep Learning Architecture Definition, Types and Diagram appeared first on ELE Times.

CCFL inverter from an old monitor

The screen got water damage, but this still works; the tubes will light up. Also, these transformers look quite similar to the ones found in CRTs. I wonder...

How JSD Electronics Uses AI and Machine Vision to Deliver Zero-Defect Electronics

In an exclusive interview with ELE Times, Mr. Deep Hans Aroraa, Co-Founder & Director at JSD Electronics, discussed how the company is redefining IoT-enabled manufacturing through multi-layered cybersecurity, AI-driven quality control, and advanced testing methodologies. From embedding secure boot and end-to-end encryption into connected devices, to deploying machine vision and predictive analytics for defect prevention, JSD is committed to delivering reliable, compliant, and future-ready electronics. The conversation also explored the company’s use of digital twins, ERP-MES integration, and embedded software innovations that power smarter, more resilient products across industries. Excerpts:

ELE Times: How are you integrating cybersecurity measures into your IoT-enabled products to ensure data safety and compliance?

Deep Hans Aroraa: At JSD, we integrate multiple layers of cybersecurity into our IoT-enabled products to protect data integrity, confidentiality, and availability while ensuring compliance with industry standards.

Key Measures Implemented:

- Secure Boot – Only authenticated firmware is allowed to run.

- Firmware Signing & Verification – Prevents malicious or unauthorized firmware updates.

- End-to-End Encryption – TLS 1.3 / DTLS for secure data transmission.

- Mutual Authentication – Both device and server authenticate each other before communication.

- VPN or Private APN – Used for sensitive industrial and enterprise deployments.

- Data-at-Rest Encryption – AES-256 encryption for onboard storage and databases.

- API Authentication & Authorization – Implemented via OAuth 2.0 and JWT tokens.

- Cloud IAM (Identity & Access Management) – Restricts access to IoT data.

- Intrusion Detection Systems (IDS) – Specialized for IoT protocols such as MQTT and CoAP.

- Secure OTA Updates – Safely push patches and firmware updates throughout the device lifecycle.

Deep Hans Aroraa: For stable IoT device manufacturing aligned with global quality standards, JSD employs the following advanced testing and simulation technologies:

- Hardware-in-the-Loop (HIL) Testing

- Environmental & Stress Testing (Thermal Cycling, Shock Testing, Vibration, Humidity, and Corrosion Testing)

- RF & Wireless Performance Testing

- Functional Qualification Testing

- Protocol & Interoperability Testing (MQTT, TCP/IP, HTTP, etc.)

- Cybersecurity Penetration Testing

- Power Consumption & Battery Life Simulation

- EMC/EMI Compliance Testing

- Field Trials & Operational Evaluation

Deep Hans Aroraa: Data plays a vital role in optimizing manufacturing operations. JSD has implemented ERP systems for production and inventory management, along with a Manufacturing Execution System (MES), enabling data capture from multiple sources such as machines, operators, environmental conditions, materials, and quality inspections.

Data Analytics Applications:

- Bottleneck Analysis (Time-Series) – Identify and resolve process slowdowns.

- Predictive Maintenance – Anticipate equipment failures before they occur.

- Process Parameter Optimization – Fine-tune machine settings for maximum efficiency.

- Dynamic Scheduling – Adjust production plans in real time in response to changing conditions.

- Energy Optimization – Reduce energy consumption without impacting output.

Quality Improvement with AI:

- AI-Powered Visual Inspection – Detect the smallest defects in real time.

- In-Process Quality Prediction – Forecast potential quality issues before final assembly.

- Defect Root Cause Analysis – Pinpoint exact defect causes.

- Supplier Quality Analytics – Correlate incoming material quality with production outcomes.

These initiatives deliver better insights, higher yields, greater reliability, improved efficiency, and significant cost savings.

ELE Times: How is JSD integrating machine vision systems or AI-driven defect detection into its quality control processes?

Deep Hans Aroraa: JSD Optical Inspection & AI-Driven Quality Control Workflow

- Inward Material Inspection – Optical systems check incoming components for dimensional accuracy, labeling, and surface defects.

- PCBA Solder Paste Inspection (SPI) – Measures solder paste volume, height, and alignment before placement to ensure optimal solder joints.

- Pre-AOI – Verifies part type, polarity, and position after component placement.

- Post-AOI – Detects solder bridging, tombstoning, missing components, and misalignments after reflow soldering.

- Final Product Digital Inspection (PDI) – High-resolution imaging and visual inspection for cosmetic finish, assembly quality, and labeling.

AI-Enhanced Workflow:

- Image Capture – AOI systems record detailed PCB images.

- AI Analysis – Detects solder defects, missing components, and misalignments with high precision.

- Defect Logging – Records in MES with batch and machine data.

- Real-Time Alerts – Flags issues immediately for rework.

- Continuous Learning – Uses stored defect images for AI retraining and root cause prevention.

Deep Hans Aroraa: Embedded software development is central to JSD’s product strategy, serving as the intelligence that transforms hardware into connected, adaptive, and differentiated products.

It defines core functionality, manages connectivity (Wi-Fi, Bluetooth, Zigbee, LoRa, 5G), and ensures security through secure boot, encryption, authentication, and OTA updates. Embedded software also enables scalability and future-proofing, allowing features and compliance updates without hardware redesign.

Additionally, it powers edge intelligence, processing data locally for faster response and reduced bandwidth, and governs data collection and transmission—critical for analytics, predictive maintenance, and new revenue streams.

ELE Times: How does JSD leverage digital twins or virtual prototyping before moving to full-scale manufacturing?

Deep Hans Aroraa: JSD uses digital twins and virtual prototyping to reduce risk, improve design quality, and accelerate time-to-market by creating virtual replicas for simulation and validation before physical production.

- Design Validation – Identify and fix flaws before building prototypes.

- Process Optimization – Simulate assembly lines and workflows to remove bottlenecks.

- Performance Testing – Model real-world stress, thermal, and EMI conditions.

- IoT-Driven Insights – Use sensor data for predictive improvements.

- Cost & Sustainability – Test materials and processes for efficiency and eco-impact.

- Training – Prepare teams in a simulated environment before production ramp-up.

The post How JSD Electronics Uses AI and Machine Vision to Deliver Zero-Defect Electronics appeared first on ELE Times.

India’s Electronics Production Climbs to $133 Billion, Export Growth Accelerates

Commerce and Industry Minister Piyush Goyal has said that India’s electronics production has more than quadrupled in the last decade, from $31 billion in 2014-15 to $133 billion in 2024-25. He noted that this growth has been accompanied by a sharp rise in exports, which increased by more than 47% in Q1 FY26 over the same quarter last year.

According to data from the India Cellular and Electronics Association (ICEA), electronics exports were $12.4 billion in Q1 FY26, compared with $8.43 billion in Q1 FY25. Riding on this momentum, the industry body hopes exports will reach $46–50 billion for the fiscal year.

Goyal further elaborated on the long-term transformation seen in the sector. He said, “The mobile phone industry has essentially been at the heart of this journey. A decade ago, India was largely an importer of mobile phones. Today, we have become a global hub for mobile manufacturing and exports. Mobile exports themselves grew 55 per cent in Q1 FY26, rising from $4.9 billion in the same quarter last year to an estimated $7.6 billion.” Non-mobile exports grew 36%, from $3.53 billion to an estimated $4.8 billion.

These include solar modules, networking equipment, charger adapters, and electronic components, widening India’s export portfolio.

He added that electronics has not only strengthened exports but also created employment opportunities on a large scale, with the support of policy initiatives such as the Phased Manufacturing Programme (PMP), Production Linked Incentive (PLI) schemes, and close industry-state coordination.

Electronics exports from India have seen sustained double-digit growth across multiple product segments and are set to reach a landmark in FY26, placing the country firmly in global supply chains.

The post India’s Electronics Production Climbs to $133 Billion, Export Growth Accelerates appeared first on ELE Times.

UCLA and Broadcom Team Up to Craft Wafer-Scale Unidirectional Imager

Some vintage memory chips

submitted by /u/Practical-Friend-960

I uploaded a sketch to a bare ESP32 chip using an ESP32 dev board as the programmer!

This is just a blink sketch, but my projects will become miniaturized.

Keysight Unveils EMI Test Receiver with Real-Time, 1 GHz Bandwidth

📚 Enrollment is now open for the Basic NMT 2026 (National Multi-Subject Test) preparation course for students in grades 9, 10, and 11!

We look forward to welcoming students to our preparatory courses. This is an opportunity to discover your learning abilities, catch up on what you have missed, fill gaps in your knowledge, or prepare for the exam.

Broke MoCA II: This time, the wall wart got zapped, too

Back in 2016, I did a teardown of Actiontec’s ECB2200 MoCA adapter, which had fried in response to an EMP generated by a close-proximity lightning bolt (or, more precisely, a cloud-to-cloud spark; or was it an arc? Or are they the same thing?). As regular readers may recall, this was the second time in as many years that electronics equipment had either required repair or ended up in the landfill for such a reason (although the first time, the lightning bolt had actually hit the ground). And as those same regular readers may already be remembering, last August it happened again.

I’ve already shared images and commentary with you of the hot tub circuitry that subsequently required replacement, as well as the three-drive NAS, the two eight-port GbE switches, and the five-port GbE switch (one, not two, as originally feared). And next month, I plan to show the insides of the three-for-three CableCard receiver that also met its demise in this latest lightning-related incident. But this time, I’ll dissect Actiontec’s MoCA adapter successor, the ECB2500C:

I’d already mentioned the ECB2500C a decade back, actually:

The ECB2500C is the successor to the ECB2200; both generations are based on MoCA 1.1-supportive silicon, but the ECB2500C moves all external connections to one side of the device and potentially makes other (undocumented) changes.

And as was the case back in 2016, the adapter in the master guest bedroom was the MoCA network chain link that failed again this time. Part of the reason why MoCA devices keep dying, I think, is due to their inherent nature. Since they convert between Ethernet and coax, there are two different potential “Achilles Heels” for incoming electromagnetic spikes. Plus, the fact that coax routes from room to room via cable runs attached to the exterior of the residence doesn’t help. And then there’s the fact that the guest bedroom’s location is in closest proximity (on that level, at least) to the Continental Divide, from whence many (but not all) storms source.

This time, however, the failure was more systemic than before. The first thing I did was to test the wall wart’s DC output using my multimeter:

Dead! Hey…maybe the adapter itself is still functional? I grabbed the spare ECB2500C’s wall wart, confirmed it was functional, plugged it into this adapter and…nope, nothing lit up on the front panel, so the adapter’s dead, too. Oh well, you’ll get a two-for-one teardown today, then!

Let’s start with the wall wart, then, as usual accompanied by a 0.75″ (19.1 mm) diameter U.S. penny for size comparison purposes:

Specs n’ such:

Time to break out the implements of destruction again (vise not shown this time):

Progress…

Success!

No “potting” in this case; the PCB pulls right out:

The more interesting side of the PCB, both in penny-inclusive and closer-up perspectives:

The same goes for the more boring (unless you’re into thick traces, that is) side:

And now for some side views:

I didn’t see anything obviously scorched, bulged, or otherwise mangled; did you? Let me know in the comments if I missed something! Now on to the adapter, measuring 1.3 x 3.8 x 5.5 in. (33 x 97 x 140 mm). I double-checked those dimensions with my tape measure and initially did a double-take until I realized that the published width included the two coax connectors poking out the back. Subtract 5.8” for the actual case width:

You may have already noticed the four screw heads, one in each corner, in the earlier underside shot. You know what comes next, right?

That was easy!

The PCB then (easily, again) lifts right out of the remaining top half of the case:

Light pipes for the LEDs, which we’ll presumably see once we flip over the PCB:

Let’s stick with this bottom side for now, though:

The lone component of note is a Realtek RTL8201EL Fast Ethernet PHY. The mess of passives below it is presumably for the system processor at that location on the other side of the PCB:

Let’s see if I’m right:

Yep, it’s Entropic’s EN2510 single-chip MoCA controller, at lower left in the following photo. To its left are the aforementioned LEDs. At upper left is an Atmel (now Microchip Technology) ATMEGA188PA 8-bit AVR microcontroller. And at upper right, conveniently located right next to its companion Ethernet connector, is a Magnetic Communications (MAGCOM) HS9001 LAN transformer:

Switching attention to the other half of the PCB’s upper side, I bet you’re dying to see what’s underneath those “can” and “cage” lids, aren’t you? Me, too:

Your wish is my command!

As with the wall wart, and unlike last time when a scorched soldered PCB pad pointed us to the likely failure point, I didn’t notice anything obviously amiss with the adapter, either. It makes me wonder, in fact, whether either the coax or Ethernet connector was the failure-mechanism entry point this time, and whether the failure happened in conjunction with last August’s lightning “event” or before. The only times I would ever check the MoCA adapter in the master guest bedroom were when…umm…we were prepping for overnight guests to use that bedroom.

Granted, an extinguished “link active” light at the mated MoCA adapter on the other end, in the furnace room, would also be an indirect tipoff, but I can’t say with certainty that I regularly glanced at that, either. Given that the wall wart is also dead, I wonder if its unknown-cause demise also “zapped” the power regulation portion of the adapter’s circuitry, located at the center of its PCB’s upper side, for example. Or maybe the failure sequence started at the adapter and then traveled back to the wall wart over the conjoined power tether? Let me know your theories, as well as your broader thoughts on what I’ve covered today, in the comments!

—Brian Dipert is the Editor-in-Chief of the Edge AI and Vision Alliance, and a Senior Analyst at BDTI and Editor-in-Chief of InsideDSP, the company’s online newsletter.

Related Content

- Teardown: MoCA adapter succumbs to lightning strike

- Devices fall victim to lightning strike, again

- Lightning strike becomes EMP weapon

- Lightning strikes…thrice???!!!

- A teardown tale of two not-so-different switches

- Dissecting (and sibling-comparing) a scorched five-port Gigabit Ethernet switch

- LAN security for MoCA and powerline

The post Broke MoCA II: This time, the wall wart got zapped, too appeared first on EDN.

Silicon 100: Chiplet work spanning interconnect PHYs to smart substrates

While the Silicon 100 report was being compiled and curated to profile the most promising startups in the semiconductor industry in 2025, two prominent chiplet upstarts had already been acquired. First, Qualcomm announced its acquisition of chiplet interconnect developer Alphawave Semi in the second week of June 2025.

Nearly a month later, Tenstorrent snapped up Blue Cheetah Analog Design, another supplier of die-to-die interconnect IP. These two deals highlight the red-hot nature of the chiplet world and how this new multi-die technology landscape is emerging despite geopolitical headwinds.

In this year’s Silicon 100 report, there are eight startup companies associated with chiplet design and manufacturing work. In the chiplet design realm, DreamBig Semiconductor develops chiplet platforms and high-performance accelerator solutions for 5G, artificial intelligence (AI), automotive, and data center markets. Its core technology includes a chiplet hub with high-bandwidth memory (HBM).

Founded by Sohail Syed in 2019, the San Jose, California-based chiplet designer is using Samsung Foundry’s SF4X 4-nm process technology and is backed by the Samsung Catalyst Fund and by investment from the Sutardja family.

Eliyan, another well-known chiplet firm, offers PHY interconnect IP that enables high-bandwidth, low-latency, and power-efficient communication between chiplets on both silicon and organic substrates. The company, co-founded in 2021 by serial entrepreneur Ramin Farjadrad, completed the tapeout of its NuLink PHY in a ×64 UCIe package module on Samsung Foundry’s SF4X 4-nm manufacturing process in November 2024.

Figure 1 The die-to-die PHY solution for chiplet interconnect achieves 64 Gbps/bump. Source: Eliyan

While design startups are mostly engaged in die-to-die interconnect and related aspects, the chiplet manufacturing realm seems far more expansive and exciting. Take AlixLabs, for instance, a 2019 startup spun off from Sweden’s Lund University. It specializes in atomic layer etch (ALE) equipment to develop a technique called ALE pitch splitting (APS), which enables atomic-scale precision in semiconductor manufacturing at dimensions below 20 nm.

Figure 2 The ALE-based solutions perform atomic-level processing to reduce the number of process steps required to manufacture a chip while increasing throughput. Source: AlixLabs

Then there is Black Semiconductor, developing manufacturing methods for back-end-of-line use of graphene to create optical chip-to-chip connections. The company is currently building a manufacturing facility at its new headquarters in Aachen, Germany. FabONE is expected to be operational in 2026, with pilot production scheduled to start in 2027 and full-volume production by 2029.

Figure 3 FabONE will be the world’s first graphene photonics fab. Source: Black Semiconductor

Next, Chipletz, a fabless substrate startup, is working on chiplet-based packaging. The company was established in 2016 as an activity within AMD and spun off in 2021. Its smart substrate enables the heterogeneous integration of multiple ICs within a single package and, by providing die-to-die interconnects and high-speed I/O, eliminates the need for a silicon interposer. It also supports different voltage domains from a single supply, outperforming traditional multichip modules and system-in-package (SiP) solutions.

Silicon Box is another semiconductor packaging upstart featured in the Silicon 100 report; it specializes in the production of multi-die components based on chiplet architecture. It currently operates a factory and R&D facility in Singapore and has raised $3.5 billion to build a semiconductor assembly and testing facility in Piedmont, Italy.

Silicon 100 offers a glimpse into the startup ecosystem of 2025 and beyond, highlighting firms that work on various aspects of chiplet design and manufacturing. And their potential is inherently intertwined with another 2025 star: AI and data center semiconductors. One common factor that both chiplets and AI semiconductors share is their association with advanced packaging technology.

Find out more about upstarts focusing on chiplet design and manufacturing in “Silicon 100: Startups to Watch in 2025” by downloading a copy of the report.

Related Content

- The Age of Chiplets is Upon Us

- Startup Aims to Improve Chiplet Packaging

- Chiplets diary: Three anecdotes recount design progress

- Silicon Box to Invest €3.2B in a Semiconductor Fab in Italy

- Eliyan Breaks Chiplet Memory Wall With Standard Packaging

The post Silicon 100: Chiplet work spanning interconnect PHYs to smart substrates appeared first on EDN.

Microscope shots of EPROMs left to us at work by the ancients.

submitted by /u/FeedanSneed

Top 10 Deep Learning Algorithms

Deep learning algorithms are a category of machine learning methods that draw inspiration from the workings of the human brain. They process data with artificial neural networks made up of layers of interconnected nodes, or neurons. Deep learning algorithms are the driving force behind modern artificial intelligence: they enable machines to learn from vast amounts of data, recognize patterns, and make decisions with minimal human intervention.

Deep learning algorithms are usually grouped according to the neural network architecture they employ:

- Feedforward neural networks (FNNs): The most basic architecture, in which data flows in a single direction, from input to output.

- Convolutional neural networks (CNNs): Specialized for analyzing images and videos.

- Recurrent neural networks (RNNs): Designed to process sequential data, such as language or time series.

- Autoencoders: Used for dimensionality reduction and unsupervised learning.

- Generative models (such as GANs and VAEs): Generate new data instances.

- Graph neural networks (GNNs): Operate on graph-structured data.

- Transformers: Use attention mechanisms and have transformed natural language processing (NLP) tasks.

Examples of deep learning applications:

- Image classification: CNNs are used for facial recognition and medical imaging.

- Speech recognition: RNNs and LSTMs are used in virtual assistants.

- Text generation: Chatbots and translation systems use transformers such as GPT.

- Anomaly detection: Autoencoders are used for fraud detection.

- Data synthesis: GANs produce lifelike images and videos.

Top 10 deep learning algorithms:

- Convolutional Neural Networks (CNNs)

Convolutional neural networks process grid-like data, such as images, with convolutional layers that detect spatial hierarchies of patterns, from edges up to textures. They are widely used in image recognition applications ranging from facial recognition to tumor detection in medical imaging and object detection in autonomous vehicles.
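To make the convolution step concrete, here is a minimal pure-Python sketch, not a library implementation. The names `conv2d` and `relu_map` and the hand-picked vertical-edge kernel are assumptions for illustration; a real CNN learns its kernels during training and runs on optimized frameworks. Like most deep learning libraries, the sketch actually computes a cross-correlation, which is what those libraries call "convolution".

```python
def conv2d(image, kernel):
    """Valid (no padding, stride 1) 2D cross-correlation of an image with a kernel."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Slide the kernel over the image and sum the element-wise products
            acc = 0.0
            for di in range(kh):
                for dj in range(kw):
                    acc += image[i + di][j + dj] * kernel[di][dj]
            row.append(acc)
        out.append(row)
    return out

def relu_map(fmap):
    """Apply ReLU to every value of a feature map."""
    return [[max(0.0, v) for v in row] for row in fmap]

# A 4x4 image: dark left half (0), bright right half (1)
image = [[0, 0, 1, 1] for _ in range(4)]
# Hypothetical hand-picked vertical-edge detector (a trained CNN would learn such weights)
kernel = [[-1, 1],
          [-1, 1]]

feature_map = relu_map(conv2d(image, kernel))
print(feature_map)  # [[0.0, 2.0, 0.0], [0.0, 2.0, 0.0], [0.0, 2.0, 0.0]]
```

The feature map responds only in the column where dark meets bright, which is the kind of low-level edge feature that deeper convolutional layers combine into textures and shapes.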

- Recurrent Neural Networks (RNNs)

Recurrent neural networks are designed to work with sequences of data, using loops in the network to keep a memory (a hidden state) of past inputs. This makes them well suited to tasks such as speech recognition, time-series forecasting (e.g., stock prices), and natural language processing, where context from previous data points is essential.
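The "loop" can be illustrated with a single-unit Elman-style RNN. This is a minimal sketch under stated assumptions: the function name `rnn_forward`, the scalar hidden state, and the weight values are hypothetical choices for illustration, not trained parameters.

```python
import math

def rnn_forward(inputs, w_x, w_h, b, h0=0.0):
    """Run a single-unit Elman RNN over a sequence and return every hidden state."""
    h = h0
    states = []
    for x in inputs:
        # The new state mixes the current input with the previous state (the recurrent loop)
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states

# One nonzero input followed by zeros: the hidden state still "remembers" it for a while
states = rnn_forward([1.0, 0.0, 0.0], w_x=1.0, w_h=0.5, b=0.0)
```

The hidden state stays nonzero after the input drops to zero, but it shrinks at every step; that fading memory is the limitation LSTMs were designed to address.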

- Long Short-Term Memory Networks (LSTMs)

LSTMs are specialized RNNs that can learn longer-term dependencies and mitigate the vanishing gradient problem. They are well suited to applications like machine translation, predictive text input, and chatbots, where understanding the broader context of a conversation or an incoming sentence is advantageous.
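The gating that lets an LSTM hold information can be sketched with a single scalar unit. This follows the standard LSTM cell equations (forget, input, and output gates plus a candidate value), but the function name `lstm_step`, the parameter dictionary layout, and the hand-set weights are hypothetical and chosen only to show a value being stored and retained.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h_prev, c_prev, p):
    """One step of a single-unit LSTM; p maps each gate to (w_x, w_h, bias)."""
    f = sigmoid(p["f"][0] * x + p["f"][1] * h_prev + p["f"][2])    # forget gate
    i = sigmoid(p["i"][0] * x + p["i"][1] * h_prev + p["i"][2])    # input gate
    o = sigmoid(p["o"][0] * x + p["o"][1] * h_prev + p["o"][2])    # output gate
    g = math.tanh(p["g"][0] * x + p["g"][1] * h_prev + p["g"][2])  # candidate value
    c = f * c_prev + i * g   # additive cell update: gradients can flow through c
    h = o * math.tanh(c)
    return h, c

# Hypothetical hand-set parameters
params = {
    "f": (0.0, 0.0, 5.0),    # forget gate biased open: keep the cell state
    "i": (10.0, 0.0, -5.0),  # input gate opens only when x = 1
    "g": (3.0, 0.0, 0.0),    # candidate value to store
    "o": (0.0, 0.0, 5.0),    # output gate biased open
}

h, c = 0.0, 0.0
for x in [1.0] + [0.0] * 20:   # store a value once, then 20 steps of zero input
    h, c = lstm_step(x, h, c, params)
```

After 20 steps of zero input, the cell state still holds most of the stored value, because the forget gate multiplies it by roughly 0.993 per step instead of squashing it through a nonlinearity each time.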

- Generative Adversarial Networks (GANs)

GANs consist of two networks, a generator and a discriminator, that compete against each other: the generator produces synthetic data, and the discriminator tries to tell it apart from real data, which pushes the generator toward increasingly realistic output. These models are used to generate lifelike images, create deepfake videos, produce art, and augment datasets to improve the training of other models.
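The competition is usually expressed as two opposing losses. The sketch below computes the standard binary cross-entropy discriminator loss and the common "non-saturating" generator loss from discriminator outputs (probabilities that a sample is real). The function names are hypothetical, and the networks that would produce these probabilities are left out for brevity.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Mean BCE over a batch: D is rewarded for D(x) -> 1 on real and D(G(z)) -> 0 on fake."""
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return -(real_term + fake_term)

def generator_loss(d_fake):
    """Non-saturating generator loss: G is rewarded when D(G(z)) -> 1."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)

# A discriminator that cannot tell real from fake outputs 0.5 everywhere
confused = discriminator_loss([0.5, 0.5], [0.5, 0.5])   # equals 2 * ln 2
```

In training, the two losses are minimized alternately: a discriminator update lowers `discriminator_loss`, and a generator update lowers `generator_loss`, which can only happen by making fake samples look more real to the discriminator.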

- Autoencoders

Autoencoders are unsupervised learning models that compress input data into a lower-dimensional representation and then reconstruct the input from that representation. They are used for anomaly detection in cybersecurity, image denoising, and dimensionality reduction for visualization or further analysis.
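Anomaly detection with an autoencoder typically flags inputs that the model reconstructs poorly. As a minimal sketch, assume a tiny linear autoencoder has already been trained on 2-D data lying near the line y = x, so its 1-D code points along that direction; the weights `W`, the helper names, and the threshold of 0.1 are all hypothetical.

```python
import math

# Hypothetical "trained" weights: the 1-D code lies along the direction y = x
W = (1 / math.sqrt(2), 1 / math.sqrt(2))

def encode(p):
    """Compress a 2-D point to a 1-D code."""
    return W[0] * p[0] + W[1] * p[1]

def decode(z):
    """Reconstruct a 2-D point from the 1-D code."""
    return (W[0] * z, W[1] * z)

def reconstruction_error(p):
    """Squared distance between a point and its reconstruction."""
    r = decode(encode(p))
    return (p[0] - r[0]) ** 2 + (p[1] - r[1]) ** 2

def is_anomaly(p, threshold=0.1):
    return reconstruction_error(p) > threshold

normal_point = (2.0, 2.0)    # resembles the training data: reconstructed almost exactly
odd_point = (2.0, -2.0)      # unlike the training data: reconstructed as (0, 0)
```

Points that resemble the training data pass through the bottleneck almost unchanged, while unusual points lose information and produce a large error, so the threshold separates "normal" from "anomalous".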

- Deep Belief Networks (DBNs)

DBNs are layered networks built from stacked restricted Boltzmann machines (RBMs) that learn to represent data hierarchically. They are useful for tasks like image and speech recognition, where uncovering hidden patterns and features in large datasets is essential.
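The stacking idea can be sketched as an upward pass through binary RBMs, where each RBM's hidden-unit probabilities become the next RBM's input. This is an illustrative sketch only: the weights are hypothetical and untrained, the helper names are assumptions, and the layer-by-layer training procedure (typically contrastive divergence) is not shown.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def rbm_hidden_probs(v, W, b_h):
    """Binary RBM: p(h_j = 1 | v) = sigmoid(b_h[j] + sum_i v[i] * W[i][j])."""
    return [sigmoid(b_h[j] + sum(v[i] * W[i][j] for i in range(len(v))))
            for j in range(len(b_h))]

def dbn_upward_pass(v, rbms):
    """Greedy stack: each RBM's hidden probabilities feed the next RBM as its visible input."""
    x = v
    for W, b_h in rbms:
        x = rbm_hidden_probs(x, W, b_h)
    return x

# Hypothetical, untrained weights: 4 visible units -> 3 hidden -> 2 hidden
rbm1 = ([[0.5, -0.2, 0.1],
         [0.3, 0.8, -0.5],
         [-0.6, 0.1, 0.9],
         [0.2, -0.4, 0.3]], [0.0, 0.0, 0.0])
rbm2 = ([[1.0, -1.0],
         [0.5, 0.5],
         [-0.3, 0.7]], [0.1, -0.1])

features = dbn_upward_pass([1, 0, 1, 1], [rbm1, rbm2])
```

Each layer re-describes the previous layer's features, which is how a DBN builds a hierarchical representation of its input.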

- Variational Autoencoders (VAEs)

VAEs are a probabilistic extension of autoencoders that learn latent representations of data with added regularization. They are commonly used in drug discovery to generate new molecules, as well as for handwriting synthesis, speech synthesis, and data compression that retains important features.
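Two ingredients distinguish a VAE from a plain autoencoder: the encoder outputs a mean and log-variance per latent dimension, a latent vector is sampled with the "reparameterization trick", and a KL-divergence term regularizes the latent distribution toward a standard normal. The sketch below shows only those two pieces; the function names are hypothetical, and in a real framework with automatic differentiation the reparameterization is what lets gradients reach the encoder outputs.

```python
import math
import random

def reparameterize(mu, log_var, rng):
    """Sample z = mu + sigma * eps with eps ~ N(0, 1), per latent dimension."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]

def kl_to_standard_normal(mu, log_var):
    """KL(N(mu, sigma^2) || N(0, 1)), summed over latent dimensions: the VAE regularizer."""
    return -0.5 * sum(1 + lv - m * m - math.exp(lv) for m, lv in zip(mu, log_var))

rng = random.Random(0)
z = reparameterize([0.0, 1.0], [0.0, -1.0], rng)   # one sampled latent vector
```

The KL term is zero when the encoder outputs exactly a standard normal and grows as the latent distribution drifts away from it, which keeps the latent space smooth enough to sample new data from.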

- Graph Neural Networks (GNNs)

GNNs are designed to work with graph-structured data, capturing relationships between nodes. They are especially useful in social network analysis, recommendation systems, and fraud detection, where understanding the relationships between entities is key.
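Most GNNs are built from rounds of "message passing", in which each node updates its features from its neighbors' features. Below is a minimal sketch of one such layer with mean aggregation and ReLU, assuming scalar node features and fixed, hypothetical weights `w_self` and `w_neigh` (a trained GNN would learn weight matrices instead).

```python
def message_passing(features, neighbors, w_self=1.0, w_neigh=1.0):
    """One GNN layer: combine each node's feature with the mean of its neighbors' features."""
    updated = {}
    for node, x in features.items():
        nbrs = neighbors.get(node, [])
        agg = sum(features[n] for n in nbrs) / len(nbrs) if nbrs else 0.0
        updated[node] = max(0.0, w_self * x + w_neigh * agg)   # ReLU activation
    return updated

# A path graph a - b - c; only node "a" starts with a nonzero feature
neighbors = {"a": ["b"], "b": ["a", "c"], "c": ["b"]}
h0 = {"a": 1.0, "b": 0.0, "c": 0.0}
h1 = message_passing(h0, neighbors)   # information reaches b (one hop)
h2 = message_passing(h1, neighbors)   # information reaches c (two hops)
```

Stacking k layers lets each node see its k-hop neighborhood, which is how a GNN captures relationships that are not visible from any single node's own features.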

- Transformers

Transformers rely on attention mechanisms to weigh the relative importance of different parts of the input data. This has driven advances in NLP tasks such as translation, summarization, and question answering, and has also led to their use in vision tasks like image captioning and object detection.
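The core operation is scaled dot-product attention, softmax(QKᵀ/√d_k)·V. The pure-Python sketch below computes it for small lists of vectors so the weighting is easy to inspect; the function names are hypothetical, and real transformers add learned projections, multiple heads, and masking on top of this.

```python
import math

def softmax(xs):
    m = max(xs)                              # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention, one query at a time; returns (outputs, weights)."""
    d_k = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k)
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        w = softmax(scores)
        weights.append(w)
        # Output is the attention-weighted average of the value vectors
        outputs.append([sum(wj * v[c] for wj, v in zip(w, values))
                        for c in range(len(values[0]))])
    return outputs, weights

Q = [[1.0, 0.0]]
K = [[1.0, 0.0], [0.0, 1.0]]
V = [[10.0, 0.0], [0.0, 10.0]]
out, w = attention(Q, K, V)   # the query matches the first key, so it gets more weight
```

Because the weights come from a softmax, each output is a convex combination of the value vectors, with the most relevant inputs contributing most.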

- Multilayer Perceptron (MLP)

Multilayer perceptrons (MLPs) are feedforward neural networks with one or more hidden layers of neurons between the input and output layers. They are suited for handwritten digit recognition, fraud detection, and customer churn prediction, where structured data and non-linear relationships have to be modeled.
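A classic illustration of why the hidden layer matters is XOR, a non-linear function that no single-layer perceptron can represent. The sketch below runs a generic MLP forward pass with hand-set weights (hypothetical, not trained) for a 2-2-1 network with ReLU hidden units; the helper names are assumptions for this example.

```python
def relu(v):
    return [max(0.0, x) for x in v]

def identity(v):
    return v

def dense(x, W, b):
    """Fully connected layer; W holds one row of input weights per output neuron."""
    return [sum(xi * wi for xi, wi in zip(x, row)) + bj for row, bj in zip(W, b)]

def mlp_forward(x, layers):
    """Feed the input through each (weights, biases, activation) layer in order."""
    for W, b, act in layers:
        x = act(dense(x, W, b))
    return x

# Hand-set weights: hidden units compute relu(a + b) and relu(a + b - 1)
XOR_LAYERS = [
    ([[1.0, 1.0], [1.0, 1.0]], [0.0, -1.0], relu),   # hidden layer
    ([[1.0, -2.0]], [0.0], identity),                # output layer
]

truth_table = {(a, b): mlp_forward([float(a), float(b)], XOR_LAYERS)[0]
               for a in (0, 1) for b in (0, 1)}
```

The second hidden unit switches on only when both inputs are 1 and cancels the first unit's output, producing the non-linear decision boundary XOR requires.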

Conclusion:

Recent advances in AI are powered by deep learning algorithms. Each algorithm has its own strengths and applications: CNNs analyze images, for instance, while transformers process human language.

As AI is adopted in health sciences, financial management, autonomous systems, and content creation, knowledge of these top 10 deep learning algorithms becomes essential for practitioners and researchers alike.

The post Top 10 Deep Learning Algorithms appeared first on ELE Times.