ELE Times

Microchip Technology Expands its India Footprint with a New Office Facility in Bengaluru

Microchip Technology has expanded its India footprint with the acquisition of 1.72 lakh square feet (16,000 square meters) of premium office space at the Export Promotion Industrial Park (EPIP) Zone in Whitefield, Bengaluru. This move highlights the company’s continued focus on strengthening its engineering and design capabilities in the region.

The facility will serve as a strategic extension of Microchip’s Bengaluru Development Center and can accommodate more than 3,000 employees over the next 10 years. It is designed to support the company’s growing workforce and future hiring plans, encourage stronger collaboration between global and regional teams, and give those teams modern infrastructure for advanced research and development.

Commenting on the acquisition, Srikanth Settikere, vice president and managing director of Microchip’s India Development Center, said, “At Microchip, growth is about creating opportunities as much as scaling operations. With India contributing to nearly 20% of global semiconductor design talent, our new Bengaluru facility will sharpen our advanced IC design focus and strengthen our engagement in one of the country’s most dynamic technology hubs.”

Steve Sanghi, President and CEO of Microchip, added, “We recently celebrated Microchip’s 25th anniversary in India and this office acquisition is a testament to our commitment in India. We believe our investments in the region will enable us to both benefit from and contribute to the country’s increasingly important role in the global semiconductor industry.”

The new site is Microchip’s second facility in Bengaluru and complements its presence in Hyderabad, Chennai, Pune and New Delhi, reinforcing its long-term commitment to product development, business enablement and talent growth in India. With this expansion, the company further positions itself to deliver innovative semiconductor solutions across industrial, automotive, consumer, aerospace and defense, communications and computing markets.

The post Microchip Technology Expands its India Footprint with a New Office Facility in Bengaluru appeared first on ELE Times.

How Quantum Sensors and Post-Moore Measurement Tech Are Rewriting Reality

When the chip industry stopped promising effortless doublings every two years, engineers didn’t panic; they changed the problem. Instead of forcing ever-smaller transistors to do the same old sensing and measurement jobs, the field has begun to ask a bolder question: what if measurement itself were redesigned from first physical principles? That shift, from “more of the same” to “different physics, different stack”, is where the current revolution lives.

Today’s story is not about one device or one lab; it is about a system-level pivot. Government labs, hyperscalers, deep-tech start-ups and legacy instrument makers are converging around sensors that read quantum states, neuromorphic edge processors that pre-digest raw physical signals, and materials-level breakthroughs (2D materials, diamond colour centres, integrated photonics) that enable ultra-sensitive transduction. The result is a pipeline of measurement capabilities that look less like incremental sensor upgrades and more like new senses for machines and humans.

The opening act: credibility and capability

Two facts anchor this moment. First, quantum measurement is leaving the lab and becoming engineering work. Companies are reporting sustained gains in fidelity and performance, enabling practical devices rather than one-off demonstrations. Quantinuum’s recent announcements of new trapped-ion systems and record fidelities illustrate the industry’s transition from discovery to engineering at scale.

Second, established compute and platform players are doubling down on quantum ecosystems, not because they expect instant universal quantum computers, but because quantum sensing and hybrid quantum-classical workflows have near-term value. Nvidia’s move to open a quantum research lab in Boston is a concrete example of big tech treating quantum as part of an integrated future compute stack. As Jensen Huang put it when announcing the initiative, the work “reflects the complementary nature of quantum and classical computing.”

The technologies: what’s actually being built

Here are the concrete innovations that are moving from prototype to product:

- Portable optical atomic clocks. Optical lattice clocks have long been the domain of national labs; recent work shows designs that ditch cryogenics and complex laser trees, opening the door to compact, fieldable clocks that could replace GPS time references in telecom, finance, and navigation. (NIST and research groups published simplified optical clock designs in 2024.)

- Diamond (NV-centre) magnetometry. The nitrogen-vacancy (NV) centre in diamond has matured as a practical transducer: ensembles and Faraday-effect architectures now push magnetometry into the femto- to picotesla regime for imaging and geophysics. Recent preprints and lab advances show realistic sensitivity improvements that industry can productize for magnetoencephalography (MEG), non-destructive testing, and subsurface exploration.

- Atom-interferometric gravimetry and inertial sensing. Cold-atom interferometers are being transformed into compact gravimeters and accelerometers suitable for navigation, resource mapping, and structural monitoring — systems that enable GPS-independent positioning and subsurface mapping. Market and technical reports point to rapid commercial interest and growing device deployments.

- Quantum photonics: entanglement and squeezing used in imaging and lidar. By borrowing quantum optical tricks (squeezed light, correlated photons), new imagers and lidar systems can beat the classical shot-noise limit and perform in low-light and high-clutter environments, a direct win for autonomous vehicles, remote sensing, and biomedical imaging.

- Edge intelligence + hybrid stacks. The pragmatic path to adoption is hybrid: quantum-grade front-ends feeding neural or neuromorphic processors at the edge that perform immediate anomaly detection or data compression before sending distilled telemetry to cloud AI. McKinsey and industry analysts argue that this hybrid model unlocks near-term value while the pure quantum stack matures. “Quantum sensing’s untapped potential” is exactly this: integrate, don’t wait.
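The hybrid pattern in the last item can be sketched as an edge-side filter that keeps a rolling baseline of raw sensor readings and forwards only anomalous samples upstream. This is a minimal sketch under stated assumptions: the class name `EdgeAnomalyFilter`, the 50-sample window and the 4-sigma threshold are hypothetical illustration choices, not taken from any vendor or from the analysts cited above; a real quantum-sensor front-end would need calibrated, sensor-specific models.

```python
from collections import deque
import statistics


class EdgeAnomalyFilter:
    """Hypothetical edge-side filter: keeps a rolling baseline of raw readings
    and flags samples that deviate strongly from it (simple z-score test)."""

    def __init__(self, window: int = 50, threshold_sigma: float = 4.0):
        self.window = deque(maxlen=window)  # most recent "normal" samples
        self.threshold_sigma = threshold_sigma

    def process(self, value: float) -> bool:
        """Return True if `value` should be forwarded upstream as an anomaly."""
        is_anomaly = False
        if len(self.window) >= 10:  # need a minimal baseline before judging
            mean = statistics.fmean(self.window)
            std = statistics.pstdev(self.window)
            if std > 0 and abs(value - mean) > self.threshold_sigma * std:
                is_anomaly = True
        if not is_anomaly:
            # Only normal samples update the baseline, so a burst of
            # anomalies does not drag the baseline toward itself.
            self.window.append(value)
        return is_anomaly


if __name__ == "__main__":
    # Synthetic readings: a small periodic pattern with one spike at index 30.
    readings = [1.0 + 0.01 * (i % 5) for i in range(60)]
    readings[30] = 2.0
    edge = EdgeAnomalyFilter()
    forwarded = [i for i, r in enumerate(readings) if edge.process(r)]
    print("forwarded sample indices:", forwarded)
```

Only the flagged indices (here, the injected spike) would travel to the cloud, which is the "distilled telemetry" idea in miniature; everything else stays at the edge.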

Voices from the field

Rajeeb Hazra of Quantinuum captures the transition: the company frames recent hardware advances as a move from research to engineering, and the market reaction underscores that sensors and systems with quantum components are becoming realistic engineering deliverables.

Nvidia’s Jensen Huang framed the strategy plainly when announcing the Boston lab: quantum and classical systems are complementary and will be developed together, a pragmatic admission that integration is the near-term path.

Industry analysts from consulting and market research also point to rapid investment and commercialization cycles in quantum technologies, especially sensing, where near-term ROI exists.

(Each of the above citations points to public statements or industry reporting documenting these positions.)

The industrial storyline: how it’s being developed

Three engineering patterns repeat across successful projects:

- Co-design of physics and system: Sensors are designed simultaneously with readout electronics, packaging, and AI stacks. Atomic clocks aren’t just lasers in a box; they are timing engines integrated into telecom sync, GNSS augmentation, and secure-time services.

- Material and integration leaps: High-purity diamonds, integrated photonics, and 2D materials are used not as laboratory curiosities but as manufacturing inputs. The emphasis is on manufacturable material processes that support yield and repeatability.

- Hybrid deployment models: Pilots embed quantum sensors with classical edge compute in aircraft, subsea drones, and industrial plants. These pilots emphasize robustness, calibration, and lifecycle engineering rather than purely chasing sensitivity benchmarks.

The judgment: what will change, and how fast

Expect pockets of rapid, strategic impact, not immediate universal replacement. Quantum sensors will first displace classical approaches where:

(a) there’s no classical alternative (gravimetry for subsurface mapping);

(b) small improvements produce outsized outcomes (timekeeping in finance, telecom sync); or

(c) the environment is hostile to classical methods (low-light imaging, non-invasive brain sensing).
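Point (b) can be made concrete with a back-of-the-envelope holdover calculation: a clock running without an external reference, with a constant fractional frequency offset y, accumulates a time error of roughly y × t after time t. This sketch is a simplified model that ignores frequency drift and noise, and the offsets used are illustrative round numbers, not specifications of any particular clock.

```python
SECONDS_PER_DAY = 86_400


def holdover_time_error(fractional_offset: float, elapsed_s: float) -> float:
    """Time error (seconds) accumulated by a free-running clock with a constant
    fractional frequency offset. Simplified: ignores drift and noise, which
    dominate in a real holdover analysis."""
    return fractional_offset * elapsed_s


if __name__ == "__main__":
    # Illustrative offsets spanning several orders of magnitude.
    for y in (1e-11, 1e-13, 1e-15):
        err_ns = holdover_time_error(y, SECONDS_PER_DAY) * 1e9
        print(f"offset {y:.0e}: {err_ns:.4f} ns of error after one day")
```

Under this model the three offsets give roughly 864 ns, 8.64 ns and 0.086 ns of error per day: every hundredfold gain in frequency accuracy cuts the accumulated error a hundredfold, which matters when sync budgets are counted in nanoseconds.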

Within five years we will see commercial quantum-assisted navigation units, fieldable optical clocks for telecom carriers and defense, and NV-based magnetometry entering clinical and energy-sector workflows. Over a decade, as packaging, calibration standards, and manufacturing mature, quantum-grade measurements will diffuse widely, and the winners will be those who have mastered hybrid systems engineering, not isolated device physics.

What should leaders do now?

- Invest in hybrid stacks: fund pilots that pair quantum front-ends with robust edge AI and lifecycle engineering.

- Prioritize integration over headline sensitivity: a slightly less sensitive sensor that works reliably in the field beats a lab record every time.

- Build standards and calibration pathways: work with national labs; timekeeping and magnetometry need interoperable, certified standards.

- Secure talent at the physics-engineering interface: hires that understand both decoherence budgets and manufacturable packaging are gold.

The revolution is not a single “quantum sensor” product; it’s a new engineering posture: design sensors from the physics up, integrate them with intelligent edge processing, and industrialize the stack. That is how measurement stops being passive infrastructure and becomes a strategic asset, one that will reshape navigation, healthcare, energy and national security in the decade to come.

The post How Quantum Sensors and Post-Moore Measurement Tech Are Rewriting Reality appeared first on ELE Times.

“Robots are undoubtedly set to make the manufacturing process more seamless and error-free,” says Anil Chaudhry, Head of Automation at Delta.

“Everywhere. Automation can be deployed from very simple things to the most complicated things,” says Delta’s Anil Chaudhry as he underlines Delta’s innovations in the field of digital twins and human-centric innovation. With industries across the globe preparing to embrace the automation revolution—from advanced assembly lines to robotic arms—ELE Times sits down with Anil Chaudhry, Business Head – Solution & Robotics at Delta Electronics India, and Dr. Sanjeev Srivastava, Head of Industrial Automation at SBP, to discuss Delta’s plans.

In the conversation, both guests talk extensively about the evolution emerging in the industrial automation space, especially with reference to India, and how these solutions are set to support India in securing a significant share of the global semiconductor and electronics market. “The availability of the collaborative robots, or the co-bot, which is called in short form, is one of the stepping stones into Industry 5.0,” says Anil Chaudhry.

A Well-integrated and All-encompassing Approach

“We are in all domains, and we see these domains are well integrated,” says Anil Chaudhry as he reflects on the automation demands of the industry, ranging from power electronics to mobility (such as EV charging), automation (industrial and building automation), and finally the infrastructure (data centers, telecom & renewable energy). He highlights that such an approach makes Delta the most sought-after name in automation tech. “We offer products ranging from an AI-enabled energy management system to edge computing software that can handle the large, all-encompassing processes in an industrial landscape,” he adds.

“It is an integrated solution we have everywhere in our capabilities, and we are integrating all this to make it more enhanced,” says Anil Chaudhry.

Delta’s Key to Efficiency and Productivity

As the conversation turned to efficiency and productivity, Anil Chaudhry was quick to say, “The key to efficiency and productivity lies in no breakdown.” He added that Delta’s software can provide the manufacturing plant with predictive failure information, so that corrective action can be taken before a breakdown occurs.
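One common, generic way to turn sensor history into predictive failure information is to fit a trend to a degradation indicator (vibration, temperature rise, motor current) and estimate when it will cross an alarm limit. The sketch below illustrates that general idea with an ordinary least-squares line; it is a hypothetical example, not Delta’s implementation, and the vibration readings and alarm limit are invented values.

```python
from typing import Optional, Sequence, Tuple


def linear_fit(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least-squares fit of y = a + b * x; returns (a, b)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def days_until_limit(days: Sequence[float], readings: Sequence[float],
                     limit: float) -> Optional[float]:
    """Extrapolate the fitted trend to estimate how many days after the last
    reading the indicator reaches `limit`. Returns None if it is not rising."""
    intercept, slope = linear_fit(days, readings)
    if slope <= 0:
        return None
    crossing_day = (limit - intercept) / slope
    return max(0.0, crossing_day - days[-1])


if __name__ == "__main__":
    # Hypothetical daily vibration readings (mm/s) creeping upward.
    days = [0, 1, 2, 3, 4, 5]
    vibration = [2.0, 2.5, 3.0, 3.5, 4.0, 4.5]
    remaining = days_until_limit(days, vibration, limit=7.0)
    print(f"Estimated days until alarm limit: {remaining:.1f}")  # 5.0 for this data
```

A straight line is the simplest possible degradation model; real predictive-maintenance systems typically combine several indicators and nonlinear models, but the output has the same shape: a lead time in which maintenance can be scheduled.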

Delta’s Stride through Digital Twins & Industry 5.0

Delta’s digital twin models replicate the manufacturing process, making automation easier and more seamless to deploy. “The robots are definitely going to enable the manufacturing process to be more seamless and more error-free,” Chaudhry says. Sharing a glimpse of Delta’s co-bots, he says they are equipped to handle repetitive, high-accuracy, high-demand tasks.

He was quick to underline that Delta offers not only these machines but also the software tools needed to run the whole facility seamlessly, forming an end-to-end solution and a stride towards an Industry 5.0 environment.

Delta’s Approach towards Localization

On the subject of localization of manufacturing in India, Dr. Sanjeev Srivastava, Head of Industrial Automation at SBP, highlighted the progress Delta has been making in building a strong ecosystem. “It’s good that we are developing an ecosystem wherein we also have our supply chain integrated with manufacturing, and as we see, a lot of industries are coming into India. Over the years, we will have a very robust supply chain,” he said.

He pointed out that many components are already being sourced locally, and this share is expected to grow further. Confirming Delta’s commitment to Indian manufacturing, Dr. Srivastava added, “Yes, we are manufacturing in India, and we are also exporting to other places. We have the global manufacturing unit, which is supplying to other parts of the world, as well as catering to our Indian market. So it is both domestic and international.”

As the conversation wrapped up, Anil Chaudhry summed up Delta’s larger goal: “So we work on the TCO, or total cost of ownership, on how to reduce it with our technology going into the equipment, as well as the overall solution.”
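Total cost of ownership is usually estimated as the upfront purchase cost plus the operating and maintenance costs over the equipment’s service life, often discounted to present value. The sketch below compares two hypothetical machines; every figure, including the 8% discount rate, is invented for illustration and does not describe Delta’s products or pricing.

```python
def total_cost_of_ownership(capex: float, annual_opex: float, years: int,
                            discount_rate: float = 0.0) -> float:
    """Upfront cost plus the present value of yearly operating costs
    (energy, maintenance, downtime), each discounted back to year 0."""
    pv_opex = sum(annual_opex / (1 + discount_rate) ** year
                  for year in range(1, years + 1))
    return capex + pv_opex


if __name__ == "__main__":
    # Hypothetical machines: B costs more upfront but runs cheaper
    # (less energy, fewer breakdowns). All numbers are invented.
    tco_a = total_cost_of_ownership(100_000, 30_000, years=10, discount_rate=0.08)
    tco_b = total_cost_of_ownership(140_000, 20_000, years=10, discount_rate=0.08)
    print(f"Machine A: {tco_a:,.0f}  Machine B: {tco_b:,.0f}")
```

With these numbers, machine B ends up with the lower TCO (roughly 274,000 versus 301,000) despite its higher purchase price, which is the kind of trade-off a TCO-focused pitch is built on.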

The post “Robots are undoubtedly set to make the manufacturing process more seamless and error-free,” says Anil Chaudhry, Head of Automation at Delta. appeared first on ELE Times.

The Quantum Leap: How AI and Quantum Computing Are Driving Lead Time Optimization and Supply Chain Resilience in Semiconductor Innovation

Introduction

Silicon has been the primary driver of computing growth for decades, but Moore’s Law is now reaching its limits. As demand grows for faster and more energy-efficient chips, supply chains are under unprecedented pressure from shortages and geopolitical tensions.

This is where AI and quantum computing come into play. This is not science fiction: the two are already helping to discover new semiconductor materials and to optimize production scheduling in wafer fabs. The result is shorter lead times, reduced risk, and a more resilient supply chain.

For engineers and procurement teams, the message is simple: keeping up in the chip world will soon require using quantum computing and AI together.

Quantum Computing and AI Integration in Semiconductor Innovation

Quantum computing works with qubits, which, unlike classical bits, can exist in a superposition of 0 and 1 rather than holding just one value at a time. This enables quantum processors to tackle complex simulations that classical computers struggle with, such as modelling atomic-level behaviour in new semiconductor materials.
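A single qubit’s state can be written as two complex amplitudes, α for |0⟩ and β for |1⟩, with |α|² + |β|² = 1; a measurement returns 0 with probability |α|² and 1 with probability |β|². The minimal sketch below applies a Hadamard gate to |0⟩ to produce an equal superposition. It is a textbook state-vector calculation run on a classical machine, not code for Willow or any other quantum processor.

```python
import math


def hadamard(state):
    """Apply the Hadamard gate H = (1/sqrt(2)) * [[1, 1], [1, -1]]
    to a state given as (amplitude of |0>, amplitude of |1>)."""
    alpha, beta = state
    s = 1 / math.sqrt(2)
    return (s * (alpha + beta), s * (alpha - beta))


def measurement_probabilities(state):
    """Born rule: P(0) = |alpha|^2, P(1) = |beta|^2."""
    alpha, beta = state
    return abs(alpha) ** 2, abs(beta) ** 2


if __name__ == "__main__":
    zero = (1 + 0j, 0j)          # the basis state |0>
    plus = hadamard(zero)        # (|0> + |1>) / sqrt(2)
    p0, p1 = measurement_probabilities(plus)
    print(f"P(0) = {p0:.3f}, P(1) = {p1:.3f}")  # P(0) = 0.500, P(1) = 0.500
    # Applying H again returns the qubit to |0>: amplitudes interfere,
    # which is what distinguishes superposition from a coin flip.
    q0, q1 = measurement_probabilities(hadamard(plus))
    print(f"after second H: P(0) = {q0:.3f}, P(1) = {q1:.3f}")
```

Simulating n qubits this way needs 2ⁿ amplitudes, which is why classical machines struggle with large quantum systems and why native quantum hardware is attractive for materials modelling.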

AI enhances this capability. By applying predictive analytics to quantum simulations, machine learning models identify promising material candidates, predict their performance, and recommend adjustments. This turns what was once a slow trial-and-error process into actionable insights, potentially saving years of laboratory work.

Take Google’s Willow processor as an example. It is the follow-up to Sycamore, and while it was not built solely for materials research, it demonstrates how quantum systems can scale while reducing errors. Combined with machine learning, processors like it could eventually provide an unprecedented view of the material properties critical for chip innovation.

As Anima Anandkumar, Professor at Caltech and Senior Director of AI Research at Nvidia, points out: “AI helps us turn the raw complexity of quantum simulations into insights engineers can actually use.”

Together, AI and quantum computing are laying the foundation for a fundamentally new approach to chip design.

AI-Driven Material Science: Operational and Market Impact

When it comes to discovering new semiconductors, atomic-level precision is crucial. AI-powered quantum models can simulate electron behaviour in materials such as graphene, gallium nitride, or perovskites. This enables researchers to evaluate conductivity, energy efficiency, and durability before performing laboratory tests, greatly accelerating material qualification.

The practical impact is significant. Material validation traditionally took years, but early studies indicate that timelines can be shortened by 30 to 50 percent. This allows wafer fabs to operate more efficiently, align production with new innovations, and minimize idle time.

Market pressures further complicate the situation. During the 2021 shortage, lead times for some components increased from approximately 12 weeks to over a year. With AI, companies can anticipate supply chain disruptions and proactively adjust sourcing strategies. Quantum simulations also expand the range of usable materials, reducing reliance on a single supplier or high-risk region.

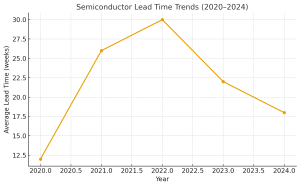

Figure 1. Semiconductor lead times spiked from 12 weeks in 2020 to over 30 weeks in 2022 before easing. AI-quantum integration can help stabilize these fluctuations by enabling predictive analytics and diversified sourcing.

The return on investment can be substantial. According to Deloitte, companies that integrate AI into R&D and supply chain operations are achieving double-digit efficiency gains, primarily due to improved yield forecasting and reduced downtime. Google’s quantum research team has demonstrated that AI-driven simulations can narrow the list of promising materials from thousands to just a few within weeks, a process that would normally take years using classical computing. This dramatic compression of the R&D cycle fundamentally changes competitive dynamics.

Strategic Insights for Procurement and Supply Chain Leaders

For procurement and supply chain leaders, this is more than just a technical upgrade; it represents a genuine strategic advantage. AI-powered quantum tools help optimize lead times, enabling more precise supplier contracts and reducing the need for excess buffer stock. Predictive analytics also allow teams to identify potential risks before they affect wafer fabs or delay customer deliveries.
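Why shorter, more predictable lead times reduce buffer stock can be seen in a common textbook safety-stock formula, SS = z · σ_d · √L, where z is the service-level factor, σ_d the standard deviation of demand per week, and L the lead time in weeks. The article does not specify an inventory model; this one is an assumption chosen for illustration, it ignores lead-time variability, and the demand figures are hypothetical. The 12- and 30-week lead times come from Figure 1.

```python
import math
from statistics import NormalDist


def safety_stock(service_level: float, demand_std_per_week: float,
                 lead_time_weeks: float) -> float:
    """Textbook safety stock for normally distributed demand and a fixed
    lead time: SS = z * sigma_d * sqrt(L). Lead-time variability is ignored."""
    z = NormalDist().inv_cdf(service_level)  # about 1.645 for a 95% service level
    return z * demand_std_per_week * math.sqrt(lead_time_weeks)


if __name__ == "__main__":
    # Hypothetical part: weekly demand std of 1,000 units, 95% service level.
    for weeks in (12, 30):
        units = safety_stock(0.95, 1_000, weeks)
        print(f"lead time {weeks:>2} weeks -> safety stock of about {units:,.0f} units")
```

Because safety stock scales with √L in this model, bringing lead time back from 30 to 12 weeks shrinks the buffer by about 37% (1 − √(12/30)), before counting any gain from more predictable lead times.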

Supply chain resilience is also enhanced. When AI-guided quantum simulations confirm alternative semiconductors that can be sourced from different regions, procurement teams reduce exposure to geopolitical risks or natural disasters. This approach aligns with national initiatives such as the U.S. CHIPS and Science Act and the EU Chips Act, both of which promote stronger local production and more resilient sourcing strategies. Quantum-AI modelling provides the technical confidence required to qualify these alternative supply streams.

“The upward trajectory for the industry in the short-term is clear, but the companies that can manage their supply chains and attract and retain talent will be the ones well-positioned to sustain and benefit from the AI boom.”- Mark Gibson, Global Technology Leader, KPMG

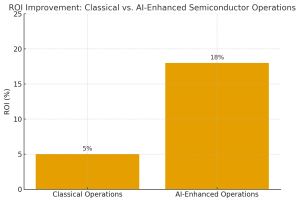

Figure 2. Firms adopting AI-enhanced semiconductor operations achieve significantly higher ROI, with consulting studies reporting double-digit efficiency gains compared to classical operations.

At the end of the day, companies that successfully integrate these technologies do not just bring products to market faster; they also gain a genuine competitive edge in today’s unpredictable global markets. In the semiconductor industry, a delay of a few weeks can result in billions in lost revenue, so agility is essential for survival.

Future Outlook: Scaling Quantum AI Impact on Semiconductor Manufacturing

Looking ahead, the next major development appears to be full-stack quantum-AI design. Imagine quantum processors running full-chip simulations while AI optimizes them for speed, efficiency, and manufacturability. Although we are not there yet, incremental advances in photonic circuits and spintronic components are already producing tangible results.

For manufacturing teams, the challenge will be maintaining flexibility in supply chains. As new materials move from simulation to pilot production, procurement and fab teams must scale in coordination. Today’s quantum processors still face limitations in qubit counts, error rates, and scalability, but if progress continues, practical industrial applications could emerge within the next ten years. Companies that begin planning now, by developing roadmaps and forming strategic partnerships, will gain a significant advantage.

Conclusion

Right now, the semiconductor industry is at a critical turning point. Combining AI’s predictive capabilities with quantum computing’s atomic-level insights can accelerate discovery, shorten lead times, and make supply chains significantly more resilient.

For engineers and procurement leaders, the message is clear: adopting AI-quantum integration is not just about technology; it is about remaining competitive. The next major advancement in silicon will not occur by chance. It will be carefully designed, optimized, and engineered using AI and quantum computing together.

The post The Quantum Leap: How AI and Quantum Computing Are Driving Lead Time Optimization and Supply Chain Resilience in Semiconductor Innovation appeared first on ELE Times.

Rohde & Schwarz Mobile Test Summit 2025 on the future of wireless communications

Rohde & Schwarz has announced that this year’s Mobile Test Summit will be an online, multi-session event catering to two major time zones. Wireless communications professionals are invited to register for individual sessions on the Rohde & Schwarz website. The sessions will cover a wide range of critical industry topics: AI and machine learning in mobile networks, non-terrestrial networks (NTN) for mobile devices, the transition from 5G to 6G and the next generation of Wi-Fi.

- The first topic, AI and machine learning, will cover how AI and ML are changing mobile networks.

- The second topic is NTN, and its sessions will cover how the evolving NTN landscape enhances the mobile user experience and provides true global coverage for IoT devices.

- The third topic addresses the transition from 5G to 6G, focusing on XR applications in the 6G era, new device types and the rise of private 5G NR networks. Particular attention will go to the impact on test and measurement as the industry evolves from 5G to 6G.

- The fourth topic covers the latest advancements in Wi-Fi 8 technology and how they elevate the mobile user experience.

Alexander Pabst, Vice President of Wireless Communications at Rohde & Schwarz, says: “As we mark the fifth anniversary of hosting our popular Mobile Test Summit, we’re excited to continue this open forum for the global wireless community to exchange ideas, share experiences and debate the technical and operational questions that will shape the future of connectivity. The virtual, multi‑session format makes it easy for professionals in the wireless ecosystem around the globe to participate in focused conversations and obtain actionable insights that help shape the industry’s future.”

The post Rohde & Schwarz Mobile Test Summit 2025 on the future of wireless communications appeared first on ELE Times.

Infineon and SolarEdge collaborate to advance high-efficiency power infrastructure for AI data centres

Infineon and SolarEdge are partnering to advance the development of highly efficient next-generation Solid-State Transformer (SST) technology for AI and hyperscale data centres.

- The new SST is designed to enable direct medium-voltage to 800–1500 V DC conversion with over 99% efficiency, reducing size, weight, and CO₂ footprint.

- The collaboration combines SolarEdge’s DC expertise with Infineon’s semiconductor innovation to support sustainable, scalable power infrastructure and further expansion into the AI data-centre market.

Infineon Technologies AG and SolarEdge Technologies, Inc. announced a collaboration to advance SolarEdge’s Solid-State Transformer (SST) platform for next-generation AI and hyperscale data centres. The collaboration focuses on the joint design, optimization and validation of a modular 2–5 megawatt (MW) SST building block. It combines advanced silicon carbide (SiC) switching technology from Infineon with SolarEdge’s proven power-conversion and control topology and is designed to deliver more than 99% efficiency, supporting the global shift towards high-efficiency, DC-based data centre infrastructure.

The Solid-State Transformer technology is well positioned to play a crucial role in future, highly efficient 800 Volt direct current (VDC) AI data centre power architectures. When connecting the public grid to data centre power distribution, the technology enables end-to-end efficiency and offers several key advantages, including significant reductions in weight and size, a reduced CO₂ footprint, and faster deployment of power distribution. The SST under joint development will enable direct medium-voltage (13.8–34.5 kV) to 800–1500 V DC conversion.
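The practical weight of the >99% efficiency target shows up in a quick loss calculation for one building block at the top of the stated 2–5 MW range. The 98% comparison point below is a hypothetical figure chosen for illustration, not an Infineon or SolarEdge number. The sketch also shows why a higher DC bus voltage helps: for the same power, current scales as I = P / V.

```python
def conversion_loss_kw(output_mw: float, efficiency: float) -> float:
    """Heat dissipated (kW) by a converter delivering `output_mw` at `efficiency`.

    Input power is output / efficiency, so loss = output * (1 / efficiency - 1).
    """
    return output_mw * 1_000 * (1 / efficiency - 1)


def dc_bus_current_a(power_mw: float, bus_voltage_v: float) -> float:
    """Current (A) needed to carry `power_mw` on a DC bus: I = P / V."""
    return power_mw * 1e6 / bus_voltage_v


if __name__ == "__main__":
    block_mw = 5.0  # upper end of the 2-5 MW building block
    # 99% is the stated target; 98% is a hypothetical comparison point.
    for eta in (0.99, 0.98):
        print(f"{eta:.0%} efficient: {conversion_loss_kw(block_mw, eta):.1f} kW lost as heat")
    # Higher bus voltage means proportionally lower current; in a given
    # conductor, resistive I^2 * R loss falls with the square of the current.
    for volts in (800, 1500):
        print(f"{volts} V DC bus: {dc_bus_current_a(block_mw, volts):,.0f} A")
```

At 5 MW, the step from 98% to 99% efficiency is worth roughly 50 kW of heat that never has to be removed by the cooling system, and moving from an 800 V to a 1500 V bus cuts the current for the same block from 6,250 A to about 3,333 A.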

“Collaborations like this are key to enabling the next generation of 800 Volt DC data centre power architectures and further driving decarbonization,” said Andreas Urschitz, Chief Marketing Officer at Infineon. “With high-performance SiC technology from Infineon, SolarEdge’s proven capabilities in power management and system optimization are enhanced, creating a strong foundation for the efficient, scalable, and reliable infrastructure demanded by AI-driven data centres.”

“The AI revolution is redefining power infrastructure,” said Shuki Nir, CEO of SolarEdge. “It is essential that the data centre industry is equipped with solutions that deliver higher levels of efficiency and reliability. SolarEdge’s deep expertise in DC architecture uniquely positions us to lead this transformation. Collaborating with Infineon brings world-class semiconductor innovation to our efforts to build smarter, more efficient energy systems for the AI era.”

As AI infrastructure drives an unprecedented surge in global power demand, data centre operators are seeking new ways to deliver more efficient, reliable, and sustainable power. Building on more than 15 years of leadership in DC-coupled architecture and high-efficiency power electronics, this development would enable SolarEdge to expand into the data-centre market with solutions designed to optimize power distribution from the grid to the compute rack. This optimization relies on efficient power conversion, a challenge that Infineon’s semiconductor solutions address by enabling efficient conversion from grid to core (GPU). With a focus on delivering reliable and scalable power systems based on all relevant semiconductor materials, namely silicon (Si), silicon carbide (SiC) and gallium nitride (GaN), Infineon is helping to reduce the environmental footprint and operating costs of the AI data centre ecosystem.

The post Infineon and SolarEdge collaborate to advance high-efficiency power infrastructure for AI data centres appeared first on ELE Times.

Evolving Priorities in Design Process of Electronic Devices

Design is one of the earliest and most crucial stages in the production of electronic devices. It encompasses the creative, manual, and technical facets of an electronic device. The design stage allows manufacturers and developers to convert a textual system definition into a detailed, functional prototype before mass production, and almost all of a device’s functional requirements are addressed at this stage.

Considering the bill of materials (BOM) and design for manufacturability (DFM) at this stage is crucial to maintaining or improving quality while keeping cost, expected features, and performance in check.

Fundamentals of the Design Process

- Before investing in the materials required for manufacturing, it is essential to establish a list of requirements. This helps the manufacturer understand the features the product needs. It is equally essential to conduct thorough market research to identify market gaps and consumer requirements, so that products address real consumer needs. A successful product is one that provides what similar products on the market lack.

- Once conceptualization is complete, the focus shifts to creating a design proposal and project plan. This defines the projected manufacturing expenses and an approximate timeline, along with other design and manufacturing process segments.

- A finished electronic device comprises many smaller components, such as microcontrollers, displays, sensors, and memory. Advances in technology allow designers to use electronic computer-aided design (ECAD) or electronic design automation (EDA) tools to create the schematic diagram. These tools reduce the scope for error and accelerate the design process.

- Finally, the detailed schematic forms the basis for the next step, in which it is transformed into a PCB layout.

Growing Trends in the Design Process

- Advances in nanotechnology and microfabrication have pushed the design process towards further miniaturization and greater on-chip integration. Design engineers can now fit more features onto a single chip while reducing its size.

- Present-day electronic designs increasingly incorporate renewable energy as the industry shifts away from fossil fuels. This change requires designers to adapt how devices are designed while still adding advanced features such as efficient IoT connectivity.

- The growing demand for sustainable devices has also affected the design process, which now needs to include features that reduce greenhouse gas emissions and energy consumption. This has led electrical designers to modify power converters and motor drives to reduce energy loss and increase efficiency.

- Today’s industrial and consumer electronic devices also increasingly require automation and robotics. The design process therefore has to accommodate these requirements to extend device longevity and ease the adoption of advanced technology. The same goes for integration with artificial intelligence, a fast-growing trend that is likely to greatly simplify both how electronic devices are made and how they are used.

- The major challenge in the design process is not integrating such features but integrating them in a human-friendly way. Any feature can fail to fulfil its purpose if it is not user-friendly, so the task falls on design engineers to make features easy to use and durable.

- Beyond features and structural innovation, the materials used in upcoming electronic devices have also changed. Newer flexible devices have shifted the dynamics from rigid circuit boards to flexible substrates and conductive polymers, so electronic designers must now account for mechanical flexibility in their layouts as well.

Simplifying the Process for Complex Designs

As the need for miniaturisation and integration grows, design complexity follows suit. However, advances in software and applications have simplified the process, allowing designers to experiment with more creative ideas without compromising on timelines and costs.

ECAD is one such innovation that has now been widely adopted. Other EDA tools include:

- SPICE: A simulation tool used to analyse and predict a circuit's behaviour under different conditions before a physical prototype is built. It helps identify and fix potential issues in advance.

- OptSim: A software tool that allows designers to evaluate and optimize the performance of optical links within a sensor design, predicting how light will behave through components such as lenses, fibres, and detectors.
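The kind of transient analysis SPICE automates can be illustrated on the smallest possible circuit. The sketch below is not SPICE itself: it assumes a hypothetical RC low-pass filter (R = 1 kΩ, C = 1 µF) driven by a 5 V step, integrates the capacitor node equation with the backward Euler method (an implicit scheme of the kind transient simulators use), and compares each result with the analytic solution.

```python
import math

def simulate_rc_step(r_ohm: float, c_farad: float, v_in: float,
                     t_stop: float, dt: float) -> list[tuple[float, float]]:
    """Transient response of an RC low-pass filter to a voltage step.

    Node equation: C * dv/dt = (v_in - v) / R.
    Backward Euler: v[n+1] = (v[n] + k * v_in) / (1 + k), with k = dt / (R * C).
    """
    k = dt / (r_ohm * c_farad)
    v, t = 0.0, 0.0                  # capacitor starts fully discharged
    points = [(t, v)]
    for _ in range(round(t_stop / dt)):
        v = (v + k * v_in) / (1.0 + k)
        t += dt
        points.append((t, v))
    return points

R, C, VIN = 1e3, 1e-6, 5.0           # hypothetical component values
TAU = R * C                          # 1 ms time constant
for t, v in simulate_rc_step(R, C, VIN, t_stop=5 * TAU, dt=TAU / 100)[::100]:
    exact = VIN * (1 - math.exp(-t / TAU))
    print(f"t = {t * 1e3:4.1f} ms   v_sim = {v:5.3f} V   v_exact = {exact:5.3f} V")
```

With a step of one hundredth of the time constant, the simulated and analytic voltages agree to within a few millivolts; a real simulator additionally adapts the step size and handles nonlinear devices.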

Conclusion

Designing electronic devices is a dynamic process that requires engineers to stay up to date with industry and market trends. As automation, robotics, and artificial intelligence gain a strong hold in electronics, their integration into the design process is inevitable. The design process is a vital, non-linear stage of manufacturing that often continues even after testing, first for refinement and then for documentation and certification.

The post Evolving Priorities in Design Process of Electronic Devices appeared first on ELE Times.

New Radiation-Tolerant, High-Reliability Communication Interface Solution for Space Applications

The post New Radiation-Tolerant, High-Reliability Communication Interface Solution for Space Applications appeared first on ELE Times.

Nuvoton Launches Industrial-Grade, High-Security MA35 Microprocessor with Smallest Package and Largest Stacked DRAM Capacity

Nuvoton Technology announced the MA35D16AJ87C, a new member of the MA35 series microprocessors. Featuring an industry-leading 15 x 15 mm BGA312 package with 512 MB of DDR SDRAM stacked inside, the MA35D16AJ87C streamlines PCB design, reduces product footprint, and lowers EMI, making it an excellent fit for space-constrained industrial applications.

Key Highlights:

- Dual 64-bit Arm Cortex-A35 cores plus a Cortex-M4 real-time core

- Integrated, independent TSI (Trusted Secure Island) security hardware

- 512 MB DDR SDRAM stacked inside a 15 x 15 mm BGA312 package

- Supports Linux and RTOS, along with Qt, emWin, and LVGL graphics libraries

- Industrial temperature range: -40°C to +105°C

- Ideal for factory automation, industrial IoT, new energy, smart buildings, and smart cities

The MA35D16AJ87C is built on dual Arm Cortex-A35 cores (Armv8-A architecture, up to 800 MHz) paired with a Cortex-M4 real-time core. It supports 1080p display output with graphics acceleration and integrates a comprehensive set of peripherals, including 17 sets of UARTs, 4 sets of CAN-FD interfaces, 2 sets of Gigabit Ethernet ports, 2 sets of SDIO 3.0 interfaces, and 2 sets of USB 2.0 ports, among others, to meet diverse industrial application needs.

To address escalating IoT security challenges, the MA35D16AJ87C incorporates Nuvoton’s independently designed TSI (Trusted Secure Island) hardware security module. It supports Arm TrustZone technology, Secure Boot, and Tamper Detection, and integrates a complete hardware cryptographic engine suite (AES, SHA, ECC, RSA, SM2/3/4), a true random number generator (TRNG), and a key store. These capabilities help customers meet international cybersecurity requirements such as the Cyber Resilience Act (CRA) and IEC 62443.

The MA35D16AJ87C is supported by Nuvoton’s Linux and RTOS platforms and is compatible with leading graphics libraries including Qt, emWin, and LVGL, helping customers shorten development cycles and reduce overall development costs. The Nuvoton MA35 Series is designed for industrial-grade applications and is backed by a 10-year product supply commitment.

The post Nuvoton Launches Industrial-Grade, High-Security MA35 Microprocessor with Smallest Package and Largest Stacked DRAM Capacity appeared first on ELE Times.

Nokia and Rohde & Schwarz collaborate on AI-powered 6G receiver to cut costs, accelerate time to market

Nokia and Rohde & Schwarz have created and successfully tested a 6G radio receiver that uses AI technologies to overcome one of the biggest anticipated challenges of 6G network rollouts: the coverage limitations inherent in 6G’s higher-frequency spectrum.

The machine learning capabilities in the receiver greatly boost uplink distance, enhancing coverage for future 6G networks. This will help operators roll out 6G over their existing 5G footprints, reducing deployment costs and accelerating time to market.

Nokia Bell Labs developed the receiver and validated it using 6G test equipment and methodologies from Rohde & Schwarz. The two companies will unveil a proof-of-concept receiver at the Brooklyn 6G Summit on November 6, 2025.

Peter Vetter, President of Bell Labs Core Research at Nokia, said: “One of the key issues facing future 6G deployments is the coverage limitations inherent in 6G’s higher-frequency spectrum. Typically, we would need to build denser networks with more cell sites to overcome this problem. By boosting the coverage of 6G receivers, however, AI technology will help us build 6G infrastructure over current 5G footprints.”

Nokia Bell Labs and Rohde & Schwarz have tested this new AI receiver under real-world conditions, achieving uplink distance improvements of 10% to 25% over today’s receiver technologies. The testbed comprises an R&S SMW200A vector signal generator, used for uplink signal generation and channel emulation. On the receive side, the newly launched FSWX signal and spectrum analyzer from Rohde & Schwarz performs the AI inference for Nokia’s AI receiver. In addition to enhancing coverage, the AI technology also demonstrates improved throughput and power efficiency, multiplying the benefits it will provide in the 6G era.

Michael Fischlein, VP Spectrum & Network Analyzers, EMC and Antenna Test at Rohde & Schwarz, said: “Rohde & Schwarz is excited to collaborate with Nokia in pioneering AI-driven 6G receiver technology. Leveraging more than 90 years of experience in test and measurement, we’re uniquely positioned to support the development of next-generation wireless, allowing us to evaluate and refine AI algorithms at this crucial pre-standardization stage. This partnership builds on our long history of innovation and demonstrates our commitment to shaping the future of 6G.”

The post Nokia and Rohde & Schwarz collaborate on AI-powered 6G receiver to cut costs, accelerate time to market appeared first on ELE Times.

ESA, MediaTek, Eutelsat, Airbus, Sharp, ITRI, and R&S announce world’s first Rel-19 5G-Advanced NR-NTN connection over OneWeb LEO satellites

European Space Agency (ESA), MediaTek Inc., Eutelsat, Airbus Defence and Space, Sharp, the Industrial Technology Research Institute (ITRI), and Rohde & Schwarz (R&S) have conducted the world’s first successful trial of 5G-Advanced Non-Terrestrial Network (NTN) technology compliant with 3GPP Rel-19 NR-NTN configurations over Eutelsat’s OneWeb low Earth orbit (LEO) satellites. The tests pave the way for deployment of the 5G-Advanced NR-NTN standard, which will lead to future satellite and terrestrial interoperability within a large ecosystem, lowering the cost of access and enabling the use of satellite broadband for NTN devices around the world.

The trial used OneWeb satellites communicating with the MediaTek NR-NTN chipset and ITRI’s NR-NTN gNB, implementing 3GPP Release 19 specifications including Ku-band, 50 MHz channel bandwidth and conditional handover (CHO). The OneWeb satellites, built by Airbus, carry transparent transponders with a Ku-band service link and a Ka-band feeder link, and adopt the “Earth-moving beams” concept. During the trial, the NTN user terminal, with a flat-panel antenna developed by Sharp, successfully connected over satellite to the on-ground 5G core using the gateway antenna located at ESA’s European Space Research and Technology Centre (ESTEC) in the Netherlands.
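Conditional handover differs from a conventional handover in that the network sends the handover configuration and candidate target cells in advance, and the terminal executes the handover on its own once a configured condition is met. The sketch below shows that execution logic in simplified form. It assumes an A3-style condition (candidate measured better than the serving cell by an offset, held for a time-to-trigger window); the class and function names, the RSRP traces, the offset and the window are all hypothetical and are not taken from the trial. 3GPP also defines time- and location-based triggers for NTN, which this sketch does not model.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class ChoCandidate:
    cell_id: str
    offset_db: float       # hypothetical A3-style offset over the serving cell
    time_to_trigger: int   # consecutive measurement samples the condition must hold

def evaluate_cho(serving_rsrp: list[float],
                 candidate_rsrp: dict[str, list[float]],
                 candidates: list[ChoCandidate]) -> Optional[tuple[int, str]]:
    """Return (sample index, cell id) of the first CHO executed, or None."""
    held = {c.cell_id: 0 for c in candidates}
    for i, serving in enumerate(serving_rsrp):
        for c in candidates:
            if candidate_rsrp[c.cell_id][i] > serving + c.offset_db:
                held[c.cell_id] += 1
                if held[c.cell_id] >= c.time_to_trigger:
                    return i, c.cell_id      # condition held long enough: execute
            else:
                held[c.cell_id] = 0          # condition broken: reset the counter
    return None

# Hypothetical RSRP traces (dBm) as the serving beam sweeps away
serving = [-90.0, -92.0, -94.0, -96.0, -98.0, -100.0]
neighbour = {"beam_B": [-100.0, -97.0, -93.0, -91.0, -90.0, -89.0]}
print(evaluate_cho(serving, neighbour, [ChoCandidate("beam_B", 3.0, 2)]))
```

With these traces the condition first holds at sample 3, and with a two-sample time-to-trigger the handover executes at sample 4. Because the decision is taken locally, the terminal does not have to wait for a handover command over a link that is already degrading, which matters when LEO beams move quickly across the ground.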

David Phillips, Head of the Systems, Strategic Programme Lines and Technology Department within ESA’s Connectivity and Secure Communications directorate, said: “By partnering with Airbus Defence and Space, Eutelsat and partners, this innovative step in the integration of terrestrial and non-terrestrial networks proves why collaboration is an essential ingredient in boosting competitiveness and growth of Europe’s satellite communications sector.”

Mingxi Fan, Head of Wireless System and ASIC Engineering at MediaTek, said: “As a global leader in terrestrial and non-terrestrial connectivity, we continue in our mission to improve lives by enabling technology that connects the world around us, including areas with little to no cellular coverage. By making real-world connections with Eutelsat LEO satellites in orbit, together with our ecosystem partners, we are now another step closer to bring the next generation of 3GPP-based NR-NTN satellite wideband connectivity for commercial uses.”

Daniele Finocchiaro, Head of Telecom R&D and Projects at Eutelsat, said: “We are proud to be among the leading companies working on NTN specifications, and to be the first satellite operator to test NTN broadband over Ku-band LEO satellites. Collaboration with important partners is a key element when working on a new technology, and we especially appreciate the support of the European Space Agency.”

Elodie Viau, Head of Telecom and Navigation Systems at Airbus, said: “This connectivity demonstration performed with Airbus-built LEO Eutelsat satellites confirms our product adaptability. The successful showcase of Advanced New Radio NTN handover capability marks a major step towards enabling seamless, global broadband connectivity for 5G devices. These results reflect the strong collaboration between all partners involved, whose combined expertise and commitment have been key to achieving this milestone.”

Masahiro Okitsu, President & CEO, Sharp Corporation, said: “We are proud to announce that we have successfully demonstrated Conditional Handover over 5G-Advanced NR-NTN connection using OneWeb constellation and our newly developed user terminals. This achievement marks a significant step toward the practical implementation of non-terrestrial networks. Leveraging the expertise we have cultivated over many years in terrestrial communications, we are honored to bring innovation to the field of satellite communications as well. Moving forward, we will continue to contribute to the evolution of global communication infrastructure and strive to realize a society where everyone is seamlessly connected.”

Dr. Pang-An Ting, Vice President and General Director of Information and Communications Research Laboratories at ITRI, said: “In this trial, ITRI showcased its advanced NR-NTN gNB technology as an integral part of the NR-NTN communication system, enabling conditional handover on the Rel-19 system. We see great potential in 3GPP NTN communication to deliver ubiquitous coverage and seamless connectivity in full integration with terrestrial networks.”

Goce Talaganov, Vice President of Mobile Radio Testers at Rohde & Schwarz, said: “We at Rohde & Schwarz are excited to have contributed to this industry milestone with our test and measurement expertise. For real-time NR-NTN channel characterization, we used our high-end signal generation and analysis instruments R&S SMW200A and FSW. Our CMX500-based NTN test suite replicated the Ku-band conditional handover scenarios in the lab. This rigorous testing, which addresses the challenges of satellite-based communications, paved the way for further performance optimization of MediaTek’s and Sharp’s 5G-Advanced NTN devices.”

The post ESA, MediaTek, Eutelsat, Airbus, Sharp, ITRI, and R&S announce world’s first Rel-19 5G-Advanced NR-NTN connection over OneWeb LEO satellites appeared first on ELE Times.

Decoding the AI Age for Engineers: What all Engineers need to thrive in this?

As AI tools increasingly take on real-world tasks, the roles of professionals, from copywriters to engineers, are being rapidly and profoundly redefined. This swift transformation, characteristic of the AI era, is shifting core fundamentals and operational practices. Sometimes AI complements people, other times it replaces them, but most often it fundamentally redefines their role in the workplace.

In this story, we look further into the emerging roles and responsibilities of an engineer as AI tools gain greater traction, while also tracking the industry’s shifting expectations through the eyes of prominent names from the electronics and semiconductor industry. The resounding messages? Engineers must anchor themselves in foundational principles and embrace systems-level thinking to thrive.

The Siren Song of AI/ML

There’s no doubt that AI and Machine Learning (ML) are the current darlings of the tech world, attracting a huge talent pool. Raghu Panicker, CEO of Kaynes Semicon, notes this trend: “Engineers today at large are seeing that there are more and more people going after AI, ML, data science.” While this pursuit is beneficial, he issues a crucial caution. He urges engineers to “start to re-look at the hardcore electronics,” pointing out the massive advancements happening across the semiconductor and systems space that are being overlooked.

The engineering landscape is broadening beyond just circuit design. Panicker highlights that a semiconductor package today involves less purely “semiconductors” and more physics, chemistry, materials science, and mechanical engineering. This points to a diverse, multi-faceted engineering future.

The Bright Future in Foundations and Manufacturing

The industry’s optimism about the future of electronics, especially in manufacturing, is palpable. With multiple large-scale projects, including silicon and display fabs, being approved, Panicker sees a “very, very bright” future for Electronics and Manufacturing in India.

He stresses that manufacturing is a career path engineers should take “very seriously,” noting that while design attracts the larger paychecks, manufacturing is catching up and has significant, long-term promise. He also brings up the practical aspect of efficiency, stating that minimizing test time is critical for cost-effective customer solutions, requiring a deep understanding of the trade, often gained through specialized programs.

Innovate, Systematize, Tinker: The Engineer’s New Mandate

Building on this theme, Shitendra Bhattacharya, Country Head of Emerson’s Test and Measurement group, emphasizes the need for a community of innovators. He challenges the new generation of engineers to “think innovation, think systems,” which requires them to “get down to dirtying their hands.”

Bhattacharya is vocal about the danger of focusing solely on the “cooler or sexier looking fields like AI and ML.” He asserts that the future growth of the industry, particularly in India, hinges on local innovation and the creation of homegrown products and OEMs. To achieve this, he calls for a shift toward integrated coursework at the university level.

“System design requires you to understand engineering fundamentals. Today, that is missing at many levels… knowing only one domain is not good enough for it. It will not cut it.” – Shitendra Bhattacharya, Emerson

This call for system design thinking —the ability to bring different fields of engineering together—is a key takeaway for thriving in the AI age.

The Return of the ‘Tinkerer’

This focus on fundamental, hands-on knowledge is echoed strongly by Raja Manickam, CEO of iVP Semicon. He reflects on how the education system’s pivot toward coding and computer science led to the loss of skills like tinkering and a foundational understanding of “basics of physics, basics of electricity.”

Manickam argues that AI’s initial impact will be felt most acutely by IT engineers, and that the core electronics sector needs engineers who are “more fundamentally strong.” The emphasis is on the joy and necessity of building things from scratch. To future-proof their careers, engineers must actively cultivate this foundational, tangible skill set.

The AI Enabler: Transforming the Value Chain

While the focus must return to engineering basics, it’s vital to recognize that AI is not a threat to be avoided but a tool to be mastered. Amit Agnihotri, Chief Operating Officer at RS Components & Control, provides a clear picture of how AI is already transforming the semiconductor value chain end-to-end.

AI is embedded in:

- Design: Driving simulation and optimization to improve power/performance trade-offs.

- Manufacturing: Assisting testing, yield analytics, and smarter process control.

- Supply Chain: Enhancing forecasting, allocation, and inventory strategies with predictive analytics.

- Customer Engagement: Providing personalized guidance and virtual technical support to accelerate time-to-market.

Agnihotri explains that companies like RS Components leverage AI to improve component discovery, localize inventory, and provide data-backed design-in support, accelerating prototyping and scaling with confidence.

Conclusion: Engineering for Longevity

The AI age presents an exciting paradox for engineers. To successfully leverage the most advanced tools, they must first become profoundly proficient in the most fundamental aspects of their discipline. The future belongs not to those who chase the shiniest new technology in isolation, but to those who view AI as an incredible enabler layered upon an unshakeable foundation of physics, materials science, system-level design, and hands-on tinkering.

Engineers who embrace this philosophy, being both advanced AI users and masters of the fundamentals, will be the true architects of the next wave of innovation in the core electronics and semiconductor industry. The message from the industry is clear: get back to the basics, think in systems, and start innovating locally. That is the recipe for a thriving engineering career in the AI era.

The post Decoding the AI Age for Engineers: What all Engineers need to thrive in this? appeared first on ELE Times.

Next-Generation of Optical Ethernet PHY Transceivers Deliver Precision Time Protocol and MACsec Encryption for Long-Reach Networking

The post Next-Generation of Optical Ethernet PHY Transceivers Deliver Precision Time Protocol and MACsec Encryption for Long-Reach Networking appeared first on ELE Times.

Anritsu Launches Virtual Network Measurement Solution to Evaluate Communication Quality in Cloud and Virtual Environments

Anritsu Corporation announced the launch of its Virtual Network Master for AWS MX109030PC, a virtual network measurement solution operating in Amazon Web Services (AWS) Cloud environments. This software-based solution enables accurate, repeatable evaluation of communication quality across networks, including Cloud and virtual environments. It measures key network quality indicators, such as latency, jitter, throughput, and packet (frame) loss rate, in both one-way and round-trip directions. This software can accurately evaluate end-to-end (E2E) communication quality even in virtual environments where hardware test instruments cannot be installed.
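The indicators listed above can all be derived from per-packet timestamps. The sketch below is a generic illustration, not Anritsu's method: it assumes each probe packet carries a sequence number and a send timestamp, that sender and receiver clocks are synchronized (one-way latency is only meaningful with synchronized clocks), and it uses the RFC 3550 interarrival jitter estimator as one common jitter definition. The record fields and function names are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class ProbeRecord:
    seq: int
    sent_s: float       # sender timestamp in seconds
    received_s: float   # receiver timestamp in seconds (same clock domain assumed)
    size_bytes: int

def summarize(records: list[ProbeRecord], packets_sent: int) -> dict[str, float]:
    """One-way latency, jitter, throughput and loss for one probe stream."""
    records = sorted(records, key=lambda r: r.received_s)   # order of arrival
    delays = [r.received_s - r.sent_s for r in records]
    jitter = 0.0
    for prev, cur in zip(delays, delays[1:]):
        # RFC 3550 section 6.4.1: J += (|D(i-1, i)| - J) / 16
        jitter += (abs(cur - prev) - jitter) / 16.0
    # Approximate throughput: all received bits over the first-to-last arrival span
    duration = records[-1].received_s - records[0].received_s
    bits = 8 * sum(r.size_bytes for r in records)
    return {
        "mean_latency_ms": 1e3 * sum(delays) / len(delays),
        "jitter_ms": 1e3 * jitter,
        "throughput_mbps": bits / duration / 1e6 if duration > 0 else 0.0,
        "loss_rate": 1.0 - len(records) / packets_sent,
    }
```

Round-trip figures follow the same pattern with reflected packets, and do not need synchronized clocks because both timestamps come from the same probe.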

Moreover, adding the Network Master Pro MT1000A/MT1040A test hardware on the cellular side of the network supports consistent quality evaluation from the core and Cloud to field-deployed devices.

Anritsu has developed this solution operating on Amazon Web Services (AWS) to accurately and reproducibly evaluate end-to-end (E2E) quality under realistic operating conditions even in virtual environments.

The Virtual Network Master for AWS (MX109030PC) is a software-based solution to accurately evaluate network communication quality in Cloud and virtual environments. Deploying software probes running on AWS across Cloud, data center, and virtual networks enables precise communication quality assessment, even in environments where hardware test instruments cannot be located.

The post Anritsu Launches Virtual Network Measurement Solution to Evaluate Communication Quality in Cloud and Virtual Environments appeared first on ELE Times.

Rohde & Schwarz enables MediaTek’s 6G waveform verification with CMP180 radio communication tester

Rohde & Schwarz announced that MediaTek is utilizing the CMP180 radio communication tester to test and verify TC-DFT-s-OFDM, a proposed waveform technology for 6G networks. This collaboration demonstrates the critical role of advanced test equipment in developing foundational technologies for next-generation wireless communications.

TC-DFT-s-OFDM (Trellis Coded Discrete Fourier Transform spread Orthogonal Frequency Division Multiplexing) is being proposed to 3GPP as a potential candidate technology for 6G standardization. MediaTek’s research shows that TC-DFT-s-OFDM delivers superior Maximum Coupling Loss (MCL) performance across various modulation orders, including advanced configurations like 16QAM.

Key benefits of this 6G waveform proposed by MediaTek include enhanced cell coverage through reduced power back-off requirements and improved power efficiency through optimized power amplifier operation techniques such as Average Power Tracking (APT). TC-DFT-s-OFDM enables up to 4dB higher transmission power compared to traditional modulation schemes while maintaining lower interference levels, implying up to 50% gain in coverage area.
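How a few decibels of extra transmit power turn into coverage area depends on how quickly the signal decays with distance. As a rough check, assume a log-distance path-loss model in which path loss grows as 10·n·log10(d): the cell radius then scales by 10^(ΔP/(10n)) and the area by the square of that. The path-loss exponent n is an assumption here, not a figure from MediaTek, and the function name is hypothetical.

```python
def coverage_area_gain(delta_p_db: float, path_loss_exponent: float) -> float:
    """Fractional increase in cell area for a link-budget gain of delta_p_db.

    Log-distance model: PL(d) = PL(d0) + 10 * n * log10(d / d0), so an extra
    margin of delta_p_db extends the radius by 10 ** (delta_p_db / (10 * n)).
    Area scales with the square of the radius.
    """
    radius_ratio = 10 ** (delta_p_db / (10 * path_loss_exponent))
    return radius_ratio ** 2 - 1.0

for n in (3.0, 3.5, 4.0, 4.5):
    print(f"n = {n}: area gain = {coverage_area_gain(4.0, n):.0%}")
```

For 4 dB this gives roughly 85%, 69%, 58% and 51% for n = 3.0, 3.5, 4.0 and 4.5 respectively, so the quoted figure of up to 50% is consistent with a fairly steep path-loss exponent of around 4.5; the actual gain depends on the propagation environment assumed in MediaTek's evaluation.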

“MediaTek’s selection of our CMP180 for their 6G waveform verification work demonstrates the instrument’s capability to support cutting-edge research and development,” said Fernando Schmitt, Product Manager, Rohde & Schwarz. “As the industry advances toward 6G, we’re committed to providing test solutions that enable our customers to push the boundaries of wireless technology.”

The collaboration will be showcased at this year’s Brooklyn 6G Summit, November 5-7, highlighting industry progress toward defining technical specifications for future wireless communications. As TC-DFT-s-OFDM advances through the 3GPP standardization process, rigorous testing using advanced equipment becomes increasingly critical.

The CMP180 radio communication tester is part of the comprehensive test and measurement portfolio from Rohde & Schwarz designed to support wireless technology development from research through commercial deployment.

The post Rohde & Schwarz enables MediaTek’s 6G waveform verification with CMP180 radio communication tester appeared first on ELE Times.

STMicroelectronics powers 48V mild-hybrid efficiency with flexible automotive 8-channel gate driver

The L98GD8 driver from STMicroelectronics has eight fully configurable channels for driving MOSFETs in flexible high-side and low-side configurations. It can operate from a 58V supply and provides rich diagnostics and protection for safety and reliability.

The 48V power net lets car makers increase the capabilities of mild-hybrid systems, including integrated starter-generators, extending electric-drive modes and enhancing energy recovery to meet stringent new, globally harmonized vehicle-emission tests. Powering additional large loads at 48V, such as the e-compressor, pumps, fans, and valves, further raises the overall electrical efficiency and lowers the vehicle weight.

ST’s L98GD8 assists the transition, as an integrated solution optimized for driving the gates of NMOS or PMOS FETs in 48V-powered systems. With eight independent, configurable outputs, a single driver IC controls MOSFETs connected as individual power switches or as high-side and low-side switches in up to two H-bridges for DC-motor driving. It can also provide peak-and-hold control for electrically operated valves. The gate current is programmable, helping engineers minimize MOSFET switching noise to meet electromagnetic compatibility (EMC) regulations.
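Why a programmable gate current helps with EMC follows from a first-order estimate: during the Miller plateau, the drain voltage swings at a rate set by the gate current and the MOSFET's gate-drain charge, so t_sw ≈ Q_gd / I_g and dV/dt ≈ V_swing / t_sw. The sketch below uses hypothetical values (Q_gd = 10 nC, a 48 V swing, three example gate currents) that are not from the L98GD8 datasheet, and it ignores gate resistance, plateau-voltage effects and parasitic inductance.

```python
def miller_switching(v_swing: float, q_gd: float, i_gate: float) -> tuple[float, float]:
    """First-order Miller-plateau estimate of a MOSFET switching edge.

    The gate current moves Q_gd while the drain swings by v_swing, so
    t_sw ~= Q_gd / I_g and dV/dt ~= v_swing / t_sw.
    Returns (switching time in s, slew rate in V/s).
    """
    t_sw = q_gd / i_gate
    return t_sw, v_swing / t_sw

V_BUS = 48.0     # drain swing on a 48V board net
Q_GD = 10e-9     # hypothetical gate-drain charge of the external MOSFET (10 nC)

for i_gate_ma in (5, 20, 100):   # hypothetical gate-current settings
    t_sw, slew = miller_switching(V_BUS, Q_GD, i_gate_ma * 1e-3)
    print(f"I_g = {i_gate_ma:3d} mA -> t_sw = {t_sw * 1e9:6.0f} ns, "
          f"dV/dt = {slew / 1e6:5.1f} V/us")
```

Going from 100 mA to 5 mA stretches the edge from about 100 ns to about 2 µs and cuts the slew rate from roughly 480 V/µs to 24 V/µs. Slower edges radiate less high-frequency energy but dissipate more switching loss, which is why being able to program the gate current is useful for tuning that trade-off.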

Automotive-qualified and designed to meet the industry’s high safety and reliability demands, the L98GD8 has per-channel diagnostics for short-circuit to battery, open-load, and short-to-ground faults. Further diagnostic features include logic built-in self-test (BIST), over-/under-voltage monitoring with hardware self-check (HWSC), and a configurable communication check (CC) watchdog timer.

In addition, overcurrent sensing allows many flexible configurations, while the ability to monitor the drain-source voltage of external MOSFETs and the voltage across an external shunt resistor helps further enhance system reliability. There is also an ultrafast overcurrent shutdown with dual-redundant failsafe pins, battery undervoltage monitoring, an ADC for battery and die temperature monitoring, and H-bridge current limiting.
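Both current-sensing methods mentioned above reduce to Ohm's law: the drain-source voltage of a fully enhanced MOSFET is roughly I·R_DS(on), and the voltage across a shunt is I·R_shunt. The sketch below shows the comparison a driver performs, assuming hypothetical resistances (2 mΩ and 1 mΩ) and a hypothetical 30 A limit; the function names are illustrative, real thresholds are configured in the device, and R_DS(on) varies strongly with temperature.

```python
def current_from_vds(v_ds: float, r_ds_on: float) -> float:
    """Estimate drain current from the measured drain-source voltage."""
    return v_ds / r_ds_on

def current_from_shunt(v_shunt: float, r_shunt: float) -> float:
    """Estimate current from the voltage across a sense resistor."""
    return v_shunt / r_shunt

def overcurrent(v_ds: float, v_shunt: float, r_ds_on: float = 2e-3,
                r_shunt: float = 1e-3, limit_a: float = 30.0) -> bool:
    """Flag a fault if either sensing path reports current above the limit."""
    return (current_from_vds(v_ds, r_ds_on) > limit_a
            or current_from_shunt(v_shunt, r_shunt) > limit_a)

print(overcurrent(0.04, 0.02))   # both paths read 20 A: no fault
print(overcurrent(0.08, 0.02))   # V_DS path reads 40 A: fault
```

Checking both paths adds a measure of redundancy: V_DS sensing needs no extra component but is imprecise, while a shunt is more accurate at the cost of an extra part and some power loss.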

The L98GD8 is in production now, in a 10mm x 10mm TQFP64 package, with budgetary pricing starting at $3.94 for orders of 1000 pieces.

The post STMicroelectronics powers 48V mild-hybrid efficiency with flexible automotive 8-channel gate driver appeared first on ELE Times.

Keysight Advances Quantum Engineering with New System-Level Simulation Solution

Keysight Technologies announced the release of Quantum System Analysis, a breakthrough Electronic Design Automation (EDA) solution that enables quantum engineers to simulate and optimize quantum systems at the system level. This new capability marks a significant expansion of Keysight’s Quantum EDA portfolio, which includes Quantum Layout, QuantumPro EM, and Quantum Circuit Simulation. This announcement comes at a pivotal moment for the field, especially following the 2025 Nobel Prize in Physics, which recognized advances in superconducting quantum circuits, a core area of focus for Keysight’s new solution.

Quantum System Analysis empowers researchers to simulate the quantum workflow, from initial design stages to system-level experiments, reducing reliance on costly cryogenic testing and accelerating time-to-validation. This integrated approach supports simulations of quantum experiments and includes tools to optimize dilution fridge input lines for thermal noise and qubit temperature estimation.
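The input-line thermal-noise problem can be estimated by hand. Each attenuator with linear attenuation A at temperature T passes a fraction 1/A of the incoming thermal photons and adds (1 - 1/A)·n_th(T) of its own, where n_th is the Bose-Einstein occupation at the qubit frequency. The sketch below chains that relation through a hypothetical 6 GHz line with 20 dB at each of three stages (4 K, 100 mK, 15 mK); these values are illustrative assumptions, not a Keysight model, and the effective temperature is obtained by inverting the Bose-Einstein formula.

```python
import math

H = 6.62607015e-34    # Planck constant, J*s
KB = 1.380649e-23     # Boltzmann constant, J/K

def n_thermal(freq_hz: float, temp_k: float) -> float:
    """Bose-Einstein mean photon number of a mode at temperature temp_k."""
    return 1.0 / math.expm1(H * freq_hz / (KB * temp_k))

def effective_temperature(freq_hz: float, n: float) -> float:
    """Temperature whose thermal occupation equals n (inverse of n_thermal)."""
    return H * freq_hz / (KB * math.log1p(1.0 / n))

def propagate(freq_hz: float, t_source_k: float,
              stages: list[tuple[float, float]]) -> float:
    """Photon number after a chain of (attenuation_dB, stage_temperature_K)."""
    n = n_thermal(freq_hz, t_source_k)
    for atten_db, temp_k in stages:
        a = 10 ** (atten_db / 10)            # linear attenuation factor, >= 1
        n = n / a + (1 - 1 / a) * n_thermal(freq_hz, temp_k)
    return n

F = 6e9                                          # hypothetical qubit frequency
STAGES = [(20, 4.0), (20, 0.1), (20, 0.015)]     # hypothetical attenuator chain
n_out = propagate(F, 300.0, STAGES)
print(f"n_out = {n_out:.2e} photons, "
      f"T_eff = {effective_temperature(F, n_out) * 1e3:.1f} mK")
```

With these numbers the line still delivers a few thousandths of a thermal photon, an effective temperature of roughly 50 mK, well above the 15 mK stage. That is the kind of result that prompts designers to add or redistribute attenuation.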

Quantum System Analysis introduces two transformative features:

- Time Dynamics Simulator: Models the time evolution of quantum systems using Hamiltonians derived from electromagnetic or circuit simulations. This enables accurate simulation of quantum experiments such as Rabi and Ramsey pulsing, helping researchers understand qubit behavior over time.

- Dilution Fridge Input Line Designer: Allows precise modeling of cryostat input lines to qubits, enabling thermal noise analysis and effective qubit temperature estimation. By simulating the fridge’s input architecture, engineers can minimize thermal photon leakage and improve system fidelity.
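A Rabi experiment is the simplest case of the time-dynamics simulation described above. The sketch below is not Keysight's simulator: it assumes an ideal two-level qubit driven on resonance, written in the rotating frame under the rotating-wave approximation, so the Hamiltonian is H = (ħΩ/2)σx with a hypothetical Rabi frequency Ω/2π = 10 MHz. It evolves the state by exponentiating H through NumPy's Hermitian eigendecomposition and checks the result against the analytic Rabi formula.

```python
import numpy as np

SIGMA_X = np.array([[0, 1], [1, 0]], dtype=complex)

def evolve(hamiltonian: np.ndarray, psi0: np.ndarray, t: float) -> np.ndarray:
    """psi(t) = exp(-i H t) psi0 with hbar = 1, using H = V diag(E) V^dagger."""
    energies, vecs = np.linalg.eigh(hamiltonian)
    u = vecs @ np.diag(np.exp(-1j * energies * t)) @ vecs.conj().T
    return u @ psi0

def rabi_populations(omega: float, times: np.ndarray) -> np.ndarray:
    """Excited-state population versus pulse length for a resonant drive."""
    h = 0.5 * omega * SIGMA_X                 # rotating frame, RWA, hbar = 1
    ground = np.array([1, 0], dtype=complex)
    return np.array([abs(evolve(h, ground, t)[1]) ** 2 for t in times])

OMEGA = 2 * np.pi * 10e6                      # hypothetical Rabi frequency, rad/s
times = np.linspace(0, 200e-9, 201)           # pulse lengths from 0 to 200 ns
p_excited = rabi_populations(OMEGA, times)

# Analytic check: P_e(t) = sin^2(Omega * t / 2); a pi pulse lasts pi / Omega = 50 ns
assert np.allclose(p_excited, np.sin(OMEGA * times / 2) ** 2)
```

In a full system-level simulation the Hamiltonian would come from the electromagnetic or circuit model of the device and would include detuning, higher transmon levels and decoherence, which is where numerical time evolution becomes necessary.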

Chris Mueth, Senior Director for New Markets at Keysight, said: “Quantum System Analysis marks the completion of a truly unified quantum design workflow, seamlessly connecting electromagnetic and circuit-level modeling with comprehensive system-level simulation. By bridging these domains, it eliminates the need for fragmented toolchains and repeated cryogenic testing, enabling faster innovation and greater confidence in quantum system development.”

Mohamed Hassan, Quantum Solutions Planning Lead at Keysight, said: “Quantum System Analysis is a leap forward in accelerating quantum innovation. By shifting left with simulation, we reduce the need for repeated cryogenic experiments and empower researchers to validate system-level designs earlier in the development cycle.”

Quantum System Analysis is available as part of Keysight’s Advanced Design System (ADS) 2026 platform and complements existing quantum EDA solutions. It supports superconducting qubit platforms and is extensible to other modalities such as spin qubits, making it a versatile choice for quantum R&D teams.

The post Keysight Advances Quantum Engineering with New System-Level Simulation Solution appeared first on ELE Times.

Infineon’s new MOTIX system-on-chip family for motor control enables compact and cost-efficient designs

Infineon Technologies is expanding its MOTIX 32-bit motor control SoC (System-on-chip) family with new solutions for both brushed (BDC) and brushless (BLDC) motor applications: TLE994x and TLE995x. The new products are tailored for small- to medium-sized automotive motors, ranging from functions such as battery cooling in electric vehicles to comfort features such as seat adjustment. The number of such motors continues to grow in modern, especially electric, vehicles and they are used in an increasing number of safety-critical applications.

Therefore, car manufacturers require reliable, compact and cost-effective solutions that integrate multiple functions. Based on Infineon’s extensive experience in motor control, the new SoCs combine advanced integration with functional safety and cybersecurity-relevant features.

The three-phase TLE995x (BLDC) is ideal for pumps and fans in thermal management systems, while the two-phase TLE994x (BDC) targets comfort functions such as electric seats and power windows. Both devices integrate advanced diagnostic and protection functions that support reliable motor operation.

By combining a gate driver, microcontroller, communication interface, and power supply in a single chip, Infineon’s SoCs offer exceptional functionality with minimal footprint. The new LIN-based devices feature an Arm Cortex-M23 core running up to 40 MHz, with integrated flash and RAM. Field-Oriented Control (FOC) capability ensures efficient and precise motor operation. Compared to the established TLE986x/7x family, the TLE994x/5x offers enhanced peripherals, flexible PWM generation via the CCU7, and automatic LIN message handling to reduce CPU load. All devices comply with ISO 26262 (ASIL B) for functional safety. Additionally, the integrated Arm TrustZone technology provides a foundation for improved system security.
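At the core of field-oriented control are two coordinate transforms that turn the three measured phase currents into a direct-axis (flux-producing) and a quadrature-axis (torque-producing) component, which the controller can then regulate like DC quantities. The sketch below shows the amplitude-invariant Clarke and Park transforms in Python for readability; on these devices such code would typically run as firmware on the Cortex-M23, and the function names here are illustrative, not part of any Infineon library. It assumes balanced phases (i_a + i_b + i_c = 0) and a known rotor angle.

```python
import math

def clarke(i_a: float, i_b: float) -> tuple[float, float]:
    """Amplitude-invariant Clarke transform; assumes i_a + i_b + i_c = 0."""
    i_alpha = i_a
    i_beta = (i_a + 2.0 * i_b) / math.sqrt(3.0)
    return i_alpha, i_beta

def park(i_alpha: float, i_beta: float, theta: float) -> tuple[float, float]:
    """Rotate stationary-frame (alpha, beta) currents into the rotor (d, q) frame."""
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    i_d = i_alpha * cos_t + i_beta * sin_t
    i_q = -i_alpha * sin_t + i_beta * cos_t
    return i_d, i_q

# Balanced three-phase currents of amplitude 2 A with the current vector at angle phi
amp, phi = 2.0, math.radians(30)
i_a = amp * math.cos(phi)
i_b = amp * math.cos(phi - 2 * math.pi / 3)

# With the rotor d axis at phi - 90 degrees, the whole current lies on the q axis
i_d, i_q = park(*clarke(i_a, i_b), theta=phi - math.pi / 2)
print(f"i_d = {i_d:.3f} A, i_q = {i_q:.3f} A")
```

In a typical FOC loop, PI controllers then drive i_d toward zero and i_q toward the torque demand, and inverse transforms convert their outputs back into PWM duty cycles for the three phases.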

The post Infineon’s new MOTIX system-on-chip family for motor control enables compact and cost-efficient designs appeared first on ELE Times.

India’s Battery Manufacturing Capacity Projected to Reach 100 GWh by Next Year: Experts

India’s battery manufacturing capacity stands at nearly 60 GWh and is projected to reach 100 GWh by next year, said Mr. Nikhil Arora, Director, Encore Systems.

Arora said that with automation efficiencies crossing 95% and advanced six-axis robots handling 625Ah, 12kg cells, the company is driving large-scale localization in the energy storage value chain. He added that its sodium-based cell technologies, which are safer, highly recyclable and ideal for grid-scale storage, reflect India’s growing self-reliance in clean energy.

“Collaborations with IIT Roorkee, NIT Hamirpur and local automation partners are accelerating innovation and technology transfer. As storage costs fall from ₹1.77 to ₹1.2 per unit in five years, India is set to achieve cost parity between solar and storage, advancing its journey toward energy independence,” Arora said while speaking at the 18th Renewable Energy India Expo in Greater Noida.
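The storage cost figures quoted above imply a steady annual decline. As a quick worked check, assuming a constant compound rate over the five years (the speaker did not say how the decline is distributed), a fall from ₹1.77 to ₹1.2 per unit is about 32% overall, or roughly 7.5% per year. The function name below is illustrative.

```python
def annual_change(start: float, end: float, years: float) -> float:
    """Compound annual rate of change (negative for a decline)."""
    return (end / start) ** (1.0 / years) - 1.0

start, end, years = 1.77, 1.2, 5      # INR per unit, from the quote above
total = end / start - 1.0
rate = annual_change(start, end, years)
print(f"total change: {total:.1%}, compound annual change: {rate:.1%}")
```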

Speaking at the event, Mr. Ankit Dalmia, Partner, Boston Consulting Group, said “India’s next five years will be shaped by advances in battery storage, digitalization, and green hydrogen. New emerging chemistries such as LFP, sodium-ion and solid-state batteries could cut storage costs by up to 40% by 2030, enabling 24×7 renewable power. AI-driven grid management and smart manufacturing are improving reliability and reducing system costs by nearly 20%. The National Green Hydrogen Mission, targeting 5 million tonnes of production annually by 2030, is positioning India to capture about 10% of global green-hydrogen capacity.”

“With the right policy support, manufacturing scale-up and global partnerships, India can become a resilient, low-cost hub for clean energy and battery innovation. India’s clean-energy ecosystem represents a US$200–250 billion investment opportunity this decade, with targets of 500 GW of renewables and 200 GWh of storage by 2030. Investors are focusing on hybrid RE + storage, grid-scale batteries, and pumped storage projects, while companies leverage AI and digital twins for smarter grid integration. Despite policy and land challenges, strong momentum and falling costs are powering rapid growth,” he further added.

Mr. Arush Gupta, CEO, OKAYA Power Private Limited, said, “OKAYA has powered over 3 million Indian households with its inverter and power backup solutions and is now accelerating its presence in solar and lithium storage. With a new ₹140 crore facility coming up in Neemrana, we’re scaling both lithium and inverter production to meet growing residential demand. Solar is projected to contribute nearly 40% of our business within the next five years, driven by initiatives like the PM Surya Ghar: Muft Bijli Yojana. As India targets 1 crore solar-powered homes, our focus is on providing efficient, digitally enabled rooftop solutions built on indigenous technology. By integrating advanced BMS and power electronics, we aim to make every Indian household energy independent and future-ready.”

Acharya Balkrishna, Head, Patanjali, said, “At Patanjali, our vision has always been to contribute to the nation’s development and people’s prosperity through Swadeshi solutions, be it in health, wellness or daily essentials. Extending the same philosophy to renewable energy, we are committed to advancing solar and battery technologies that reduce foreign dependence and make clean energy affordable for all. Solar energy, a divine and continuous source, holds the key to meeting India’s growing power needs at minimal cost. Through Swadeshi-driven innovation and collaboration, we aim to ensure that sustainable and economical solar solutions reach every household in the country.”

Mr. Inderjit Singh, Founder & Managing Director, INDYGREEN Technologies, said, “We provide customized battery solutions across L5, C&I and utility-scale BESS segments, designed to balance performance, scale and economics for Indian customers. With over 100 battery assembly lines successfully implemented, we aim to expand multifold in the next two years, targeting over 20 GWh of battery lines and 20 GW of solar PV manufacturing solutions. Leveraging IoT and AI-driven technologies, we enhance battery safety, thermal management and lifecycle efficiency while supporting OEMs and Tier 1 suppliers with advanced insulation and fire-safety systems. Additionally, we’re enabling India’s industrial lithium cell ecosystem through pilot-line infrastructure for premier institutes and labs, alongside showcasing high-efficiency solar cell lines and large-scale BESS assembly solutions at REI and The Battery Show India.”

Sharing his perspective on the co-located expos, Mr. Yogesh Mudras, Managing Director, Informa Markets in India, said, “India’s clean energy transition is accelerating faster than ever, with renewable capacity surpassing 250 GW in 2025 and a strong pipeline targeting 500 GW by 2030. The Ministry of Power has approved a ₹5,400 crore Viability Gap Funding (VGF) scheme for 30 GWh of Battery Energy Storage Systems (BESS), in addition to the 13.2 GWh already underway, which is expected to attract ₹33,000 crore in investments by 2028.”

The post India’s Battery Manufacturing Capacity Projected to Reach 100 GWh by Next Year: Experts appeared first on ELE Times.