ELE Times

Top 10 Smart Switch Startups in India

The Indian smart home market is experiencing rapid growth, with smart switches playing a pivotal role in home automation. These devices allow users to control lighting, appliances, and other electrical fixtures remotely, enhancing convenience and energy efficiency. Several Indian startups have emerged as key players in this domain, offering innovative smart switch solutions tailored to the unique needs of Indian consumers. Here are ten notable smart switch startups in India:

- Wipro Smart Home

A subsidiary of Wipro Limited, Wipro Smart Home specializes in integrated smart lighting systems, security solutions, and energy management devices. Their smart switches enable users to control home lighting remotely, schedule operations, and monitor energy consumption, all through a user-friendly mobile application. The seamless integration with other smart devices makes Wipro a prominent player in the Indian smart switch market.

- Syska

Syska is renowned for its smart lighting solutions, including smart bulbs and switches. Their smart switches are designed for easy installation and compatibility with voice assistants like Amazon Alexa and Google Assistant. Features such as remote operation, scheduling, and energy monitoring cater to the evolving needs of tech-savvy consumers.

- Oakter

Oakter offers modular smart home kits, including smart plugs and switches, that can be controlled via smartphones or voice commands. Their smart switches are designed for retrofit installations, allowing users to upgrade existing setups without extensive rewiring. The focus on affordability and user-friendly interfaces has made Oakter a popular choice among Indian homeowners.

- Cubical Labs

Cubical Labs provides automation systems for lighting, security, and energy management. Their smart switches offer features like touch-sensitive controls, remote access, and integration with other smart devices. The emphasis on scalability and customization allows users to tailor their smart home experience according to individual preferences.

- Atomberg Technologies

While primarily known for smart ceiling fans, Atomberg Technologies has ventured into smart switches that complement their energy-efficient appliances. These switches offer remote control, scheduling, and energy monitoring, aligning with the company’s commitment to sustainability and innovation.

- Silvan Innovation Labs

Silvan specializes in integrated home automation systems, including smart switches that control lighting, security, and entertainment devices. Their products feature AI-powered systems and voice recognition, providing a seamless and intuitive user experience. The focus on high-end residences and luxury hotels showcases their expertise in creating sophisticated smart home ecosystems.

- TagHaus

TagHaus offers a range of smart home devices, including smart plugs and switches, that prioritize simplicity and affordability. Their smart switches are designed for plug-and-play installation, making it easy for users to upgrade their homes without professional assistance. Features like cloud connectivity and mobile app control enhance the convenience and appeal of their products.

- Inoho

Inoho provides retrofit smart home solutions, including smart switches that allow users to control appliances remotely. Their modular design and affordability make it accessible for homeowners looking to upgrade their existing electrical systems without significant modifications.

- Leccy & Genesis

Leccy & Genesis focuses on centralized control systems, offering smart switchboards that manage various appliances simultaneously. Their products are designed to be dependable and user-friendly, providing homeowners with the ability to control lighting, fans, and other devices through a centralized interface.

- Jasmine Smart Homes

Jasmine Smart Homes offers Wi-Fi-enabled smart switches designed specifically for Indian homes. Their products feature innovative designs, easy installation, and compatibility with popular voice assistants. The focus on combining functionality, style, and reliability has made them a trusted name in the Indian smart home market.

These startups are at the forefront of India’s smart switch industry, offering a diverse range of products that cater to various consumer needs. From energy efficiency to seamless integration with existing home systems, these companies are driving the adoption of smart home technologies across the country.

The post Top 10 Smart Switch Startups in India appeared first on ELE Times.

Top 10 Reflow Oven Manufacturers in India

India’s electronics manufacturing sector has witnessed remarkable growth, driving a rising demand for high-quality reflow ovens, which are essential for soldering surface-mount components onto printed circuit boards (PCBs). Several Indian companies have emerged as key players in this industry, offering advanced reflow oven solutions tailored to domestic and international requirements. Below is an overview of the top 10 reflow oven manufacturers in India, highlighting their technological advancements and industry contributions.

- Heller India

Heller India, a subsidiary of Heller Industries, is known for its cutting-edge reflow soldering ovens. Their product range includes convection reflow ovens, voidless/vacuum reflow soldering ovens, and fluxless/formic reflow soldering ovens, as well as curing ovens. These solutions cater to applications such as surface-mount technology (SMT) reflow, semiconductor packaging, consumer electronics assembly, and power device packaging. Heller India’s products emphasize efficiency and sustainability, featuring Industry 4.0 compatibility, low-height top shells, and innovative flux management systems.

- Leaptech Corporation

Headquartered in Mumbai, Leaptech Corporation provides a diverse range of SMT equipment, including high-performance reflow soldering ovens. They are authorized distributors of ITW EAE Vitronics Soltec’s Centurion Reflow Ovens, which offer exceptional reliability and precise thermal performance. The Centurion platform includes forced-convection SMT reflow systems with closed-loop process control, ideal for high-throughput PCB assembly environments. Leaptech also supplies Tangteck reflow ovens, including IR & hot air SMT reflow furnaces, BGA soldering reflow furnaces, and curing furnaces tailored to different production needs.

- EMS Technologies

EMS Technologies, located in Bangalore, specializes in manufacturing reflow ovens and other SMT equipment. Their flagship model, the Konark 1020, is a 10-zone reflow oven designed for complex PCB assemblies. It features a Windows 10-based PC interface, data logging traceability, adjustable blower speed, and PID closed-loop temperature control, ensuring superior precision in soldering operations.

- Mectronics Marketing Services

Mectronics Marketing Services, based in New Delhi, is a leading provider of electronic manufacturing equipment, including reflow soldering systems. Their EPS reflow ovens incorporate patented Horizontal Convection technology, ensuring uniform heating across the entire PCB. The company’s product lineup includes benchtop solder reflow ovens, batch ovens, automatic floor-style systems, hot plates, and vapor phase ovens, catering to various scales of electronics production.

- Sumitron Exports

Operating from New Delhi, Sumitron Exports is a key supplier of high-quality soldering solutions, including reflow ovens. Partnering with renowned international brands, they bring advanced reflow soldering technology to the Indian market, addressing the needs of modern electronics manufacturers.

- Accurex Solutions Pvt. Ltd.

Accurex Solutions Pvt. Ltd. is a leading supplier of SMT equipment, including advanced reflow soldering ovens. Their product range is designed to meet the high-performance demands of modern electronics manufacturing, ensuring precision and reliability.

- SumiLax SMT Technologies Private Limited

Based in New Delhi, SumiLax SMT Technologies offers a wide range of reflow ovens, including models such as the T960 and T980. These ovens provide efficient and reliable soldering solutions, catering to diverse SMT production requirements.

- Hamming Technology Private Limited

Located in Noida, Hamming Technology specializes in manufacturing lead-free reflow ovens. Their products feature advanced PID+SSR control modes and hot air circulation heating technology, ensuring efficient, consistent, and high-quality soldering processes.

- Shenzhen Jaguar Automation Equipment Co., Ltd.

Although headquartered in Shenzhen, China, Shenzhen Jaguar Automation Equipment Co., Ltd. has a prominent presence in the Indian market. Their reflow soldering machines, including models like the A4, are known for their high precision and efficiency in SMT production lines.

- GoldLand Electronic Technology Co., Ltd.

GoldLand Electronic Technology Co., Ltd. is another international player with a strong foothold in India, offering advanced reflow ovens suited for various SMT applications. Their products are recognized for quality and reliability in electronics manufacturing.

These companies play a crucial role in strengthening India’s electronics manufacturing ecosystem by providing innovative reflow oven solutions. Their commitment to technological advancements ensures that Indian manufacturers have access to state-of-the-art equipment, facilitating the production of high-quality electronic products.

Understanding Soft Soldering: Definition, Process, Working, Uses & Advantages

Soft soldering is a process used to join metal components by melting a filler metal, known as solder, which has a low melting point, typically below 450°C (842°F). The solder forms a bond between the surfaces without melting the base metals. It is widely used in electronics, plumbing, and jewellery making due to its ability to create strong yet flexible joints. Unlike hard soldering or welding, soft soldering does not require extremely high temperatures, making it ideal for delicate workpieces.
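The 450°C threshold and its Fahrenheit equivalent can be checked with a few lines of Python. This is only an illustrative sketch: the function names are made up for this example, and the 183°C sample value is an assumption standing in for a typical low-melting solder alloy.

```python
# Threshold quoted in the text: soft solders typically melt below 450 °C.
SOFT_SOLDER_LIMIT_C = 450.0


def celsius_to_fahrenheit(temp_c: float) -> float:
    """Convert a temperature from degrees Celsius to degrees Fahrenheit."""
    return temp_c * 9.0 / 5.0 + 32.0


def is_soft_soldering(filler_melting_point_c: float) -> bool:
    """Return True if the filler metal melts below the soft-soldering limit."""
    return filler_melting_point_c < SOFT_SOLDER_LIMIT_C


if __name__ == "__main__":
    print(celsius_to_fahrenheit(SOFT_SOLDER_LIMIT_C))  # 842.0
    # 183.0 is an assumed example melting point, not a value from the article.
    print(is_soft_soldering(183.0))  # True
```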

How Soft Soldering Works

Soft soldering involves heating the joint area to a temperature sufficient to melt the solder but not the base materials. A soldering iron, torch, or another heat source is used to bring the solder to its melting point. When melted, the solder flows into the joint by capillary action and solidifies as it cools, forming a mechanical and electrical connection. The effectiveness of this process depends on the cleanliness of the surfaces, the proper use of flux, and the choice of solder alloy.

Soft Soldering Process

The soft soldering process follows several essential steps to ensure a secure and reliable joint:

- Surface Preparation: Before soldering, the metal surfaces must be cleaned thoroughly to remove any dirt, oxidation, or grease. This can be done using abrasive pads, chemical cleaners, or flux.

- Application of Flux: Flux is a chemical agent that prevents oxidation and helps the solder adhere to the metal surfaces. It is applied to the joint area before heating.

- Heating the Joint: A soldering iron or other heat source is used to heat the metal surfaces to the required temperature. The heat must be sufficient to melt the solder but not damage the components.

- Applying Solder: Once the surfaces are heated, the solder is applied to the joint. It melts and flows into the gap between the metals through capillary action, creating a bond.

- Cooling and Solidification: After the solder has flowed into the joint, the heat source is removed, allowing the solder to cool and solidify. Proper cooling ensures a strong and durable bond.

- Cleaning the Joint: Any residual flux or oxidation by-product should be cleaned off using alcohol or other cleaning solutions to prevent corrosion or electrical issues.

Soft soldering is widely applied across various industries due to its versatility and ease of use. Some of its common applications include:

- Electronics: Soft soldering is extensively used in the electronics industry for assembling circuit boards, connecting wires, and securing components. It ensures reliable electrical conductivity while maintaining component integrity.

- Plumbing: In household plumbing, soft soldering is employed to join copper pipes, creating leak-proof connections. Lead-free solders are used to ensure safe drinking water systems.

- Jewellery Making: Soft soldering allows jewellers to join delicate metal pieces without compromising their structural integrity or aesthetic appeal.

- Automotive Industry: Automotive manufacturers use soft soldering for electrical connections in vehicles, ensuring durability and performance.

- Arts and Crafts: Artists and craftspeople use soft soldering to create decorative metalwork, stained glass, and intricate designs.

Soft soldering offers several benefits, making it a preferred method for many applications:

- Low Operating Temperature: Soft soldering requires lower temperatures compared to brazing or welding, reducing the risk of heat damage to components.

- Ease of Use: The process is simple and does not require specialized training or expensive equipment.

- Cost-Effective: Soft soldering uses affordable materials and tools, making it an economical joining method.

- Good Electrical Conductivity: The soldered joints provide excellent electrical conductivity, making it ideal for electronic circuits.

- Reversibility: If necessary, soldered joints can be easily reworked or repaired by reheating the solder.

- Compatibility with Thin Materials: Soft soldering is suitable for delicate and thin metals that might be damaged by higher-temperature processes.

Despite its advantages, soft soldering has some limitations that must be considered:

- Lower Strength: Soft soldered joints are not as strong as brazed or welded joints, making them unsuitable for high-load applications.

- Limited Temperature Resistance: The low melting point of soft solder means that joints can weaken or fail at high temperatures.

- Flux Residue Issues: If not cleaned properly, residual flux can lead to corrosion or contamination in sensitive applications.

- Potential Toxicity: Some solder materials, particularly those containing lead, can be hazardous to health and the environment, necessitating the use of lead-free alternatives.

Soft soldering remains an essential technique in various industries, offering a balance of affordability, ease of use, and effectiveness. While it has certain limitations, its advantages make it a preferred method for applications requiring electrical conductivity, delicate metalwork, and low-temperature bonding. By understanding the process, applications, and considerations, professionals and hobbyists alike can make the most of soft soldering in their projects.

Global manufacturers welcome smart manufacturing and PCB technology platform linking Southeast Asian industrial supply chain

Intelligent Asia Thailand and Automation Thailand open tomorrow at the Bangkok International Trade & Exhibition Centre (BITEC). Running through 8 March 2025, this platform will unite 300 international and domestic leaders in printed circuit board (PCB) technologies, industrial control, assembly, power electronics, CNC machinery, factory automation and more across 10,000 square meters of exhibition space, creating a comprehensive network that connects Southeast Asia’s industrial supply chain.

Addressing Thailand’s thriving electronics and semiconductor industries, Intelligent Asia Thailand brings together a wide range of smart electronic manufacturing technologies, PCB technologies, and other advanced component production and assembly solutions.

As part of the PCB segment, the exhibition demonstrates end-to-end capabilities across manufacturing, automation, testing, and materials. On display are HDI/IC carrier boards, flexible and rigid PCBs, smart automation equipment, and advanced testing solutions, alongside non-destructive inspection systems, process equipment, electroplating technology, and handling systems. Leading software providers complement these technologies, linking manufacturers, suppliers, and other key supply chain players throughout Southeast Asia.

Within the platform, Automation Thailand focuses on comprehensive automation solutions, uniting regional manufacturers with automation experts and system integrators. Visitors can explore essential technologies for fully digitised factories, from inspection systems and digital platforms to automated handling equipment and smart factory robotics.

Expert sessions across three technical stages

Supporting industry professionals from technology selection to implementation, three concurrent knowledge-sharing events will accompany the exhibitions, bridging upstream and midstream segments through a combination of technical presentations, live demonstrations, and expert discussions.

Running over the course of two days, the PCB Stage will explore collaboration opportunities between the electronics manufacturing and PCB industries. Industry experts and panel discussions will address topics including PCB and packaging substrate innovations in Southeast Asia, smart manufacturing and green factory initiatives. Further areas of focus include investment prospects in Thailand’s industrial estates, including tax, licensing and regulatory considerations, as well as collaboration challenges and opportunities within the global PCB industry.

At the Tech Stage, exhibitors will present a range of advanced solutions spanning automation, robotics, energy and sustainability, motion technology, digital systems, warehouse operations, sensor technology, and Industrial IoT. These sessions will cover key developments in smart manufacturing and industrial safety, connecting attendees with solutions from leading manufacturers.

Drawing expertise from global Smart Production Solutions (SPS) events, SPS Stage Bangkok brings international automation insights to Thailand’s manufacturing sector. Industry leaders including Beckhoff Automation, Bender GmbH, Phoenix Contact (Thailand), and Pilz South East Asia (Thailand) will present strategies for modernising legacy factories, improving operational resilience, implementing AI in manufacturing and logistics, and achieving IT/OT convergence.

Complementing the stage, the SPS Demo Zone will feature advanced industrial technologies from SICK (Thailand), Wieland Electric Singapore, Belden Asia (Thailand), Balluff, Cognex, Igus, Interroll and Rittal, providing attendees with practical demonstrations of automation technologies.

Leading manufacturers to present technologies from PCB production to smart factory solutions

The platform will feature a diverse range of solutions spanning PCB manufacturing, automation and motion control, cleanroom and factory infrastructure, and more. Participating exhibitors and their exhibition highlights include:

PCB manufacturing:

- Shenzhen Han’s CNC Technology and World Wide PCB Equipments will showcase advanced production technologies, including laser drilling and direct imaging systems.

- Huizhou CEE Technology and Dongwei Technology (Thailand) will present innovations in PCB fabrication, focusing on high-density interconnect boards and copper plating equipment.

- DuPont Taiwan will feature advanced materials for PCB and IC substrate production, such as electroplating and high-speed transmission solutions.

Automation and motion control:

- Smart Motion Control will demonstrate industrial optimisation systems, including motion controllers, servo motors, and encoders.

- Delta Electronics (Thailand) will exhibit power and thermal management solutions for industrial applications.

- Nidec Advance Technology (Thailand) will showcase precision inspection technologies and EV motor testing systems.

- Schloetter Asia and Atotech (Thailand) will present surface treatment and plating technologies for high-performance manufacturing processes.

Cleanroom and factory infrastructure:

- Long Long Clean Room Technology will offer solutions for controlled environments, including cleanroom and freezer panels.

- C Sun MFG will exhibit UV processing and plasma treatment equipment, supporting high-precision manufacturing needs.

- Mega Energy (Thailand) will present power distribution solutions, including busway systems and switchgear, to ensure efficient energy management in industrial facilities.

Other advanced manufacturing solutions:

- Aresplus will demonstrate high-precision moulding and simulation software.

- Store Master will showcase solutions for logistics and storage optimisation.

- Johnsolar Energy will present sustainable energy solutions, including solar power systems for industrial use.

- Sagami Shoko (Thailand), Raas Pal (Thailand), Thaimach Sales & Service (Thailand), and Thai Worth (Thailand) will showcase a wide range of solutions to support production efficiency, automate workflows, and improve operational performance.

Intelligent Asia Thailand, Automation Thailand and SPS Stage Bangkok are jointly organised by Messe Frankfurt (HK) Ltd Taiwan Branch, Yorkers Trade & Marketing Service Co Ltd and GMTX Company Ltd. For more details, please contact: Israel.Gogol@taiwan.messefrankfurt.com.

Top 10 Solar Power Plants in the USA

The United States has been making significant strides in renewable energy, with solar power emerging as a key player in the clean energy transition. Over the past decade, massive solar farms have been established across the country, contributing significantly to the national grid. These solar power plants not only generate sustainable electricity but also help reduce carbon emissions and promote energy independence. Below is an in-depth look at ten of the largest solar power plants in the USA, detailing their capacity, location, and impact.

1. Copper Mountain Solar Facility

- Location: Nevada

- Capacity: 802 MW (AC)

The Copper Mountain Solar Facility in Nevada is one of the largest photovoltaic (PV) solar plants in the United States. Developed in five phases, this project has continually expanded since its inception. Its large-scale capacity supplies clean energy to thousands of homes while reducing reliance on fossil fuels. The facility showcases how solar energy can be scaled up efficiently to integrate with the national electricity grid.

2. Gemini Solar Project

- Location: Nevada

- Capacity: 690 MW (AC)

The Gemini Solar Project is one of the most ambitious solar power projects in the U.S. In addition to its impressive solar power generation, it includes 380 MW of battery storage, ensuring stable energy supply even during periods of low sunlight. This hybrid solar-plus-storage system demonstrates the future of renewable energy, where energy storage plays a crucial role in grid stability and efficiency.

3. Edwards Sanborn Solar and Energy Storage Project

- Location: California

- Capacity: 864 MW (Solar) + 3,320 MWh (Battery Storage)

Located in California, the Edwards Sanborn Solar and Energy Storage Project is a groundbreaking renewable energy initiative. This facility integrates large-scale solar power generation with one of the largest battery storage capacities in the country. The battery component ensures that excess solar energy generated during the day is stored and used when needed, making it a game-changer in the renewable energy sector.
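To put the two listed figures in proportion, the short sketch below expresses the 3,320 MWh of storage as hours of the plant's 864 MW rated solar output. The function name is made up for this example, and because the battery's own power rating is not stated here, the result should not be read as the actual discharge duration.

```python
def hours_of_rated_output(storage_mwh: float, rated_power_mw: float) -> float:
    """Express stored energy as hours of output at a given rated power."""
    if rated_power_mw <= 0:
        raise ValueError("rated_power_mw must be positive")
    return storage_mwh / rated_power_mw


if __name__ == "__main__":
    # Figures from the listing above: 3,320 MWh of storage, 864 MW of solar.
    hours = hours_of_rated_output(3320.0, 864.0)
    print(f"{hours:.2f} hours")  # 3.84 hours
```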

4. Lumina I and II Solar Project

- Location: Texas

- Capacity: 828 MW

Texas is rapidly becoming a leader in solar power, and the Lumina I and II Solar Project is a testament to that growth. Expected to be completed by 2024, these twin solar farms will add 828 MW of clean energy to the state’s power grid. Texas’ solar expansion highlights how renewable energy can complement traditional power sources, especially in a state known for its oil and gas industry.

5. Mount Signal Solar

- Location: California

- Capacity: 794 MW

The Mount Signal Solar project, also known as the Imperial Valley Solar Project, has been built in multiple phases since 2014. This massive solar farm is located in the sun-drenched Imperial Valley of California, where it harnesses abundant sunlight to generate clean electricity. The project has played a critical role in California’s transition towards 100% clean energy goals.

6. Solar Star I & II

- Location: California

- Capacity: 579 MW (AC), 747 MW (DC)

When it was completed in 2015, Solar Star I & II was the largest solar power plant in the world, with a capacity of 579 MW (AC). It set new benchmarks for utility-scale solar installations and inspired the development of even larger projects. Spread across 13 square kilometers, this solar farm utilizes advanced photovoltaic technology to efficiently convert sunlight into electricity.

7. Topaz Solar Farm

- Location: California

- Capacity: 550 MW (AC)

The Topaz Solar Farm is another key solar project in California, operational since 2014. One of the pioneering utility-scale solar projects, it consists of over 9 million thin-film photovoltaic panels. This farm has been instrumental in proving the economic and environmental feasibility of large-scale solar projects in the United States.

8. Desert Sunlight Solar Farm

- Location: California

- Capacity: 550 MW (AC)

Commissioned in 2014, the Desert Sunlight Solar Farm is one of the largest solar projects in the world. It spans 3,800 acres in the Mojave Desert and consists of over 8 million solar panels. This farm contributes significantly to California’s ambitious renewable energy targets, reducing carbon emissions and supporting a cleaner energy future.

9. Ivanpah Solar Electric Generating System

- Location: California

- Capacity: 392 MW

Unlike traditional photovoltaic solar farms, the Ivanpah Solar Electric Generating System uses solar thermal technology. It employs mirrors (heliostats) to focus sunlight onto central towers, generating steam to power turbines. This innovative approach allows the plant to produce electricity even when sunlight is not directly available, making it one of the most advanced solar plants in the country.

10. Agua Caliente Solar Project

- Location: Arizona

- Capacity: 290 MW

The Agua Caliente Solar Project in Arizona is notable for utilizing thin-film cadmium telluride (CdTe) solar panels, which offer cost-effective and high-efficiency energy production. The plant generates over 626 GWh of clean energy annually, powering thousands of homes and reducing dependence on conventional power sources.
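The capacity and annual generation figures quoted for Agua Caliente imply a capacity factor, meaning the share of the theoretical maximum output that the plant actually delivers over a year. The sketch below works through that arithmetic; the function name is made up for this example, and it assumes a non-leap year of 8,760 hours.

```python
HOURS_PER_YEAR = 8760  # assumption: non-leap year


def capacity_factor(annual_energy_gwh: float, capacity_mw: float,
                    hours: int = HOURS_PER_YEAR) -> float:
    """Return actual annual energy divided by energy at full output all year."""
    if capacity_mw <= 0:
        raise ValueError("capacity_mw must be positive")
    max_energy_mwh = capacity_mw * hours      # energy if run at full power
    actual_energy_mwh = annual_energy_gwh * 1000.0  # GWh -> MWh
    return actual_energy_mwh / max_energy_mwh


if __name__ == "__main__":
    # Figures from the listing above: 626 GWh per year from 290 MW.
    cf = capacity_factor(626.0, 290.0)
    print(f"{cf:.1%}")  # 24.6%
```

A value in this range reflects the fact that a solar plant only produces near its rated power for part of each day.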

The Future of Solar Energy in the USA

The U.S. solar industry continues to grow, with large-scale projects like these playing a crucial role in the transition towards clean and renewable energy. These power plants not only contribute to reducing greenhouse gas emissions but also help in creating jobs, boosting energy security, and promoting technological advancements in solar power and battery storage.

With increasing investments in solar farms and energy storage, the United States is well on its way to achieving a sustainable and carbon-free energy future.

Top 10 Agriculture Drone Companies in India

Agriculture in India has witnessed a technological revolution, with drones playing a pivotal role in modernizing farming practices. These unmanned aerial vehicles (UAVs) assist in tasks such as crop monitoring, precision spraying, and data analysis, leading to increased efficiency and sustainability. Here are ten prominent agriculture drone companies in India contributing to this transformation:

- Garuda Aerospace

Based in Chennai, Garuda Aerospace specializes in drone solutions for various sectors, including agriculture. Their drones are designed for precision spraying, crop health monitoring, and surveillance, aiming to enhance productivity and reduce manual labor in farming.

- IoTechWorld Avigation

IoTechWorld Avigation, headquartered in Gurugram, offers innovative agricultural drones like the Agribot. This multi-rotor drone is India’s first DGCA-approved agriculture drone, used for spraying, broadcasting, and assessing soil and crop health.

- Throttle Aerospace Systems

Bangalore-based Throttle Aerospace Systems manufactures UAVs for various applications, including agriculture. Their drones assist in land mapping, surveillance, cargo delivery, inspection, and disaster management, providing versatile solutions for the farming sector.

- Aarav Unmanned Systems (AUS)

AUS, located in Bangalore, offers drone-based solutions for mining, urban planning, and agriculture. Their drones facilitate precision agriculture by providing high-resolution aerial imagery for crop health monitoring and yield estimation.

- Dhaksha Unmanned Systems

Chennai-based Dhaksha Unmanned Systems provides drones for agriculture, surveillance, and logistics. Their agricultural drones are equipped with intelligent spraying systems and real-time data analysis capabilities, enhancing farming efficiency.

- ideaForge

Headquartered in Mumbai, ideaForge is a leading manufacturer of UAVs for defense, homeland security, and industrial applications, including agriculture. Their drones offer high endurance and are used for large-scale mapping and surveillance in farming.

- General Aeronautics

Bangalore-based General Aeronautics offers the Krishak series drones for agricultural purposes. Known for their durability and efficient spraying systems, these drones are compatible with various attachments, allowing multi-functional use in diverse agricultural settings.

- Paras Aerospace

Paras Aerospace, located in Bangalore, specializes in user-friendly and affordable drones for agriculture. Their Paras Agricopter series is designed for precision spraying and crop monitoring, aiming to optimize resource utilization and increase yields.

- Johnnette Technologies

Based in Noida, Johnnette Technologies offers agricultural drone services, including crop health monitoring, precision spraying, and remote sensing. Their drones are designed to optimize agrochemical applications, reducing wastage and maximizing crop yields.

- Asteria Aerospace

Bangalore-based Asteria Aerospace provides drone-based solutions for various sectors, including agriculture. Their drones are used for crop monitoring, field mapping, and surveillance, aiding farmers in making data-driven decisions.

These companies are at the forefront of integrating drone technology into Indian agriculture, offering solutions that enhance productivity, efficiency, and sustainability. As the industry continues to evolve, these innovations are expected to play a crucial role in meeting the growing demands of modern farming.

Understanding Reflow Soldering: Definition, Process, Working, Uses & Advantages

Reflow soldering is a widely used technique in electronics manufacturing for assembling surface-mount devices (SMDs) onto printed circuit boards (PCBs). This method involves applying solder paste to the board, placing components on top of it, and then heating the assembly in a controlled manner to melt and solidify the solder. The process ensures strong and reliable solder joints, making it a preferred method for mass production in the electronics industry.

Unlike wave soldering, which is typically used for through-hole components, reflow soldering is designed for SMDs, allowing for higher component density and miniaturization of electronic circuits. The precision and efficiency of reflow soldering make it ideal for modern electronic manufacturing, where consistency and high reliability are critical.

How Reflow Soldering Works

The reflow soldering process consists of several carefully controlled stages to ensure optimal soldering results. Each stage plays a crucial role in preventing defects such as solder bridging, tombstoning, or incomplete solder joints. The main steps include:

- Solder Paste Application

The process begins with the application of solder paste, a mixture of powdered solder alloy and flux, onto the PCB. This is typically done using a stencil and a squeegee to ensure uniform deposition. The accuracy of solder paste application is critical as it determines the quality of the solder joints.

- Component Placement

Once the solder paste is applied, surface-mount components are carefully placed on the PCB using automated pick-and-place machines. These machines use vision systems to precisely position components, ensuring alignment with the solder paste deposits.

- Preheating Stage

The assembled board is then gradually heated in a reflow oven. The preheating stage raises the temperature of the PCB and components at a controlled rate to prevent thermal shock. This phase also activates the flux in the solder paste, which removes oxidation and improves wetting.

- Thermal Soaking

After preheating, the PCB enters a thermal soaking phase where the temperature is maintained at a specific range to ensure uniform heat distribution. This helps in stabilizing the components and further activating the flux.

- Reflow Zone (Peak Temperature Stage)

In this stage, the temperature reaches its peak, typically between 220°C and 250°C, depending on the solder alloy used. This is the critical moment where the solder paste melts, creating reliable electrical and mechanical connections between the components and the PCB.

- Cooling Phase

Once the solder has melted and wetted the pads and component leads, the PCB is cooled in a controlled manner so that the joints solidify. Controlled cooling prevents thermal stress and ensures the formation of strong, defect-free solder joints.
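The thermal stages above can be sketched as a piecewise-linear temperature profile. The following is a minimal illustration, not a recommended profile: the stage temperatures, durations, and ramp-rate limits are assumed values chosen for the example, and only the 220°C to 250°C peak range comes from the text. Real profiles should follow the solder paste and component datasheets.

```python
from dataclasses import dataclass


@dataclass
class Stage:
    name: str
    start_c: float     # temperature at the start of the stage, in °C
    end_c: float       # temperature at the end of the stage, in °C
    duration_s: float  # stage length in seconds

    @property
    def ramp_c_per_s(self) -> float:
        """Average heating (positive) or cooling (negative) rate."""
        return (self.end_c - self.start_c) / self.duration_s


# Hypothetical profile: all numbers here are illustrative assumptions.
PROFILE = [
    Stage("preheat", 25, 150, 90),
    Stage("soak", 150, 180, 90),
    Stage("reflow", 180, 245, 45),
    Stage("cooling", 245, 60, 75),
]


def temperature_at(profile, t_s):
    """Linearly interpolate the target temperature at time t_s (seconds)."""
    elapsed = 0.0
    for stage in profile:
        if t_s <= elapsed + stage.duration_s:
            frac = (t_s - elapsed) / stage.duration_s
            return stage.start_c + frac * (stage.end_c - stage.start_c)
        elapsed += stage.duration_s
    return profile[-1].end_c  # past the end: hold the final temperature


def check_profile(profile, peak_range=(220.0, 250.0),
                  max_heat_rate=3.0, max_cool_rate=4.0):
    """Return a list of problems; an empty list means the checks passed.

    The rate limits (in °C/s) are assumed defaults, not values from the article.
    """
    problems = []
    peak = max(max(s.start_c, s.end_c) for s in profile)
    if not peak_range[0] <= peak <= peak_range[1]:
        problems.append(f"peak {peak} °C outside {peak_range}")
    for s in profile:
        rate = s.ramp_c_per_s
        if rate > max_heat_rate:
            problems.append(f"{s.name}: heating too fast ({rate:.2f} °C/s)")
        if -rate > max_cool_rate:
            problems.append(f"{s.name}: cooling too fast ({-rate:.2f} °C/s)")
    return problems


if __name__ == "__main__":
    for t in (0, 45, 180, 225, 300):
        print(f"t={t:>3}s target={temperature_at(PROFILE, t):.1f} °C")
    print(check_profile(PROFILE) or "profile passes the assumed checks")
```

Checking ramp rates per stage mirrors the reasoning in the text: slow heating avoids thermal shock during preheating, and controlled cooling limits thermal stress on the finished joints.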

Reflow Soldering Uses & Applications

Reflow soldering is widely used in various industries, primarily in the manufacturing of electronic devices. Some key applications include:

- Consumer Electronics: Smartphones, laptops, tablets, and gaming consoles all rely on reflow soldering to ensure compact and efficient circuit assemblies.

- Automotive Electronics: Modern vehicles contain complex electronic systems, including engine control units (ECUs), infotainment systems, and safety sensors, all of which use SMD technology and reflow soldering.

- Medical Devices: High-precision medical equipment, such as diagnostic devices and portable health monitors, requires reliable soldering for seamless functionality.

- Industrial Electronics: Industrial automation, control systems, and robotics benefit from reflow soldering due to its ability to create robust and durable electronic circuits.

- Aerospace & Defense: High-reliability electronics for satellites, avionics, and defense applications depend on precise and high-quality soldering techniques like reflow soldering.

Reflow soldering offers several advantages that make it the preferred method for assembling SMD components:

- High Precision: Automated solder paste application and component placement result in accurate soldering with minimal defects.

- Consistency and Reliability: Controlled heating profiles ensure strong and uniform solder joints, reducing the chances of failure.

- Mass Production Efficiency: The process is highly automated and scalable, making it suitable for high-volume manufacturing.

- Compatibility with Small Components: Reflow soldering supports miniaturized electronics, enabling the development of compact and lightweight devices.

- Improved Aesthetics and Functionality: Because solder is deposited only where the stencil places it, reflow soldering typically leaves less excess solder than wave soldering, resulting in cleaner circuit boards.

Despite its benefits, reflow soldering also has some limitations:

- Complex Equipment Requirements: Reflow ovens and pick-and-place machines are expensive, making the initial setup costly for small manufacturers.

- Component Sensitivity: Some temperature-sensitive components may get damaged if exposed to high temperatures during the reflow process.

- Risk of Defects: Issues such as tombstoning (where small components lift on one side) or solder bridging (where excess solder creates unintended connections) can occur if process parameters are not optimized.

- Limited Use for Through-Hole Components: While hybrid techniques exist, reflow soldering is primarily designed for surface-mount devices, requiring additional methods for through-hole components.

Reflow soldering is a highly efficient and precise method for assembling surface-mount components on PCBs. With applications ranging from consumer electronics to aerospace, it remains a crucial technique in modern electronic manufacturing. While the process requires careful temperature control and specialized equipment, its benefits in terms of reliability, efficiency, and scalability make it indispensable. As technology advances, improvements in solder paste formulations and reflow oven designs continue to enhance the effectiveness of reflow soldering, ensuring its relevance in the ever-evolving electronics industry.

The post Understanding Reflow Soldering: Definition, Process, Working, Uses & Advantages appeared first on ELE Times.

Top 10 3D Printer Manufacturers in India

India’s 3D printing industry has experienced significant growth, with numerous companies emerging as key players in the field. Here’s an overview of the top 10 3D printer manufacturers in India as of 2025:

- Divide By Zero Technologies

Established in 2013 and based in Maharashtra, Divide By Zero Technologies is a prominent 3D printer manufacturer catering to small and medium enterprises. The company aims to make 3D printing affordable and reliable, providing solutions to renowned clients such as Samsung, L’Oreal, GE Healthcare, Godrej, and Mahindra. Their product lineup includes models like Accucraft i250+, Aion 500 MK2, Aeqon 400 V3, and Alpha 500. Notably, their patented Advanced Fusion Plastic Modeling (AFPM) technology ensures consistent quality through intuitive automation.

- Make3D

Founded in 2014 in Gujarat, Make3D specializes in manufacturing 3D printers and scanners. Their Pratham series caters to both hobbyists and industrial users, featuring models like Pratham Mini and Pratham 6.0. They also offer the Eka Star DLP Printer and EinScan Pro 2X scanner. Committed to enhancing the accessibility of additive manufacturing, Make3D provides products known for quality and durability, alongside educational courses on 3D printing.

- Mekuva Technologies (POD3D)

Based in Hyderabad and established in 2021, Mekuva Technologies offers a range of 3D printers, including the AKAR series (300 Pro, 600 Basic, 200 Desktop, and 200 Pro). Beyond manufacturing, they provide services like 3D design, printing, scanning, CNC machining, sheet metal operations, and injection molding, serving as a comprehensive solution provider for various industries. Their printers are compatible with materials such as PLA, ABS, and carbon fiber.

- IMIK Technologies

Operating since 2007 in Tamil Nadu, IMIK Technologies supplies a wide range of 3D printers, including their own FDM printer, IMIK 3DP, and models from brands like Creality, Flashforge, and Ultimaker. They cater to sectors such as defense, textiles, robotics, agriculture, and medicine, offering products suitable for educational, industrial, and personal use. Additionally, they provide 3D prototyping services.

- Printlay

Established in 2015 in Tamil Nadu, Printlay specializes in 3D printing and scanning services. They assist clients from prototyping to end-use parts production, ensuring precision and accuracy. Their services extend to CNC machining and injection molding, catering to diverse industries with a reputation for quick turnaround times and quality service.

- A3DXYZ

Founded in 2018 in Maharashtra, A3DXYZ offers 3D printing services, including FDM printing, stereolithography, CAD modeling, digital light processing, and vacuum casting. They serve industries such as architecture, aerospace, healthcare, and medicine, and have introduced the A3DXYZ DS200 3D printer, known for its robust design and high-quality output.

- Boson Machines

Based in Maharashtra and established in 2017, Boson Machines is a leading 3D printing manufacturer utilizing technologies like FDM, SLA, and SLS. They offer services such as part production, injection molding, and CNC machining, providing comprehensive solutions from design to production.

- 3Ding

Operating since 2013 in Tamil Nadu, 3Ding supplies a variety of 3D printers, PCB printers, printing materials, and scanners. They distribute products from brands like 3D Systems, Fab X, Voltera, Formlabs, and Creality, and offer rental options. Their services include 3D design, printing, and scanning, along with workshops and training programs. They have branches in Chennai, Bangalore, Hyderabad, and Mumbai.

- Precious 3D

Established in 2017 in Tamil Nadu, Precious 3D offers 3D design, printing, CNC machining, and injection molding services. They utilize technologies like SLS, FDM, DLP, and Polyjet, serving industries such as automotive and healthcare. Their clientele includes brands like Renault, Ford, Verizon, and Nippon Paint, and they provide consultation services for material selection.

- Imaginarium

Based in Mumbai, Imaginarium stands as India’s largest 3D printing and rapid prototyping company. They offer design validation, prototyping, batch production, and various techniques such as SLA (Stereolithography), SLS (Selective Laser Sintering), VC (Vacuum Casting), CNC machining, injection molding, and scanning. Serving industries such as jewelry, automotive, and healthcare, Imaginarium utilizes 20 industrial 3D printers and 140 different materials, making them a key player in the field.

These companies exemplify the rapid advancement and diversification of India’s 3D printing industry, offering a wide range of products and services to meet the evolving needs of various sectors.

The post Top 10 3D Printer Manufacturers in India appeared first on ELE Times.

STMicroelectronics enables unmatched edge AI performance on MCU with STM32N6

In an era where artificial intelligence (AI) is rapidly transforming edge computing, STMicroelectronics is leading the charge with its groundbreaking STM32N6 series, featuring the Neural-ART Accelerator. This next-generation microcontroller delivers 600 times more AI performance than previous STM32 MCUs, redefining real-time AI processing for embedded systems.

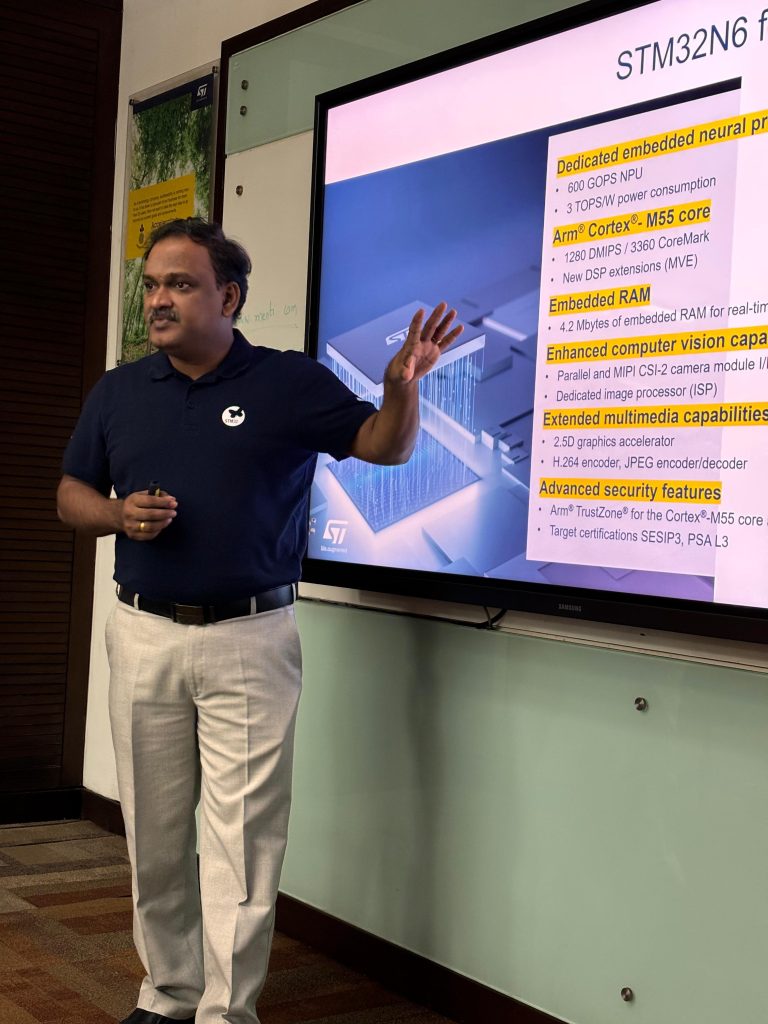

Sridhar Ethiraj, Technical Marketing – General Purpose MCU at STMicroelectronics India

In this exclusive interview, Rashi Bajpai from ELE Times sits down with Sridhar Ethiraj, Technical Marketing – General Purpose MCU at STMicroelectronics India, to explore the technological breakthroughs behind the STM32N6. We discuss the challenges of integrating high-performance AI with ultra-low power consumption, the real-world impact of AI-accelerated MCUs in industries like automotive, healthcare, and smart devices, and how ST’s Edge AI Suite is simplifying AI deployment for developers worldwide.

With industry giants like LG, Lenovo, and Alps Alpine already leveraging the STM32N6, this conversation unveils how AI-powered microcontrollers are shaping the future of intelligent embedded systems.

Excerpts:

STMicroelectronics has introduced the STM32N6 MCU with the Neural-ART Accelerator, delivering 600 times more machine-learning performance than previous high-end STM32 MCUs. What key challenges did ST overcome to integrate such advanced AI capabilities into a microcontroller while maintaining power efficiency?

Ans: STMicroelectronics faced several key challenges while integrating the Neural-ART Accelerator into the STM32N6 MCU. A major hurdle was that the high computing power needed for advanced AI features clashed with power-efficiency goals. ST tackled this by creating its own neural processing unit (NPU) to handle most AI tasks while keeping power use low. Making the system work with different AI frameworks and models also proved tough, but ST addressed this by supporting common frameworks such as TensorFlow and Keras, along with the ONNX ecosystem. ST also had to adapt the hardware to run neural networks for computer vision and audio in real time, which meant building a computer vision pipeline and dedicated image processing capabilities. In the end, all this work resulted in the STM32N6 MCU, which offers 600 times better machine-learning performance than earlier top-end STM32 MCUs, while still being very power-efficient.

With AI inference moving to the edge, how does the STM32N6 differentiate itself from other AI-enabled MCUs in terms of performance, memory, and AI software support?

Ans: The STM32N6 series redefines AI-enabled microcontrollers with its Neural-ART Accelerator, a custom neural processing unit (NPU) delivering up to 600 GOPS for real-time neural network inference. Built on the Arm Cortex-M55 core running at 800 MHz, it leverages Arm Helium vector processing to enhance DSP performance. With 4.2 MB of built-in RAM and high-speed external memory interfaces, it excels in AI and graphics-intensive applications. Backed by a comprehensive AI software ecosystem—including STM32Cube.AI, ST Edge AI Developer Cloud, and the STM32 model zoo—it simplifies model optimization and deployment. This seamless blend of hardware acceleration and software support makes the STM32N6 a powerful and scalable solution for edge AI workloads.
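To put the 600 GOPS figure in perspective, the short sketch below estimates a lower bound on inference time for a model of a given size. It is a back-of-the-envelope calculation under stated assumptions: it counts one multiply-accumulate (MAC) as two operations, which is a common convention but not one confirmed by the interview, and it treats 600 GOPS as a theoretical peak. The `utilization` parameter and the example model size are hypothetical. Real latency also depends on memory bandwidth, layer types, and quantization.

```python
PEAK_OPS_PER_S = 600e9  # 600 GOPS, the peak figure quoted in the interview


def min_inference_time_ms(macs_per_inference, utilization=1.0):
    """Estimate a best-case inference time in milliseconds.

    macs_per_inference: multiply-accumulate operations per forward pass.
    utilization: assumed fraction of peak throughput actually achieved
                 (hypothetical knob; 1.0 means the theoretical peak).
    """
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    ops = 2 * macs_per_inference  # assumption: 1 MAC = 1 multiply + 1 add
    return ops / (PEAK_OPS_PER_S * utilization) * 1e3


if __name__ == "__main__":
    model_macs = 300e6  # hypothetical vision model with 300 million MACs
    for u in (1.0, 0.5, 0.25):
        t = min_inference_time_ms(model_macs, u)
        print(f"utilization {u:.2f}: about {t:.2f} ms per inference")
```

At full utilization the hypothetical 300-million-MAC model works out to roughly 1 ms per inference, and the estimate scales inversely with the utilization actually achieved.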

The STM32N6 has been tested and adopted by industry leaders like LG, Lenovo, and Alps Alpine. Can you share some real-world applications where this MCU is making a significant impact?

Ans: The STM32N6 MCU is driving innovation across multiple industries. In automotive, it powers advanced driver assistance systems (ADAS) by enabling real-time processing of camera and sensor inputs for collision avoidance and lane-keeping. In smart home technology, companies like LG and Lenovo use it in security cameras and smart doorbells, leveraging its AI capabilities for motion detection and facial recognition. In healthcare, it enhances medical devices by accurately processing patient data for diagnostics and continuous monitoring. These real-world applications highlight how the STM32N6 delivers high-performance AI processing, improving safety, efficiency, and user experience across industries.

AI at the edge is evolving rapidly, particularly in vision, audio processing, and sensor-based analytics. How does ST’s Edge AI Suite support developers in optimizing AI models for the STM32N6, and what industries stand to benefit the most from this innovation?

Ans: ST's Edge AI Suite gives developers three main tools for optimizing AI models for the STM32N6: STM32Cube.AI, the ST Edge AI Developer Cloud, and the STM32 model zoo. Together they optimize model deployment on the STM32N6's Neural-ART Accelerator and support the entire process from data preparation to code generation. The STM32N6 has the potential to transform multiple sectors, including automotive manufacturing and components, consumer electronics, healthcare, and industrial automation. In automotive applications it enables advanced driver assistance systems (ADAS), while in healthcare products it provides real-time data analysis capabilities. With the suite, developers can build effective edge AI solutions across a wide range of applications.

Looking ahead, how does STMicroelectronics envision the future of AI-accelerated microcontrollers, and what role will the STM32N6 series play in shaping next-generation embedded systems?

Ans: STMicroelectronics envisions a future where AI-accelerated microcontrollers like the STM32N6 series play a pivotal role in edge computing, enabling smarter, more efficient, and autonomous embedded systems. The STM32N6, with its Neural-ART Accelerator, is designed to handle complex AI tasks such as computer vision and audio processing with exceptional efficiency. Its energy-optimized AI processing will drive advancements across automotive, healthcare, consumer electronics, and industrial automation, enabling real-time data analysis, improved user experiences, and enhanced operational efficiency. As AI at the edge continues to evolve, the STM32N6 series will be instrumental in shaping intelligent, responsive, and secure next-generation embedded systems.

Sources:

STMicroelectronics to boost AI at the edge with new NPU-accelerated STM32 microcontrollers – ST News

STM32N6 series – STMicroelectronics

STM32N6-AI – AI software ecosystem for STM32N6 with Neural-ART accelerator – STMicroelectronics

Microcontrollers-stm32n6-series-overview.pdf

The post STMicroelectronics enables unmatched edge AI performance on MCU with STM32N6 appeared first on ELE Times.

Top 10 Industrial Robot Manufacturers in India

India’s industrial landscape is undergoing a significant transformation, with robotics playing a pivotal role in enhancing manufacturing efficiency, precision, and productivity. Several Indian companies have emerged as leaders in industrial robotics, offering innovative solutions tailored to various sectors. Here are ten notable industrial robot manufacturers in India:

- DiFACTO Robotics

Headquartered in Bengaluru, DiFACTO Robotics is a prominent provider of robotic solutions for the manufacturing sector. Their expertise encompasses conceptualizing, manufacturing, and implementing advanced factory automation systems. Serving industries such as automotive, metal forming, transportation, energy, electronics, and consumer goods, DiFACTO has established itself as a key player in India’s industrial automation landscape.

- Hi-Tech Robotic Systemz

Hi-Tech Robotic Systemz specializes in autonomous mobile robots and automated guided vehicles (AGVs). Their solutions cater to sectors like automotive, warehousing, and defense, focusing on enhancing operational efficiency and safety. With a strong emphasis on research and development, Hi-Tech Robotic Systemz continues to innovate in the field of industrial robotics.

- Gridbots

Based in Ahmedabad, Gridbots is known for developing robots and artificial intelligence systems for industrial applications. Their product portfolio includes robotic arms, vision systems, and automation solutions designed to optimize manufacturing processes. Gridbots’ commitment to innovation has positioned them as a significant contributor to India’s robotics industry.

- Systemantics

Systemantics focuses on designing and manufacturing industrial robots that are cost-effective and easy to deploy. Their flagship product, the ASYSTR 600, is a six-axis industrial robot designed for tasks such as material handling, welding, and assembly. By addressing the challenges of automation adoption, Systemantics aims to make robotics accessible to a broader range of industries.

- Invento Robotics

Invento Robotics specializes in service robots with applications in hospitality, healthcare, and retail. Their robots, such as Mitra, have been deployed in various settings to assist with customer engagement and service delivery. While their primary focus is on service robotics, their technological advancements contribute to the broader robotics ecosystem in India.

- Addverb Technologies

Addverb Technologies is a leading provider of automation solutions for warehouses and distribution centers. Their robotics offerings include autonomous mobile robots (AMRs), sorting robots, and pallet shuttles, designed to streamline intralogistics operations. By integrating robotics with advanced software systems, Addverb enhances efficiency and accuracy in supply chain processes.

- CynLr

CynLr (Cybernetics Laboratory) focuses on developing vision systems and robotic solutions that enable machines to handle objects with human-like dexterity. Their technology addresses complex manufacturing challenges, particularly in tasks requiring precision and adaptability. CynLr’s innovations are paving the way for more versatile industrial automation.

- PARI (Precision Automation and Robotics India)

Founded in 1991 and headquartered in Satara, Maharashtra, PARI is a global automation company that has provided over 3,000 automated solutions worldwide. Their services encompass conceptualizing, manufacturing, and implementing advanced factory automation systems, catering to industries such as automotive, aerospace, and heavy engineering.

- Goat Robotics

Based in Coimbatore, Goat Robotics specializes in autonomous mobile robots (AMRs) designed for manufacturing industries. Their robots are equipped with advanced navigation systems and are used for material transport and logistics within industrial settings. Goat Robotics’ solutions aim to enhance operational efficiency and safety in manufacturing environments.

- Peer Robotics

Peer Robotics is a startup focusing on collaborative mobile robots designed to work alongside human operators. Their robots are used in manufacturing and warehousing environments to assist with material handling and repetitive tasks. By emphasizing human-robot collaboration, Peer Robotics aims to create safer and more efficient workplaces.

India’s industrial robot manufacturing sector is characterized by a blend of established companies and innovative startups, each contributing uniquely to the automation landscape. As industries increasingly adopt automation to enhance productivity and competitiveness, these companies are poised to play a crucial role in shaping the future of manufacturing in India.

The post Top 10 Industrial Robot Manufacturers in India appeared first on ELE Times.

Top 10 Drone Camera Manufacturers in India

India’s drone industry has experienced remarkable growth, positioning itself as a global leader in unmanned aerial vehicle (UAV) technology. This surge is driven by the government’s “Make in India” initiative and supportive policies, fostering a robust ecosystem of drone manufacturers. Here, we explore the top 10 drone camera manufacturers in India, highlighting their contributions to this dynamic sector.

- ideaForge Technology

Founded in 2007, ideaForge is a pioneer in India’s UAV industry, renowned for developing high-endurance drones tailored for defense, homeland security, and industrial applications. Their flagship product, the Switch UAV, boasts a flight time of over two hours, equipped with advanced imaging capabilities, making it indispensable for long-range surveillance and mapping. The company’s commitment to innovation has solidified its position as a leader in the Indian drone market.

- Zen Technologies

Established in 1993, Zen Technologies specializes in designing and manufacturing combat training solutions, including UAVs and anti-drone systems. Their drones are integral to military training, offering realistic simulations and live training exercises. The company’s focus on defense applications has made it a trusted partner of the Indian armed forces.

- Paras Defence and Space Technologies

Paras Defence is a prominent player in the defense sector, providing a range of products, including UAVs equipped with sophisticated imaging systems. Their drones are engineered for intelligence, surveillance, and reconnaissance missions, featuring high-resolution cameras and thermal imaging capabilities. The company’s expertise in optics and defense electronics enhances the performance of their UAVs.

- Asteria Aerospace

Based in Bengaluru, Asteria Aerospace focuses on developing drones for security and industrial applications. Their Genesis series offers real-time aerial surveillance with advanced imaging systems, catering to sectors like oil and gas, mining, and agriculture. The company’s emphasis on data analytics and automation sets them apart in the industry.

- Quidich Innovation Labs

Quidich specializes in aerial cinematography and has expanded into industrial inspections and mapping. Their drones are equipped with high-definition cameras, providing unique perspectives for film production and critical data for infrastructure assessments. Their versatility has made them a preferred choice in both entertainment and industrial sectors.

- Garuda Aerospace

Garuda Aerospace offers a diverse range of drones for applications such as agriculture, delivery, and surveillance. Their Kisan Drone is designed for precision agriculture, featuring multispectral imaging to monitor crop health. The company’s focus on affordability and functionality has made drone technology accessible to various industries.

- Dhaksha Unmanned Systems

Specializing in agriculture and surveillance drones, Dhaksha Unmanned Systems provides UAVs equipped with advanced imaging systems for crop monitoring and security applications. Their drones are known for durability and ease of use, benefiting farmers and law enforcement agencies alike.

- RattanIndia Enterprises

RattanIndia has ventured into the drone sector, focusing on logistics and delivery solutions. Their drones are designed to transport goods efficiently, equipped with cameras for navigation and monitoring. The company’s entry into the drone market signifies the expanding applications of UAV technology in India.

- DCM Shriram Industries

DCM Shriram has diversified into drone manufacturing, offering UAVs for industrial inspections and agricultural use. Their drones are equipped with high-resolution cameras and sensors, providing valuable data for maintenance and farming operations. The company’s industrial expertise enhances the reliability of their drone solutions.

- Aeroarc Innovations

Aeroarc Innovations is an emerging player in India’s drone ecosystem, specializing in high-performance UAVs for industrial, defense, and environmental applications. Their drones feature advanced imaging capabilities, including multispectral and LiDAR sensors, making them ideal for precision agriculture, infrastructure monitoring, and disaster response. The company is focused on developing AI-powered drone solutions that enhance automation and real-time data analysis, positioning itself as a key innovator in the Indian drone market.

The advancements made by these companies reflect India’s burgeoning capabilities in drone technology. With applications spanning defense, agriculture, industrial inspections, and entertainment, the integration of advanced imaging systems into UAVs is transforming traditional practices. As regulatory frameworks evolve and technology advances, these manufacturers are poised to play a pivotal role in the global drone ecosystem, showcasing India’s commitment to innovation and self-reliance in this cutting-edge field.

The post Top 10 Drone Camera Manufacturers in India appeared first on ELE Times.

NEPCON JAPAN 2025 Welcomes 85,430 Attendees for a Grand Showcase of Electronics R&D and Innovation

NEPCON JAPAN 2025 concluded its successful run at Tokyo Big Sight. The event attracted a remarkable 85,430* attendees from around the globe, serving as a premier, world-class platform for innovation, networking, and business growth.

(*including concurrent shows)

This year’s edition brought together key industry players and the latest technologies across multiple shows, including the 17th AUTOMOTIVE WORLD, the 11th Wearable Expo, Factory Innovation Week 2024, and the 4th SMART LOGISTICS Expo.

The global reach and influence of NEPCON JAPAN was evident through the participation of industry-leading exhibitors hailing from countries such as Austria, Estonia, Germany, the Czech Republic, Italy, the United States, Malaysia, and many more.

Industry Leaders and Global Participation

The exhibition halls were packed with cutting-edge technologies and breakthrough innovations presented by some of the biggest names in the industry. These companies, among 1,711 exhibitors, showcased state-of-the-art advancements in power devices, AI-driven automation, IoT solutions, next-gen mobility, and smart logistics technology.

Notable exhibitors included:

- NEPCON JAPAN: Yamaha Motor Co., Ltd., Omron Corporation, Siemens EDA Japan, Panasonic Corporation, Denso Corporation

- AUTOMOTIVE WORLD: Bosch Corporation, Continental AG, Denso Corporation, Aisin Seiki Co., Ltd., Toyota Auto Body

- Factory Innovation Week: LG Electronics Japan Inc., Mitsubishi Electric Corporation, Siemens AG, Fanuc Corporation, Kawasaki Heavy Industries, Ltd., Yaskawa Electric Corporation, Toshiba Digital Engineering Corporation

- SMART LOGISTICS Expo: Honeywell International Inc., Daifuku Co., Ltd., Toyota Logistics Solutions, Murata Manufacturing Co., Ltd., Zebra Technologies Corporation

- WEARABLE EXPO: Lenovo Japan LLC, Sony Corporation, Samsung Electronics Co., Ltd., Google LLC, HTC Corporation, NXP Semiconductors N.V.

Event Highlights

One of the major attractions of NEPCON JAPAN 2025 was the expanded Power Device & Module Expo and the Power Device Summit, featuring discussions by leading power device manufacturers. Attendees also had access to a range of free-to-attend conference sessions, where industry leaders from Samsung, Intel, Qualcomm, and other major players shared insights on the future of electronics and R&D.

Build on NEPCON Japan’s Success: Register for the First-Ever NEPCON Osaka

Following the success of NEPCON Japan 2025, the inaugural edition of NEPCON Osaka is set to take place from May 14–16, 2025, further strengthening Japan’s presence in the electronics industry. Expected to welcome 45,000* visitors and 600* exhibitors, NEPCON Osaka will provide a new platform for business expansion and industry growth.

With yet another remarkable edition in the books, NEPCON JAPAN continues to set the standard for electronics innovation, R&D, and manufacturing excellence. Industry professionals, exhibitors, and tech enthusiasts alike can look forward to the next milestone event at NEPCON Osaka 2025.

The post NEPCON JAPAN 2025 Welcomes 85,430 Attendees for a Grand Showcase of Electronics R&D and Innovation appeared first on ELE Times.

Substantial turnout for SPS – Smart Production Solutions Guangzhou 2025

SPS – Smart Production Solutions Guangzhou concluded successfully on 27 February 2025 at Area A of the China Import and Export Fair Complex, attracting strong attendance from across the manufacturing sector. Drawing on the extensive resources of the global SPS network, the event welcomed a wide range of international exhibitors, presenting regional manufacturers with advanced smart manufacturing technologies and solutions from around the world. Through this convergence of global expertise, the platform continues to advance the intelligent transformation of the region’s industries while enabling knowledge exchange and technological transfer across markets.

Mr Louis Leung, Deputy General Manager, Guangzhou Guangya Messe Frankfurt Co Ltd commented: “2025 marks a meaningful step forward for SPS – Smart Production Solutions Guangzhou, following last year’s brand upgrade. The exhibition now integrates more deeply within the global SPS network, delivering world-class intelligent manufacturing resources to the region. We are very pleased with the success of this year’s shows, as evidenced by the increased visitor flow, including buyers and visitors from around the world, and the highly positive feedback we’ve received from participants.”

The three-day exhibition featured leading manufacturing enterprises showcasing breakthrough developments in sensing technology, drive systems, motion control, and other key areas. Major industry players including Autonics, Binder, Bonfiglioli, CODESYS, Controlway, Crouzet, Datalogic, DEGSON, DINKLE, GSK, HIKVISION, HUALONG XUNDA, ifm, INVT, Jaten Robot, Li-Gong, MatriBox, SERVOTRONIX, SICK, SIEMENS, SUPU, TURCK, Wanjie, WATTSAN, and Zhongda Leader presented their advanced technologies, solutions and services.

The exhibition was supported by a diverse program of concurrent events, with over 100 presentations addressing key developments in sustainability, digital transformation, and artificial intelligence in manufacturing. The sessions encouraged substantive dialogue between industry experts and participants, facilitating technical collaboration and knowledge transfer.

Partnerships with the World Manufacturing Foundation (WMF) and the Innovative Industry Fair for E x E Solutions (IIFES) brought an enhanced international dimension to the exhibition through two specialised forums: “The Future of Manufacturing: Outlook 2030” and “Manufacturing Meets the Future in Japan”. At these forums, global manufacturing experts including Prof Dr David Romero, Scientific Vice-chairman of the World Manufacturing Foundation, and Ms Shuran Yamaguchi, General Manager of Global Marketing Communications at IDEC Corporation, offered insights and perspectives on global developments in manufacturing, strengthening the event’s position as a leading international platform for the industry.

Further expanding its industry reach, the event was held concurrently with Guangzhou Industrial Technology and Asiamold Select – Guangzhou. The three exhibitions worked in synergy as a comprehensive platform for procurement and exchange, where participants explored complementary technologies, discovered business opportunities, and engaged in technical dialogue and resource sharing, supporting wider innovation and growth throughout the manufacturing sector.

Exhibitor comments

“SPS – Smart Production Solutions Guangzhou has provided us with an outstanding platform for business networking and enhancing our brand visibility. At this year’s event, we connected with customers from Southeast Asia, India, and Germany, gaining valuable insights into market trends and requirements. Our ten core sensor product lines were well-received by customers from many different industries. The 2025 edition has seen steady growth in visitor numbers and more focused customer interactions, enabling us to expand our customer base and have in-depth discussions about our existing partners’ future requirements and R&D plans.”

Ms Cristing Zhou, General Manager, Guangzhou Heyi Intelligent Technology Co Ltd

“This is our sixth year exhibiting at SPS – Smart Production Solutions Guangzhou, and it remains a key event for reaching quality customers. This year, we’ve had productive meetings with potential customers from India and Vietnam, among others. With its strong line-up of both domestic and international automation companies, and high concentration of industry professionals, the exhibition provides an excellent platform for presenting our technologies and enhancing our brand.”

Mr Xiangfu Li, Sales Manager, Shinier Intelligent Tech Co Ltd

“While this is our first year exhibiting, we were already well aware of this show’s strong reputation within the industry. We saw excellent visitor flow, with many high-quality prospects and meaningful inquiries, including buyers from Pakistan and other parts of Asia. Compared to other exhibitions, this platform stands out for its high standard of execution and full coverage of the industry chain. Overall, we’re very satisfied with the results and plan to exhibit again next year.”

Ms Xiaoting Li, Secretary General Director, Guangdong MingYu Technology Co Ltd

“To stand out in this competitive industry, we’re working on two fronts: improving our operations while building our presence in both domestic and international markets. We saw strong interest in our products at the show, with several customers arranging factory visits on the spot. Beyond domestic buyers, we received inquiries from Saudi Arabia, the Middle East and Singapore. This is only our second time exhibiting, but we’re already seeing its value in supporting our market development objectives.”

Mr Xiangpeng Ning, Factory Manager, Guangzhou Jiawei Intelligent Control Technology Co Ltd

Visitor comments

“As a traditional flour and noodle manufacturer, we came to the show looking for ways to digitalise and automate our operations. After two days of discussions, we’ve made excellent progress, and have found several potential partnership opportunities. The forum sessions were also valuable – the speakers offered great insights about business growth and new technologies, particularly about how to implement AI in our business.”

Mr Ningyuan Wei, Information Specialist, Xingtai Jinshahe Flour Co Ltd

“As specialists in robotics and vending solutions for the hospitality sector, we came seeking new robotics and automation technologies, along with component vendors for solutions we’re developing in India. This has proven to be the most comprehensive exhibition of its type I’ve attended. We’ve made promising contacts, particularly in sensors, robotics, and automation, and the selection of exhibitors from Germany, Korea and China aligns well with our needs. The exhibition has proven well worth our time, and we look forward to returning in the future.”

Mr Sujith Mohandas, Deputy General Manager – Robotics Unit, Urban Harvest (India)

Speaker comments

“During my presentation, the audience was highly engaged, especially when discussing ESG and sustainability, with many taking photographs and notes. The forum provides a valuable platform for advancing manufacturing, and these shared perspectives and experiences will help drive developments in our equipment and manufacturing sectors. Representing Rockwell Automation, a global authority in industrial automation and digital transformation, I was impressed by the organisers’ thoroughness at every stage, from speaker management to content preparation, delivering a well-organised and efficiently executed event.”

Mr Jason Dong, ESG & Sustainability Business Lead, Rockwell Automation (China) Company Limited

SPS – Smart Production Solutions Guangzhou is co-organised by Guangzhou Guangya Messe Frankfurt Co Ltd, China Foreign Trade Guangzhou Exhibition Co Ltd, Guangzhou Overseas Trade Fairs Ltd and Mesago Messe Frankfurt GmbH. Guangdong Association of Automation, Guangzhou Association of Automation and Guangzhou Instrument and Control Society serve as honorary organisers. The fair is also supported by the China Light Industry Machinery Association, the China International Chamber of Commerce Guangzhou Chamber of Commerce, and the Beijing Internet of Things Intelligent Technology Application Association.

The 2026 edition of SPS – Smart Production Solutions Guangzhou will take place from 4 – 6 March 2026. For more details about the fairs, please visit www.spsinchina.com or email sps@china.messefrankfurt.com.

Further events in the international SPS network include:

- SPS Stage Bangkok

6 – 8 March 2025, Bangkok, Thailand

- SPS Italia

13 – 15 May 2025, Parma, Italy

- SPS Stage Kuala Lumpur

14 – 16 May 2025, Kuala Lumpur, Malaysia

- SPS Atlanta

16 – 18 September 2025, Atlanta, United States

- SPS – Smart Production Solutions

25 – 27 November 2025, Nuremberg, Germany

The post Substantial turnout for SPS – Smart Production Solutions Guangzhou 2025 appeared first on ELE Times.

Laser Soldering Definition, Process, Working, Uses & Advantages

Laser soldering is a soldering technique that uses a tightly focused laser beam to melt solder and join components. It is widely used in electronics, automotive, aerospace, and medical device manufacturing, offering greater precision and lower thermal impact than traditional techniques such as wave and reflow soldering.

How Laser Soldering Works

Laser soldering operates by directing a controlled laser beam onto a solder joint, heating it to the required temperature to melt the solder and form a secure electrical or mechanical connection. The key components of a laser soldering system include:

- Laser Source: Typically a fiber laser, diode laser, or Nd:YAG laser, chosen based on the application requirements.

- Beam Delivery System: Optical fibers or galvanometer scanners to direct the laser beam to the precise soldering location.

- Process Monitoring System: Infrared or vision-based sensors to ensure quality and consistency.

- Flux Application System: Applies flux to remove surface oxides and improve solder wetting.

The laser’s intensity, duration, and focus are carefully controlled to avoid overheating and ensure optimal bonding.
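To make the relationship between power, duration, and focus concrete, the short Python sketch below computes the energy delivered to a joint and the average irradiance over the laser spot. It assumes a constant-power pulse and a uniform circular spot, which is a simplification of a real beam profile. The function names and the numeric values are illustrative assumptions, not recommended process settings.

```python
import math


def pulse_energy_j(power_w: float, duration_s: float) -> float:
    """Energy delivered by a constant-power pulse: E = P * t."""
    return power_w * duration_s


def irradiance_w_per_mm2(power_w: float, spot_diameter_mm: float) -> float:
    """Average irradiance over a uniform circular spot: P / (pi * (d/2)^2)."""
    area_mm2 = math.pi * (spot_diameter_mm / 2) ** 2
    return power_w / area_mm2


# Illustrative values only (assumed for this example).
power_w = 20.0
duration_s = 0.5
spot_diameter_mm = 1.0

energy = pulse_energy_j(power_w, duration_s)
irradiance = irradiance_w_per_mm2(power_w, spot_diameter_mm)

print(f"Energy per joint: {energy:.1f} J")
print(f"Average irradiance: {irradiance:.2f} W/mm^2")
```

Because the spot area scales with the square of the diameter, halving the spot diameter at the same power quadruples the average irradiance. This is one reason focus has to be controlled as carefully as power and duration.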

Laser Soldering Process

The laser soldering process follows these key steps:

- Surface Preparation: The surfaces to be soldered are cleaned, and flux is applied to remove oxides and limit further oxidation during heating.

- Positioning & Fixturing: Components are precisely positioned to ensure accurate solder joint formation.

- Laser Heating: The laser is directed at the soldering site with carefully controlled power and duration.

- Solder Melting & Wetting: The solder melts and flows over the joint, creating a reliable electrical and mechanical connection.

- Cooling & Solidification: The solder joint solidifies, forming a strong bond with minimal stress or thermal damage.

- Inspection & Quality Control: Automated systems, such as X-ray or infrared imaging, may be used to verify joint integrity.
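The laser heating step is often described as closed-loop: a temperature reading from the joint is used to adjust laser power. The Python sketch below simulates that idea with a simple proportional controller and a toy first-order thermal model. Everything in it is an assumption made for illustration, including the function name, the gain, the power limit, and the thermal constants. It does not represent any particular machine or vendor interface.

```python
def simulate_heating(target_c, steps, dt_s=0.01, max_power_w=30.0,
                     gain_w_per_c=0.5, ambient_c=25.0,
                     heat_capacity_j_per_c=0.05, loss_w_per_c=0.05):
    """Simulate proportional power control of a joint (toy model).

    Returns a list of (temperature_c, power_w) tuples, one per time step.
    All default parameters are hypothetical values chosen for the example.
    """
    temp_c = ambient_c
    history = []
    for _ in range(steps):
        # Proportional control: more power when further below the target,
        # clamped to the range the (hypothetical) laser can deliver.
        error_c = target_c - temp_c
        power_w = min(max_power_w, max(0.0, gain_w_per_c * error_c))

        # First-order thermal model: laser input minus losses to ambient.
        net_w = power_w - loss_w_per_c * (temp_c - ambient_c)
        temp_c += net_w * dt_s / heat_capacity_j_per_c

        history.append((temp_c, power_w))
    return history


history = simulate_heating(target_c=250.0, steps=300)
final_temp_c, final_power_w = history[-1]
print(f"Final temperature: {final_temp_c:.1f} C at {final_power_w:.1f} W")
```

In this toy model the temperature rises without overshoot but settles somewhat below the target, which is the expected steady-state error of a purely proportional controller. A practical system would typically add integral action or a tuned power profile to close that gap.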

Laser soldering is employed in various high-precision industries, including:

- Electronics Manufacturing: Used in PCB assembly, wire bonding, and micro-soldering for miniaturized circuits.

- Automotive Industry: Employed in sensor connections, battery pack manufacturing, and electronic control modules.

- Medical Devices: Used for assembling compact and delicate electronic components in medical implants and diagnostic equipment.

- Aerospace & Defense: Essential for high-reliability solder joints in avionics and military electronics.

- Telecommunications: Applied in optical fiber splicing and high-frequency circuit board soldering.

Laser soldering offers several advantages over conventional soldering techniques:

- Precision & Control: Allows fine control over temperature, beam positioning, and solder flow.

- Minimal Thermal Impact: Reduces heat stress on delicate components, making it ideal for miniaturized electronics.

- Non-Contact Process: Eliminates mechanical stress and contamination risks.

- Automation Friendly: Easily integrates with robotics and inline inspection systems for high-throughput manufacturing.

- Consistent & Repeatable Results: Ensures uniform solder joints, reducing defects and rework.

- Eco-Friendly: Reduces solder waste and eliminates the need for high-temperature heating elements.

Despite its many benefits, laser soldering also has some limitations:

- High Initial Cost: Requires investment in specialized equipment and skilled operators.

- Material Sensitivity: Some materials may not absorb laser energy efficiently, requiring careful parameter adjustments.

- Limited Joint Sizes: May not be suitable for large-area soldering applications.

- Laser Safety Considerations: Requires protective measures to prevent accidental exposure to high-intensity laser beams.

- Dependency on Precise Alignment: Small misalignments can affect solder quality and reliability.

Laser soldering is a cutting-edge technology that enhances precision, efficiency, and reliability in soldering applications. While it comes with a higher upfront cost and requires careful process control, its advantages in high-precision industries make it a preferred choice for modern manufacturing. As technology evolves, improvements in laser systems and automation will further expand the scope of laser soldering, making it an indispensable tool in next-generation electronics and industrial applications.

The post Laser Soldering Definition, Process, Working, Uses & Advantages appeared first on ELE Times.

Top 10 Smart Lighting Manufacturers in India

India’s smart lighting industry has experienced significant growth in recent years, driven by technological advancements and a growing emphasis on energy efficiency. Smart lighting integrates advanced controls and connectivity, allowing users to customize illumination levels, colors, and schedules, often through mobile apps or voice assistants.

This article highlights ten prominent smart lighting manufacturers in India, showcasing their contributions to this dynamic sector.

1. Philips Lighting India (Signify)

Philips Lighting, now operating as Signify, is a global leader in lighting solutions with a substantial presence in India. The company offers a diverse range of smart lighting products, including the popular Philips Hue series, which allows users to control lighting ambiance and intensity through mobile applications and voice commands. Philips’ commitment to innovation and quality has solidified its reputation in the Indian smart lighting market.

2. Wipro Lighting

Wipro Lighting, a division of Wipro Enterprises, has been at the forefront of providing innovative lighting solutions in India. The company offers smart LED lighting products that are compatible with voice assistants like Amazon Alexa and Google Assistant. Wipro’s smart bulbs and fixtures enable users to adjust brightness and color temperatures, enhancing both residential and commercial spaces with energy-efficient lighting solutions.

3. Havells India